AI, deep learning and machine learning are often mistaken for each other. Take a look at how they differ in this interesting article.

Once dismissed as science fiction and later a staple of wildly popular movie series, especially the one starring Arnold Schwarzenegger, artificial intelligence has been a part of our lives for longer than we realise. The idea of machines that can think is widely attributed to the British mathematician and WWII code-breaker, Alan Turing. In fact, the Turing Test, often used for benchmarking the ‘intelligence’ in artificial intelligence, is a process in which an AI has to convince a human, through a conversation, that it is itself human. A number of other tests have been developed to gauge how evolved AI is, including Goertzel’s Coffee Test and Nilsson’s Employment Test, which compare a robot’s performance in different human tasks.

As a field, AI has seen more ups and downs than most over the past 50 years. On the one hand, it is hailed as the frontier of the next technological revolution, while on the other, it is viewed with fear, since it is believed to have the potential to surpass human intelligence and hence achieve world domination! However, most scientists agree that we are only in the nascent stages of developing AI that is capable of such feats, and research continues unfettered by these fears.

Applications of AI

Back in the early days, the goal of researchers was to construct complex machines capable of exhibiting some semblance of human intelligence, a concept we now term ‘general intelligence’. While it has been a popular concept in movies and in science fiction, we are a long way from developing it for real.

Specialised applications of AI, however, allow us to use image classification and facial recognition as well as smart personal assistants such as Siri and Alexa. These usually leverage multiple algorithms to provide this functionality to the end user, but may broadly be classified as AI.

Machine learning (ML)

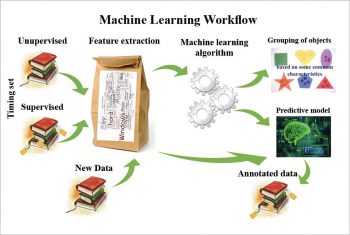

Machine learning is a subset of practices commonly aggregated under AI techniques. The term was originally used to describe the process of leveraging algorithms to parse data, build models that could learn from it, and ultimately make predictions using these learnt parameters. It encompassed various strategies including decision trees, clustering, regression, and Bayesian approaches that didn’t quite achieve the ultimate goal of ‘general intelligence’.
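The cycle described above (parse data, learn parameters, then predict) can be sketched with one of the strategies mentioned, regression. This is a minimal illustration rather than a production implementation: the function names `fit_line` and `predict` and the hours-versus-scores data are made up for the example, and the fit uses ordinary least squares on a single input variable.

```python
def fit_line(xs, ys):
    """Learn a slope and intercept from data by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both unnormalised)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(params, x):
    """Apply the learnt parameters to a new observation."""
    slope, intercept = params
    return slope * x + intercept


# Hypothetical training data: hours studied vs. exam score
hours = [1.0, 2.0, 3.0, 4.0, 5.0]
scores = [52.0, 54.0, 56.0, 58.0, 60.0]

params = fit_line(hours, scores)   # the 'learning' step
prediction = predict(params, 6.0)  # the 'prediction' step
print(params, prediction)
```

The model here is just two numbers, but the pattern of fitting parameters on past observations and reusing them on unseen ones is the same one that larger ML systems follow.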

While it began as a small part of AI, burgeoning interest has propelled ML to the forefront of research and it is now used across domains. Growing hardware support, as well as improvements in algorithms, especially for pattern recognition, have made ML accessible to a much larger audience, leading to wider adoption.

Applications of ML

Initially, the primary applications of ML were limited to the field of computer vision and pattern recognition. This was prior to the stellar success and accuracy it enjoys today. Back then, ML seemed a pretty tame field, with its scope limited to education and academics.

Today we use ML without even being aware of how dependent we are on it for our daily activities. From Google’s search team supplementing its long-standing ranking signals, such as PageRank, with an ML system named RankBrain, to Facebook automatically suggesting friends to tag in a picture, we are surrounded by use cases for ML algorithms.

Deep learning (DL)

A key ML approach that remained dormant for a few decades was artificial neural networks. These eventually gained wide acceptance when improved processing capabilities became available. A neural network is loosely modelled on the activity of the brain’s neurons: artificial neurons are arranged in layers, and data propagates from one layer to the next, enabling machines to learn more about a given set of observations and make accurate predictions.
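To make the layered propagation concrete, here is a minimal sketch of a forward pass through a tiny network. The weights are hand-picked, hypothetical values chosen only for illustration; in practice they would be learned from data (typically by backpropagation), which this sketch leaves out. The sigmoid activation and the function names are also assumptions of the example.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of its inputs,
    adds a bias, and passes the result through the activation."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def network_forward(inputs, layers):
    """Propagate the inputs through every layer in turn."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Hypothetical network: 2 inputs -> 2 hidden neurons -> 1 output neuron
layers = [
    ([[0.5, -0.5], [0.25, 0.75]], [0.0, 0.0]),  # hidden layer
    ([[1.0, -1.0]], [0.0]),                     # output layer
]

output = network_forward([0.0, 0.0], layers)
print(output)
```

Each layer's output becomes the next layer's input, which is all that 'propagation' means here; deep networks simply stack many more such layers.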

These neural networks, which had until recently been ignored save for a few researchers led by Geoffrey Hinton, have today demonstrated an exceptional potential for handling large volumes of data and enhancing the practical applications of machine learning. The accuracy of these models allows reliable services to be offered to end users, since error rates, including false positives, have dropped sharply on many tasks.

Applications of DL

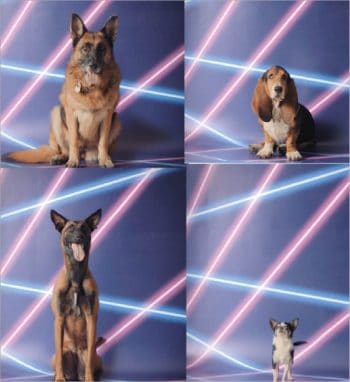

DL has large-scale business applications because of its capacity to learn from millions of observations. Although computationally intensive, it is often the preferred alternative because of its accuracy. Its applications include a number of image recognition tasks that relied on conventional computer vision practices until the emergence of DL. Autonomous vehicles and recommendation systems (such as those used by Netflix and Amazon) are among the most popular applications of DL algorithms.

Comparing AI, ML and DL

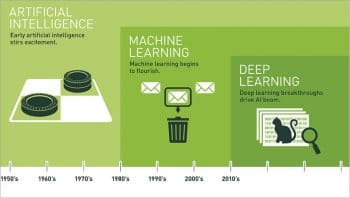

Comparing the techniques: The term AI dates back to the Dartmouth Conference (1956), the proposal for which rested on the following conjecture: “Every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.” It is a broad definition that covers use cases that range from a game-playing bot to the voice recognition system within Siri, as well as converting text to speech and vice versa. AI is conventionally thought to have three categories:

- Narrow AI specialised for a specific task

- Artificial general intelligence (AGI) that can simulate human thinking

- Super-intelligent AI, which implies a point where AI surpasses human intelligence entirely

ML is a subset of AI that seems to represent its most successful business use cases. It entails learning from data in order to make informed decisions at a later point, and enables AI to be applied to a broad spectrum of problems. ML allows systems to make their own decisions following a learning process that trains the system towards a goal. A number of tools have emerged that allow a wider audience access to the power of ML algorithms, including Python libraries such as scikit-learn, frameworks such as MLlib for Apache Spark, software such as RapidMiner, and so on.

A further sub-division of AI, and itself a subset of ML, is DL, which harnesses the power of deep neural networks in order to train models on large data sets, and make accurate predictions in the fields of image, face and voice recognition, among others. The favourable trade-off between training cost and prediction accuracy makes it an attractive option for many businesses to switch their core practices to DL or integrate these algorithms into their systems.

Classifying applications: The boundaries that distinguish the applications of AI, ML and DL are fuzzy. However, since each has a reasonably distinct scope, it is usually possible to identify which subset a specific application belongs to. We generally classify personal assistants and other forms of bots that aid with specialised tasks, such as playing games, as AI due to their broader nature. These bundle search capabilities, filtering and short-listing, voice recognition and text-to-speech conversion into a single agent.

Practices that fall into a narrower category such as those involving Big Data analytics and data mining, pattern recognition and the like, are placed under the spectrum of ML algorithms. Typically, these involve systems that ‘learn’ from data and apply that learning to a specialised task.
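As a rough illustration of a system that ‘learns’ from data and applies that learning to a specialised task, the sketch below implements a nearest-centroid classifier, one of the simplest pattern recognition techniques. The technique, the function names and the tiny labelled data set are all choices made for this example and are not taken from any particular library.

```python
def train_centroids(samples):
    """Learn one centroid (mean feature vector) per label.

    samples is a list of (features, label) pairs.
    """
    sums, counts = {}, {}
    for features, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {
        label: [s / counts[label] for s in total]
        for label, total in sums.items()
    }


def classify(centroids, features):
    """Assign the label whose centroid is closest to the new observation."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(centroids, key=lambda label: squared_distance(centroids[label], features))


# Hypothetical labelled observations with two features each
training = [
    ([1.0, 1.0], "small"), ([2.0, 1.0], "small"),
    ([8.0, 9.0], "large"), ([9.0, 8.0], "large"),
]

centroids = train_centroids(training)   # learning from data
label = classify(centroids, [7.5, 8.0])  # applying it to a new case
print(centroids, label)
```

Nothing about ‘small’ or ‘large’ is hard-coded in the classifier; the decision rule comes entirely from the training observations, which is what separates ML from hand-written rules.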

Finally, applications belonging to a niche category, in which a large corpus of text or image-based data is used to train a model on graphics processing units (GPUs), involve the use of DL algorithms. These often include specialised image and video recognition tasks applied to a broader purpose, such as autonomous driving and navigation.
