Artificial intelligence and machine learning are being increasingly used by businesses to enhance productivity. These technologies are also being deployed in the cloud with the help of open source tools and platforms. An important platform in this domain is Hugging Face, which has turned into an indispensable tool for modern AI-driven organisations due to its flexibility and scalability.

Artificial intelligence (AI) and machine learning (ML) have reshaped many industries by enabling machines to perform tasks that typically require human intelligence and to learn from data without being explicitly programmed. AI spans subfields such as natural language processing, computer vision, and robotics. Machine learning, a subset of AI, focuses on algorithms and statistical models that allow computers to identify patterns and make decisions with minimal human intervention. This shift towards automation and intelligent systems is driving innovation across sectors such as healthcare, finance, and manufacturing.

The rapid advances in AI and ML have spurred growth across the technology sector and a steep rise in market size. As organisations recognise the potential of these technologies to optimise processes, enhance customer experiences, and generate actionable insights from vast datasets, investment in AI and ML is soaring. Estimates of the pace vary by analyst and methodology: some market analyses project a compound annual growth rate (CAGR) of over 40%, while the Precedence Research forecast cited below puts it at 19.1%. This surge is attributed to the increasing adoption of AI-driven solutions, such as predictive analytics, autonomous vehicles, and personalised recommendations, which are becoming integral to business strategies worldwide.

The market dynamics of AI and ML are also shaped by the growing demand for cloud-based services and the integration of AI into Internet of Things (IoT) devices. These trends are facilitating the widespread deployment of AI applications, further expanding the market size. Additionally, as AI and ML technologies continue to evolve, they are expected to drive the emergence of new business models and industries, creating a ripple effect that will influence the global economy at large. Consequently, the AI and ML market is not just expanding in terms of revenue, but also in its impact on the broader technological landscape and societal advancement.

According to Precedence Research (precedenceresearch.com/artificial-intelligence-market), the global artificial intelligence (AI) market was valued at approximately US$ 638.23 billion in 2024, the base year of its forecast, and is projected to reach around US$ 3,680.47 billion by 2034. That corresponds to a compound annual growth rate (CAGR) of 19.1% from 2024 to 2034. North America continues to be a leading hub for AI innovation, fuelled by significant investments in research and development, a thriving tech ecosystem, and widespread AI integration across business operations.
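The compound growth behind these figures can be checked with a few lines of arithmetic. The sketch below assumes that the US$ 638.23 billion figure is the 2024 base value and that growth compounds once a year for ten years; the function names are illustrative.

```python
def project_value(base: float, cagr: float, years: int) -> float:
    """Compound a base value forward at a fixed annual growth rate."""
    return base * (1.0 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Annual growth rate that takes `start` to `end` over `years` years."""
    return (end / start) ** (1.0 / years) - 1.0


# Figures quoted above, in US$ billion (2024 as base year is an assumption).
base_2024 = 638.23
target_2034 = 3680.47

projected = project_value(base_2024, 0.191, 10)
rate = implied_cagr(base_2024, target_2034, 10)

print(f"Projected 2034 value at 19.1% CAGR: {projected:,.0f}")
print(f"CAGR implied by the two endpoints: {rate:.1%}")
```

Under these assumptions the projected value lands within about half a percent of the quoted 2034 figure, so the three numbers are consistent with one another to within rounding.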

The integration of AI into sectors such as healthcare and finance is particularly noteworthy. In healthcare, AI and ML are being leveraged for tasks like early disease detection, personalised medicine, and drug discovery, significantly improving clinical outcomes and operational efficiency. The finance sector is also seeing extensive use of AI in areas like risk management, compliance, and financial analytics, which is transforming how financial services are delivered.

In terms of technological advancements, deep learning and natural language processing (NLP) are leading the charge. Deep learning, in particular, has been instrumental in automating complex data-driven tasks such as speech and image recognition, while NLP continues to revolutionise customer service through chatbots and virtual assistants. The adoption of AI hardware, like GPUs and specialised AI chips, is also accelerating, enabling more powerful and efficient AI applications.

The AI market is not just growing in size but also in scope, with emerging applications in areas such as autonomous vehicles, AI robotics, and generative AI, which is increasingly being used to create content like text, images, and videos. Companies like NVIDIA, IBM, and Microsoft are at the forefront of this growth.

Tools and frameworks for AI deployment

Deploying AI models into production environments involves a series of sophisticated processes, necessitating the use of specialised tools and frameworks. These tools ensure not only the seamless transition from development to deployment but also the ongoing management, scalability, and monitoring of AI systems.

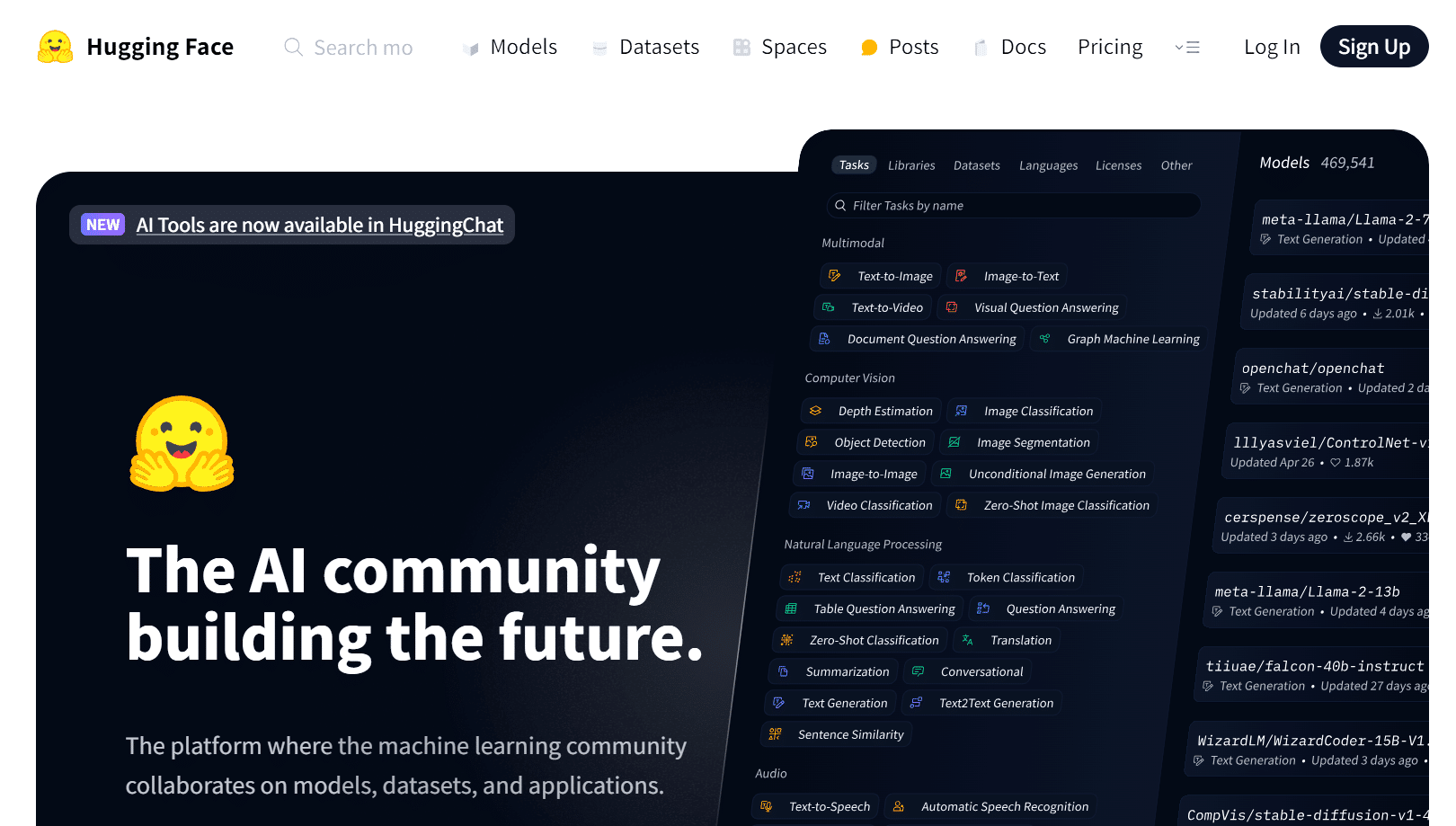

Hugging Face

URL: huggingface.co

Hugging Face has emerged as a pivotal platform for the deployment of AI models on the cloud, providing an expansive ecosystem that supports the entire lifecycle of machine learning models. Known for libraries such as Transformers, Hugging Face has made it far easier for developers and data scientists to deploy sophisticated AI models. One of its most significant advantages is the ability to integrate with various cloud platforms, offering the flexibility and scalability that enterprise-level applications need.

Docker and Kubernetes

URLs: docker.com and kubernetes.io

One of the cornerstones of modern AI deployment is containerisation. Docker allows developers to package an AI model along with its dependencies into a portable container, ensuring consistency across various environments. Kubernetes, on the other hand, orchestrates the deployment, scaling, and management of these containers in a clustered environment. Together, Docker and Kubernetes form a robust foundation for scalable and resilient AI deployments.

TensorFlow Serving and TorchServe

URLs: tensorflow.org/tfx/guide/serving and github.com/pytorch/serve

TensorFlow Serving and TorchServe are specialised serving frameworks designed for deploying models built with TensorFlow and PyTorch, respectively. These tools provide a flexible and high-performance serving system for machine learning models, enabling developers to deploy models at scale while supporting features such as versioning, monitoring, and multi-model serving.
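As an illustration of what calling a served model looks like, here is a minimal sketch of a client for TensorFlow Serving's REST predict endpoint, using only the Python standard library. The host, port, model name, and version are hypothetical placeholders, and the request is built but not sent unless `predict` is called against a running server.

```python
import json
import urllib.request


def build_predict_request(host, model_name, instances, version=None):
    """Build (but do not send) a POST request for the REST predict endpoint."""
    path = f"/v1/models/{model_name}"
    if version is not None:
        # Pin a specific model version instead of the server's default.
        path += f"/versions/{version}"
    url = f"{host}{path}:predict"
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def predict(request, timeout=10.0):
    """Send the request to a running server and return the decoded JSON reply."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Hypothetical host and model name, for illustration only.
req = build_predict_request(
    "http://localhost:8501", "my_model", [[1.0, 2.0, 3.0]], version=2
)
print(req.full_url)
```

The optional version segment in the URL is how a client selects one of several versions being served side by side. TorchServe uses its own inference endpoints, so the path would differ there.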

MLflow

URL: mlflow.org

MLflow is an open source platform that handles the complete machine learning lifecycle, including experimentation, reproducibility, and deployment. With MLflow, models can be deployed through various means, such as REST API endpoints or integration with cloud services. It also supports tracking experiments and versioning models, making it easier to manage and deploy models in a collaborative environment.

Apache Airflow

URL: airflow.apache.org

Apache Airflow is a powerful workflow management tool that can automate complex ML pipelines. It is particularly useful in orchestrating data processing, model training, and deployment workflows. By using directed acyclic graphs (DAGs), Airflow allows for the scheduling and monitoring of workflows, ensuring that every step in the AI pipeline is executed in the correct order and on time.
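The ordering guarantee that a DAG provides can be sketched without Airflow itself. The example below is not Airflow's API; it uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later) to show how declaring upstream dependencies yields a valid execution order. The task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Each key is a task; its set holds the tasks that must finish first.
pipeline = {
    "extract_data": set(),
    "clean_data": {"extract_data"},
    "train_model": {"clean_data"},
    "evaluate_model": {"train_model"},
    "deploy_model": {"evaluate_model"},
    "publish_report": {"evaluate_model"},
}


def run_pipeline(dag, run_task):
    """Run every task after all of its upstream tasks; return the order used."""
    order = []
    # static_order() raises graphlib.CycleError if the graph contains a cycle.
    for task in TopologicalSorter(dag).static_order():
        run_task(task)
        order.append(task)
    return order


execution_order = run_pipeline(pipeline, lambda name: print(f"running {name}"))
```

A scheduler such as Airflow adds timing, retries, and monitoring on top of this idea, but the core constraint is the same: no task starts until everything it depends on has completed.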

Seldon Core

URL: seldon.io

Seldon Core is an open source platform designed for deploying, scaling, and managing thousands of machine learning models on Kubernetes. It provides a framework for deploying machine learning models as microservices, with built-in support for metrics, logging, and monitoring. Seldon Core integrates well with existing CI/CD pipelines, making it a valuable tool for organisations looking to scale their AI operations.

ONNX and ONNX Runtime

URL: onnx.ai

The Open Neural Network Exchange (ONNX) is an open format that allows models to be trained in one framework and deployed on another. ONNX Runtime is a high-performance inference engine for deploying ONNX models. It is highly optimised for performance across a wide range of hardware, making it an essential tool for deploying AI models in production environments.

Kubeflow

URL: kubeflow.org

Kubeflow is a Kubernetes-native platform tailored for machine learning workflows. It simplifies the process of deploying scalable machine learning models and managing end-to-end ML pipelines. Kubeflow supports multiple frameworks, including TensorFlow, PyTorch, and scikit-learn, and integrates well with CI/CD tools, making it an excellent choice for organisations embracing MLOps practices.

Cloud deployment of AI-integrated applications using Hugging Face

The deployment process on Hugging Face is remarkably streamlined, thanks to its user-friendly interface and extensive documentation. By leveraging the Model Hub, users can easily push and pull models, enabling quick deployment across different environments. This capability is particularly beneficial for teams that require rapid iterations and testing, as it reduces the friction typically associated with model deployment. Moreover, Hugging Face’s Inference API simplifies the deployment process by allowing models to be served directly from the cloud without the need for extensive infrastructure setup.
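To give a sense of what serving a model from the cloud involves on the client side, the following is a minimal sketch of a request to a hosted inference endpoint, written with the Python standard library only. The endpoint pattern in `API_BASE` is an assumption based on the hosted Inference API and should be checked against the current Hugging Face documentation; the model ID is only an example and the token is a placeholder.

```python
import json
import urllib.request

# Assumption: URL pattern of the hosted Inference API; verify before use.
API_BASE = "https://api-inference.huggingface.co/models"


def build_inference_request(model_id, inputs, token):
    """Build (but do not send) an authenticated inference request."""
    body = json.dumps({"inputs": inputs}).encode("utf-8")
    return urllib.request.Request(
        f"{API_BASE}/{model_id}",
        data=body,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def query(request, timeout=30.0):
    """Send the request and return the decoded JSON response."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


request = build_inference_request(
    "distilbert-base-uncased-finetuned-sst-2-english",  # example model ID
    "Deploying this model was straightforward.",
    token="hf_your_token_here",  # placeholder, not a real token
)
print(request.full_url)
# With a valid token and a reachable endpoint: print(query(request))
```

No server, container, or GPU is provisioned by the caller here, which is the sense in which the infrastructure setup is handled by the platform.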

Hugging Face also supports deployment on various cloud platforms, including AWS, Google Cloud, and Azure, making it a versatile choice for organisations with diverse cloud strategies. The integration with these platforms is enhanced by Hugging Face’s robust API and SDKs, which provide the necessary tools to deploy, monitor, and scale AI models effectively. This cross-cloud compatibility ensures that organisations can maintain consistency in their deployment strategies while taking advantage of the unique benefits offered by each cloud provider.

The commitment of Hugging Face to open source principles ensures that a wide range of pre-trained models is available for deployment, significantly reducing the time required for model training and fine-tuning. This vast repository of models, combined with the platform’s advanced deployment features, positions Hugging Face as an indispensable tool for AI practitioners looking to deploy models efficiently and at scale. Whether for natural language processing, computer vision, or other AI applications, Hugging Face provides a robust, cloud-ready solution that is both powerful and easy to use.

The advantages of Hugging Face

Versatility across cloud platforms: Hugging Face stands out for its extensive versatility, offering seamless integration with major cloud service providers like AWS, Google Cloud Platform (GCP), and Microsoft Azure. This cross-platform compatibility allows organisations to choose a cloud environment that best suits their existing infrastructure and operational needs. The platform’s API and SDKs facilitate smooth interactions between Hugging Face models and the cloud, enabling developers to deploy models without needing to worry about underlying infrastructure complexities. This flexibility is particularly valuable in hybrid or multi-cloud strategies, where businesses can deploy models across different clouds while maintaining a unified management approach.

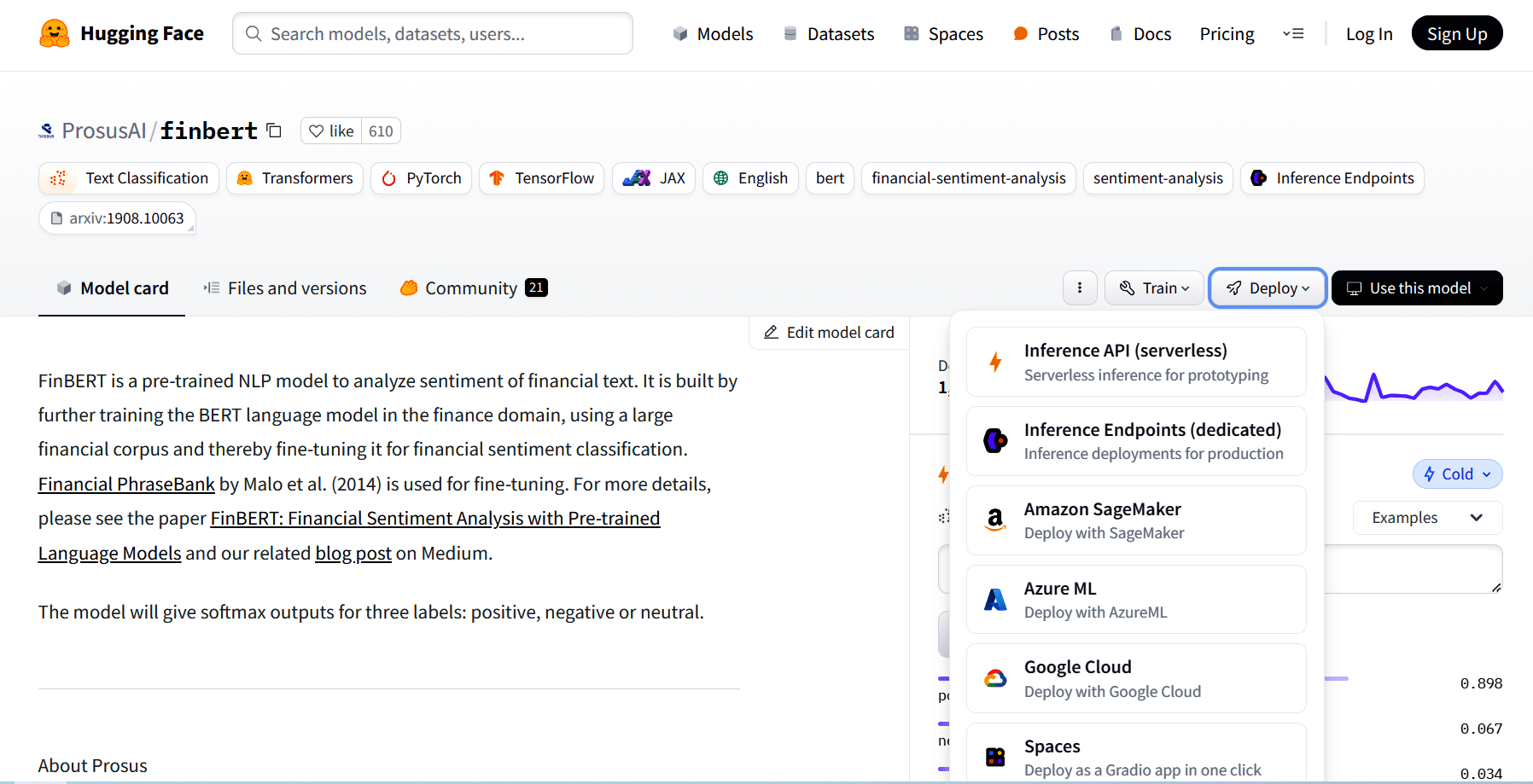

Pre-trained models and transfer learning: Hugging Face’s Model Hub hosts a vast array of pre-trained models, particularly in natural language processing (NLP) and extending to computer vision, speech processing, and more. These models can be fine-tuned on specific datasets, reducing the time and computational resources required to develop robust AI solutions from scratch. The availability of these pre-trained models is crucial for accelerating deployment timelines, as it allows organisations to leverage state-of-the-art models for their applications with minimal customisation. This is especially important in industries where time-to-market is a critical factor.

Simplified deployment processes: Hugging Face’s deployment process is designed to be intuitive and user-friendly, catering to both AI experts and those less familiar with the intricacies of machine learning model deployment. The Inference API, for example, abstracts much of the complexity involved in serving models on the cloud, allowing developers to deploy models with just a few lines of code. This ease of use lowers the barrier to entry for deploying AI models, making it accessible to a broader audience, including startups and smaller enterprises that may lack dedicated DevOps resources.

Scalability and performance optimisation: In cloud deployments, scalability is a critical consideration. Hugging Face addresses this through its support for large-scale deployments, enabling models to handle varying loads efficiently. The platform provides tools for optimising performance, such as model quantisation, which stores weights at lower numerical precision, and distillation, which trains a smaller model to reproduce the behaviour of a larger one. Both reduce computational overhead without significantly compromising accuracy. These features are essential for deploying models in production environments, where latency and resource utilisation are key performance metrics.
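The idea behind quantisation can be shown in miniature. The sketch below is not Hugging Face's implementation; it is a generic symmetric 8-bit scheme applied to a handful of made-up weights, illustrating how floats are mapped to small integers plus a single scale factor and what error the round trip introduces.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantisation: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.42, -1.27, 0.05, 0.89, -0.33]  # made-up example values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(q)
print(f"scale={scale:.4f}, max round-trip error={max_error:.4f}")
```

Each weight now fits in one byte instead of four, and the round-trip error is bounded by half the scale factor. Production toolchains use more elaborate schemes, but the trade-off between size and precision is the same.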

Monitoring and maintenance: Post-deployment, the ability to monitor and maintain models is crucial for ensuring long-term performance and reliability. Hugging Face provides integrations with cloud monitoring tools that help track model performance, detect drift, and manage updates. This capability is important for organisations that deploy AI models in dynamic environments where data distributions can change over time, requiring continuous monitoring and adjustment of models.
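One common way to quantify a change in data distribution is the population stability index (PSI), which compares how a feature is spread across bins in a reference sample and in live traffic. The sketch below is a generic illustration rather than a Hugging Face feature; the bin count, the floor for empty bins, and the sample data are all assumptions.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a reference sample and a live sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[max(0, min(bins - 1, idx))] += 1  # clamp out-of-range values
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    p_ref = proportions(expected)
    p_live = proportions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(p_ref, p_live))


reference = [i / 100 for i in range(100)]      # feature values seen at training time
shifted = [0.5 + i / 200 for i in range(100)]  # live values concentrated in the upper half

print(f"PSI, no drift: {population_stability_index(reference, reference):.3f}")
print(f"PSI, shifted:  {population_stability_index(reference, shifted):.3f}")
```

A frequently quoted rule of thumb treats a PSI above roughly 0.25 as a significant shift, though the right threshold depends on the feature and the application. A monitoring job could compute this per feature on a schedule and raise an alert when the value crosses the chosen limit.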

Security and compliance: With the growing emphasis on data privacy and security, especially in regulated industries like finance and healthcare, Hugging Face’s ability to integrate with cloud platforms’ native security features is a significant advantage. Cloud providers offer robust security measures, including encryption, identity management, and compliance certifications, which can be leveraged when deploying Hugging Face models. This ensures that deployments meet stringent regulatory requirements while protecting sensitive data.

Community and ecosystem: Hugging Face has fostered a vibrant community of developers, researchers, and AI enthusiasts. This community-driven ecosystem is a valuable resource for users looking to deploy models on the cloud, as it provides access to a wealth of shared knowledge, best practices, and open source tools. The community also contributes to the continuous improvement of the platform, ensuring that it remains at the cutting edge of AI and cloud deployment technologies.

The scope of Hugging Face in the cloud deployment landscape is expansive, covering everything from model preparation and deployment to monitoring and scaling. Its versatility across cloud platforms, extensive library of pre-trained models, and simplified deployment processes make it a go-to solution for organisations aiming to deploy AI models quickly and efficiently. Additionally, its emphasis on scalability, performance, security, and community engagement positions it as a leading platform in the ever-evolving AI and cloud computing space.