Kubernetes is more than just a tool; it is a robust platform that streamlines the deployment, scaling, and operation of applications across various environments.

Kubernetes, often referred to as ‘K8s’ or ‘kube’, is an open source container orchestration platform. Its primary role is to automate software scaling, management, and deployment.

The name Kubernetes comes from the Greek word for helmsman or pilot. The abbreviation K8s comes from counting the eight letters between the ‘K’ and the ‘s’. Google open sourced the Kubernetes project in 2014, and it was donated to the Cloud Native Computing Foundation (CNCF) in 2015. Kubernetes combines over 15 years of Google’s experience running production workloads at scale with best-of-breed ideas and practices from the community.

What is Kubernetes?

Kubernetes automates the operational tasks of container management. It includes built-in commands for deploying applications, scaling them up and down to fit changing needs, and monitoring them, all of which makes applications easier to manage.

Kubernetes architecture and operations

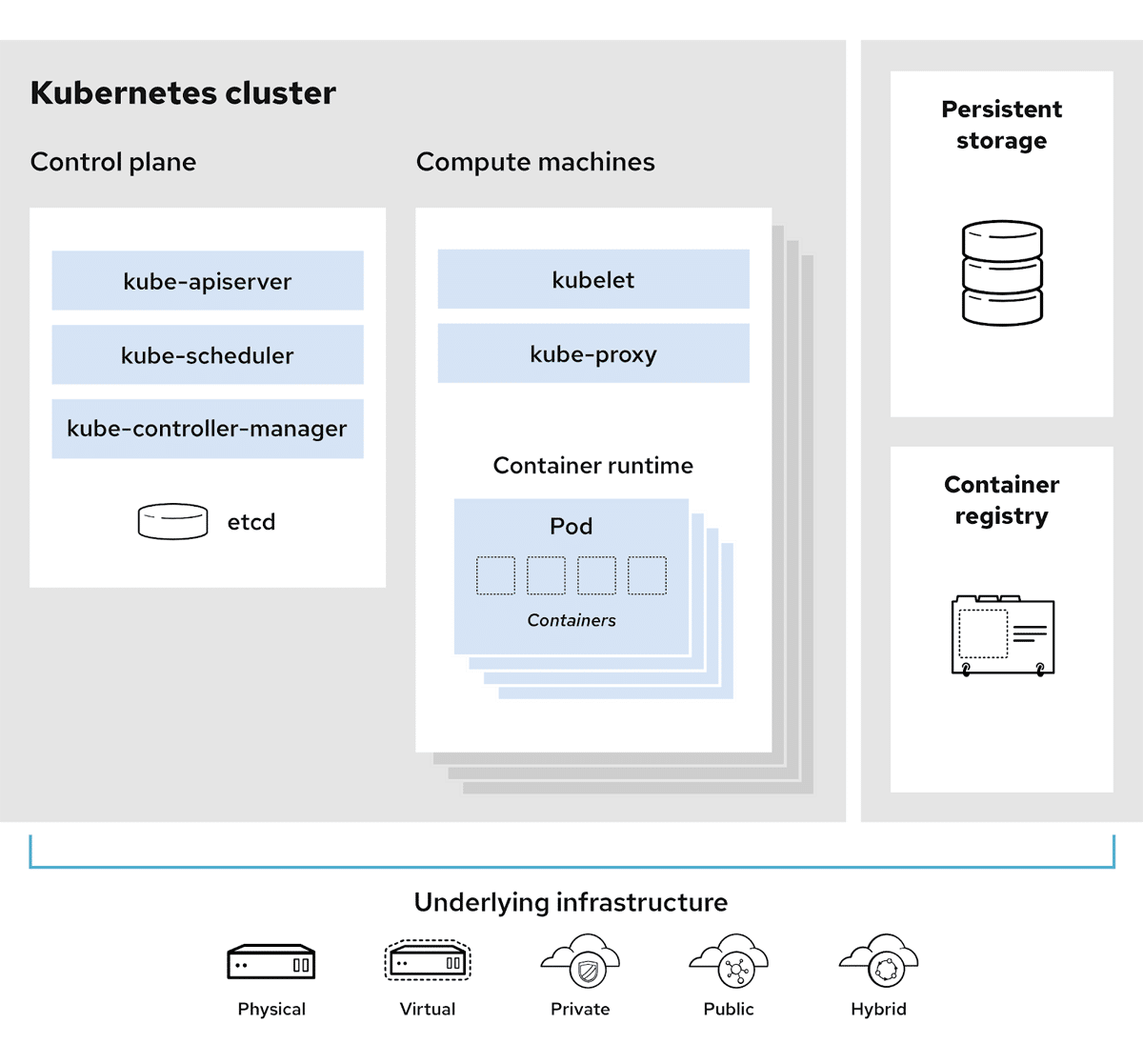

Containers package applications in a portable, easily deployable form. Kubernetes, designed specifically for running containerised applications, has a distinctive architecture. A Kubernetes cluster has at least one control plane and at least one worker node, which is typically a physical or virtual server. The control plane has two responsibilities: exposing the Kubernetes API through the API server and managing the nodes that make up the cluster. It makes cluster-wide decisions, such as scheduling, and detects and responds to cluster events.

At the core of Kubernetes is the concept of a pod, which represents the smallest execution unit for an application. Pods, comprising one or more containers, are executed on worker nodes.
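To make the pod concept concrete, the sketch below builds a minimal single-container Pod manifest as a Python dictionary and serialises it to JSON, a format that `kubectl apply -f` accepts alongside YAML. The helper name `build_pod_manifest`, the pod name `web`, the label, and the `nginx:1.25` image are illustrative assumptions rather than values from this article.

```python
import json


def build_pod_manifest(name: str, image: str, port: int) -> dict:
    """Return a minimal single-container Pod manifest as a plain dict."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "ports": [{"containerPort": port}],
                }
            ]
        },
    }


if __name__ == "__main__":
    # Hypothetical example values; replace them with your own name and image.
    manifest = build_pod_manifest("web", "nginx:1.25", 80)
    print(json.dumps(manifest, indent=2))
```

Saving the printed JSON to a file and applying it to a cluster would ask the control plane to run that one container inside a pod on some worker node.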

Key components of the Kubernetes control plane and nodes

The control plane of Kubernetes comprises four essential components responsible for managing communications, node administration, and maintaining the cluster’s state.

- Kube-apiserver: As its name implies, kube-apiserver exposes the Kubernetes API and acts as the front end of the control plane.

- etcd: This is a consistent, highly available key-value store that holds all data about the Kubernetes cluster.

- Kube-scheduler: This component watches for newly created pods that have no assigned node and selects a suitable node for each one, based on considerations such as resource requirements, policies, and ‘affinity’ specifications.

- Kube-controller-manager: This component runs the controller processes. Each controller is logically separate, but they are all compiled into a single binary known as kube-controller-manager.
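The scheduling step described above can be pictured as a filter followed by a score. The sketch below is a deliberately simplified model and not the actual kube-scheduler algorithm: the `Node` and `Pod` classes, their field names, and the "most free CPU wins" scoring rule are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


@dataclass
class Pod:
    name: str
    cpu_millicores: int
    memory_mib: int


def pick_node(pod: Pod, nodes: list[Node]) -> Optional[Node]:
    """Filter out nodes that cannot fit the pod, then score the rest."""
    feasible = [
        n for n in nodes
        if n.free_cpu_millicores >= pod.cpu_millicores
        and n.free_memory_mib >= pod.memory_mib
    ]
    if not feasible:
        return None  # In this toy model the pod simply stays unscheduled.
    # Hypothetical scoring rule: the node with the most free CPU wins.
    return max(feasible, key=lambda n: n.free_cpu_millicores)


if __name__ == "__main__":
    nodes = [
        Node("node-a", free_cpu_millicores=500, free_memory_mib=1024),
        Node("node-b", free_cpu_millicores=2000, free_memory_mib=4096),
    ]
    chosen = pick_node(Pod("api", cpu_millicores=750, memory_mib=512), nodes)
    print(chosen.name if chosen else "unschedulable")
```

The real scheduler weighs many more signals, including the policies and affinity rules mentioned above, but the overall shape of filtering first and scoring second is a reasonable mental model.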

A Kubernetes node is equipped with three major components.

- Kubelet: This agent runs on each node and ensures that the containers described in a Kubernetes pod are running and healthy.

- Kube-proxy: This network proxy, running on each node in the cluster, maintains the network rules that allow communication with pods.

- Container runtime: This is the software responsible for running containers. Kubernetes supports any runtime that complies with the Kubernetes CRI (container runtime interface).

Kubernetes is used to create applications that are easy to manage and deploy anywhere. When available as a managed service, Kubernetes offers a range of solutions to meet your needs. Here are some major use cases of Kubernetes.

- Increasing development velocity: Kubernetes helps to build cloud-native microservices-based apps. It also supports containerising existing apps, becoming the foundation of application modernisation and letting you develop apps faster.

- Deploying applications anywhere: Kubernetes is built to be used anywhere, allowing you to run your applications across on-site deployments, public clouds, and hybrid deployments. So you can run your applications where you need them.

- Running efficient services: Kubernetes can automatically adjust the cluster size required to run a service. It enables you to automatically scale your applications up and down, based on the demand, and run them efficiently.

Why Kubernetes?

In a production scenario, continuous availability and efficient resource utilisation are critical. Kubernetes addresses these needs by automating the deployment, scaling, and management of containerised applications.

Let’s explore some key aspects that make Kubernetes indispensable.

- Service discovery and load balancing: Kubernetes can expose a container using a DNS name or its own IP address. If traffic to a container is high, Kubernetes can load balance and distribute the network traffic so that the deployment stays stable.

- Storage orchestration: The platform allows for the automatic mounting of storage devices and provides a range of flexible options, including integration with public cloud providers and local storage.

- Automated rollouts and rollbacks: You describe the desired state for your deployed containers, and Kubernetes changes the actual state to match it at a controlled rate. For example, it can create new containers for a deployment, remove the existing ones, and move their resources over to the new containers.

- Automatic bin packing: Kubernetes optimises resource utilisation by automatically distributing containers to available nodes based on information about each container’s CPU and memory requirements.

- Self-healing capabilities: Based on user-defined health checks, Kubernetes continuously monitors containers and restarts, replaces, or removes those that fail or stop responding. It does not advertise containers to clients until they are ready to serve.

- Secret and configuration management: Kubernetes can store and manage sensitive information such as passwords and tokens. Secrets and application configuration can be deployed and updated without rebuilding container images.

- Batch execution: In addition to services, Kubernetes can manage batch and continuous integration workloads, replacing containers that fail if desired.

- Horizontal scaling: Kubernetes simplifies scaling, so applications can scale up or down in response to demand. You can scale with a simple command, through a user interface, or automatically based on CPU usage.

- IPv4/IPv6 dual-stack support: Kubernetes allows IPv4 and IPv6 addresses to be assigned to pods and services to accommodate changing networking standards.

- Designed for extensibility: Kubernetes is designed to be extensible. Users can add new functionality to their clusters without changing the upstream source code.
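For the CPU-based horizontal scaling mentioned in the list above, the Horizontal Pod Autoscaler documentation describes the core calculation as desired replicas = ceil(current replicas * current metric / target metric). The sketch below applies that ratio and clamps the result to a range. The function name, the default bounds, and the sample numbers are illustrative assumptions, and the real autoscaler adds tolerances and stabilisation behaviour that are left out here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_cpu_percent: float,
    target_cpu_percent: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Apply the basic ratio formula, then clamp to the allowed range."""
    if target_cpu_percent <= 0:
        raise ValueError("target_cpu_percent must be positive")
    raw = math.ceil(current_replicas * current_cpu_percent / target_cpu_percent)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # Hypothetical load: 4 replicas averaging 90% CPU against a 60% target.
    print(desired_replicas(4, 90.0, 60.0))
```

With four replicas running at 90% against a 60% target, the ratio is 1.5, so the sketch asks for six replicas; when usage falls well below the target, the same formula scales the application back down.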

Where to use Kubernetes?

As discussed, there are many benefits of using Kubernetes. Let’s see where exactly you can use it.

- Microservices architecture: Kubernetes is especially well suited to managing microservices-based systems. It provides isolation and flexibility by enabling each microservice to run in its own container.

- Continuous integration/continuous deployment (CI/CD): Kubernetes allows for automated testing, deployment, and rollbacks by integrating easily with CI/CD pipelines. The software development life cycle is accelerated as a result.

- Hybrid and multi-cloud environments: Kubernetes offers a unified platform for on-premises and cloud environments from many providers. This portability is useful for businesses using multi-cloud or hybrid strategies.

- Edge computing: As edge computing gains traction, Kubernetes is increasingly used to orchestrate containerised apps at the edge. It offers consistent application management regardless of where an application is deployed.

- Stateful applications: Kubernetes was initially designed with stateless apps in mind, but it has since evolved to support stateful applications. For example, StatefulSets facilitate the deployment and scaling of stateful applications.
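One reason StatefulSets suit stateful workloads is that each pod receives a stable, ordinal-based identity: pods are named `<statefulset-name>-<ordinal>` and, when paired with a headless Service, are reachable at a predictable DNS name. The sketch below only computes those names and does not talk to a cluster. The function names, the `db` set, the `db-headless` service, and the `default` namespace are hypothetical, and `cluster.local` is assumed as the cluster domain.

```python
def stateful_pod_names(set_name: str, replicas: int) -> list[str]:
    """Pod names follow <statefulset-name>-<ordinal>, starting at 0 by default."""
    return [f"{set_name}-{i}" for i in range(replicas)]


def stateful_pod_dns(
    pod_name: str,
    service: str,
    namespace: str = "default",
    cluster_domain: str = "cluster.local",
) -> str:
    """Build the per-pod DNS name provided through a headless Service."""
    return f"{pod_name}.{service}.{namespace}.svc.{cluster_domain}"


if __name__ == "__main__":
    # Hypothetical three-replica database set behind a headless Service.
    for pod in stateful_pod_names("db", 3):
        print(stateful_pod_dns(pod, "db-headless"))
```

Because these names stay the same when a pod is rescheduled, a replica in a database cluster can keep addressing its peers without reconfiguration.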

To sum up, Kubernetes stands out as a transformative force in container orchestration, providing unmatched benefits for the management and scaling of containerised applications.