Built on the InstructLab open-source project, RHEL AI simplifies the creation, testing, and deployment of Granite generative AI models, empowering domain experts and fostering open collaboration.

Red Hat has unveiled a developer preview of Red Hat Enterprise Linux AI (RHEL AI), a foundation model platform designed to simplify the creation, testing, and deployment of open-source Granite generative AI models for enterprise applications.

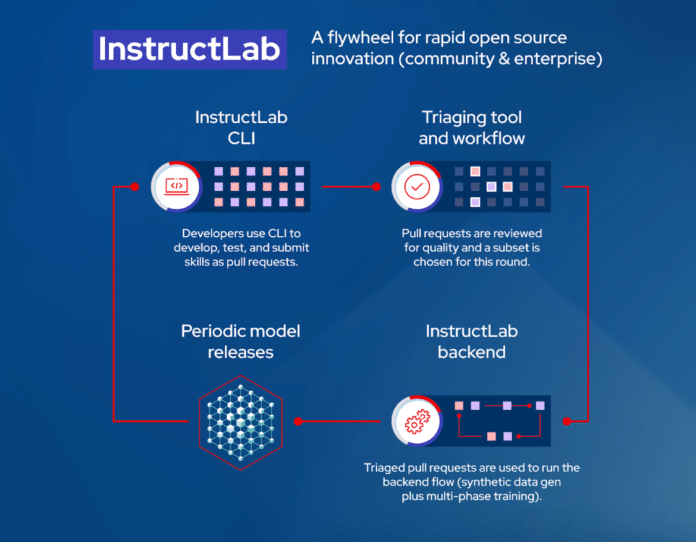

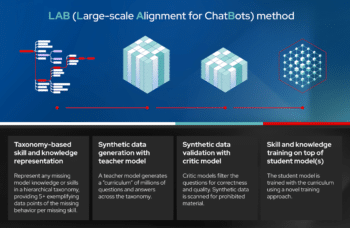

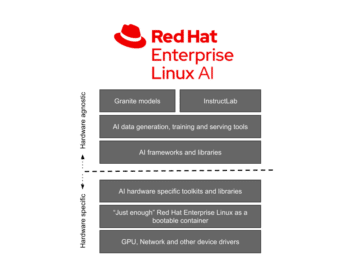

Built upon the InstructLab open-source project, RHEL AI integrates open-source-licensed Granite large language models from IBM Research with InstructLab model alignment tools. These tools, based on the LAB (Large-scale Alignment for chatBots) methodology, are packaged into an optimized, bootable RHEL image, streamlining server deployments.

The primary objective behind RHEL AI and the InstructLab project is to empower domain experts to contribute directly to large language models (LLMs), thereby facilitating the development of AI-infused applications such as chatbots. RHEL AI combines community-driven innovation, user-friendly software tools tailored for domain experts, and optimized AI hardware enablement.

Large language models have gained significant traction in enterprise organizations, with closed-source models dominating the landscape. Red Hat envisions a shift towards purpose-built, cost-optimized models supported by robust MLOps tooling, prioritizing data privacy and confidentiality.

Fine-tuning large language models typically demands data science expertise and substantial resources, which makes the training pipeline both costly and hard to access. Red Hat, in collaboration with IBM and the open-source community, aims to democratize AI development by introducing familiar open-source contributor workflows and permissive licensing to foster collaboration and innovation.

Red Hat Enterprise Linux AI comprises four foundational components: open Granite models, InstructLab model alignment, optimized bootable Red Hat Enterprise Linux, and enterprise support and indemnification.

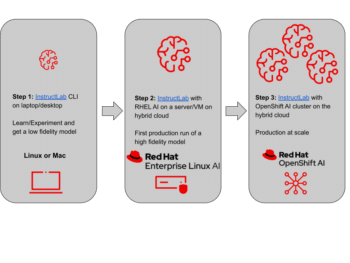

To support the path from experimentation to production, Red Hat outlines a phased approach: experimentation on local devices using the InstructLab CLI, followed by deployment on bare-metal servers or cloud-based virtual machines, and finally production deployment at scale with OpenShift AI.

Overall, Red Hat’s initiative signals a shift towards open collaboration and innovation in large language models and enterprise AI software.