Why stick with a 6GB Docker image just because it works? Downtime matters, services need updates, and lightweight images deploy faster and save time.

I work on a project called Kube-ez. It is a simple Golang project, yet its Docker image was nearly 0.5GB in size. Spinning up any container from this image took far too long. The problem not only affected deployments but also slowed down the CI jobs, delaying my entire software development life cycle.

I was able to fix this issue by reducing the image size by 97%, turning it from ~0.5GB to a mere 16MB. This change enabled me to build more reliable deployments and faster CI jobs.

Let me present the approach I adopted to address this problem, and discuss some alternative solutions.

Understanding the problem

In the world of microservices, most production workloads run in containers. An image is like the CD or flash drive that holds a certain song (your software), and the container is the player that runs it.

Docker images aim to give developers a consistent setup regardless of platform and environment. However, this consistency should not come at the cost of storage and speed.

- Bulky images take longer to download.

- Containers built from them take longer to spin up, slowing deployments and CI pipelines.

- They increase the load and cost of the image registry.

- They usually contain more packages, so with every upgrade it becomes harder to check them for vulnerabilities.
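To put the download point in rough numbers, here is a minimal sketch that estimates pull time from image size alone. The 100 Mbit/s link speed is an assumption chosen for illustration, and the estimate ignores layer caching, compression, and registry latency, so real pulls will differ.

```go
package main

import "fmt"

// transferSeconds estimates how long it takes to move sizeMB megabytes
// over a link of linkMbps megabits per second. It ignores layer caching,
// compression, and registry latency, so treat it as a rough estimate only.
func transferSeconds(sizeMB, linkMbps float64) float64 {
	return sizeMB * 8 / linkMbps
}

func main() {
	const linkMbps = 100.0 // assumed bandwidth, not a measured value

	// 500 MB and 16 MB are the before and after sizes quoted in this article.
	for _, sizeMB := range []float64{500, 16} {
		fmt.Printf("%.0f MB image: ~%.1f s to pull\n", sizeMB, transferSeconds(sizeMB, linkMbps))
	}
}
```

Under these assumptions, the 0.5GB image needs about 40 seconds on the wire, while the 16MB image needs a little over one second.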

Why solve this problem?

I often hear from developers: “So what if the Docker image is 6GB; it still runs, right? Don’t touch it.”

However, I disagree based on my experience as a site reliability engineer at Juspay (an Indian fintech startup).

Keeping a service up and running is always a significant challenge. But let’s agree that no company has an SLO of 100%. Each second of downtime counts!

Someday, the service is bound to crash or run into errors. Even in the best-case scenario, you will need to update it sometime. On that day, when you need to spin up a new container or an updated container, having a lighter Docker image will help.

A lighter Docker image ensures that containers spin up faster, saving precious seconds for your company. Also, services like AWS ECR charge nearly $0.10 per GB of images stored each month, so lighter images are the cost-effective choice as well.
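As a back-of-the-envelope check on the storage cost, the sketch below multiplies image size, number of stored copies, and the $0.10 per GB rate quoted above. The figure of 200 retained image tags is hypothetical. Registries typically store shared layers only once, so this simple product tends to overestimate the real bill.

```go
package main

import "fmt"

// monthlyStorageUSD returns the monthly registry cost of storing `copies`
// images of sizeGB each at pricePerGB dollars per GB per month.
// It deliberately ignores layer sharing between images.
func monthlyStorageUSD(sizeGB float64, copies int, pricePerGB float64) float64 {
	return sizeGB * float64(copies) * pricePerGB
}

func main() {
	const (
		pricePerGB = 0.10 // rate quoted in the text
		copies     = 200  // hypothetical number of retained image tags
	)

	fmt.Printf("0.5 GB image:   $%.2f per month\n", monthlyStorageUSD(0.5, copies, pricePerGB))
	fmt.Printf("0.016 GB image: $%.2f per month\n", monthlyStorageUSD(0.016, copies, pricePerGB))
}
```

The absolute numbers are small for a single service, but they scale linearly with the number of services and retained tags.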

Ways to reduce the Docker image size

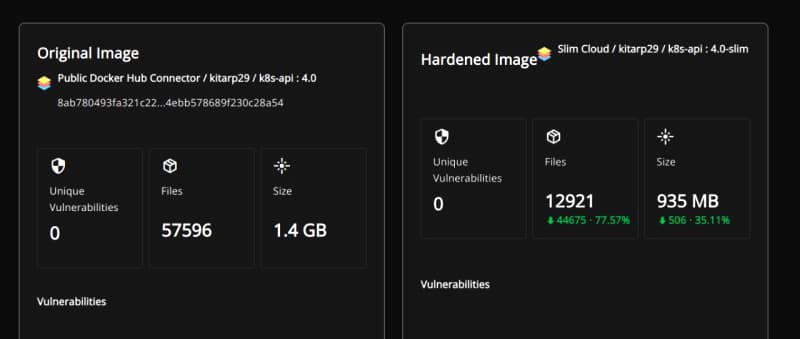

There are three different approaches to this task. The first is to use a third-party tool such as Docker Slim to handle the heavy lifting. The second is to delve deep into your Docker images and improve them layer by layer. The third is to use docker init, Docker's own recently introduced command, which simplifies the process of creating Docker images according to best practices.

Docker Slim

Docker Slim (https://slimtoolkit.org/), now known as SlimToolkit, is the easiest way to get started on this problem.

- Easily reduces the size of your Docker images.

- Connects to DockerHub and gives you a user-friendly platform for managing images on the registry.

- Checks and fixes vulnerabilities.

- Installation and usage are straightforward.

However, it would be great to see this platform support APK and other package installation methods in the future. As you can see from the results (Figure 1), this tool can help you reduce the image size by around 37%.

If you wish to reduce the size of a local Docker image, you can refer to the article by the Red Hat Developer group at https://developers.redhat.com/articles/2022/01/17/reduce-size-container-images-dockerslim#using_dockerslim.

Manual approach

This approach requires a good understanding of your project. There are two steps in this approach, and you can stop after the first step if it satisfies your needs. Let’s dig deeper.

Using lighter base images: This is the easiest and the first option to consider. Use a lighter image as the base layer for your container. You can opt for an Alpine image of the OS or the programming language you are using.

Alpine images are minimal, bare-bones OS images that leave out software and drivers a container does not need, such as graphics, Wi-Fi, and more.

Your Dockerfile would look something like this:

    FROM golang:alpine
    WORKDIR /app
    COPY . .
    RUN go build -o app
    CMD ["./app"]

Multistage build: The idea is to use two different stages in the image. One is to build the binary, and the next is to simply use it!

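In my case, I applied the multistage build pattern: the build stage creates the binary, and the final stage only uses it to run the container. This brought the image size down to ~35MB. Further size reduction came from optimising the GCC dependency (the final Dockerfile sets CGO_ENABLED=0 and strips symbol and debug information with -ldflags "-s -w"), resulting in an image size of around 16MB.

Since a compiled Go binary runs without the Go toolchain, the final stage does not need the golang:alpine image; a plain alpine image is enough.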

Here is my final Dockerfile:

    # Build
    FROM golang:alpine AS build
    WORKDIR /k8-api
    COPY . .
    RUN go mod download && \
        GO111MODULE=on CGO_ENABLED=0 go build -ldflags "-s -w"

    # Deploy
    FROM alpine
    COPY --from=build /k8-api/k8-api .
    ENTRYPOINT ["./k8-api"]
    EXPOSE 8000

Docker init

Docker has recently released a command called 'docker init', which automates much of this process by generating a Dockerfile, a .dockerignore file, and a Compose file for your project. The generated files follow best practices for creating a Docker image, which also helps keep the image small. I am still trying it with different projects, but the preliminary tests seem promising.

You can read about docker init at https://docs.docker.com/engine/reference/commandline/init/

We have discussed three different approaches for reducing Docker image size, and provided tips and resources for further optimisation. You can choose the approach that best suits your needs in order to improve production performance and reliability.