Discover how Oobleck, a training framework built on pipeline templates, guarantees fast recovery from failures without sacrificing training throughput, making it a potential game-changer in generative AI.

The ever-growing demand for generative AI technologies has propelled researchers to find innovative solutions to meet the surging processing needs for model training while ensuring fault tolerance. In a groundbreaking development, a team of researchers from the University of Michigan has unveiled “Oobleck,” an open-source large-model training framework designed to address the challenges of modern AI workloads.

Oobleck, which takes its name from the quirky Dr. Seuss substance, offers a cutting-edge approach to making large-model pre-training more resilient. The framework uses pipeline templates to provide lightning-fast, guaranteed fault recovery without compromising training throughput. Mosharaf Chowdhury, an associate professor of electrical engineering and computer science at the University of Michigan and the corresponding author of the paper, emphasised Oobleck's significance: “Oobleck is a general-purpose solution to add efficient resilience to any large model pre-training. As a result, its impact will be felt in foundation model pre-training for the entire range of their applications, from big tech and high-performance computing to science and medical fields.”

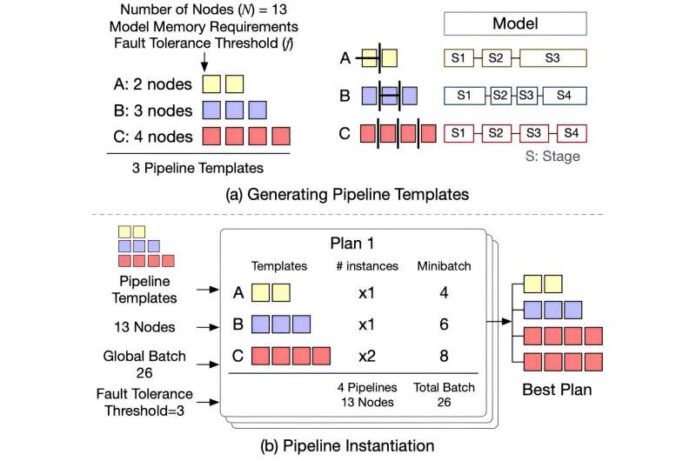

The critical challenge with large language models lies in their heavy reliance on massive GPU clusters for prolonged periods during pre-training, which increases the likelihood of failures. When a failure does occur, the synchronous nature of pre-training makes things worse: every participating GPU sits idle until the issue is resolved. Existing frameworks offer limited support for fault tolerance during pre-training. They rely on time-consuming checkpointing or recomputation, which leaves the whole cluster idle during recovery, and they provide no formal guarantees of fault tolerance.

Oobleck's innovation is to place pipeline templates at its core. Each template specifies how the training pipeline executes on a given number of nodes, and templates are used to create pipeline replicas. Replicas built from different templates are logically equivalent, since each holds the full model, but physically heterogeneous, since they span different numbers of nodes, so they can be used together to train the same model.
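To make the template idea concrete, here is a minimal sketch, assuming a model of 24 identical layers and one template per node count from 2 to 5. The names `PipelineTemplate`, `make_template`, `covers_model`, and `generate_templates` are hypothetical, and the even layer split is a simplification: a real planner would balance stages using measured per-layer costs rather than layer counts. The point it shows is that templates with different node counts all cover the whole model (logically equivalent) while differing in physical layout (heterogeneous).

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class PipelineTemplate:
    """Hypothetical template: how ONE pipeline replica lays the model out over num_nodes nodes."""
    num_nodes: int
    stages: Tuple[Tuple[int, int], ...]  # per-node [start_layer, end_layer) ranges


def make_template(num_layers: int, num_nodes: int) -> PipelineTemplate:
    """Split layers across nodes as evenly as possible (a stand-in for a cost-model planner)."""
    if not 1 <= num_nodes <= num_layers:
        raise ValueError("need between 1 and num_layers nodes")
    base, extra = divmod(num_layers, num_nodes)
    stages, start = [], 0
    for i in range(num_nodes):
        size = base + (1 if i < extra else 0)  # the first `extra` stages get one more layer
        stages.append((start, start + size))
        start += size
    return PipelineTemplate(num_nodes, tuple(stages))


def covers_model(t: PipelineTemplate, num_layers: int) -> bool:
    """Logical equivalence check: stages are contiguous and span every layer exactly once."""
    ends = [0] + [end for _, end in t.stages]
    starts = [start for start, _ in t.stages]
    return starts == ends[:-1] and ends[-1] == num_layers and all(s < e for s, e in t.stages)


def generate_templates(num_layers: int, min_nodes: int, max_nodes: int) -> Dict[int, PipelineTemplate]:
    """Precompute one template per supported node count, before training starts."""
    return {n: make_template(num_layers, n) for n in range(min_nodes, max_nodes + 1)}


templates = generate_templates(num_layers=24, min_nodes=2, max_nodes=5)
for n, t in templates.items():
    print(n, "nodes:", t.stages, "covers model:", covers_model(t, 24))
```

Because every template is computed ahead of time, switching a replica from one node count to another later is a lookup rather than a fresh planning problem.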

Large Language Model Fault Tolerance and Training Efficiency

The researchers explained that Oobleck is the first work to exploit the inherent redundancy in large language model training for fault tolerance while combining it with pre-generated heterogeneous templates. The framework provides high throughput with maximum utilisation, guaranteed fault tolerance, and fast recovery without the overheads of checkpointing- or recomputation-based approaches.

Oobleck's execution engine starts from the maximum number of simultaneous failures to tolerate, f, and instantiates at least f + 1 heterogeneous pipeline replicas from the generated set of templates. The global batch is then distributed across the replicas in proportion to their computing capability, so that larger and smaller pipelines finish each iteration at about the same time and none holds up the synchronous training step. When failures occur, Oobleck re-instantiates pipelines from the precomputed templates, eliminating time-consuming configuration analysis at runtime. As a result, the approach is guaranteed to recover from f or fewer simultaneous failures, providing a robust solution to the challenges of large model pre-training.
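The planning and recovery steps above can be sketched as follows, under loudly stated assumptions. The functions `choose_pipelines` and `split_batch`, the template sizes, and the cluster of 13 nodes are all hypothetical; a pipeline's computing capability is assumed to be proportional to its node count; and the recovery policy (ask for one fewer replica after each failure) is purely illustrative. The backtracking search is a simple stand-in, not Oobleck's actual planner, and unlike Oobleck it offers no guarantee: it simply returns `None` when no valid combination exists.

```python
from typing import List, Optional


def choose_pipelines(num_nodes: int, template_sizes: List[int], min_pipelines: int) -> Optional[List[int]]:
    """Pick pipeline sizes (node counts that have a precomputed template) that use
    every available node exactly once and yield at least `min_pipelines` replicas.
    Larger templates are tried first; backtracks if too few replicas result."""
    sizes = sorted(set(template_sizes), reverse=True)

    def search(remaining: int, start: int, chosen: List[int]) -> Optional[List[int]]:
        if remaining == 0:
            return list(chosen) if len(chosen) >= min_pipelines else None
        for i in range(start, len(sizes)):
            if sizes[i] <= remaining:
                chosen.append(sizes[i])
                found = search(remaining - sizes[i], i, chosen)
                chosen.pop()
                if found is not None:
                    return found
        return None

    return search(num_nodes, 0, [])


def split_batch(global_batch: int, pipeline_nodes: List[int]) -> List[int]:
    """Split the global batch in proportion to each pipeline's capability.
    Assumption: capability is proportional to node count. Shares are rounded
    down, then leftover samples go to the pipelines with the largest remainders."""
    total = sum(pipeline_nodes)
    shares = [global_batch * n // total for n in pipeline_nodes]
    rems = [global_batch * n % total for n in pipeline_nodes]
    leftover = global_batch - sum(shares)
    for idx in sorted(range(len(shares)), key=lambda j: -rems[j])[:leftover]:
        shares[idx] += 1
    return shares


TEMPLATE_SIZES = [2, 3, 4, 5]  # hypothetical: node counts with a precomputed template
f = 2                          # tolerate up to 2 simultaneous failures

plan = choose_pipelines(13, TEMPLATE_SIZES, min_pipelines=f + 1)
print(plan, split_batch(1024, plan))  # [5, 5, 3] [394, 394, 236]

# One node fails: re-plan the 12 survivors from the SAME precomputed templates,
# with one unit of the failure budget used up (illustrative policy).
plan = choose_pipelines(12, TEMPLATE_SIZES, min_pipelines=f)
print(plan, split_batch(1024, plan))  # [5, 5, 2] [427, 427, 170]
```

Note that recovery never re-plans the model layout: the damaged replica is simply rebuilt from a smaller template that already exists, and the batch is re-split so the global batch size stays at 1024.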

The introduction of Oobleck marks a significant milestone in resilient distributed computing, balancing fast recovery against sustained training throughput in the face of unpredictable failures. The researchers envision applying pipeline templates to make other distributed computing systems more resilient in the future, starting with GenAI applications and inference serving systems. As the demand for generative AI continues to grow, Oobleck's approach promises to play a crucial role in meeting the field's evolving needs.