The authors describe in detail how to build an application that shares the terminal output on the Internet, and can also search the files that are shared.

Most educational institutes in India have mandatory projects for students as part of their courses. Working together on a project generally involves sharing terminal outputs, which can be cumbersome for the teams collaborating with each other. The Web application described in this article shares the terminal output on the Internet and can also search the files that are shared. This application can be used by students and teachers, as well as by engineers working with IoT devices. It has been designed to share files of any format.

The motivation behind the project was to bring all these tasks together in one application that supports many console output systems, in fields ranging from education to IoT. Its GUI ensures the system can be used without any technical knowledge.

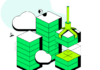

The application is named ‘Real_time_sharing’. It is a Web application that helps share terminal output. After registering with an email ID, a student can log in and upload output files, which are stored in a MongoDB database. After logging in, a faculty member can access the files shared by every student, evaluate the work based on its novelty, and then upload the evaluated files.

The application is platform-independent. It supports durability, monitoring and a secure connection to an SSH-enabled database, i.e., MongoDB. A login system authenticates the appropriate users.

The workflow of the application is depicted in Figure 1. The source code for this project is available at https://github.com/cmouli96/real_time_sharing. The setup is as follows.

System configuration

Ubuntu 18.04 LTS, 8GB RAM and a 4-core CPU

Features

- Users can upload output files from the terminal in any format and of any size.

- The guidelines for the file creation are given.

- Users can download and delete the files from the database.

- Users can search the files from the database.

- The async/await mechanism is used to sequence promise-based asynchronous operations.

- The Express framework is used to structure the application and handle requests.

- Body-parser is used to parse the body of incoming requests, so that submitted form data is available to the route handlers.

- The EJS template engine is used to simplify the setting up of dynamic content in HTML pages.

- Files are stored as chunks; this makes it possible to store files of any size in the database.

- Mongoose is used to define the schema and store the files in the database.
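Two of the points above, async/await and request-body parsing, can be illustrated with Node.js built-ins alone. The sketch below is not the project's code: `parseFormBody`, `saveUser` and `handleRegister` are hypothetical names, and an in-memory Map stands in for MongoDB. It parses a URL-encoded form body (roughly the job body-parser does for Express) and then awaits each asynchronous step in turn.

```javascript
// Minimal sketch using only Node.js built-ins; all names are hypothetical.

// Parse an application/x-www-form-urlencoded body into a plain object,
// roughly what body-parser makes available to a route handler as req.body.
function parseFormBody(rawBody) {
  return Object.fromEntries(new URLSearchParams(rawBody));
}

// In-memory stand-in for a database collection (assumption, not MongoDB).
const users = new Map();

// Simulate an asynchronous database write that resolves with the saved user.
function saveUser(user) {
  return new Promise((resolve) => {
    setImmediate(() => {
      users.set(user.email, user);
      resolve(user);
    });
  });
}

// async/await lets the steps read top to bottom instead of nesting .then() calls.
async function handleRegister(rawBody) {
  const body = parseFormBody(rawBody);
  const saved = await saveUser({ email: body.email, name: body.name });
  return `registered ${saved.email}`;
}
```

Each `await` pauses only the surrounding function, so the server remains free to handle other requests while the write completes.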

Functionality

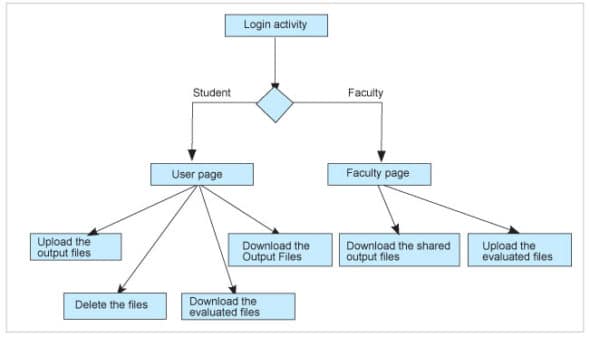

Login/register: You register by providing an email ID, user name, phone number and password. You can register as a student or as an admin. The admin can see all the users’ files, and upload or download them. The details you provide are validated and then stored in the database. The user registration template is shown in Figure 2.

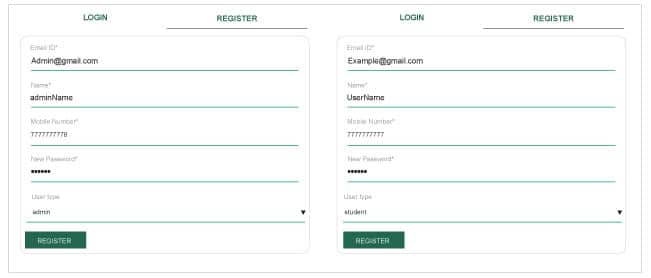

You can log in using the email ID and password provided while registering. The application validates the details and redirects you to the user page; if validation fails, it shows the error message ‘email-id/password incorrect’. The user login page is shown in Figure 3.
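The login check described above can be sketched as follows. The article does not say how passwords are stored, so the salted scrypt hashing here is an assumption, as are the function names, the shape of the user record and the redirect paths. Only the error message is taken from the application.

```javascript
// Minimal sketch with Node's built-in crypto module; not the project's code.
const crypto = require('node:crypto');

// Hash a password with a random salt (assumption: the storage scheme
// used by the real application is not described in the article).
function hashPassword(password) {
  const salt = crypto.randomBytes(16).toString('hex');
  const hash = crypto.scryptSync(password, salt, 64).toString('hex');
  return `${salt}:${hash}`;
}

// Recompute the hash with the stored salt and compare in constant time.
function verifyPassword(password, stored) {
  const [salt, hash] = stored.split(':');
  const expected = Buffer.from(hash, 'hex');
  const actual = crypto.scryptSync(password, salt, 64);
  return crypto.timingSafeEqual(expected, actual);
}

// users: Map of email -> { email, passwordHash, role } (hypothetical shape).
function login(users, email, password) {
  const user = users.get(email);
  if (!user || !verifyPassword(password, user.passwordHash)) {
    // Same message for an unknown email and a wrong password.
    return { ok: false, error: 'email-id/password incorrect' };
  }
  // Hypothetical redirect targets for the two roles.
  return { ok: true, redirect: user.role === 'admin' ? '/admin' : '/user' };
}
```

Returning one message for both failure cases avoids revealing which email IDs are registered.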

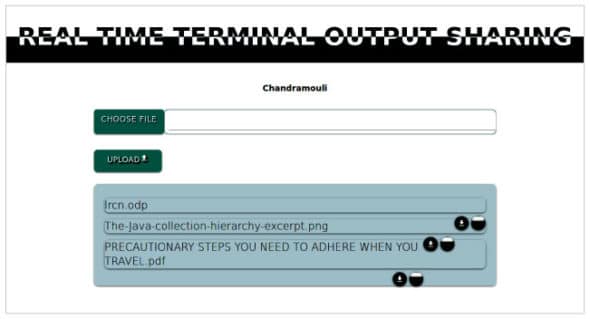

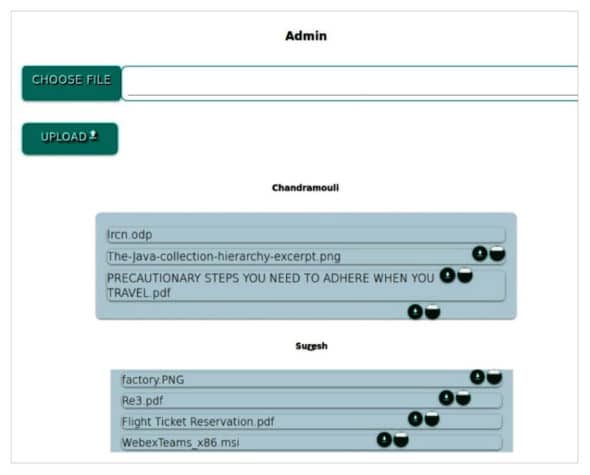

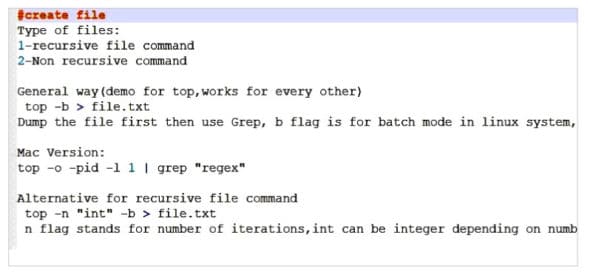

User page: You can upload files to the application, and all your files are displayed on this page. You can also download or delete the files at any time. The files that you upload can be of any size, as they are all stored in the form of small chunks. Figure 4 shows the user page, Figure 5 shows the admin page, and Figure 6 demonstrates how to create a console file.

GridFS: GridFS storage is used to store all your files. It divides each file into small chunks of bytes, which helps to store the data efficiently. GridFS records with every chunk the ID of the file it belongs to and its position in the sequence, so that the chunks are put back in the same order when the file is retrieved.
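The chunking idea can be sketched in a few lines. This is an illustration only and does not talk to MongoDB; `splitIntoChunks` and `reassemble` are hypothetical helpers. The chunk documents mirror the shape GridFS uses, with a `files_id` that points to the parent file and a sequence number `n`, and 255kB is the default GridFS chunk size.

```javascript
// Minimal sketch of GridFS-style chunking; it does not connect to MongoDB.
const CHUNK_SIZE = 255 * 1024; // default GridFS chunk size (255kB)

// Split a Buffer into chunk documents shaped like those in a GridFS
// chunks collection: the parent file's ID plus a sequence number n.
function splitIntoChunks(fileId, data, chunkSize = CHUNK_SIZE) {
  const chunks = [];
  for (let offset = 0, n = 0; offset < data.length; offset += chunkSize, n += 1) {
    chunks.push({ files_id: fileId, n, data: data.subarray(offset, offset + chunkSize) });
  }
  return chunks;
}

// Rebuild the file by ordering the chunks on n, whatever order they arrive in.
function reassemble(chunks) {
  const ordered = [...chunks].sort((a, b) => a.n - b.n);
  return Buffer.concat(ordered.map((chunk) => chunk.data));
}
```

Because every chunk is bounded in size, a large file never has to fit into a single database document.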

Connection to MongoDB: GridFS stores the files as chunks at the location it is given, and Mongoose handles the connection to MongoDB. First, connect to MongoDB. Then, create a schema and use it to fix the storage location. That location is passed to GridFS with the help of Multer. Together, GridFS and Mongoose store the user files in the database.

Retrieving files from storage: Students can view all the files in their own account, while the admin can view all the files in the database. Files are sorted by the time at which they were uploaded.
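The visibility and ordering rules above, together with the search feature, can be sketched on plain objects. The record shape (`filename`, `owner`, `uploadDate`), the `listFiles` name and the oldest-first order are assumptions made for illustration; the real application reads these records from MongoDB.

```javascript
// Minimal sketch on in-memory records; the field names are hypothetical.
// files: array of { filename, owner, uploadDate }
// user:  { email, role } where role is 'student' or 'admin'
function listFiles(files, user, query = '') {
  const needle = query.toLowerCase();
  return files
    // Admins see every file; students see only their own.
    .filter((file) => user.role === 'admin' || file.owner === user.email)
    // An empty query matches every file name.
    .filter((file) => file.filename.toLowerCase().includes(needle))
    // Oldest upload first (assumed order).
    .sort((a, b) => a.uploadDate - b.uploadDate);
}
```

The filters return new arrays, so the sort does not reorder the caller's original list.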

Uploading files to a database: The upload function is already defined. Here, it uploads your files into the user database.

Downloading files from a database: You can download any file that is retrieved and displayed in the user page by clicking the ‘Download’ button.

Deleting files from a database: You can delete any file that is retrieved and displayed in the user page by using the ‘Delete’ button.

ELK logger

GELF: The Graylog Extended Log Format (GELF) provides better functionality than the traditional syslog format. It is space optimised and reduces the payload. It has well-defined data structures in which strings and numbers are clearly differentiated, which traditional syslog lacks. It also supports compression. We have routed the incoming GELF traffic to Logstash and forwarded it to Elasticsearch on port 9200.
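To make the format concrete, here is a minimal sketch of building and compressing a GELF 1.1 message with Node.js built-ins. The helper names and the sample fields are hypothetical, and sending the payload to Logstash is left out. Note how additional fields carry an underscore prefix and how numbers stay numbers rather than being flattened into text.

```javascript
// Minimal sketch of a GELF 1.1 payload; not the project's logging code.
const os = require('node:os');
const zlib = require('node:zlib');

// level follows syslog severities (6 = informational).
function buildGelfMessage(shortMessage, level = 6, extra = {}) {
  const message = {
    version: '1.1',
    host: os.hostname(),
    short_message: shortMessage,
    timestamp: Date.now() / 1000, // seconds since the epoch
    level,
  };
  // Additional fields must be prefixed with an underscore.
  for (const [key, value] of Object.entries(extra)) {
    message[`_${key}`] = value;
  }
  return message;
}

// Serialise the message to JSON and gzip it to reduce the payload.
function encodeGelf(message) {
  return zlib.gzipSync(Buffer.from(JSON.stringify(message), 'utf8'));
}
```

For example, `encodeGelf(buildGelfMessage('file uploaded', 6, { size: 1024 }))` returns a compressed Buffer in which `_size` is still a number once the receiver decompresses and parses it.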

Elasticsearch, Logstash and Kibana (ELK): We have configured the ELK stack in a Docker image with the appropriate networks and host such that it can work anywhere, provided there is an appropriate path from GELF to Logstash.

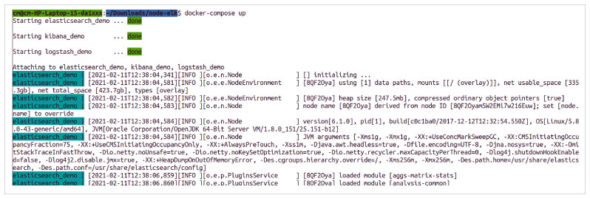

We have written a docker-compose file for three containers. Run the docker-compose up command to start these containers. Figure 7 shows a screenshot of the command execution.

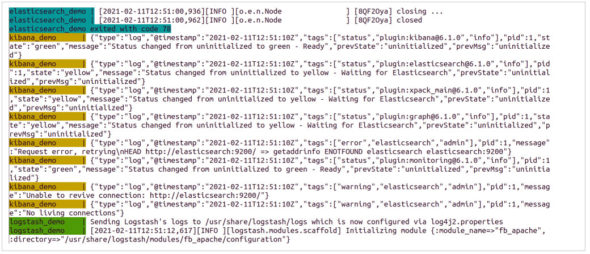

The three containers sync up, and the environments get configured according to the docker-compose.yml file. Logstash then collects the logs, as shown in Figure 8.

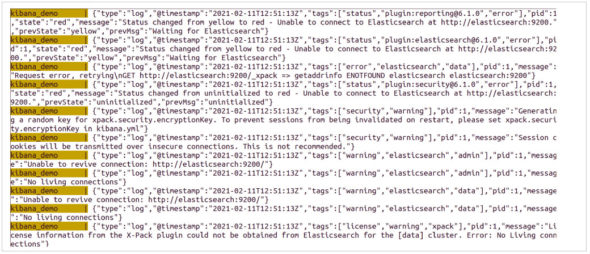

Elasticsearch and Kibana parse the input log file and colour code the incoming log messages, as shown in Figure 9. Green marks the most appropriate and correct messages, while red marks the most inappropriate and severe ones.

Now, Kibana and the rest of the ELK stack run in parallel to fetch the logs automatically at a certain interval.

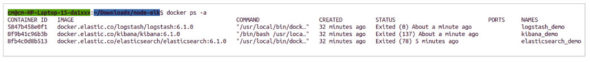

You can check that all the containers are in sync with the docker ps -a command, which shows the running time of all three containers (Figure 10).

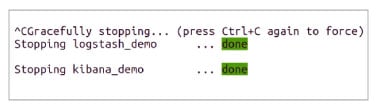

You can terminate the docker-compose command with CTRL+C, which stops all the containers in sequence while preserving their state. The screenshot for this is shown in Figure 11.

This application can be used as a platform for online exams and for IoT based receivers. It can be used to track real-time console outputs for the evaluation of any project, including audio and video based projects apart from the routine text and image based ones. IoT sensors need to transfer large amounts of data frequently in order to work properly; you can add a cron job that uses the functionality of this project to do so. Video proctoring features can also be added to this application.