EXPLAIN with different queries. Then we found a situation where EXPLAIN showed unexpected results. So now it’s time to continue the discussion.Let me recapitulate briefly what we discussed earlier. We created a table with the following script:

CREATE TABLE 'student_master' (

'student_id' INT(10) NOT NULL DEFAULT '0',

'full_name' VARCHAR(100) NOT NULL,

'email_address' VARCHAR(100) NOT NULL,

'password' VARCHAR(100) NOT NULL,

'deleted' TINYINT(4) NOT NULL,

PRIMARY KEY ('student_id')

)

COLLATE='utf8_general_ci'

ENGINE=InnoDB

ROW_FORMAT=DEFAULT

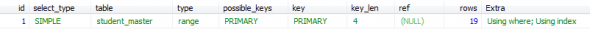

We inserted 100 rows in the table, then executed a series of queries with EXPLAIN. The last two queries were as follows; their results are shown in Figures 1 and 2, respectively:

EXPLAIN SELECT student_id FROM student_master WHERE student_id IN (1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 20)

EXPLAIN SELECT student_id FROM student_master WHERE student_id IN (1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20)

We only added one value to the IN list, 19, and as you can see in the result, the ‘type’ changed from ‘range’ to ‘index’. We also discussed the fact that an index type of scan is costlier than the range type of scan on a table. This is where we left off last month, and you were requested to find out the reason for this change from range to index.

To understand this, we must first get a thorough understanding of some of the internals of MySQL, after which we will come back to this point.

One important aspect that differentiates MySQL from most other popular databases is the availability of multiple storage engines. This has its advantages and disadvantages. The two biggest advantages are that, first, you can choose the storage engine that best serves the purpose. For instance, in some cases, MyISAM is a great choice, and in some, InnoDB is unavoidable. Second, though it is not easy, you can plug in storage engines. For instance, InnoDB is compiled and linked with a typical MySQL distribution. However, you can download a later version, and use that as a plugin, which will give you better performance.

Having mentioned the advantages, the biggest problem regarding the choice of storage engines is that users (developers and DB designers) have to understand the internals of not only MySQL, but multiple other storage engines as well. What might appear as the perfect indexing/querying strategy for a MyISAM-based table may not be perfect for an InnoDB-based table.

It’s now time for some more queries that will change your perception towards some of the frequently used queries by you.

The problem of expression evaluation

Create a table named table_with_date_field, with the following query:

CREATE TABLE 'table_with_date_field' (

'pk_column' INT(10) NOT NULL AUTO_INCREMENT,

'date_column' DATE NOT NULL,

PRIMARY KEY ('pk_column'),

INDEX 'date_column' ('date_column')

)

COLLATE='utf8_general_ci'

ENGINE=InnoDB

ROW_FORMAT=DEFAULT

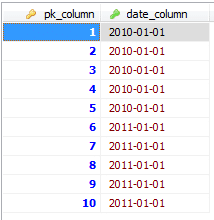

We have two columns, pk_column and date_column. The first is the primary key, an integer field. The second is a date field, which is also indexed. Now add 10 rows in the table, so that you have data in the table as shown in Figure 3.

We now have five rows with the date 2010-01-01 and the other five with 2011-01-01. Now, if we want to get all the rows where the date belongs to the year 2010, the simple query given below should be sufficient:

SELECT * FROM table_with_date_field WHERE date_column < '2011'

As date_column is indexed, we believe any operation done on date_column will be highly optimised. Let’s check that out. Issue the following query (the results are shown in Figure 4):

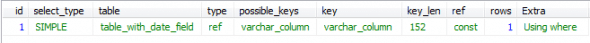

EXPLAIN SELECT * FROM table_with_date_field WHERE date_column < '2011'

As you can see, we have the table scan devil back. Why? We have added an index for date_column, and the WHEREclause includes only one column. The problem is Expression Evaluation. Make a small change in the query and execute the following (Query 4):

EXPLAIN SELECT * FROM table_with_date_field WHERE date_column < '2011-01-01'

The results are shown in Figure 5. Hurray! We got rid of the table scan, and the scan type has also changed from ‘index’ to ‘range’! But why? This is because an expression like WHERE date_column < '2011' is incomplete, and of almost no use. This forces MySQL to evaluate every row in the table. We have an index on date_column, otherwise, this query is bad enough to cause a scan of type ‘ALL’. The presence of the index changed the scan type to ‘index’, which isn’t good either.

Now add one VARCHAR column in the table (you could alter the table, or drop it and use this new creation script):

CREATE TABLE 'table_with_date_field' (

'pk_column' INT(10) NOT NULL AUTO_INCREMENT,

'date_column' DATE NOT NULL,

'varchar_column' VARCHAR(50) NOT NULL,

PRIMARY KEY ('pk_column'),

INDEX 'date_column' ('date_column'),

INDEX 'varchar_column' ('varchar_column')

)

COLLATE='utf8_general_ci'

ENGINE=InnoDB

ROW_FORMAT=DEFAULT

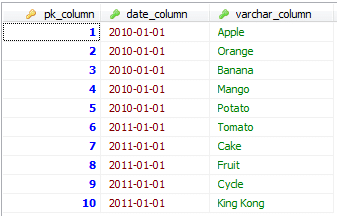

Add some data in the table, so that it looks like what’s shown in Figure 6 (if you’re using a set of INSERT statements, save them — they will come in handy below). Please note that we have created an index for varchar_column too, hoping that any search based on this column will be very efficient. Now, execute the following (Query 5):

EXPLAIN SELECT * FROM table_with_date_field WHERE varchar_column = 'Apple'

The result (Figure 7) is excellent, as we have a scan of type ‘ref’; it cannot be better.

Now imagine that you have a Web page where users enter some text for a search, which you use with a WHERE LIKE '%whatever%' kind of clause. For example, a user enters ‘pple’ , and you issue a query as follows:

SELECT * FROM table_with_date_field WHERE varchar_column like '%pple'

No problem, right? We have varchar_column indexed; this should be extremely fast. Let’s see:

EXPLAIN SELECT * FROM table_with_date_field WHERE varchar_column like '%pple'

Oh! A full-table scan? Why? Because MySQL is accessing every row, to check if %pple is true. But what about the index? Sorry — the index is of no use here. Some say this problem is specific to InnoDB, so let’s try changing this to MyISAM.

ALTER TABLE 'table_with_date_field' ENGINE=MyISAM

Issue the EXPLAIN statement again; you will find that the problem persists. The problem is with how indexing is done with textual data, and how that fits in the concept of B-Tree and hashing. We will have more details on this when we discuss the MyISAM and InnoDB storage engines.

However, it is very important for you to remember Rule No 6 (mentioned in the first article in the series). Indexes are not a solution to everything. If expression evaluation demands that MySQL has to access every row and compare the values (or perform any other arithmetical or logical operation), the purpose of indexes is defeated!

The myth regarding Full-Text search

You may find someone suggesting that you use Full-Text Search as a solution to the WHERE LIKE '%whatever%' table-scan problem. We will discuss what Full-Text Search is later, but it isn’t meant to be a solution in such scenarios. You can verify that by modifying the table to use MyISAM, and changing the key type of varchar_column to FULLTEXT (InnoDB doesn’t support Full-Text-Search indexes). Given below is what the new table definition script would look like:

CREATE TABLE 'table_with_date_field' (

'pk_column' INT(10) NOT NULL AUTO_INCREMENT,

'date_column' DATE NOT NULL,

'varchar_column' VARCHAR(50) NOT NULL,

PRIMARY KEY ('pk_column'),

INDEX 'date_column' ('date_column'),

FULLTEXT INDEX 'varchar_column' ('varchar_column')

)

COLLATE='utf8_general_ci'

ENGINE=MyISAM

ROW_FORMAT=DEFAULT

Re-insert the data in the table with the saved INSERT statements, so that it looks like Figure 6, and then issue the following two queries:

EXPLAIN SELECT * FROM table_with_date_field WHERE varchar_column = 'King Kong' EXPLAIN SELECT * FROM table_with_date_field WHERE varchar_column LIKE '%Kong'

You will find very similar results — full-table scans.

The story of UCASE and LCASE

UCASE and LCASE are classic examples of expression evaluation causing full-table scans. It is very common for applications to do case-insensitive searches. For example, WHERE UCASE (some_column) = 'SOME VALUE IN UPPER CASE'. This causes MySQL to ignore the indexes, and convert the value of some_column in every row to uppercase, and then compare the result with the supplied value. Issue the following query to verify this:

EXPLAIN SELECT * FROM table_with_date_field WHERE UCASE(varchar_column) = 'KING KONG';

A solution to this problem is to store either uppercase or lowercase values in the database, doing the necessary case conversion when inserting the record, and accordingly convert the case of the value to be compared in the SELECT query. For example:

EXPLAIN SELECT * FROM table_with_date_field WHERE varchar_column = UCASE('anything');

This causes MySQL to convert the supplied ‘for-comparison’ value into uppercase to match a storage rule that stores only uppercase values in varchar_column. And as we have an index for varchar_column the scan is of type ‘ref’, which is the best one can get.

Is I/O a problem? And how fast is your I/O device?

Yes, I/O is a problem — a potential bottleneck. But what causes I/O? And what is the solution to this problem?

Let me share my experience with you. My last project, which I stopped working on almost two years back, uses a massive database. It has more than 500 tables, over 2,000 stored procedures, and is based on MS SQL Server 2005. Everything worked fine during the development and testing phase. However, on the live server, we always found CPU usage hanging close to 80-90 per cent, with no memory left for anything other than MS SQL Server. The server appeared to be nothing more than a dead donkey. The performance monitor also showed massive I/O going on.

Someone suggested that the I/O throughput of the disks wasn’t enough, and hence caused problems. Someone else suggested that MS SQL Server (and MS Windows Server 2003) cannot handle so much data, and hence the chaos.

So we accessed our dear Google, and started looking for I/O issues with MS SQL Server. Endless articles with benchmarks and statistical figures forced us into thinking in the direction of getting higher-throughput disks. That’s one thread in the story.

Another thread was that we created a backup of the database, and created a replica local environment, and started testing. What we found was nothing but bad queries. We were shaken out of our belief in the common wisdom of having more RAM for cache for the database, plus ultra-high-throughput disks. To use a simple analogy: if you are driving your car with the hand-brake on, don’t blame the engine, tyre pressure and the quality of the last service you had done on the car.

Once we were done with all the tuning work, we found that a simple x86 desktop machine with (approximately) a 2.4 GHz dual-core CPU, 2 GB RAM, and a 7,200 RPM SATA HDD could handle 100+ concurrent transactions — and this in an application which has a huge database schema and a large number of rows in the tables.

Please note: during tests with a load of 100+ concurrent transactions, we found that the CPU usage never exceeded 30-40 per cent. For a typical Web-based application, out of the entire logged-on user-base, only 10-20 per cent (in the extreme case) cause concurrent transactions. A number of ‘100 concurrent database transactions’ means a minimum of 500 logged-on users — an extreme case for the application.

Understanding all this isn’t very difficult. At the lowest layer, any database stores all the tables and data in a single or in multiple files. Frequent reads and writes are bound to occur, and that is what makes people believe that I/O is a bottleneck.

Yes, I/O is a bottleneck, but only when I/O is expected beyond the capacity of the hardware. Bad queries can kill even the best of machines.

Logically, there are two portions of data: the data itself (often termed as ‘rows’), and the indexes. Whenever any query is issued, the database has to ultimately read data from the data section to generate the results. In between, though, there are multiple phases and operations. If, for some reason, the database has to read the entire data section to generate the results, there is total chaos.

Consider the following situation to visualise this: You are asked, many times a day, to open a 1,000-page book and read out the contents of all the pages where some specific text is written. Each time, you will take hours to find the relevant pages. Instead of this, you may be asked to read the contents of a particular page, or from Page x to Page y, or to read everything excluding Pages l, m, n, o and p. The last situation, in the case of databases, can be achieved only with the help of appropriate indexing, and smart queries.

Another common misconception is the need for a great amount of RAM for MySQL caching. It is true that caching can be improved tremendously, but not when you have bad queries!

I hope all my readers find that there are simple and logical techniques that, when used in the correct fashion, can lead to the great performance of their MySQL instances.

yupz linux for me

minta distro back trak terbaru dongs ^^