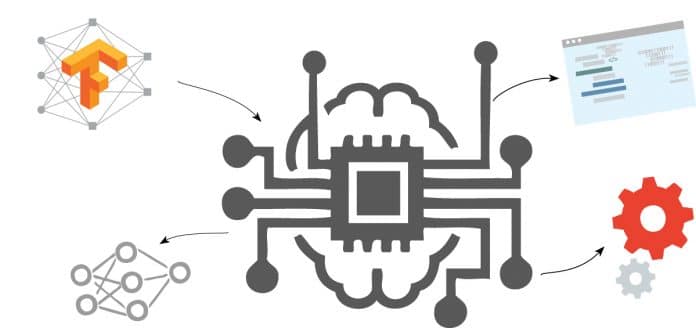

This article covers TensorFlow, the machine learning library developed by the Google Brain team and open sourced in November 2015. Read on to learn what makes it suitable for machine learning applications, how to use it, and why it is so well regarded at Google.

A few years ago, the Google Brain team developed a software library to perform machine learning across a range of tasks. The aim was to serve the team's own machine learning systems, which build and train neural networks. The software was meant to help such systems detect and decipher patterns and correlations, much as human beings learn and reason.

In November 2015, Google released this library under the Apache 2.0 licence, giving everyone the opportunity to use it in their own artificial intelligence (AI) projects. By June 2016, 1,500 repositories on GitHub mentioned the software, of which only five were from Google.

Working with TensorFlow

When you import TensorFlow into the Python environment, you get access to all its classes, methods and symbols. You can take TensorFlow operations and arrange them into a graph of nodes called the computational graph. Typically, each node takes tensors as inputs and produces a tensor as output. Building the graph does not compute anything; the values of the nodes are evaluated only when a session is run. Operations are themselves nodes, so you can combine nodes with operations to build more complicated computations.
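The build-first, run-later idea can be illustrated without TensorFlow at all. The following is a minimal plain-Python sketch, not TensorFlow's API: the names `Node`, `constant`, `add`, `multiply` and `run` are hypothetical and exist only for this illustration. In TensorFlow 1.x, the corresponding roles would be played by calls such as `tf.constant`, `tf.add` and the `run` method of `tf.Session`.

```python
class Node:
    """One node of the graph: an operation name plus the nodes feeding it."""

    def __init__(self, op, inputs=(), value=None):
        self.op = op
        self.inputs = tuple(inputs)
        self.value = value  # only set for constant nodes


def constant(value):
    return Node("const", value=value)


def add(a, b):
    return Node("add", (a, b))


def multiply(a, b):
    return Node("mul", (a, b))


def run(node):
    """Evaluate a node by evaluating its inputs first (the 'session' step)."""
    if node.op == "const":
        return node.value
    left, right = (run(n) for n in node.inputs)
    if node.op == "add":
        return left + right
    if node.op == "mul":
        return left * right
    raise ValueError("unknown operation: " + node.op)


# Building the graph only records structure; nothing is computed here.
a = constant(3.0)
b = constant(4.0)
total = add(a, b)
scaled = multiply(total, constant(2.0))

# Values appear only when the graph is run.
result = run(scaled)
print(result)  # 14.0
```

Note that `total` holds no value after it is created; it is just a description of the addition. The number only exists once `run` walks the graph, which mirrors the way node values are produced when a session is run.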

Customise and improvise

To adapt TensorFlow to your machine learning application, you need to construct the model so that it can take arbitrary inputs and deliver outputs accordingly. The way to do this with TensorFlow is to add variables, which represent the trainable parameters of the model. Each variable has a type and an initial value, and adjusting these values is what tunes your system to the required behaviour.

How do you know if your system is functioning exactly the way you intended it to? Simple… just introduce a loss function, which measures how far the model's outputs are from the desired outputs. TensorFlow provides optimisers that change each variable in small steps so as to minimise the loss function. There are also higher-level abstractions for common patterns, structures and functionality.
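To make the roles of variables, loss function and optimiser concrete, here is a minimal plain-Python sketch of gradient descent on a linear model. It is an illustration under stated assumptions, not TensorFlow code: the data, the starting values, the learning rate and the helper names `loss` and `gradients` are all chosen for this example. In TensorFlow 1.x, `w` and `b` would be `tf.Variable` objects and the update loop would be handled by an optimiser such as `tf.train.GradientDescentOptimizer`.

```python
# Hypothetical training data that follows y = -1 * x + 1 exactly.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [0.0, -1.0, -2.0, -3.0]


def loss(w, b):
    """Sum of squared differences between the model output and the target."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys))


def gradients(w, b):
    """Partial derivatives of the loss with respect to w and b."""
    dw = sum(2.0 * (w * x + b - y) * x for x, y in zip(xs, ys))
    db = sum(2.0 * (w * x + b - y) for x, y in zip(xs, ys))
    return dw, db


# Trainable parameters ("variables"), each with an initial value.
w, b = 0.3, -0.3
initial_loss = loss(w, b)

learning_rate = 0.01  # assumed step size, small enough to converge here
for _ in range(1000):
    dw, db = gradients(w, b)
    # Move each variable a small step in the direction that lowers the loss.
    w -= learning_rate * dw
    b -= learning_rate * db

final_loss = loss(w, b)
print(w, b, final_loss)
```

After training, `w` should be close to -1 and `b` close to 1, the values that generated the data, and the final loss should be far below the initial one.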

Multiple APIs for easier control

As a new user of any software, it is important to enjoy the experience. TensorFlow is built with that in mind, with the highest-level application programming interface (API) tuned for easy learning and usage. With experience, you will learn how to handle the tool, and know which modifications change the behaviour of the whole model and in what way.

It is then natural to want finer control over these aspects of the model. TensorFlow's core API, which is the lowest level, gives you this fine control. The higher-level APIs are built on top of this core. The higher the level of the API, the easier it is to perform repetitive tasks and to keep the flow consistent between multiple users.

MNIST is the ‘Hello World’ of machine learning

The Modified National Institute of Standards and Technology (MNIST) database is a computer vision data set that is widely used to train machine learning systems. It is basically a set of images of handwritten digits, each with a label, and the system has to learn to identify the digit in each image. The accuracy of your model will depend on how much and how well you train it. In general, the broader the training data set, the better the accuracy of your model.

One example is the Softmax Regression model, which uses probability to decipher a given image. As every image in MNIST is a handwritten digit between zero and nine, the image you are analysing can be only one of the ten digits. Based on this understanding, Softmax Regression assigns to every image under test a probability for each of the ten digits, and the digit with the highest probability is taken as the prediction.
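The softmax step itself is small enough to sketch in plain Python. In the example below, the ten scores are made-up numbers standing in for the evidence a trained model might produce for one image; they are assumptions for illustration, not real MNIST results.

```python
import math


def softmax(scores):
    """Turn a list of scores into probabilities that sum to 1."""
    highest = max(scores)
    # Subtracting the maximum avoids overflow and does not change the result.
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical evidence for digits 0 to 9 for a single image.
scores = [0.1, 0.2, 0.1, 0.3, 4.0, 0.2, 0.1, 1.5, 0.4, 0.9]

probs = softmax(scores)

# The predicted digit is the one with the highest probability.
predicted_digit = max(range(10), key=lambda d: probs[d])
print(predicted_digit)  # 4
```

Every digit receives some probability, but the digit with the largest score dominates, which is why the model can both make a prediction and express how confident it is.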

Smart handling of resources

Training can involve a good deal of heavy lifting, as with any compute-heavy work, so TensorFlow carries out these computations outside the Python environment. As the developers describe it, instead of running a single expensive operation independently from Python, TensorFlow lets you describe a graph of interacting operations that run entirely outside Python.

A few noteworthy features

Using TensorFlow to train your system comes with a few added benefits.

Visualising learning: No matter what you hear or read, a concept tends to stay in your mind only once you have seen it. The easiest way to understand the computational graph is, of course, to look at it as a picture. A utility called TensorBoard can display exactly that. The representation is very similar to a flow chart or a block diagram.

Graph visualisation: Computational graphs are complicated and not easy to view or comprehend. The graph visualisation feature of TensorBoard helps you understand and debug the graphs easily. You can zoom in or out, click on blocks to check their internals, check how data is flowing from one block to another, and so on. Name your scopes as clearly as possible, since TensorBoard uses these names to group nodes into blocks.