Fuel is an open source, community-driven deployment and management tool for OpenStack. It provides a GUI for deploying and managing OpenStack, and is built from several components such as Nailgun, Astute, Keystone and Postgres.

Fuel can deploy OpenStack with high availability across multiple racks of servers, or as a proof-of-concept environment on one or two servers.

Fuel has many key features, some of which are listed below:

- Management of multiple OpenStack environments

- Auto discovery of new nodes and their hardware

- IP address management

- HA and non-HA deployment

- Centralised logging

- Support for popular Software Defined Networks (SDN), storage and other features through external plugins

- Support for multiple distros

- Industry standard high-availability configuration

- Support for multiple hypervisors

The components of Fuel

Nailgun: This is a RESTful application that can create environments, change settings and configure networks; it uses Postgres to store data and uses AMQP to interact with workers.

Astute: This process executes the instructions given by Nailgun. It reads the messages that Nailgun publishes over AMQP and applies them on the managed nodes, interacting with Cobbler, Puppet and other components to carry out the deployment.

Keystone: This is for authenticating and authorising users.

Postgres: This is the database used for storing all the environment and configuration details.

Cobbler: This is a popular open source OS deployment tool, which manages TFTP and DHCP services. Astute interacts with Cobbler to create a profile for each managed node.

Puppet: Most of the deployment is done through Puppet manifests, which are synced to all managed nodes and executed by the MCollective agent running on each node.

rsync: This runs as a daemon, which helps sync all deployment scripts with managed nodes. Scripts are pulled before the deployment by managed nodes.
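The hand-off between Nailgun and Astute can be pictured with a toy model. The sketch below is not Fuel code: it uses an in-memory `queue.Queue` as a stand-in for the AMQP broker, and the function names and message fields (`nailgun_submit`, `astute_worker`, `task`, `nodes`) are hypothetical, chosen only to illustrate the flow.

```python
import queue

# Stand-in for the AMQP broker between Nailgun and Astute (assumption:
# a real deployment uses a message broker, not an in-process queue).
broker = queue.Queue()


def nailgun_submit(task, nodes):
    """Hypothetical helper: publish a task for the workers to pick up."""
    broker.put({"task": task, "nodes": list(nodes)})


def astute_worker():
    """Hypothetical worker: drain the queue and 'apply' each task."""
    results = []
    while not broker.empty():
        message = broker.get()
        for node in message["nodes"]:
            # A real worker would drive Cobbler, Puppet, etc. at this point.
            results.append((node, message["task"], "done"))
        broker.task_done()
    return results


nailgun_submit("provision", ["node-1", "node-2"])
nailgun_submit("deploy", ["node-1"])
outcome = astute_worker()
print(outcome)
```

The point of the queue is decoupling: the API side only records what should happen, while the worker decides when and how to apply it on each node.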

Configuring and installing Fuel

In this set-up, I will use three nodes for running OpenStack services and two nodes for compute.

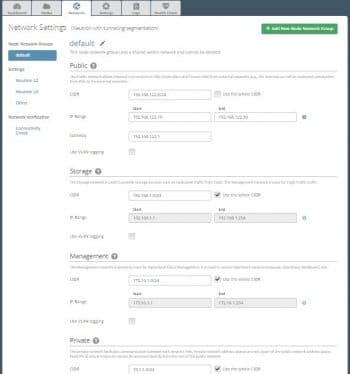

Prerequisites: Fuel uses five different networks for five different purposes, so the data centre network has to be configured accordingly.

The Fuel master node (the one on which Fuel will be installed) must have a PXE network and public network.

Networks: The networks in Fuel are listed below.

Fuelweb_admin/PXE: This is used for PXE booting of all nodes in the environment, OS provisioning and hardware discovery.

Public network: This is used for the nodes’ external communication.

Management network: This is for API and inter-service communication.

Storage network: This is for all storage communication like Swift, Cinder, etc.

Private network: This is the overlay network. All tenant virtual network communication happens over it. This network will be configured based on the network model you choose. For VLAN based segmentation, this network will not be created. This is required only for a tunnel network.
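Before entering the subnets into Fuel, it can help to check the plan on paper. The following is a minimal sketch using Python's standard `ipaddress` module. The subnet values are hypothetical placeholders (only the admin/PXE range is suggested by the node address 10.20.0.13 that appears later in this article), and the helper names are made up for illustration.

```python
import ipaddress
from itertools import combinations

# Hypothetical subnets for illustration only.
networks = {
    "fuelweb_admin": "10.20.0.0/24",
    "public": "172.16.0.0/24",
    "management": "192.168.0.0/24",
    "storage": "192.168.1.0/24",
    "private": "192.168.2.0/24",
}


def required_networks(segmentation):
    """Return the network names needed for 'vlan' or 'tun' segmentation."""
    names = ["fuelweb_admin", "public", "management", "storage"]
    if segmentation == "tun":
        names.append("private")  # overlay network, tunnelling only
    return names


def find_overlaps(plan):
    """Return pairs of network names whose subnets overlap."""
    parsed = {name: ipaddress.ip_network(cidr) for name, cidr in plan.items()}
    return [
        (a, b)
        for a, b in combinations(sorted(parsed), 2)
        if parsed[a].overlaps(parsed[b])
    ]


print(required_networks("tun"))
print(find_overlaps(networks))
```

An empty list from `find_overlaps` means no two networks share address space; any pair it returns should be fixed before deployment.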

Storage requirements: Make sure the Fuel master has more than 60GB of disk space to install the OS and its services. In a production environment with hundreds of servers, it is recommended to have at least 512GB of storage as Fuel acts as a syslog server for all the nodes in the environment, which needs a lot of disk space. But with proper archiving and log rotation, this can be taken care of.

Installing Fuel

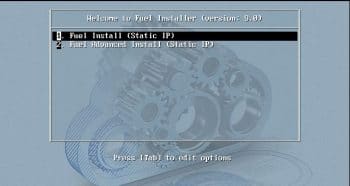

Download the latest Fuel ISO. As of writing this article, the latest available release is Fuel 9, which is based on OpenStack Mitaka. This can be downloaded from https://www.mirantis.com/software/mirantis-openstack/releases/

Configuring Fuel

Bring up the Fuel master with at least 60GB of disk space, two NICs, four CPUs and 8GB of RAM. Connect the first interface to the PXE network and the second interface to the public network (this interface will initially be used by all managed nodes to download packages from the Internet). Attach the ISO to the server and follow the onscreen prompts to complete the installation, which will take less than 10 minutes. Fuel runs its services on CentOS 7 and has an automated installation procedure using Kickstart.
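The minimums above are easy to encode as a pre-flight check. This is only a sketch: the `HostSpec` class and `shortfalls` function are hypothetical names, and the thresholds are simply the figures quoted in this article.

```python
from dataclasses import dataclass

# Minimums quoted in the text for the Fuel master node.
MIN_DISK_GB = 60
MIN_NICS = 2
MIN_CPUS = 4
MIN_RAM_GB = 8


@dataclass
class HostSpec:
    disk_gb: int
    nics: int
    cpus: int
    ram_gb: int


def shortfalls(spec):
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    if spec.disk_gb < MIN_DISK_GB:
        problems.append(f"disk: {spec.disk_gb}GB < {MIN_DISK_GB}GB")
    if spec.nics < MIN_NICS:
        problems.append(f"NICs: {spec.nics} < {MIN_NICS}")
    if spec.cpus < MIN_CPUS:
        problems.append(f"CPUs: {spec.cpus} < {MIN_CPUS}")
    if spec.ram_gb < MIN_RAM_GB:
        problems.append(f"RAM: {spec.ram_gb}GB < {MIN_RAM_GB}GB")
    return problems


print(shortfalls(HostSpec(disk_gb=40, nics=1, cpus=4, ram_gb=8)))
```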

Once the installation is over, go to the console and configure eth1 with the proper IP/subnet and gateway with Internet access.

Configure the security set-up and add the subnet that is allowed to SSH to Fuel. If you set it to 0.0.0.0/0, all networks will have SSH access to Fuel, which is not recommended.
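To see why 0.0.0.0/0 is a poor choice, it helps to look at how many addresses a given entry admits. The sketch below uses the standard `ipaddress` module; the function name and the `max_hosts` threshold are arbitrary assumptions for illustration, not Fuel settings.

```python
import ipaddress


def check_ssh_subnet(cidr, max_hosts=65536):
    """Classify an SSH allow-list entry as 'ok', 'broad' or 'open'."""
    net = ipaddress.ip_network(cidr, strict=False)
    if net.prefixlen == 0:
        return "open"  # matches every address, e.g. 0.0.0.0/0
    if net.num_addresses > max_hosts:
        return "broad"  # larger than the (assumed) acceptable size
    return "ok"


for entry in ("0.0.0.0/0", "10.0.0.0/8", "10.20.0.0/24"):
    print(entry, check_ssh_subnet(entry))
```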

Once all the changes are done based on the environment, select Quit setup, and then Save and Quit.

It may take another 10 minutes to set up all the Fuel components and the bootstrap image. Once Fuel is completely configured based on the input provided, it will show a login prompt like what’s shown in Figure 4.

The bootstrap image is an Ubuntu 14.04 image with the Nailgun and MCollective agents installed, which lets Fuel detect new nodes and their hardware details.

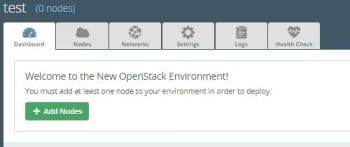

Creating an environment in Fuel

Access https://fuelip:8443 and log in as admin/admin (the default username and password, unless changed during deployment).

Click on ‘New OpenStack Environment’ and then choose the KVM/QEMU hypervisor (based on the type of hardware).

Select Neutron with the tunnelling segmentation and complete the environment creation by clicking on Create.

Configuring the environment

Defining the networks: Define all five subnets in Fuel with the correct VLAN ID or without VLAN, based on the data centre’s switch configuration.

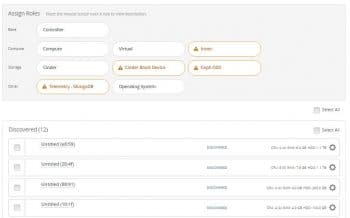

Adding nodes: Once the environment is created, start all the other nodes with the PXE network as the boot device. Fuel will boot all the nodes with the bootstrap image, and the discovered nodes will then be shown on the Web UI. Once they are discovered, click on Add nodes.

Figure 5 shows all the discovered nodes along with the different available roles.

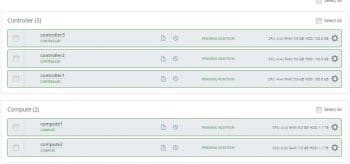

Assigning roles: Assign nodes with relevant roles. In this set-up, I have assigned three nodes with controller roles and two nodes with compute roles. At least three nodes are required for controller high availability (Figure 7).
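The role layout used in this set-up can be sanity-checked before deployment. The following is a minimal sketch; the node names and the `check_roles` helper are hypothetical, and the only rule taken from the text is the three-controller minimum for high availability.

```python
from collections import Counter

MIN_HA_CONTROLLERS = 3  # from the text: at least three for controller HA


def check_roles(assignment):
    """Return warnings for a {node name: role} mapping."""
    counts = Counter(assignment.values())
    warnings = []
    if counts["controller"] < MIN_HA_CONTROLLERS:
        warnings.append(
            f"only {counts['controller']} controller(s); "
            f"HA needs at least {MIN_HA_CONTROLLERS}"
        )
    if counts["compute"] == 0:
        warnings.append("no compute nodes assigned")
    return warnings


# Hypothetical node names mirroring the five-node set-up in this article.
layout = {
    "node-1": "controller",
    "node-2": "controller",
    "node-3": "controller",
    "node-4": "compute",
    "node-5": "compute",
}
print(check_roles(layout))
```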

Configuring a network on discovered nodes: Figure 9 shows the configuration interface for the nodes.

Deploying changes: Click on Deploy changes, which will provision the operating system using image-based provisioning, and then configure OpenStack on it with high availability for services.

Once the deployment is done, Fuel will show the IP address of the Horizon dashboard, and you can log in with the admin/admin credentials (unless changed before deployment).

Accessing nodes after deployment: Once the deployment is over, you can access all the nodes from the Fuel master. The node.ssh public keys are pushed to all the nodes after OS provisioning.

fuel node

This will show all the nodes, along with their node IDs. To list a particular node, use the following command.

fuel node --node-id 23

    id | status | name        | cluster | ip         | mac               | roles      | pending_roles | online | group_id
    ---+--------+-------------+---------+------------+-------------------+------------+---------------+--------+---------
    23 | ready  | controller2 | 1       | 10.20.0.13 | 52:54:00:da:ba:79 | controller |               | 1      | 1

The IP shown is the address assigned on the admin/PXE interface, and you can SSH to that node without a password.

ssh fuel@10.20.0.13
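If you want to script against the node listing, the pipe-delimited table can be turned into structured data. The sketch below parses a pasted copy of the output shown above; `parse_fuel_table` is a hypothetical helper, not part of the Fuel CLI, and it assumes the header, separator and row layout seen in this article.

```python
def parse_fuel_table(text):
    """Parse pipe-delimited CLI table output into a list of dicts."""
    lines = [line for line in text.splitlines() if line.strip()]
    header = [cell.strip() for cell in lines[0].split("|")]
    rows = []
    for line in lines[1:]:
        if set(line.strip()) <= set("-+"):
            continue  # skip the ---+--- separator line
        cells = [cell.strip() for cell in line.split("|")]
        rows.append(dict(zip(header, cells)))
    return rows


sample = """\
id | status | name        | cluster | ip         | mac               | roles      | pending_roles | online | group_id
---+--------+-------------+---------+------------+-------------------+------------+---------------+--------+---------
23 | ready  | controller2 | 1       | 10.20.0.13 | 52:54:00:da:ba:79 | controller |               | 1      | 1
"""

nodes = parse_fuel_table(sample)
# Build SSH targets for nodes that are ready, using the admin/PXE address.
ssh_targets = ["fuel@" + node["ip"] for node in nodes if node["status"] == "ready"]
print(ssh_targets)
```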

Once you are logged into any of the nodes, you will be able to see all five networks.

    fuel@node-23:~$ brctl show
    bridge name     bridge id               STP enabled     interfaces
    br-ex           8000.1655a9613cc9       no              eth3
                                                            p_ff798dba-0
                                                            v_public
                                                            v_vrouter_pub
    br-fw-admin     8000.525400daba79       no              eth0
    br-mesh         8000.52540027a872       no              eth4
    br-mgmt         8000.5254009b320e       no              eth1
                                                            mgmt-conntrd
                                                            v_vrouter
    br-storage      8000.525400ec6d44       no              eth2
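The bridge listing can also be read programmatically to confirm that each of the five networks has a bridge. This is a minimal sketch that parses a pasted copy of the output; `parse_brctl` is a hypothetical helper, and the bridge-to-network mapping is an assumption inferred from the bridge names rather than something stated by Fuel in this article.

```python
def parse_brctl(text):
    """Map each bridge name to its list of attached interfaces."""
    bridges = {}
    current = None
    for line in text.splitlines()[1:]:  # skip the header row
        fields = line.split()
        if not fields:
            continue
        if len(fields) >= 3:
            # New bridge row: name, id, STP flag, optional first interface.
            current = fields[0]
            bridges[current] = fields[3:]
        elif current is not None:
            # Continuation row: more interfaces for the current bridge.
            bridges[current].extend(fields)
    return bridges


# Assumed mapping, inferred from the bridge names.
BRIDGE_TO_NETWORK = {
    "br-fw-admin": "Fuelweb_admin/PXE",
    "br-ex": "Public",
    "br-mgmt": "Management",
    "br-storage": "Storage",
    "br-mesh": "Private",
}

sample = """\
bridge name     bridge id               STP enabled     interfaces
br-ex           8000.1655a9613cc9       no              eth3
                                                        p_ff798dba-0
                                                        v_public
                                                        v_vrouter_pub
br-fw-admin     8000.525400daba79       no              eth0
br-mesh         8000.52540027a872       no              eth4
br-mgmt         8000.5254009b320e       no              eth1
                                                        mgmt-conntrd
                                                        v_vrouter
br-storage      8000.525400ec6d44       no              eth2
"""

bridges = parse_brctl(sample)
for name, interfaces in bridges.items():
    print(BRIDGE_TO_NETWORK.get(name, "unknown"), name, interfaces[0])
```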

Advanced configuration of Fuel

In a large environment with hundreds of servers spread across multiple racks, a single broadcast domain can cause performance and maintenance issues. To address this, Fuel has the concept of network node groups (nodegroups), where each rack is organised into one nodegroup with its own network subnets and gateways.

The following is an environment with seven racks of servers:

To list the nodegroups from the CLI:

fuel nodegroup

    id | cluster_id | name
    ---+------------+--------
    1  | 1          | default
    2  | 1          | rack10
    3  | 1          | rack11
    4  | 1          | rack12
    5  | 1          | rack13
    6  | 1          | rack14
    7  | 1          | rack15
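Since each nodegroup needs its own subnet and gateway, the addressing plan can be drafted up front. The sketch below carves one subnet per nodegroup out of a larger range with the standard `ipaddress` module. The supernet, the /24 size and the convention of using the first host address as the gateway are all assumptions for illustration; only the nodegroup names come from the listing above.

```python
import ipaddress

# Nodegroup names from the listing above; the supernet is hypothetical.
nodegroups = ["default", "rack10", "rack11", "rack12", "rack13", "rack14", "rack15"]
supernet = ipaddress.ip_network("10.20.0.0/16")


def plan_rack_subnets(groups, network, new_prefix=24):
    """Give each nodegroup its own subnet and a first-host gateway."""
    plan = {}
    for name, subnet in zip(groups, network.subnets(new_prefix=new_prefix)):
        plan[name] = {
            "cidr": str(subnet),
            "gateway": str(subnet.network_address + 1),  # assumed convention
        }
    return plan


plan = plan_rack_subnets(nodegroups, supernet)
for name, details in plan.items():
    print(name, details["cidr"], details["gateway"])
```

Keeping one subnet per rack is what limits each broadcast domain to a single rack.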

Distributing controller roles

In this set-up, I have used three servers to run all the controller services, but it is possible to assign separate Keystone, RabbitMQ, MySQL, MongoDB and HAProxy service roles to different servers by installing additional Fuel plugins. In a large environment, it is recommended to have separate controllers for each service, for better performance.