In this article, let's explore some of the technology that works behind the cloud. Setting up enterprise-level cloud computing infrastructure takes more than server-grade hardware and cloud software. To ensure continuous availability and integrity of user data and application resources, we also need a storage solution that keeps services running through individual server failures, network failures or even natural disasters. DRBD (Distributed Replicated Block Device) is an open source solution that provides a highly available, efficient, distributed storage system for cluster environments. It is developed and maintained by LINBIT, Austria, and is available under the GPLv2.

Architecture

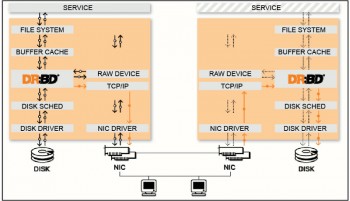

Let's understand the DRBD architecture with the help of an illustration (Figure 1) taken directly from the DRBD documentation. The two boxes represent two DRBD nodes forming a high availability (HA) cluster. On a typical Linux system, the storage stack consists roughly of a file system, a buffer cache, a disk scheduler and a disk driver that handles the storage devices. The DRBD module sits in the middle of this stack, between the buffer cache and the disk scheduler.

The black arrow represents the typical flow of data in response to normal application disk usage. The orange arrow represents data flow initiated by DRBD itself for replication, from one node to the other over the network. For the purposes of our discussion, let's assume the left node is the primary and the right node is the secondary DRBD node. Whenever a disk block is updated on the primary, DRBD sends a copy of that block, usually over a dedicated NIC, to the DRBD module on the peer node, which writes it to its local disk. This way, DRBD keeps the data on both nodes in sync. The primary node holds the service IP, i.e., the IP address through which external applications reach the services provided. If the primary node fails, the HA cluster software promotes the secondary node to primary and moves the service IP to it.

Terminology

Before we proceed further, let's understand a few DRBD terms.

Resource: A resource is the collective term for all the elements that make up a particular replicated data set. It consists of a resource name, volumes, DRBD devices and a connection.

Resource name: Any arbitrary ASCII string excluding white space.

Volumes: Every resource is a replication group of one or more volumes that share a single replication stream. Each volume contains the replicated data set plus some metadata used internally by DRBD.

DRBD device: A virtual block device, /dev/drbdX, corresponding to one volume in the resource, where X is the device's minor number.

Connection: The communication link between the two nodes. Each resource has exactly one connection.
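To tie these terms to the configuration file used later in this article, here is a minimal sketch of a hypothetical shell helper, drbd_devices, that prints each resource name alongside the DRBD device declared for it, one line per volume. It assumes the one-statement-per-line layout of this article's r0.res and ignores comments and include directives. For an authoritative view of how DRBD itself parsed the files, drbdadm dump is the tool to use.

```shell
# drbd_devices FILE...
# Hypothetical helper: prints "<resource> <device>" for every "device"
# statement, i.e. one line per volume. Assumes one statement per line, as in
# this article's r0.res; comments, includes and per-host overrides are not handled.
drbd_devices() {
  awk '
    # "resource r0 {" starts a resource; remember its name
    $1 == "resource" { res = $2; sub(/[{].*/, "", res) }
    # "device /dev/drbd1;" declares a volume of the current resource
    $1 == "device" { dev = $2; sub(/;.*/, "", dev); print res, dev }
  ' "$@"
}
```

Run against the r0.res file from Step 6, drbd_devices /etc/drbd.d/r0.res prints r0 /dev/drbd1. Because a resource name contains no white space, the second field on the resource line is always the complete name.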

Feature list

DRBD has matured into a system with a rich set of features. Let's look at some of the most important ones.

- Supports shared-secret authentication

- LVM (Logical Volume Manager) compatible

- Supports heartbeat/pacemaker resource agent integration

- Supports load balancing of read requests

- Automatic detection of the most up-to-date data after complete failure

- Delta resynchronisation

- DRBD can be added to an existing deployment without losing data

- Automatic bandwidth management

- Customisable tuning parameters

- Online data verification with peer
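As an illustration of the first feature, shared-secret authentication is enabled in a resource's net section with the cram-hmac-alg and shared-secret options (DRBD 8.4 syntax). The following minimal sketch generates a random secret and prints a net section ready to merge into r0.res. It assumes a POSIX shell with od and /dev/urandom available. The function name gen_drbd_secret is hypothetical, and the choice of sha1 is only an example.

```shell
# gen_drbd_secret: hypothetical helper that prints a random 128-bit secret
# as 32 lowercase hexadecimal characters, read from /dev/urandom.
gen_drbd_secret() {
  od -An -tx1 -N16 /dev/urandom | tr -d ' \n'
  echo
}

# Print a net section to merge into the resource file on BOTH nodes;
# the two peers must be configured with the same secret.
secret=$(gen_drbd_secret)
printf 'net {\n    cram-hmac-alg sha1;\n    shared-secret "%s";\n}\n' "$secret"
```

Generate the secret once and copy the same output to both nodes. If each node runs the script separately, the secrets will differ and the peers will refuse to connect.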

Components

DRBD functionalities are implemented in the following components:

drbd: This is the kernel module, the core of DRBD, which actually does the work for us.

drbdadm: This is a userland administrative tool. It reads the configuration file and invokes the tools below (drbdsetup and drbdmeta) with the required parameters to get the job done.

drbdsetup: This userland administrative tool configures the drbd kernel module. Direct invocation by the user is usually not required, since drbdadm invokes it whenever needed.

drbdmeta: This userland administrative tool manipulates the DRBD metadata structures. Direct invocation is usually not required either.

Redundancy modes

DRBD can be set up in the following redundancy modes:

Single primary mode: In this mode, a DRBD resource takes the primary role on only one cluster member at a time. Since only the primary manipulates data, conventional (non-shared) file systems such as ext3 and ext4 can be used. This approach is used to set up a fail-over (HA) capable cluster.

Dual primary mode: In this mode, a DRBD resource takes the primary role on both cluster members at the same time. Since both nodes manipulate data, a shared cluster file system with a distributed locking mechanism, such as OCFS2 or GFS2, is required. This approach is used for load-balancing a cluster.
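The rule above can be captured as a small pre-mount check. Here is a minimal sketch of a hypothetical function, fs_fits_mode, that accepts only the combinations named in this section: ext3/ext4 with a single primary, and OCFS2/GFS2 (kernel type names ocfs2 and gfs2) with two primaries. Anything else is rejected conservatively. Dual primary mode must also be enabled explicitly in the resource's net section with allow-two-primaries, and it requires Protocol C.

```shell
# fs_fits_mode MODE FSTYPE
# Hypothetical check: succeeds (exit 0) if FSTYPE matches the given DRBD
# redundancy MODE ("single" or "dual"), fails otherwise. Only the
# combinations named in the article are accepted.
fs_fits_mode() {
  case $1:$2 in
    single:ext3|single:ext4) return 0 ;;  # one writer: a local file system is enough
    dual:ocfs2|dual:gfs2)    return 0 ;;  # two writers: distributed locking required
    *)                       return 1 ;;  # e.g. ext4 on two primaries would corrupt data
  esac
}
```

For example, fs_fits_mode dual ext4 || echo "ext4 cannot be shared by two primaries" prints the warning, because ext4 has no cluster-wide locking.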

Replication modes

Different replication modes of DRBD are as follows:

Asynchronous (Protocol A): In this mode, a write operation on the primary is considered complete as soon as it has been written to the local disk and the replication packet has been placed in the TCP send buffer. If the primary fails before that packet reaches the secondary, the write never arrives there. A forced fail-over can therefore lose the most recent updates: the data on the secondary remains consistent, just slightly out of date. This mode is most often used in long-distance replication set-ups, where communication delay is a considerable factor.

Semi-synchronous (Protocol B): In this mode, a write operation on the primary is considered complete as soon as it has been written to the local disk and the replication packet has reached the peer node, even if it has not yet been written to the peer's disk. A forced fail-over therefore normally loses no data, because every completed write has at least reached the peer's memory. However, suppose both nodes fail at the same time (for example, in a simultaneous power outage) after a write has reached the peer but before it is on the peer's disk, and the primary's data is also destroyed. In that case, the write is lost.

Synchronous (Protocol C): A write operation on the primary is considered complete only after both the primary's and the secondary's disks have confirmed the write. Data is therefore protected in both of the failure scenarios above. This is the most commonly used replication mode.
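The three protocols differ only in the point at which the primary reports a write as complete. The following minimal sketch models that rule; it is an illustration, not DRBD code. The hypothetical function write_complete takes a protocol letter and the furthest stage the replicated block has reached, and succeeds once the application would be told the write is done. The stage labels are invented names for the steps described above, and the local disk write is assumed to have finished already.

```shell
# write_complete PROTOCOL STAGE
# Models when the primary acknowledges a write to the application,
# assuming the local disk write has already finished.
# STAGE is the furthest point the replicated block has reached (hypothetical labels):
#   queued   - not yet in the TCP send buffer
#   sent     - placed in the local TCP send buffer
#   received - arrived at the peer node (in memory, not yet on disk)
#   written  - written to the peer's disk
write_complete() {
  case $2 in
    queued)   rank=0 ;;
    sent)     rank=1 ;;
    received) rank=2 ;;
    written)  rank=3 ;;
    *) echo "unknown stage: $2" >&2; return 2 ;;
  esac
  case $1 in
    A) need=1 ;;   # asynchronous: the send buffer is enough
    B) need=2 ;;   # semi-synchronous: the peer must have received it
    C) need=3 ;;   # synchronous: the peer's disk must confirm it
    *) echo "unknown protocol: $1" >&2; return 2 ;;
  esac
  [ "$rank" -ge "$need" ]
}
```

For a block that has reached the peer's memory but not its disk, the loop for p in A B C; do write_complete "$p" received && echo "$p: complete" || echo "$p: waiting"; done prints "A: complete", "B: complete" and "C: waiting". The window between "complete" and "written" is exactly where the data loss described for Protocols A and B can occur.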

Installation and configuration

Now let's start our DRBD installation. For this set-up, I have taken two nodes running Ubuntu 14.04 LTS Server. The host names of the nodes are drbd-server1 and drbd-server2. We also assume that both nodes have two NICs. The first, eth0, carries the service IP, which is accessed by external clients and managed by the HA cluster agent. The second, eth1, is used for DRBD replication; its IP addresses are 10.0.6.100 on drbd-server1 and 10.0.6.101 on drbd-server2. Configuring the HA agent is beyond the scope of this article.

Step 1: Edit /etc/network/interfaces on drbd-server1 and add the following:

```
auto eth1
iface eth1 inet static
    address 10.0.6.100
    netmask 255.255.255.0
    network 10.0.6.0
    broadcast 10.0.6.255
```

On drbd-server2, the configuration is similar except for its own IP 10.0.6.101.

Step 2: Edit /etc/hosts on both nodes and add the following:

```
10.0.6.100    drbd-server1
10.0.6.101    drbd-server2
```

Reboot both the nodes.
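Once both nodes are back up, it is worth confirming that each host name maps to its replication address. getent hosts drbd-server2 does this through the system resolver. For a check that reads only a given hosts file, here is a minimal sketch of a hypothetical helper, hosts_ip. It assumes the standard hosts file layout: an address first, then one or more names, with # starting a comment.

```shell
# hosts_ip NAME [FILE]
# Hypothetical helper: prints the first address mapped to NAME in a hosts
# file (default /etc/hosts) and fails if NAME is not listed.
hosts_ip() {
  awk -v name="$1" '
    { sub(/#.*/, "") }                      # drop comments
    NF >= 2 {
      for (i = 2; i <= NF; i++)
        if ($i == name) { print $1; found = 1; exit }
    }
    END { exit !found }                     # non-zero status if NAME was not found
  ' "${2:-/etc/hosts}"
}
```

On each node, for h in drbd-server1 drbd-server2; do hosts_ip "$h" || echo "$h is missing"; done should print 10.0.6.100 and 10.0.6.101.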

Step 3: Install the DRBD package on both the nodes, as follows:

```shell
sudo apt-get install drbd8-utils
```

Step 4: Check /etc/drbd.conf for the following two entries, on both the nodes:

```
include /etc/drbd.d/global_common.conf;
include /etc/drbd.d/*.res;
```

Step 5: Edit /etc/drbd.d/global_common.conf on both the nodes as follows:

```
global {
    usage-count yes;
}
common {
    net {
        protocol C;
    }
}
```

The usage-count yes option lets DRBD take part in LINBIT's online usage counter, which tracks the DRBD versions in use (set it to no to opt out). Protocol C sets up synchronous replication, as described earlier in the Replication modes section.

Step 6: Edit /etc/drbd.d/r0.res on both the nodes:

```
resource r0 {
    device    /dev/drbd1;
    disk      /dev/sdb1;
    meta-disk /dev/sda2;
    on drbd-server1 {
        address 10.0.6.100:7789;
    }
    on drbd-server2 {
        address 10.0.6.101:7789;
    }
}
```

Here, r0 is our resource name and /dev/drbd1 is the virtual block device to be created by DRBD. The partition /dev/sdb1 holds our replicated data set, and the partition /dev/sda2 holds metadata for DRBD's internal use. The names in the on sections must match the nodes' host names (as reported by uname -n). DRBD uses port 7789 for the replication connection, so make sure that no other application on your system is using it.
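One way to confirm that port 7789 is free is to inspect the listening TCP sockets reported by ss -ltn before running drbdadm up. Here is a minimal sketch of a hypothetical filter, port_in_use, that reads ss -ltn output on standard input. It assumes the "Local Address:Port" value is the fourth column and that the port is the text after the last colon, which also covers IPv6 forms such as [::]:7789.

```shell
# port_in_use PORT  (reads `ss -ltn` output on stdin)
# Hypothetical helper: succeeds if some listening TCP socket uses PORT.
# Assumes the local address:port is the 4th column of each line.
port_in_use() {
  awk -v port="$1" '
    NR > 1 {                        # skip the header line
      n = split($4, part, ":")      # port is the field after the last colon
      if (part[n] == port) found = 1
    }
    END { exit !found }
  '
}
```

Usage on each node: ss -ltn | port_in_use 7789 && echo "port 7789 is already taken". If it prints the message, pick another port in both address lines of r0.res.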

Step 7: On both nodes, clear the meta-disk /dev/sda2 to avoid DRBD configuration issues:

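```shell
# WARNING: this destroys everything on /dev/sda2. A 1 MiB block size speeds it up;
# dd ends with "No space left on device" once the partition is full, which is expected.
sudo dd if=/dev/zero of=/dev/sda2 bs=1M
```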

This takes some time, depending on the partition size.

Step 8: Create the metadata for resource r0 on both nodes, as follows:

```shell
sudo drbdadm create-md r0
```

Step 9: Enable the resource r0 on both nodes, as follows:

```shell
sudo drbdadm up r0
```

Step 10: Check the DRBD status in the /proc/drbd virtual file, as follows:

```shell
cat /proc/drbd
```

Once both nodes are up, the connection state (cs) field should read ‘Connected’. The disk state (ds) field should show ‘Inconsistent/Inconsistent’, which is normal at this stage since we have not yet done the initial synchronisation.
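For scripts, these fields can be read directly from /proc/drbd. drbdadm role r0, drbdadm cstate r0 and drbdadm dstate r0 report the same information. Here is a minimal sketch of a hypothetical helper, drbd_state, that prints one field (cs, ro or ds) for a given device minor number. It assumes the DRBD 8.4 status-line layout shown in its comment.

```shell
# drbd_state MINOR FIELD [FILE]
# Hypothetical helper: prints FIELD (cs, ro or ds) for DRBD device MINOR from
# a /proc/drbd-style file. Assumes the DRBD 8.4 status line layout, e.g.
#  1: cs:Connected ro:Secondary/Secondary ds:Inconsistent/Inconsistent C r-----
drbd_state() {
  awk -v minor="$1:" -v field="$2:" '
    $1 == minor {
      for (i = 2; i <= NF; i++)
        if (index($i, field) == 1) { print substr($i, length(field) + 1); exit }
    }
  ' "${3:-/proc/drbd}"
}
```

With the resource from Step 6 (minor number 1), drbd_state 1 ds should print Inconsistent/Inconsistent at this point. The function prints nothing if the minor number is not listed.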

Step 11: On drbd-server1 only, start the initial synchronisation. This makes drbd-server1 the primary and leaves the other node as the secondary:

```shell
sudo drbdadm primary --force r0
```

Now check /proc/drbd to follow the ongoing synchronisation. This step takes some time, depending on the disk size. On completion, the disk state ds shows ‘UpToDate/UpToDate’ and the roles field ro shows ‘Primary/Secondary’. In both fields the first value describes the local node and the second its peer, so here the local resource is primary and the peer is secondary. Our DRBD set-up is now ready for replication.
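While the initial synchronisation runs, /proc/drbd also shows a progress line under the resource's status line. Here is a minimal sketch of a hypothetical filter, drbd_sync_percent, that extracts the percentage. It assumes the DRBD 8.4 progress-line format shown in its comment and a single configured resource, and it prints nothing when no resynchronisation is in progress.

```shell
# drbd_sync_percent [FILE]
# Hypothetical helper: prints the resync percentage from a /proc/drbd-style
# file, assuming the DRBD 8.4 progress line, e.g.
#     [===>................] sync'ed: 21.4% (823452/1048508)K
# Prints nothing when no resynchronisation is running. Assumes one resource.
drbd_sync_percent() {
  sed -n "s/.*sync'ed: *\([0-9.]*\)%.*/\1/p" "${1:-/proc/drbd}"
}
```

A simple progress monitor could be while sleep 5; do drbd_sync_percent; done. Stop it once the ds field reaches UpToDate/UpToDate.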

To test the set-up, let us mount our virtual block device /dev/drbd1 and create a file in it.

Let's assume that the user name and group name on our system are both drbd.

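```shell
mkdir "$HOME/safedisk"
# Create a file system on the DRBD device first (on the primary, and only once);
# ext4 suits the single primary mode used here
sudo mkfs.ext4 /dev/drbd1
sudo mount /dev/drbd1 "$HOME/safedisk"
sudo chgrp drbd "$HOME/safedisk"
sudo chown drbd "$HOME/safedisk"
cd "$HOME/safedisk"
# Single quotes stop bash from treating "!" as history expansion
echo 'DRBD is up and running!' > myfile
```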

Now shut down the primary to simulate a failure. Then go to the secondary (drbd-server2) and run the following command to promote it to primary:

```shell
sudo drbdadm primary r0
```

Next, on drbd-server2, mount /dev/drbd1 on a local directory as described above. Do not create a new file system on it: the existing one was replicated along with the data. Then check myfile and its content. It should be the same as what we wrote on the previous primary node.
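Before promoting a surviving node by hand, it is prudent to confirm that its own copy of the data is current. The local half of the ds field (before the slash) should read UpToDate; once the peer is unreachable, the field typically shows UpToDate/DUnknown. Here is a minimal sketch of a hypothetical guard, can_promote, assuming the DRBD 8.4 /proc/drbd layout. It checks only the local disk state and is not a substitute for the checks a proper HA cluster manager performs.

```shell
# can_promote MINOR [FILE]
# Hypothetical guard: succeeds only if the LOCAL disk state of DRBD device
# MINOR is UpToDate, judged from the ds: field of a /proc/drbd-style file
# (DRBD 8.4 layout assumed). The peer's state after the slash is ignored,
# so "UpToDate/DUnknown" (peer unreachable) still passes.
can_promote() {
  ds=$(awk -v minor="$1:" '
    $1 == minor {
      for (i = 2; i <= NF; i++)
        if ($i ~ /^ds:/) { print substr($i, 4); exit }
    }' "${2:-/proc/drbd}")
  [ "${ds%%/*}" = "UpToDate" ]
}
```

On drbd-server2, can_promote 1 && sudo drbdadm primary r0 only promotes the node if its local copy is up to date. An Inconsistent or Outdated local disk blocks the promotion.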

This is only an introductory article on DRBD. Interested readers are encouraged to explore its other features, configuration options and use cases. LINBIT also offers online training at http://www.linbit.com/en/p/services/training.

References

[1] http://drbd.linbit.com/

[2] https://en.wikipedia.org/wiki/Distributed_Replicated_Block_Device

[3] https://help.ubuntu.com/lts/serverguide/drbd.html