In collaboration with UNESCO, we’re inviting partners to help improve machine translation and support linguistic diversity. We’re also advancing audio processing, multilingual communication, and language technologies in support of AMI development.

Meta’s Fundamental AI Research (FAIR) has always aimed to achieve Advanced Machine Intelligence (AMI) and bring about innovation that benefits all. With that mission in mind, we’re unveiling some of our latest developments, emphasizing open-source contributions and reproducible science.

One standout project is PARTNR, a research framework designed to improve human-robot collaboration. Today’s robots often operate in isolation, which limits their potential. PARTNR addresses this with a large-scale benchmark, dataset, and model that enable robots to collaborate with humans. Using simulation and large-scale training, PARTNR advances robots’ ability to perform everyday tasks, from cleaning to cooking, and to adapt to dynamic home environments. The PARTNR benchmark, with 100,000 tasks, will help ensure these robots can function in both virtual and physical settings, addressing issues like task coordination and recovery from errors. The PARTNR model, which focuses on long-horizon task execution, already outperforms current systems in both speed and efficiency. We’ve also deployed it on Boston Dynamics’ Spot robot.

In parallel, Meta is expanding language accessibility with its Language Technology Partner Program. This initiative invites linguists to work with us to improve speech recognition and machine translation for underserved languages. The goal is to broaden digital inclusivity and contribute to the International Decade of Indigenous Languages. We’re already collaborating with the Government of Nunavut and are looking for more partners to improve these technologies.

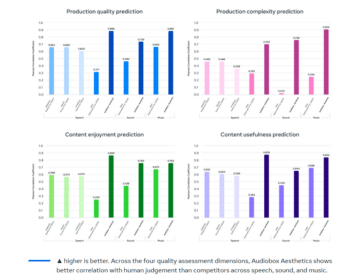

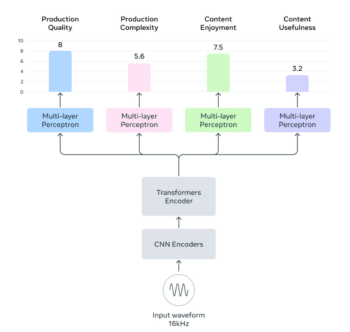

Meta Audiobox Aesthetics is another step forward in audio technology. This open-source model evaluates the quality of audio, whether speech, music, or sound, by predicting factors like content enjoyment and production complexity. Because it assesses audio aesthetics consistently, Audiobox Aesthetics can support the creation of higher-quality audio content and the development of generative audio models.

Lastly, WhatsApp’s Voice Message Transcripts feature uses on-device technology to securely generate transcripts of voice messages. The feature is available in multiple languages, making communication more seamless and inclusive.

Through these initiatives, Meta continues to drive the field of AI forward, contributing to technology that empowers people globally.