We focus on the basics of natural language processing and its applications using one of the most popular NLP libraries known as Natural Language Toolkit (NLTK)

In the age of generative AI, natural language processing (NLP) is a popular subject of interest. One of the most popular NLP libraries is NLTK or Natural Language Toolkit and we will learn how to use it effectively in this article.

We will start by looking at the installation process of this library using Google Colab (https://colab.research.google.com/) as the IDE. Let’s open the link as shown in Figure 1.

Now open a new notebook and, in the first cell, give the command pip install nltk to install NLTK.

Tokenisation

The first NLP process we will be looking at is tokenisation. Tokenisation is a natural language processing step in which we break a given piece of text into smaller parts. These parts can be simple phrases, sentences, words, and even characters or sets of characters, and are called tokens. Tokenisation is an important preprocessing step in most NLP applications. This may be done for feature engineering, text preprocessing or to build vocabulary for tasks like sentiment analysis.

Let us look at how we can do this using NLTK. First, import the required functions as shown below:

from nltk.tokenize import sent_tokenize, word_tokenize

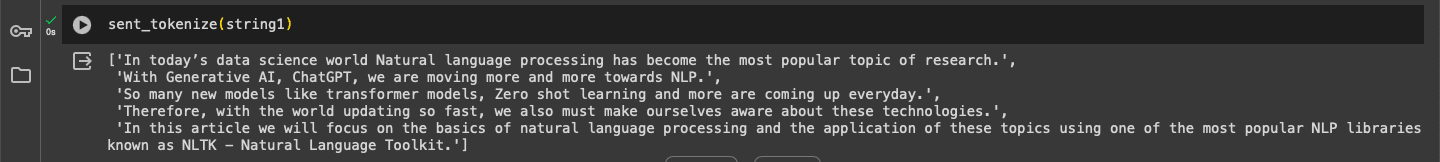

I’ve given a sample string, which is the first paragraph of this article, as a sample for us to analyse. We are going to use the sentence tokeniser as shown below and in Figure 2.

sent_tokenize(string1)

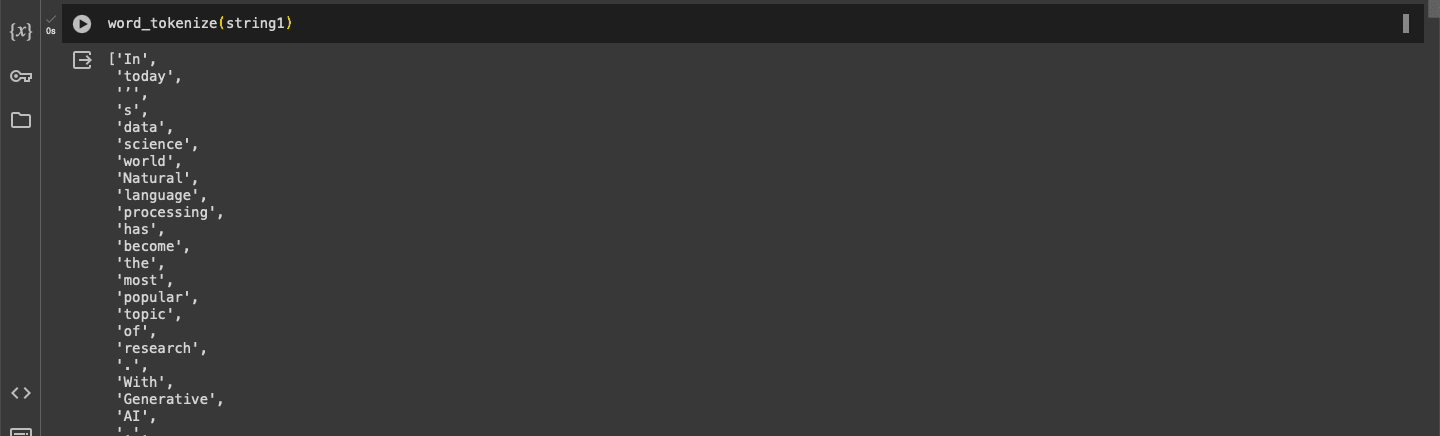

It can be seen that the function returns a list of sentences in the given text. Now let us use the word tokeniser, as shown in Figure 3. As we can see, a list of words is returned here, and the tokens are on the word level.

word_tokenize(string1)

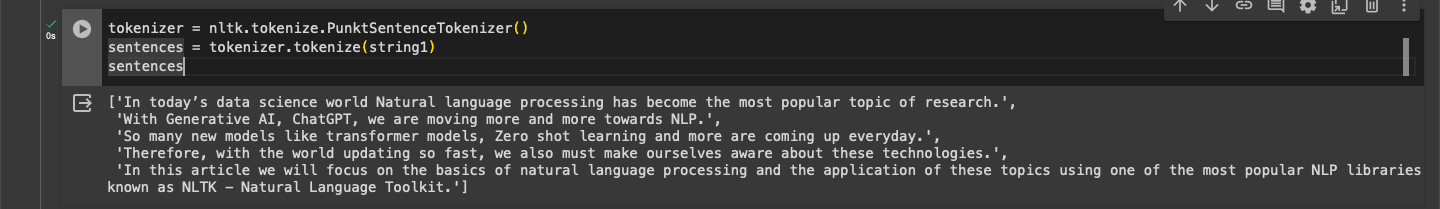

The next type of tokeniser is known as the punctuation tokeniser. We can use that with the following lines of code. We create the tokens based on the punctuation we give, as shown in Figure 4.

tokenizer = nltk.tokenize.PunktSentenceTokenizer() sentences = tokenizer.tokenize(string1) sentences

Stemming

The next major NLP process we are going to learn is called stemming. This is also a very important process in the field of NLP, in which we reduce a given word to its root form. For example, the words work, working and worked have the same sentiment or meaning. Therefore, it becomes easy for us to manage them, whether to create a vocabulary or for any other purpose. Stemming helps us improve the accuracy and the efficiency of the text. It basically ensures that all words with a similar meaning are treated uniformly.

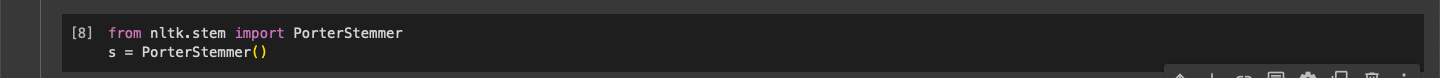

There are multiple methods or algorithms that help us perform this task, the most popular being Porter Stemmer. This method is defined by a set of rules that help us strip suffixes to obtain the base words, which are also known as stems. It’s a useful preprocessing step in many NLP tasks like text classification, information retrieval, and sentiment analysis. We first import the stemmer and then initialise the Porter Stemmer as shown in Figure 5.

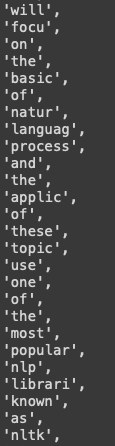

Let us now use the list of words we created above for checking out the stemming process. I’ve stored the list in the variable name ‘l’, using the following lines of code. We can call the stemmer on our list and print out the results.

after_stem = [s.stem(i) for i in l] after_stem

You can see the result in Figure 6. Words like ‘nature’ are converted to ‘natur’, and so are words like natural.

Frequency distribution

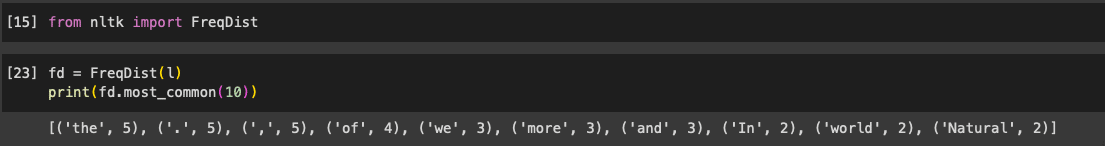

The next process is frequency distribution, a method usually used to count the vocabulary. The output is a dictionary, which may have the vocabulary as the key and the number of times the word has occurred in the text as the value. This technique can be used in processes like sentiment analysis, where we can count the number of positive words, negative words, etc. This process is also used in vocabulary analysis and feature selection.

We can import the function using the following lines of code.

from nltk import FreqDist fd = FreqDist(l) print(fd.most_common(10))

As we can see in Figure 7, the counts of each of the words are given in the form of a dictionary.

Tagging parts of speech, and chunking

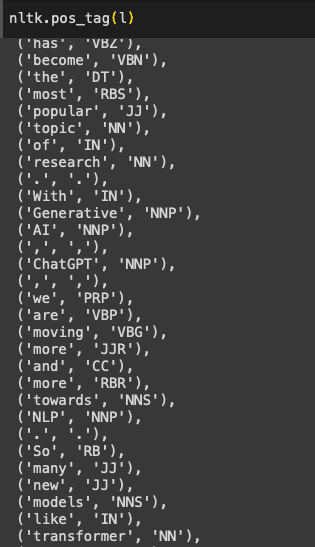

Another process that is important is tagging the parts of speech, which must be done accurately. Here we tag the parts of speech for each word in the given piece of text. Let us see how we can do that!

We can get the parts of speech using the following line of code. The same list of tokens is being used here.

nltk.pos_tag(l)

Another important process in the field of NLP is chunking. This is done to identify phrases, or groups of words that are generally used together, such as ‘a piece of cake’. We can use regex to identify these phrases.

Named entity recognition

The next process we are going to talk about is a very interesting one. This is called ‘named entity recognition’ or NER and has multiple business applications. We can use it to detect private data in text, as well as extract important names/dates, a news article or a normal piece of text. We can use this process for question answering, document summarisation, and more. The main objective of NER is to identify names, locations, dates, and more. Now let us see how we can use this. First, import NER using the following lines of code:

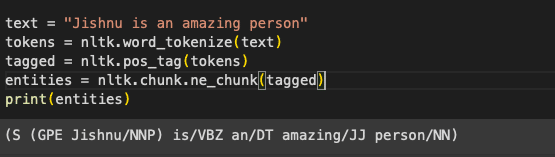

text = “Jishnu is an amazing person” tokens = nltk.word_tokenize(text) tagged = nltk.pos_tag(tokens) entities = nltk.chunk.ne_chunk(tagged) print(entities)

As you can see in in the output in Figure 9, the proper nouns, nouns, etc, have been identified correctly.

That’s it! You have learnt most of the basic concepts in the field of NLP. You can explore much more in this space as there are so many libraries today helping with various NLP tasks.