Featuring new deployment options, enhanced checkpointing, and optimized hardware detection, the update accelerates large language model development and simplifies multi-cloud AI workloads.

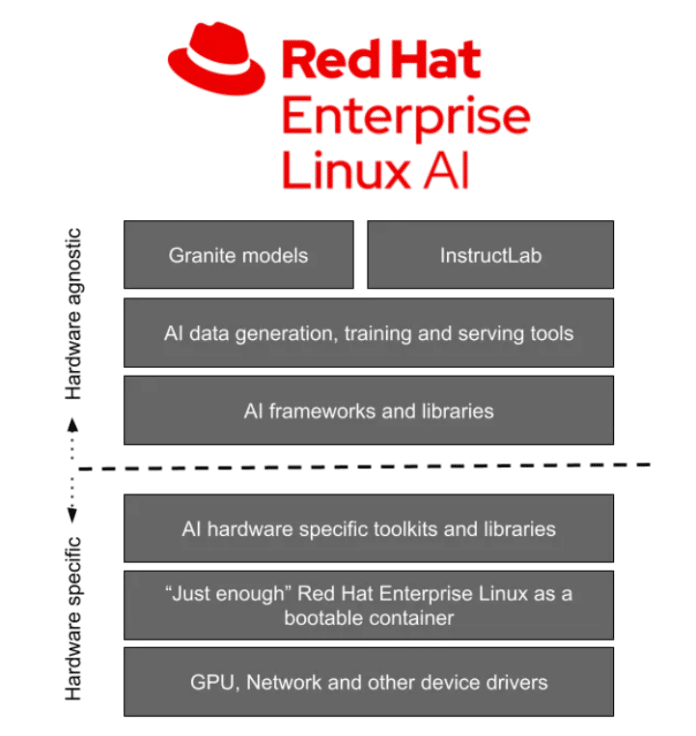

Red Hat has officially released Red Hat Enterprise Linux AI (RHEL AI) 1.2, advancing its foundation model platform for generative AI (GenAI). RHEL AI is a product distinct from the core RHEL operating system: it focuses on developing, testing, and running large language models (LLMs) for enterprise applications using Granite LLMs and InstructLab tools.

RHEL AI 1.2 adds AMD Instinct GPU support for the first time. With the integration of the ROCm open-source compute stack, organizations can use MI300X GPUs for both training and inference and MI210 GPUs for inference-only workloads. This addition complements the existing NVIDIA GPU and CPU-based operations, giving organizations more hardware choices.

RHEL AI is now available on Microsoft Azure and Google Cloud Platform, so enterprises can deploy it on GPU-based instances in those clouds and run AI workloads across multi-cloud environments.

Users can also now deploy RHEL AI on Lenovo ThinkSystem SR675 V3 servers. Preloaded deployment options simplify the setup process and improve compatibility with hardware accelerators.

A new periodic checkpointing feature saves the state of long training runs at set intervals. Fine-tuning can then resume from the latest checkpoint rather than starting over, which saves time and conserves computational resources.
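The idea behind periodic checkpointing can be sketched in a few lines of plain Python. This is only an illustration of the general technique, not RHEL AI's or InstructLab's implementation: the function names, the JSON file format, and the `stop_after` parameter (used here to simulate an interrupted run) are all hypothetical.

```python
import json
import os


def save_checkpoint(path, step, state):
    """Write the checkpoint to a temp file, then rename it into place,
    so an interruption never leaves a half-written checkpoint behind."""
    tmp = path + ".tmp"
    with open(tmp, "w") as f:
        json.dump({"step": step, "state": state}, f)
    os.replace(tmp, path)


def load_checkpoint(path):
    """Return (step, state) from the latest checkpoint, or a fresh start."""
    if not os.path.exists(path):
        return 0, {"loss": None}
    with open(path) as f:
        data = json.load(f)
    return data["step"], data["state"]


def train(total_steps, checkpoint_every, path, stop_after=None):
    """Toy training loop that saves every `checkpoint_every` steps.

    `stop_after` is a hypothetical knob that simulates a crash or
    preemption once that many steps have completed.
    """
    step, state = load_checkpoint(path)  # resume if a checkpoint exists
    while step < total_steps:
        if stop_after is not None and step >= stop_after:
            return step  # simulated interruption, nothing saved here
        step += 1
        state["loss"] = 1.0 / step  # stand-in for a real training step
        if step % checkpoint_every == 0:
            save_checkpoint(path, step, state)
    save_checkpoint(path, step, state)  # final save at the end of the run
    return step
```

If a run of 10 steps with a checkpoint every 3 steps is interrupted after step 7, the next call to `train` picks up from step 6, the last saved checkpoint, so only one step of work is repeated instead of seven.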

The ilab CLI now includes automatic detection of GPUs and other hardware accelerators. This feature reduces manual configuration and tunes performance to the available hardware.

The release also introduces Fully Sharded Data Parallel (FSDP) training via PyTorch, which distributes model training across devices by sharding parameters, gradients, and optimizer states. This can significantly reduce training time for complex models.
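To give a rough sense of why sharding parameters, gradients, and optimizer states matters, the back-of-the-envelope calculation below estimates per-GPU memory for model state with and without full sharding. The byte counts are assumptions, not figures from Red Hat: 2 bytes per parameter and per gradient (16-bit precision) and 12 bytes of optimizer state (a 32-bit master copy plus two Adam moments), a common accounting for mixed-precision Adam. Activations, buffers, and communication overhead are ignored, and the 7-billion-parameter model is hypothetical.

```python
def model_state_gb(num_params, num_gpus, sharded,
                   bytes_param=2, bytes_grad=2, bytes_optim=12):
    """Estimate per-GPU memory (in GB, 1 GB = 1e9 bytes) for model state.

    Without sharding, every GPU holds a full replica of the parameters,
    gradients, and optimizer state. With full sharding, each GPU holds
    roughly a 1/num_gpus slice of all three.
    """
    total_bytes = num_params * (bytes_param + bytes_grad + bytes_optim)
    per_gpu = total_bytes / num_gpus if sharded else total_bytes
    return per_gpu / 1e9


# Hypothetical 7-billion-parameter model trained on 8 GPUs.
replicated = model_state_gb(7_000_000_000, 8, sharded=False)
fully_sharded = model_state_gb(7_000_000_000, 8, sharded=True)
print(f"replicated: {replicated:.0f} GB per GPU")     # 112 GB
print(f"fully sharded: {fully_sharded:.0f} GB per GPU")  # 14 GB
```

Under these assumptions, the model state that would not fit on a single accelerator when replicated shrinks to a fraction of one device's memory when sharded, which is what lets larger models or larger batches fit on the same hardware.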