As AI solutions proliferate, ensuring they are not biased with respect to gender, religion, financial status and similar attributes has become critically important. The good news is that a lot of work is being done on that front.

Today, there is a paradigm shift from traditional AI systems built on statistical and probabilistic algorithms to those built on machine learning (ML) and deep learning (DL) models. According to Gartner, there are 34 different branches of AI system design in existence today. AIOps in cloud operations, DataOps in data engineering, predictive analytics in data science, and MLOps in industrial applications are some examples of new-age AI applications.

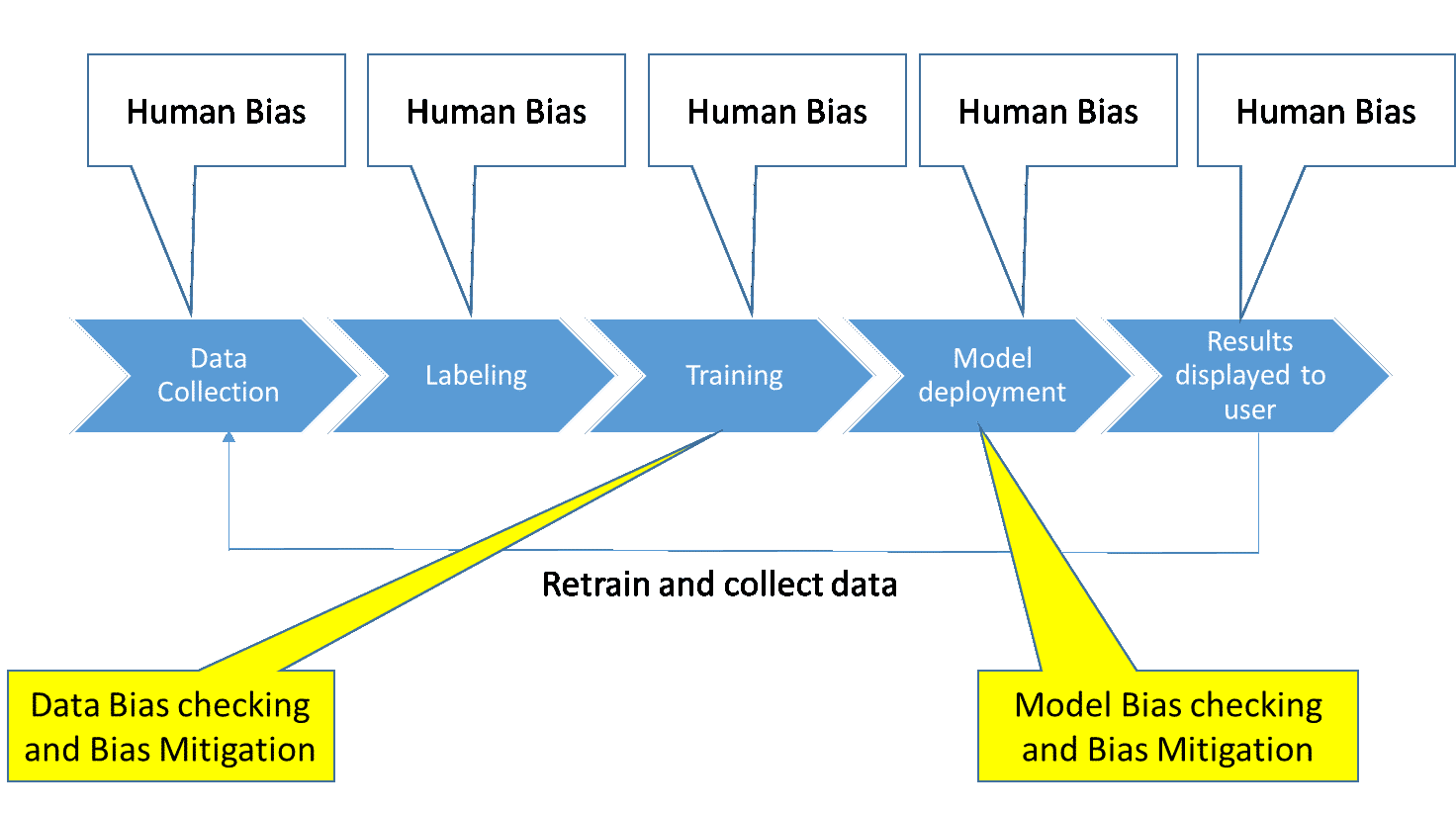

Ray Kurzweil popularised the term ‘singularity’ in AI, which refers to the hypothetical point at which machine intelligence matches and then surpasses human intelligence (or natural intelligence). To achieve the highest level of accuracy in ML training, modelling and functioning, it is of utmost importance to ensure fairness and correct any bias in an AI/ML implementation. Bias does not arise on its own; it is the result of human inputs during the various stages of developing the AI/ML-based solution.

When collecting data for training an ML model, one must ensure the data is distributed fairly and carries no bias. In the same way, when we label and group the data, train the model to simulate human-like thinking, deploy the model and interpret the results, we must set aside any prejudgement or vested interests.

For example, bias with respect to race, income, sexual orientation, gender or religion must be avoided when preparing the training data, training the system and interpreting the results of the ML execution.

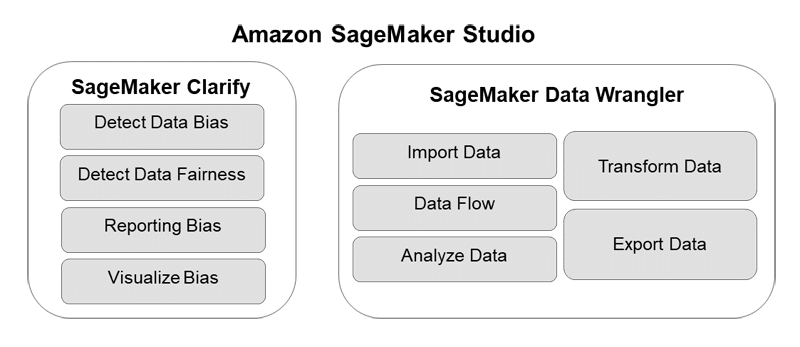

Popular tools such as IBM’s AI Fairness 360, Microsoft’s Fairlearn and Google’s What-If Tool help identify bias in the training data and in the trained model.

Addressing bias in AI systems

Bias in AI systems, unfortunately, is a real and concerning issue. It arises when the data or algorithms used to train an AI system reflect or amplify existing societal biases. This can lead to unfair and discriminatory outcomes like:

- Loan denials for individuals belonging to specific demographics

- Facial recognition software that fails to identify people of colour accurately

- Hiring algorithms that overlook qualified candidates

Addressing this issue is crucial for ensuring responsible and ethical development of AI. Here are some key aspects to consider.

To start with, one must understand the probable sources of bias.

- Data bias: AI systems learn from the data they are fed. If the data itself is biased, containing skewed information or lacking diversity, the AI system will inherit and perpetuate those biases.

- Algorithmic bias: Certain algorithms can amplify existing biases in the data, leading to skewed outcomes.

There are a few methods that can be used to mitigate bias. One of them is data curation.

- Collecting diverse and representative datasets: This involves actively seeking data that reflects the intended population the AI system will interact with.

- Cleaning and debiasing existing datasets: Biases present in existing data can be identified and removed through various techniques like data scrubbing and augmentation.
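As a rough illustration of debiasing an existing dataset, the sketch below applies reweighing, one well-known pre-processing technique: each (group, label) combination receives a weight equal to its expected frequency under independence divided by its observed frequency. The toy data, the group names `a` and `b`, and the function names are assumptions made for this example; they do not come from any particular toolkit.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Weight per (group, label) pair: expected count if group and label
    were independent, divided by the observed count."""
    n = len(groups)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    pair_counts = Counter(zip(groups, labels))
    return {
        (g, y): (group_counts[g] * label_counts[y]) / (n * pair_counts[(g, y)])
        for (g, y) in pair_counts
    }

def weighted_favourable_rate(groups, labels, weights, group):
    """Share of a group's total weight that sits on favourable (label 1) rows."""
    rows = [(g, y) for g, y in zip(groups, labels) if g == group]
    total = sum(weights[row] for row in rows)
    favourable = sum(weights[row] for row in rows if row[1] == 1)
    return favourable / total

# Hypothetical toy data: group membership and a binary outcome (1 = favourable).
groups = ["a", "a", "a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 1, 0, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
for pair in sorted(weights):
    print(pair, round(weights[pair], 3))
```

In this toy data, group `a` has a favourable outcome in four of six rows and group `b` in one of four. After reweighing, the weighted favourable rate is the same for both groups, so a learner that honours sample weights no longer sees group membership as predictive of the label.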

Algorithmic techniques can also be used to mitigate bias.

- Fairness-aware algorithms: These algorithms are designed to be more sensitive to potential bias and produce fairer outcomes.

- Explainable AI (XAI): Making the decision-making process of AI systems transparent allows for identifying and addressing bias more effectively.
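To make the idea of explainability concrete, here is a minimal sketch for the simplest possible case, a linear scoring model, where each feature's contribution to a decision can be computed exactly as its weight multiplied by the applicant's deviation from a baseline. The feature names (including `postcode_risk` as a possible proxy for a protected attribute), the weights and the baseline are all hypothetical. Non-linear models need dedicated XAI methods that this sketch does not cover.

```python
def explain_linear_score(weights, baseline, applicant):
    """Return (feature, contribution) pairs for a linear score,
    ordered by the absolute size of the contribution."""
    contributions = {
        name: weights[name] * (applicant[name] - baseline[name])
        for name in weights
    }
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)

# Hypothetical model weights, baseline applicant and applicant under review.
weights = {"income": 0.5, "years_employed": 0.3, "postcode_risk": -0.8}
baseline = {"income": 4.0, "years_employed": 5.0, "postcode_risk": 1.0}
applicant = {"income": 5.0, "years_employed": 4.0, "postcode_risk": 3.0}

ranked = explain_linear_score(weights, baseline, applicant)
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
```

Here the largest contribution comes from `postcode_risk`. If a feature like that acts as a stand-in for race or income, an explanation of this kind gives reviewers a concrete starting point for investigating the bias.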

Human oversight and governance are important too.

- Robust frameworks and policies must be implemented for responsible AI development and deployment.

- Human oversight in crucial decision-making processes helps mitigate potential bias in AI recommendations.
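One simple way to build human oversight into a decision pipeline is to let the model decide only the clear-cut cases and route borderline ones to a person. The sketch below assumes a model that outputs a score between 0 and 1; the threshold, the review margin and the outcome labels are illustrative choices, not recommended values.

```python
def route_decision(score, threshold=0.5, review_margin=0.1):
    """Send scores close to the decision threshold to a human reviewer;
    decide automatically only when the score is clearly on one side."""
    if abs(score - threshold) <= review_margin:
        return "human_review"
    return "approve" if score > threshold else "decline"

# Hypothetical model scores for three applications.
for score in (0.9, 0.55, 0.1):
    print(score, route_decision(score))
```

Widening `review_margin` sends more cases to people, which raises cost but reduces the number of decisions made by the model alone.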

The challenges

Addressing bias is an ongoing process. As AI systems evolve and adapt, it’s crucial to continuously monitor and mitigate potential biases.

Trade-offs between fairness and other objectives: Mitigating bias sometimes involves making trade-offs with other objectives like system performance or accuracy. Finding the right balance is crucial.

Regulation and ethical considerations: Developing clear regulations and ethical guidelines for responsible AI development is essential for mitigating bias and ensuring trust in AI technology.
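The trade-off between fairness and accuracy mentioned above can be seen in a small experiment. The sketch below scores a toy dataset at three decision thresholds and reports, for each, the overall accuracy and the gap in selection rates between two groups (a simple demographic parity measure). The records, group names and thresholds are invented for illustration.

```python
def evaluate(records, threshold):
    """Return (accuracy, selection-rate gap between groups) at a threshold.
    Each record is (group, model score, true label)."""
    correct = 0
    selected = {}
    totals = {}
    for group, score, label in records:
        pred = 1 if score >= threshold else 0
        correct += int(pred == label)
        selected[group] = selected.get(group, 0) + pred
        totals[group] = totals.get(group, 0) + 1
    rates = [selected[g] / totals[g] for g in totals]
    return correct / len(records), max(rates) - min(rates)

# Hypothetical records: group "b" tends to receive lower scores.
records = [
    ("a", 0.9, 1), ("a", 0.8, 1), ("a", 0.7, 1), ("a", 0.6, 0), ("a", 0.3, 0),
    ("b", 0.6, 1), ("b", 0.5, 1), ("b", 0.4, 0), ("b", 0.3, 0), ("b", 0.2, 0),
]

results = {t: evaluate(records, t) for t in (0.25, 0.45, 0.65)}
for t, (accuracy, gap) in results.items():
    print(f"threshold={t}: accuracy={accuracy:.2f}, selection gap={gap:.2f}")
```

On this data, the threshold with the smallest selection gap is also the one with the lowest accuracy, which is the kind of tension a team has to weigh explicitly rather than leave to a default setting.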

Fairness and accountability in AI algorithms

Fairness and accountability are two critical pillars of ethical and responsible AI development. They work hand-in-hand to ensure that AI algorithms function without bias and that their actions can be traced back to a responsible entity.

Fairness in AI

Definition: Refers to the absence of bias in the design, development, and deployment of AI systems. This means that the algorithms produce equitable outcomes for all individuals and groups, regardless of their background or characteristics.

Importance: Ensures that AI does not perpetuate or exacerbate existing societal inequalities. It fosters trust in AI by demonstrating its ability to treat everyone fairly.

Challenges: Achieving fairness in AI is complex. Biases can be embedded in the training data, the algorithms themselves, and even in the way AI systems are used.

Accountability in AI

Definition: Refers to the ability to identify and hold someone or something responsible for the decisions and actions of an AI system. This includes understanding how the system arrived at a specific decision and who is accountable for any negative consequences.

Importance: Crucial for building trust and ensuring that AI systems are used responsibly. It allows for corrective actions to be taken in case of errors or biases.

Challenges: Establishing accountability for AI systems can be challenging due to their complex nature and the often opaque decision-making processes.

The intersection of fairness and accountability

Fairness and accountability are interconnected. Achieving fairness in AI requires holding someone accountable for potential biases in the system; conversely, a lack of accountability makes it difficult to ensure fairness.

Here are some strategies to help achieve fairness in an AI/ML model.

Transparency: Implementing techniques like explainable AI (XAI) to understand how AI systems reach decisions, fostering transparency and identifying potential biases.

Auditing and monitoring: Regularly auditing AI systems for bias and unintended consequences.

Clear governance frameworks: Establishing clear guidelines and regulations for responsible AI development and deployment, including well-defined roles and responsibilities.
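A recurring audit can start with something as simple as comparing favourable-outcome rates across groups. The sketch below computes each group's selection rate and its ratio to the best-treated group, flagging ratios below 0.8. That cut-off follows the 'four-fifths' rule of thumb used in employment selection and is only an assumed default here; the decision log and function names are hypothetical.

```python
def selection_rates(decisions):
    """decisions: iterable of (group, outcome), where outcome 1 = favourable."""
    favourable = {}
    totals = {}
    for group, outcome in decisions:
        favourable[group] = favourable.get(group, 0) + outcome
        totals[group] = totals.get(group, 0) + 1
    return {g: favourable[g] / totals[g] for g in totals}

def disparate_impact_audit(decisions, min_ratio=0.8):
    """Compare each group's selection rate with the highest group's rate."""
    rates = selection_rates(decisions)
    reference = max(rates.values())
    report = {}
    for group, rate in rates.items():
        ratio = rate / reference if reference > 0 else 1.0
        report[group] = {"rate": rate, "ratio": ratio, "flagged": ratio < min_ratio}
    return report

# Hypothetical decision log: 6 of 10 approvals for "a", 3 of 10 for "b".
decisions = [("a", 1)] * 6 + [("a", 0)] * 4 + [("b", 1)] * 3 + [("b", 0)] * 7

report = disparate_impact_audit(decisions)
for group, row in report.items():
    print(group, row)
```

Running a check like this on every model release, and recording who reviewed the flagged groups, ties the auditing step to the accountability discussed earlier.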

By addressing both fairness and accountability, we can strive towards building AI systems that are not only powerful and efficient but also equitable and trustworthy. This requires ongoing collaboration between developers, policymakers, and the public to develop and implement responsible AI practices.

Industry standards and guidelines for ethical AI

Fortunately, several industry standards and guidelines have emerged to guide developers and users in building and utilising AI responsibly.

- The European Union (EU): The EU is playing a prominent role in shaping ethical AI practices. Its ‘Ethics Guidelines for Trustworthy AI’ emphasise seven key requirements.

1. Human agency and oversight: AI systems should be under human control and supervision.

2. Technical robustness and safety: AI systems should be robust, reliable, and secure to prevent harm.

3. Privacy and data governance: Personal data used in AI development and deployment needs to be handled ethically and responsibly.

4. Transparency: AI systems and their decision-making processes should be transparent and understandable.

5. Diversity, non-discrimination, and fairness: AI systems should be designed and used to avoid discrimination and societal biases.

6. Societal and environmental well-being: AI development should consider societal impact and environmental sustainability.

7. Accountability: Developers and users of AI should be accountable for the system’s consequences.

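- The Organisation for Economic Co-operation and Development (OECD): The OECD’s ‘AI Principles’ set out five values-based principles for responsible AI, covering inclusive growth and well-being, human-centred values and fairness, transparency and explainability, robustness, security and safety, and accountability, along with five recommendations for policymakers.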

- Other organisations: Several other entities, including UNESCO, the Institute of Electrical and Electronics Engineers (IEEE), and the Association for the Advancement of Artificial Intelligence (AAAI), have also developed their own sets of ethical guidelines and recommendations for AI development.

Key points of industry standards and guidelines

While specific details may vary, some key themes emerge across these different standards and guidelines.

- Human-centred approach: Emphasises that AI should be developed and used for the benefit of humanity, prioritising human well-being and respecting fundamental rights.

- Fairness and non-discrimination: Combating bias and discrimination in AI systems is crucial to ensure fairness and equal opportunities for all.

- Transparency and explainability: Developers and users should understand how AI systems work, and users should be aware when interacting with an AI system.

- Accountability and responsibility: Establishing clear lines of accountability for the development, deployment, and use of AI systems is essential.

- Privacy and data protection: Ensuring ethical handling of personal data used in AI systems and respecting individual privacy.

- Safety and security: Implementing safeguards to prevent harm and potential misuse of AI systems.

However, there are a few challenges.

- Implementation and enforcement: Translating these guidelines into practical implementation and ensuring effective enforcement remains an ongoing challenge.

- Global consistency: Achieving consistency and harmonisation of ethical AI standards across different countries and regions is crucial for responsible development and deployment.

- Continuous evaluation and adaptation: As AI technology evolves, ethical frameworks and guidelines need to be continuously evaluated and adapted to address new challenges.

Collaborative efforts to create responsible AI systems

Meta’s BlenderBot is a conversational AI that combines skills such as personality, empathy and knowledge. BlenderBot 3 is a conversational agent designed to converse naturally with users. It incorporates what we term ‘AI fairness’ and aims to avoid bias in chat responses by learning from user feedback and improving its responses, classifying offensive, unrelated and problematic language along the way. It collects data from news feeds, classifies the truthfulness of the information, and then responds to user queries.

BlenderBot 3 has a built-in web crawler framework to seek responses for chat queries, and uses algorithms like SeeKeR and Director to learn from user responses and rank them for fairness and relevance. Its self-learning capability recognises unhelpful responses, as well as attempts by users to trick the model, and makes sure that fair responses are provided.

Currently, BlenderBot 3 is in beta and available only in some regions, where it is being tested to make it more effective. Its general availability (GA) release is expected to answer almost any question fairly and without bias.