Prompt engineering is the art of asking the right question to get better output from a generative AI model. Well-defined prompts can generate high-quality AI content.

Generative artificial intelligence (GenAI) technology is changing the way businesses operate. GenAI is now applied to a wide range of tasks and is moving towards industrial-scale use. Developing and training models is only part of implementing GenAI; the models must also be given the right input to generate the meaningful information that humans or systems need. The process of crafting that input to elicit useful responses is called prompt engineering.

In simple terms, prompt engineering is the process of creating effective prompts that enable GenAI models to generate responses based on given inputs. It is the art of asking the right question to get better output from a GenAI model. Text-based GenAI models are called large language models (LLMs). They are instructed in natural language, whether English or another language, rather than in a programming language.

Prompts are pieces of text that are used to provide context and guidance to GenAI models. Well-designed prompts draw on diverse input data, help minimise biases, and give the model the additional guidance it needs to generate accurate output. A well-crafted prompt generates high-quality AI content, such as images, code, text, or data summaries.

Key components of prompt engineering

The key components of prompt formation are the instruction, context, input data and output indicator.

Instruction: We must write clear instructions to the model for completing the task. The instruction:

- Establishes the goal of the model

- Tells the model how to process or transform the text it is given

- Can be simple or complex

Context: Important details or context must be provided to get more relevant answers. The context:

- Provides details that help the model to answer

- Supplies the model with the necessary information, style and tone

Input data: This is the actual data or query that the model will work on. When supplying it:

- Express the query as clearly as possible

- Use specific, plain language with no unnecessary fillers

- Include details in the query to get more relevant answers

- Include examples of the desired behaviour of the model

Output indicator: This tells the model what form the output should take. The indicator:

- Gives format guidance to the model

- Supports the content

- Is refined, along with the rest of the prompt, until the desired results are achieved

- Assists the model in generating more helpful solutions
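
As a minimal sketch of how the four components can be combined, the following Python function joins them into a single prompt. The function name, section labels and sample text are illustrative assumptions, not a standard format.

```python
def build_prompt(instruction: str, context: str, input_data: str, output_indicator: str) -> str:
    """Assemble the four prompt components into one block of text."""
    # The section labels are illustrative; any consistent labelling would do.
    sections = [
        ("Instruction", instruction),
        ("Context", context),
        ("Input", input_data),
        ("Output format", output_indicator),
    ]
    # Skip empty components so the prompt carries no blank sections.
    return "\n\n".join(
        f"{label}: {text.strip()}" for label, text in sections if text.strip()
    )


prompt = build_prompt(
    instruction="Summarise the customer review below in one sentence.",
    context="The summary is for a support dashboard; keep the tone neutral.",
    input_data="The laptop arrived quickly, but the battery lasts only two hours.",
    output_indicator="Plain text, at most 20 words.",
)
print(prompt)
```

Keeping the components as separate arguments makes it easy to change one of them, such as the output format, without touching the rest.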

Pillars of prompt engineering

The following are the pillars of prompt engineering techniques.

- Examples: These show the LLM what a good response looks like, steering it towards the desired response without retraining it.

- Format: This describes how the user’s query is laid out and how the output should be returned. It helps in generating productive outcomes from LLMs.

- Direction: This helps in narrowing responses for effective prompt output.

- Parameters: These help in controlling the responses generated by LLMs, for example through sampling settings such as temperature.

- Chaining: This connects multiple GenAI calls to complete the desired task.
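
Chaining and parameters can be illustrated with a short Python sketch. It assumes a hypothetical model interface, a function that takes a prompt and a dictionary of parameters and returns text; `fake_model` is a stand-in so the example runs without any real LLM, and the parameter names `temperature` and `max_tokens` are assumed for illustration.

```python
from typing import Callable

# Hypothetical model interface: (prompt, parameters) -> response text.
ModelFn = Callable[[str, dict], str]


def run_chain(call_model: ModelFn, document: str) -> str:
    """Chain two calls: extract key points, then draft an email from them."""
    # Step 1: a low temperature keeps the extraction step focused.
    points = call_model(
        f"List the key points of this text as bullets:\n{document}",
        {"temperature": 0.0, "max_tokens": 200},
    )
    # Step 2: the output of the first call becomes the input of the second.
    return call_model(
        f"Write a short, friendly email covering these points:\n{points}",
        {"temperature": 0.7, "max_tokens": 300},
    )


def fake_model(prompt: str, params: dict) -> str:
    """Stand-in for a real LLM call so the sketch runs offline."""
    first_line = prompt.splitlines()[0]
    return f"[temperature={params['temperature']}] reply to: {first_line}"


result = run_chain(fake_model, "Q3 revenue rose 8%. Hiring is paused.")
print(result)
```

In practice, `fake_model` would be replaced by a call to whichever model or service is in use.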

Principles of prompt engineering

The main principles of prompt engineering to optimise responses are:

- Clarity: Clearly communicate what content or information is most important. A good prompt enables the LLMs to respond with more accuracy.

- Structure the prompt: Start by defining the model’s role, give context/input data, and then provide the instruction.

- Examples: Use specific examples to help the LLMs to narrow their focus and generate more accurate results.

- Constraints: Use constraints to limit the scope of the model’s output. This helps in receiving the desired response and avoids unwanted or unnecessary information.

- Tasks: Break down complex tasks into a sequence of simpler and compact prompts. This makes it simpler for LLMs to comprehend each enquiry and generate meaningful answers.

- Specificity: Leave as little room for interpretation as possible. Restrict the operational space.
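
Several of these principles can be applied together. The sketch below, with an assumed function name and an assumed sentiment-labelling task, builds a prompt that starts with a role, states constraints, shows worked examples, and ends with the case the model is expected to complete.

```python
from typing import List, Tuple


def few_shot_prompt(
    role: str,
    constraints: List[str],
    examples: List[Tuple[str, str]],
    query: str,
) -> str:
    """Build a role + constraints + examples prompt for sentiment labelling."""
    blocks = [f"You are {role}."]
    if constraints:
        # Constraints limit the scope of the model's output.
        blocks.append("Constraints:\n" + "\n".join(f"- {c}" for c in constraints))
    # Each worked example shows the model the expected input/output pattern.
    blocks.extend(f"Review: {text}\nSentiment: {label}" for text, label in examples)
    # End with the unanswered case for the model to complete.
    blocks.append(f"Review: {query}\nSentiment:")
    return "\n\n".join(blocks)


prompt = few_shot_prompt(
    role="an assistant that labels product reviews",
    constraints=["Answer with one word: positive or negative."],
    examples=[
        ("Great sound quality.", "positive"),
        ("Stopped working after a week.", "negative"),
    ],
    query="The screen is sharp and bright.",
)
print(prompt)
```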

Prompt engineering framework

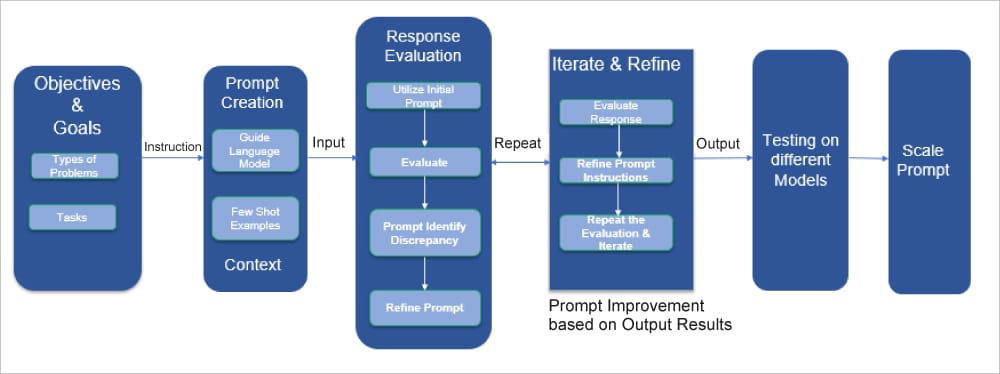

Several tools support prompt engineering tasks on AI models, including LangChain, Semantic Kernel, Guidance, NeMo Guardrails, fastRAG, and LlamaIndex. Figure 1 depicts the prompt engineering framework, whose steps are described below.

Objectives and goals: The first step in the prompt engineering process is understanding the problem. The goal of the conversation and the objectives to be achieved are established during this step. The problem may be question answering, text generation, sentiment analysis, etc. The tasks and constraints are analysed, and the model’s likely responses to various inputs are anticipated.

Prompt creation: The initial prompt is drafted during this step. Relevant data and meaningful keywords that will guide the model towards the desired response/output are identified.

Response evaluation: During this step, the initial prompt is put to use and the model’s response is examined. The instructions in the prompt should be clear and concise, and written in plain language. Discrepancies are identified, limitations are understood, and the prompt is refined accordingly.

Iterate and refine: The model’s output is assessed for accuracy against the desired objective during this step. Any misalignment or flaws are captured as part of the evaluation of the model’s response. The nature of the misalignment is identified, and the prompt instructions are adjusted and refined. The evaluation of the model’s response is repeated to identify any remaining shortcomings. The process is iterated multiple times to refine the prompt effectively. This helps in improving the accuracy of the output.
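
The evaluate-adjust-repeat loop can be sketched in Python as follows. This is only one simple way to automate it, under stated assumptions: each check is a hypothetical pair of a pass/fail test and an instruction to add when the test fails, and `fake_model` stands in for a real LLM call.

```python
from typing import Callable, List, Tuple

# A check pairs a pass/fail test on the response with the instruction
# to append to the prompt when the test fails (an illustrative convention).
Check = Tuple[Callable[[str], bool], str]


def refine_prompt(
    prompt: str,
    call_model: Callable[[str], str],
    checks: List[Check],
    max_rounds: int = 3,
) -> Tuple[str, str]:
    """Re-run the model, adding corrective instructions for failed checks."""
    response = call_model(prompt)
    for _ in range(max_rounds):
        # Collect corrections for failed checks that are not yet in the prompt.
        fixes = [
            fix for passes, fix in checks
            if not passes(response) and fix not in prompt
        ]
        if not fixes:
            break  # Every check passes, or no new adjustment is available.
        prompt = prompt + "\n" + "\n".join(fixes)
        response = call_model(prompt)
    return prompt, response


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM: returns JSON only when asked for it."""
    return '{"city": "Paris"}' if "JSON" in prompt else "The city is Paris."


checks = [(lambda r: r.strip().startswith("{"), "Respond with JSON only.")]
final_prompt, answer = refine_prompt(
    "Which city is the capital of France?", fake_model, checks
)
print(final_prompt)
print(answer)
```

Real refinement usually needs human judgement as well; automated checks like these only catch the flaws that can be tested mechanically.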

Testing on different models: In this step, the performance of the generated prompts is evaluated and refined. A variety of test cases are developed to perform these tasks. Various large language models are selected and the refined prompt is applied to each model. The responses generated by each model are assessed, and the alignment between the responses and the desired objective is analysed. Finally, the processes are iterated by reapplying the refined prompt to the models based on insights gained from testing.
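
A minimal sketch of this step is shown below. It assumes each model is available as a plain Python function from prompt to response (the two models here are fakes), and it scores a response with a crude containment check, which a real evaluation would replace with something more robust.

```python
from typing import Callable, Dict, List, Tuple


def compare_models(
    models: Dict[str, Callable[[str], str]],
    prompt_template: str,
    test_cases: List[Tuple[str, str]],
) -> Dict[str, float]:
    """Return, per model, the fraction of test cases answered as expected."""
    scores = {}
    for name, call_model in models.items():
        hits = 0
        for text, expected in test_cases:
            response = call_model(prompt_template.format(text=text))
            # Crude alignment check: the expected label appears in the response.
            if expected.lower() in response.lower():
                hits += 1
        scores[name] = hits / len(test_cases)
    return scores


# Fake models standing in for real LLMs (illustrative only).
def model_a(prompt: str) -> str:
    return "positive" if "love" in prompt else "negative"


def model_b(prompt: str) -> str:
    return "positive"


template = "Classify the sentiment of this review as positive or negative: {text}"
cases = [("I love it", "positive"), ("It broke in a day", "negative")]
scores = compare_models({"model_a": model_a, "model_b": model_b}, template, cases)
print(scores)
```

Comparing scores across models shows whether a refined prompt generalises or only suits the model it was tuned on.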

Scale prompt: This is a process to extend the value of a prompt across more contexts, tasks, or levels of automation. Automating prompt generation saves a lot of time and can minimise human error. It helps in establishing uniformity in the prompt creation procedure. It also helps in achieving better customer experience and cost reduction.
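
One simple way to automate prompt generation is a reusable template filled in from structured data. The sketch below uses `string.Template` from the Python standard library; the template wording and field names are illustrative assumptions.

```python
from string import Template
from typing import Dict, List

# One reviewed template keeps every generated prompt uniform.
PROMPT_TEMPLATE = Template(
    "Write a $tone product description for $product in at most $max_words words."
)


def generate_prompts(rows: List[Dict[str, object]]) -> List[str]:
    """Fill the template once per row of input data."""
    # substitute() raises KeyError on a missing field, so gaps surface early.
    return [PROMPT_TEMPLATE.substitute(row) for row in rows]


rows = [
    {"product": "a steel water bottle", "tone": "playful", "max_words": 40},
    {"product": "a standing desk", "tone": "formal", "max_words": 60},
]
for prompt in generate_prompts(rows):
    print(prompt)
```

Because the wording lives in one place, a correction to the template reaches every generated prompt at once.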

Table 1 summarises some recent language models to which these prompt engineering techniques can be applied.

Table 1: Important language models

| Model | Release date | Description |
| --- | --- | --- |
| Falcon LLM | September 2023 | This is a foundational large language model (LLM) with 180 billion parameters, trained on 3,500 billion tokens. |
| Llama 2 | July 2023 | Developed by Meta AI, Llama 2 is an open and efficient foundation language model. It is trained on trillions of tokens from publicly available datasets. |
| ChatGPT | November 2022 | This model interacts in a conversational way. The dialogue format makes it possible for ChatGPT to answer follow-up questions, admit its mistakes, challenge incorrect premises, and reject inappropriate requests. |
| Flan | October 2022 | This is a family of instruction fine-tuned language models. A collection of 1.8K tasks phrased as instructions was used to fine-tune the models, improving performance and generalisation to unseen tasks. |
| Mistral 7B | September 2023 | This is a pretrained generative text model with 7 billion parameters. The model is used in mathematics, code generation, and reasoning. It is suitable for real-time applications where quick responses are essential. |
| PaLM 2 | May 2023 | This language model has strong multilingual and reasoning capabilities. |
| Code Llama | August 2023 | Designed for general code synthesis and understanding. The largest version at release is a 34-billion parameter language model. |
| Phi-2 | December 2023 | Released by Microsoft Research, this is a 2.7 billion parameter language model. Phi-2 can be prompted using a QA format, a chat format, and a code format. |

Prompt engineering helps us achieve optimised outputs with minimum effort. Well-defined prompts help generative AI models to create accurate and personalised responses. Also, prompt engineering is likely to become increasingly integrated with other technologies, such as virtual assistants, chatbots, and voice-enabled devices. This will enable users to interact with technology more seamlessly and effectively, improving the overall user experience.

Prompt engineering is more an art than a technology. The basic rule is that good prompts lead to good results.

Disclaimer: The views expressed in this article are those of the author, and HCL does not subscribe to the substance, veracity, or truthfulness of the said opinion.