Dive into the world of service meshes — frameworks that simplify inter-service communication, especially in the complex web of microservices. They also help manage network traffic, enhance the security of communication channels, and monitor microservices.

A service mesh is a dedicated infrastructure layer that facilitates communication, observability, and control between microservices in a distributed application. As modern software architectures increasingly adopt microservices, the complexity of managing communication between these independently deployable units grows. A service mesh addresses this challenge by abstracting away the intricacies of inter-service communication, providing a centralised, transparent network layer.

At its core, a service mesh consists of a set of interconnected proxy instances, called sidecars, deployed alongside each microservice. These sidecars manage communication between services by intercepting and controlling traffic. One of the key advantages of a service mesh is its ability to decouple application logic from concerns such as security, load balancing, and monitoring, allowing developers to focus on building and deploying features without being burdened by these cross-cutting concerns.
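The decoupling described above can be illustrated with a minimal in-process sketch. It is not how a real sidecar is built (real sidecars are separate proxy processes that intercept network traffic); the `Sidecar` class, `orders_logic` function, and service names are hypothetical and only show how cross-cutting concerns can sit outside the business logic.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Sidecar:
    """Hypothetical in-process stand-in for a sidecar proxy."""
    service_name: str
    handler: Callable[[str], str]  # the application's business logic
    callers: List[str] = field(default_factory=list)
    latencies_ms: List[float] = field(default_factory=list)
    request_count: int = 0

    def handle(self, caller: str, payload: str) -> str:
        # Cross-cutting concerns (counting, caller tracking, timing) live here,
        # not in the application handler.
        self.request_count += 1
        self.callers.append(caller)
        start = time.perf_counter()
        try:
            return self.handler(payload)
        finally:
            self.latencies_ms.append((time.perf_counter() - start) * 1000)


def orders_logic(payload: str) -> str:
    # Pure business logic: it knows nothing about metrics or proxies.
    return f"order accepted: {payload}"


orders = Sidecar("orders", orders_logic)
reply = orders.handle(caller="checkout", payload="book-42")
print(reply, orders.request_count)
```

The application function stays unchanged if the metrics or access rules change, which is the point of moving those concerns into the proxy layer.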

Service meshes offer features like traffic management, enabling capabilities such as load balancing, circuit breaking, and A/B testing. They also enhance security by providing encryption and authentication between services, ensuring a secure communication channel. Additionally, service meshes offer observability tools, including metrics, logging, and tracing, allowing for comprehensive monitoring and troubleshooting of microservices.

Popular service mesh implementations include Istio, Linkerd, and Consul Connect. These frameworks provide a standardised way to manage the complexities of microservices communication, offering a consistent set of features and controls across various programming languages and platforms.

Microservices architecture using service mesh

One of the key advantages of microservices architecture is that small, separate groups of developers can build different use cases as individual microservices, each using its own tools and programming language. The services communicate with each other to form an integrated solution. This lets each microservice be built, upgraded, and deployed independently, and fail individually, without disturbing other services. However, service-to-service communication on its own is not flexible enough in a microservices architecture. A service mesh architecture addresses this by letting a large number of discrete services communicate with each other to form an integrated, functional application at enterprise scale.

Linkerd was the first tool to provide a service mesh networking architecture, and Istio is another widely used open source service mesh.

Service mesh – Do’s and Don’ts

The following are the do’s of a service mesh.

- Define clear service boundaries: Clearly define the boundaries of your microservices to ensure effective communication. This clarity helps in configuring and managing the service mesh components efficiently.

- Gradual adoption: Introduce service mesh incrementally rather than attempting a complete overhaul. Gradual adoption allows for thorough testing and minimises disruptions to existing services.

- Use traffic management features: Leverage traffic management features like load balancing, circuit breaking, and canary deployments provided by the service mesh to enhance the reliability and scalability of your microservices.

- Implement security measures: Take advantage of the service mesh’s security features, including encryption and mutual TLS authentication, to secure communication between microservices.

- Prioritise observability: Utilise observability tools for monitoring, logging, and tracing. This ensures better insights into the performance and behaviour of your microservices, aiding in troubleshooting and optimisation.
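The canary deployment idea mentioned in the list above can be sketched as weighted routing between two versions of a service. This is only an illustration under assumed values: the version names `v1` and `v2`, the 90/10 split, and the `pick_version` helper are hypothetical, and a real mesh applies such weights in its proxies through configuration rather than in application code.

```python
import random
from collections import Counter
from typing import Dict


def pick_version(weights: Dict[str, int], rng: random.Random) -> str:
    """Choose a service version in proportion to its configured weight."""
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


# Hypothetical canary split: most traffic stays on v1, a small share tries v2.
weights = {"v1": 90, "v2": 10}
rng = random.Random(7)  # seeded so repeated runs give the same sequence

counts = Counter(pick_version(weights, rng) for _ in range(10_000))
print(counts)
```

Shifting the weights step by step (for example 90/10, then 50/50, then 0/100) is one way to roll out a new version gradually while watching its error rate.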

A few don’ts of a service mesh are:

- Overlook service mesh overhead: Be cautious of the additional latency and resource overhead introduced by the service mesh proxies. Carefully assess the impact on performance and optimise configurations accordingly.

- Neglect regular updates and maintenance: Stay updated with the latest releases and security patches for your chosen service mesh implementation. Regularly update configurations and address potential vulnerabilities to ensure a secure and well-maintained infrastructure.

- Assume service mesh solves all problems: While a service mesh provides valuable features, it is not a one-size-fits-all solution. Understand its capabilities and limitations, and complement it with other tools and practices as needed.

- Ignore developer involvement: Service mesh configurations often affect developers’ daily workflows. Involve them in the decision-making process, provide necessary training, and ensure they understand how to work effectively within the service mesh environment.

- Underestimate the learning curve: Implementing a service mesh introduces a learning curve for both operations and development teams. Invest in training and documentation to facilitate a smooth transition and prevent unnecessary hurdles.
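The proxy overhead mentioned in the first point can be estimated with simple arithmetic. The sketch below assumes that every service-to-service hop passes through two proxies (the caller's sidecar and the callee's sidecar) and uses a made-up per-proxy latency of 1 ms; both figures are assumptions for illustration and should be replaced with measurements from your own environment.

```python
def added_latency_ms(hops: int, per_proxy_ms: float, proxies_per_hop: int = 2) -> float:
    """Estimate extra latency added by sidecars along a chain of service calls."""
    return hops * proxies_per_hop * per_proxy_ms


# A request that crosses 4 service-to-service hops, with an assumed 1 ms per proxy.
print(added_latency_ms(hops=4, per_proxy_ms=1.0))  # 8.0
```

Even a small per-proxy cost adds up along deep call chains, which is why the impact is worth measuring before and after adopting a mesh.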

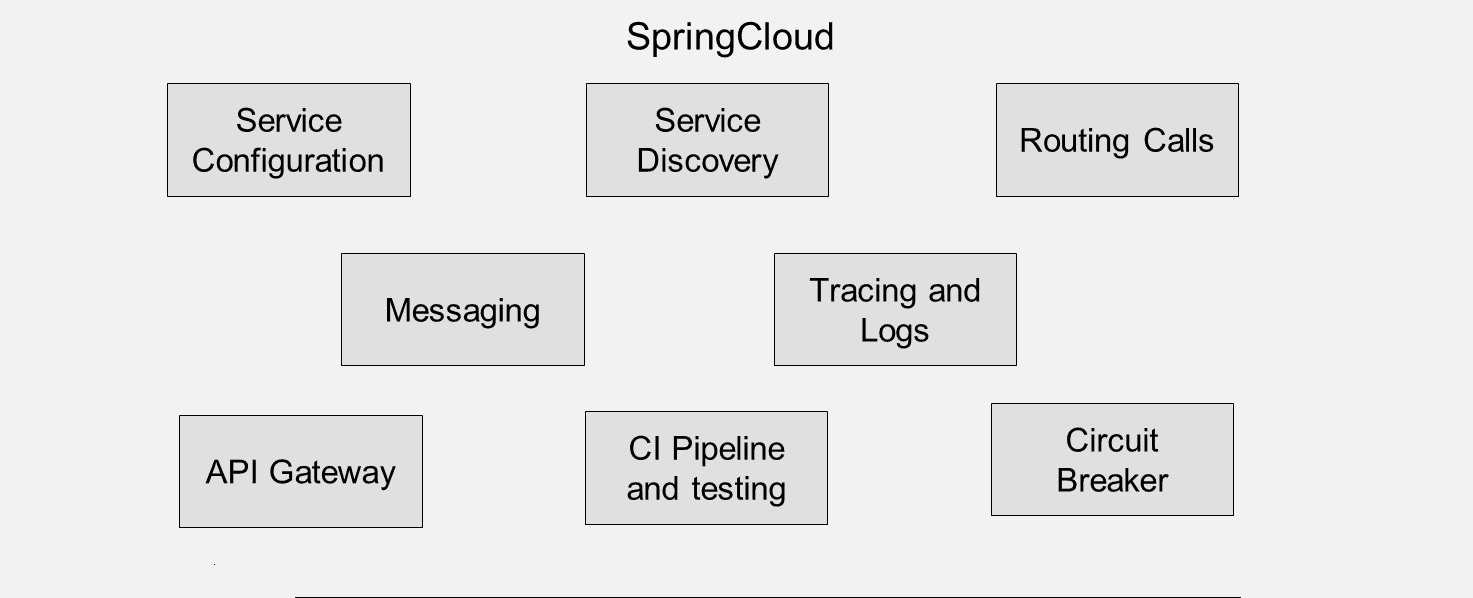

Figure 2: Spring Cloud

Spring Boot is a convenient framework for Java programmers to develop microservices and cloud native apps quickly. It can also create web applications with embedded Tomcat or Jetty servers, reducing the need to deploy WAR or EAR files. However, designing Spring Boot applications for the cloud brings additional overhead in configuration and security setup. As more microservices are deployed in the cloud, these Spring Boot applications need additional steps for service discovery, inter-service communication, and gateway security.

This challenge can be addressed by using the Spring Cloud framework on top of Spring Boot, which provides inter-service communication and makes the applications suitable for cloud architecture. Configuration, circuit breakers, service discovery, API routing, messaging, monitoring and logging, call tracing, and service testing are integral features of Spring Cloud. These components are loosely coupled, reducing operational complexity. The circuit breaker design pattern provides fault tolerance and resilience, helping to maintain high availability even when services experience downtime or slowdowns that add latency to calls.
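The circuit breaker pattern can be sketched in a few lines. The example below is a generic, minimal illustration in Python, not Spring Cloud's API; the class names, the failure threshold, and the reset timeout are all hypothetical choices. After a number of consecutive failures the breaker opens and fails fast; once the timeout has passed it lets one trial call through.

```python
import time
from typing import Callable, Optional


class CircuitOpenError(RuntimeError):
    """Raised when the breaker is open and the call is rejected immediately."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3, reset_timeout_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout_s = reset_timeout_s
        self.clock = clock  # injectable so the timeout can be simulated
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, func: Callable[[], str]) -> str:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout_s:
                raise CircuitOpenError("circuit open; failing fast")
            # Timeout elapsed: half-open, so one trial call is allowed through.
        try:
            result = func()
        except Exception:
            self.failures += 1
            # Open on reaching the threshold, or re-open if the trial call failed.
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result


def flaky() -> str:
    raise ConnectionError("downstream unavailable")


now = [0.0]  # simulated clock, in seconds
breaker = CircuitBreaker(failure_threshold=2, reset_timeout_s=10.0, clock=lambda: now[0])
for _ in range(2):
    try:
        breaker.call(flaky)
    except ConnectionError:
        pass
print("open" if breaker.opened_at is not None else "closed")
```

While the breaker is open, callers get an immediate error instead of waiting on a slow or unavailable service, which keeps one failing dependency from tying up the rest of the system.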

Technically, Spring Cloud can be compared with a service mesh such as Istio, as both provide inter-service communication, circuit breakers, and service discovery for microservices architectures. Spring Cloud has some advantages, such as annotation-based coding, flexible policy definition, and the ability to be stateful or stateless, but it adds overhead because it instruments service calls to add tracing data to logs.

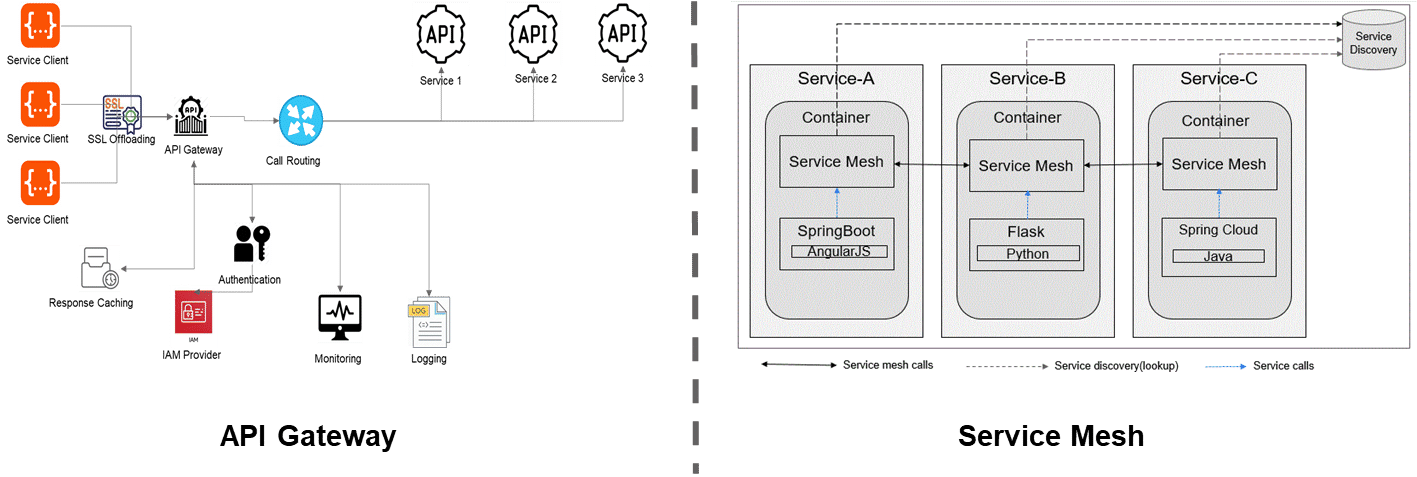

API gateway vs service mesh architecture

When developing microservices on cloud platforms, architects often draw an analogy between service mesh architecture and API gateway architecture. In reality, the two serve different purposes and should not be treated as interchangeable in architectural design.

An API gateway is commonly used in API development, acting as the routing interface between API services and the client calls (requests). It can be utilised for service discovery, security features like IAM authentication, role-based access, response caching for faster request processing, and integrated logging and monitoring services.

In contrast, a service mesh is a network of proxies, also called sidecars, that improves service-to-service communication. In network terminology, client-to-server calls are called north-south traffic and server-to-server calls are called east-west traffic. In other words, an API gateway handles north-south traffic and a service mesh handles east-west traffic.
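The north-south and east-west distinction can be expressed as a small classification rule. The sketch below is illustrative only: the service names in `MESH_SERVICES` and the helper functions are hypothetical, and it assumes that any request with an endpoint outside the mesh counts as north-south.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    source: str
    destination: str


# Hypothetical set of services that run inside the mesh.
MESH_SERVICES = {"orders", "payments", "inventory"}


def traffic_direction(req: Request) -> str:
    """East-west if both endpoints are internal services, otherwise north-south."""
    if req.source in MESH_SERVICES and req.destination in MESH_SERVICES:
        return "east-west"
    return "north-south"


def handled_by(req: Request) -> str:
    """Map the traffic direction to the component that typically manages it."""
    return "service mesh" if traffic_direction(req) == "east-west" else "API gateway"


print(handled_by(Request("mobile-app", "orders")))  # API gateway
print(handled_by(Request("orders", "payments")))    # service mesh
```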

A service mesh handles the internal brokering of resources, sitting between network communication channels and application services to accelerate inter-service communication. When dealing with a large number of microservices/APIs with extensive inter-service communication, a service mesh architecture is suitable for better resource handling and communication patterns.

An API gateway manages external traffic from service clients to internal resources such as APIs/microservices. It enables routing to API services and exposes business functions to the external world. When you have many service client calls (request processing) to API services, you can choose an API gateway to handle the traffic efficiently, along with response caching and internal load balancing, ensuring high availability without compromising security and performance.

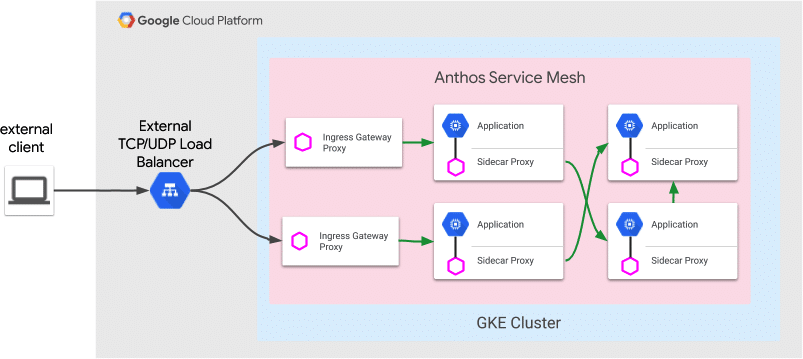

Anthos Service Mesh

Google’s native service mesh is available as Anthos Service Mesh. It features one or more control planes, offered as fully managed services that can be configured with Google handling patches and upgrades, scalability, and security, and a data plane that handles communication between workload instances.

Anthos Service Mesh is based on the Istio framework, and its control plane is based on a consolidated monolithic binary called Istiod. It uses proxies to monitor all traffic in service communications. The service mesh provides fine-grained control over service communications and proxies, and load balancing between services. It controls traffic flows in both directions: traffic entering the mesh (ingress) and traffic leaving the mesh for outside services (egress).

Anthos Service Mesh integrates natively with Cloud Monitoring, Cloud Logging, and Cloud Trace, offering observability insights. It employs security features such as mutual transport layer security (mTLS) for authenticating service peers. It can manage managed instance groups (MIGs) of Compute Engine instances and Google Kubernetes Engine (GKE) clusters within the service mesh for reliable proxied communication.

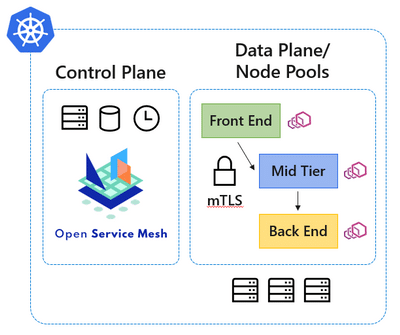

Open Service Mesh (OSM) add-on to enhance AKS features

Microsoft initially developed Open Service Mesh (OSM) for fully managed service mesh features and later donated it to the Cloud Native Computing Foundation (CNCF) as an open source project. It serves as an Envoy-based control plane on Kubernetes, managing and configuring infrastructure services dynamically.

OSM was included as an add-on to Azure Kubernetes Service (AKS) as a lightweight service mesh feature for Kubernetes applications. It provides access control policies, monitoring, and debugging for these applications. In March 2021, the OSM add-on was announced as publicly available in AKS, enabling service-to-service communication on the Azure platform using mTLS, which provides two-way authenticated and encrypted TLS communication between services.
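What makes TLS "mutual" is that the server also demands a certificate from the client. In a service mesh the sidecar proxies set this up on behalf of the application, so the following is not OSM's implementation; it is a minimal sketch using Python's standard `ssl` module to show the server-side setting involved. The function name and the file path parameters are hypothetical.

```python
import ssl
from typing import Optional


def make_mtls_server_context(cert_file: Optional[str] = None,
                             key_file: Optional[str] = None,
                             ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Build a server-side TLS context that also requires a client certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # CERT_REQUIRED on the server side is what turns one-way TLS into mutual TLS:
    # the handshake fails unless the client presents a trusted certificate.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file and key_file:
        # The server's own identity (hypothetical paths supplied by the caller).
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if ca_file:
        # The certificate authority used to validate client certificates.
        ctx.load_verify_locations(cafile=ca_file)
    return ctx


ctx = make_mtls_server_context()
print(ctx.verify_mode == ssl.CERT_REQUIRED)
```

A mesh typically also issues and rotates the certificates automatically, which is the part that is hardest to do by hand in each service.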

OSM uses automatic sidecar injection of the Envoy proxy to easily on-board new applications into the service mesh. Each service is managed with fine-grained access control policies, ensuring better security and flexible service management. OSM also provides native certificate management for mutual authentication using Key Vault services, and supports third-party certificates for enhanced security.

The Azure OSM add-on offers a standard interface for service mesh configuration on Kubernetes using the Service Mesh Interface (SMI). This can be used for building a traffic policy to encrypt transport across services, traffic management to shift traffic between services, and traffic telemetry to monitor and record key metrics such as latency and error rate between services.
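The two telemetry metrics named above, latency and error rate, can be computed from per-call records as in the sketch below. It is a simplified illustration, not the SMI metrics API: the `CallRecord` fields, the sample values, and the choice to count HTTP 5xx responses as errors are all assumptions.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass(frozen=True)
class CallRecord:
    source: str
    destination: str
    latency_ms: float
    status: int  # HTTP status code of the response


def error_rate(records: List[CallRecord]) -> float:
    """Fraction of calls that ended in a server error (5xx)."""
    if not records:
        return 0.0
    return sum(1 for r in records if r.status >= 500) / len(records)


def mean_latency_ms(records: List[CallRecord]) -> float:
    """Average latency across the recorded calls."""
    return mean(r.latency_ms for r in records) if records else 0.0


# Made-up sample of calls from a hypothetical "orders" to "payments" service.
records = [
    CallRecord("orders", "payments", 10.0, 200),
    CallRecord("orders", "payments", 20.0, 200),
    CallRecord("orders", "payments", 30.0, 503),
    CallRecord("orders", "payments", 40.0, 200),
]
print(error_rate(records))       # 0.25
print(mean_latency_ms(records))  # 25.0
```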

Security considerations in service mesh

Implementing a service mesh introduces various security considerations to ensure the confidentiality, integrity, and availability of microservices communication. Some of these are listed below.

- Encryption and mutual TLS (mTLS): Enable encryption for communication between microservices using mTLS. This ensures that data exchanged between services is encrypted and authenticated, preventing unauthorised access or tampering.

- Identity and access management (IAM): Implement strong identity and access controls within the service mesh. Utilise mechanisms like role-based access control (RBAC) to restrict and manage permissions for service-to-service communication.

- Secure service discovery: Ensure that service discovery mechanisms within the service mesh are secure. Unauthorised services should not be able to register or discover services, preventing potential security breaches.

- Traffic authorisation and policies: Enforce fine-grained access control policies for service communication. Use policies to define which services are allowed to communicate with each other and under what conditions, reducing the attack surface.

- Observability for security monitoring: Leverage observability tools provided by the service mesh for security monitoring. Monitor metrics, logs, and traces to detect and respond to security incidents promptly.

- Regularly update and patch: Keep the service mesh software up-to-date with the latest security patches and updates. Regularly review and update configurations to address potential vulnerabilities and ensure a secure deployment.

- Secure configuration practices: Implement secure configuration practices for the service mesh components. This includes securing the control plane, securing communication channels, and configuring access controls to align with security best practices.

- Secure the control plane: The control plane of the service mesh, which includes components like the API server and service registry, must be adequately secured. Implement authentication, authorisation, and encryption for control plane communications.

- Incident response and forensics: Develop and document an incident response plan specific to the service mesh. Ensure that the team is prepared to investigate and respond to security incidents effectively.

- Service mesh proxy security: The proxies handling communication between microservices must be configured securely. Regularly review and update proxy configurations, and implement measures like rate limiting and access control to protect against potential threats.
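The traffic authorisation point in the list above can be sketched as a default-deny allow-list of service pairs. This is a minimal illustration, not any particular mesh's policy format; the service names, the `ALLOWED_CALLS` set, and the `is_allowed` helper are hypothetical.

```python
from typing import FrozenSet, Tuple

# Each rule is a (source service, destination service) pair that may communicate.
AllowRule = Tuple[str, str]

# Hypothetical policy: anything not listed here is denied.
ALLOWED_CALLS: FrozenSet[AllowRule] = frozenset({
    ("checkout", "orders"),
    ("orders", "payments"),
})


def is_allowed(source: str, destination: str,
               rules: FrozenSet[AllowRule] = ALLOWED_CALLS) -> bool:
    """Default deny: a call is permitted only if an explicit rule allows it."""
    return (source, destination) in rules


print(is_allowed("checkout", "orders"))    # True
print(is_allowed("checkout", "payments"))  # False
```

Starting from default deny and adding explicit rules keeps the attack surface small, because a compromised service can reach only the services it was already permitted to call.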

By addressing these security considerations, organisations can establish a robust security posture for their service mesh deployments, safeguarding the communication and data flow within their microservices architecture. Regular security audits and continuous monitoring are essential components of maintaining a secure service mesh environment.