Amazon offers a range of options for companies to run containerised solutions and get products to market faster. ECS, EKS, Fargate, Spinnaker and Amazon EKS Anywhere…the choice is varied.

AWS provides multiple choices for a containerised solution in the form of Elastic Container Service (ECS), Elastic Kubernetes Service (EKS) and AWS Fargate. ECS and EKS are primarily meant for container orchestration: ECS is an AWS-native managed service, while EKS is a managed service built on Kubernetes.

When designing a Kubernetes container solution with Amazon EKS, the focus is usually on services and application groups, with little emphasis placed on cost benefit and optimisation. The autoscaling feature handles automatic scale-in and scale-out of pod instances in EKS clusters.

Amazon EKS is typically chosen for its managed Kubernetes control plane, with worker nodes running on Amazon EC2 instances that can be scaled quickly within the cluster.

Amazon ECS and EKS

If you are familiar with Docker container handling, you can choose an ECS-based architecture. If you are familiar with the Kubernetes framework, you can opt for an EKS-based architecture.

If you seek a cloud-agnostic solution, EKS is preferable: because it runs standard Kubernetes, workloads can also use the equivalent services offered by other cloud service providers (with little or no disruption or change in the level of service). Also, if you are looking for a ‘bin packing’ solution with automatic resource management for the containers, EKS facilitates automated deployment, scaling of services, and self-management of containerised applications.
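
To make the ‘bin packing’ idea concrete, here is a minimal sketch. It is not the Kubernetes scheduler's actual algorithm; it is a simple first-fit-decreasing heuristic that places pod CPU requests onto nodes of a fixed size. The pod names, millicore figures and node capacity are all hypothetical.

```python
from typing import Dict, List


def first_fit_decreasing(pod_cpu_m: Dict[str, int], node_capacity_m: int) -> List[List[str]]:
    """Pack pods (CPU requests in millicores) onto equal-sized nodes.

    Returns one list of pod names per node that the heuristic opened.
    """
    nodes: List[List[str]] = []  # pod names placed on each node
    free: List[int] = []         # remaining millicores on each node

    # Place the largest requests first; ties are broken by name for determinism.
    for name, cpu in sorted(pod_cpu_m.items(), key=lambda kv: (-kv[1], kv[0])):
        if cpu > node_capacity_m:
            raise ValueError(f"{name} requests more CPU than a node provides")
        for i, remaining in enumerate(free):
            if cpu <= remaining:       # first node with enough room
                nodes[i].append(name)
                free[i] -= cpu
                break
        else:                          # no existing node fits: open a new one
            nodes.append([name])
            free.append(node_capacity_m - cpu)
    return nodes


# Hypothetical workload: five pods on nodes with 2000m of allocatable CPU.
pods = {"api": 1200, "worker": 900, "cache": 700, "cron": 500, "proxy": 300}
placement = first_fit_decreasing(pods, node_capacity_m=2000)
print(placement)
```

With these made-up numbers the five pods fit on two nodes. A real scheduler also weighs memory, affinity rules and other constraints that this sketch ignores.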

AWS Fargate vs EKS

For those new to container-native development, Fargate is a popular way to run containers without managing servers. It can be cost-effective as you pay for the vCPU and memory your containers request while they run, rather than for the underlying EC2 instances.

Fargate is a serverless compute engine that runs containers for ECS and EKS, rather than a separate orchestrator. It removes the need to configure and manage the underlying infrastructure (as serverless computing typically does, here applied to a container service) and enables faster time to market.

EKS Distro, however, helps create Kubernetes clusters anywhere, using open source Kubernetes components such as kube-controller-manager, etcd, CoreDNS and kubelet; clusters can be set up with tools such as kOps. This allows you to deploy container images on-premises, in a private cloud, or in any other cloud, including on AWS EC2 or EKS.

A public container registry called Amazon Elastic Container Registry Public (ECR Public) can be browsed at https://gallery.ecr.aws/ with or without an AWS account. Images hosted there can be pulled for any container deployment. This turns ECR into a community resource for storing, managing, sharing and deploying container images, and saves time by allowing reuse of images already available in the public registry.

Cost factors in EKS

Effective cost management is crucial for any organisation utilising cloud-based container orchestration platforms like EKS. With EKS, achieving optimal cost efficiency involves understanding the various cost factors, implementing monitoring tools, and adopting best practices for resource utilisation. The major costs are as follows.

- Cluster costs: A fixed hourly rate is paid for each EKS cluster, regardless of usage.

- Node costs: EKS worker nodes run on standard EC2 instances, incurring regular EC2 costs based on instance type and usage.

- Networking and storage: These are costs associated with network traffic and the storage consumed by your EKS cluster.

- Operations tools: Additional costs may arise for services like Amazon Managed Service for Prometheus (AMP) used for monitoring.

- Third-party services: Any external tools or services integrated with EKS contribute to the overall cost.
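
The cost factors above can be combined into a rough monthly estimate. The sketch below is only illustrative: every rate and amount is a hypothetical placeholder rather than a quoted AWS price, and the 730-hour month is a common approximation.

```python
from dataclasses import dataclass
from typing import Dict

HOURS_PER_MONTH = 730  # approximation: 8760 hours per year / 12 months


@dataclass
class EksCostInputs:
    cluster_rate_per_hour: float   # fixed fee per EKS cluster (placeholder)
    node_rate_per_hour: float      # EC2 price of the chosen instance type (placeholder)
    node_count: int
    networking_and_storage: float  # estimated monthly spend
    operations_tools: float        # estimated monthly spend, e.g. monitoring
    third_party: float             # estimated monthly spend


def monthly_cost(c: EksCostInputs) -> Dict[str, float]:
    """Return a per-category monthly breakdown plus the total."""
    breakdown = {
        "cluster": c.cluster_rate_per_hour * HOURS_PER_MONTH,
        "nodes": c.node_rate_per_hour * c.node_count * HOURS_PER_MONTH,
        "networking_and_storage": c.networking_and_storage,
        "operations_tools": c.operations_tools,
        "third_party": c.third_party,
    }
    breakdown["total"] = sum(breakdown.values())
    return {name: round(value, 2) for name, value in breakdown.items()}


# Hypothetical inputs for a small three-node cluster.
estimate = monthly_cost(EksCostInputs(
    cluster_rate_per_hour=0.10, node_rate_per_hour=0.05, node_count=3,
    networking_and_storage=40.0, operations_tools=25.0, third_party=15.0))
for name, value in estimate.items():
    print(f"{name:<24}{value:>10.2f}")
```

Separating the fixed cluster fee from the per-node cost makes it easy to see which line grows as the cluster scales out.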

Monitoring tools for EKS cost management

- AWS Cost Management: This provides general cost visibility and usage reports for all AWS services, including EKS.

- Kubecost: This open source tool is specifically designed for Kubernetes cost monitoring, offering detailed insights into resource allocation and cost attribution per namespace, cluster, and pod.

- Amazon Managed Service for Prometheus (AMP): Integrates with EKS to gather and analyse cluster metrics, enabling customised dashboards and cost analysis.

Best practices for EKS cost optimisation

- Right-sizing clusters: Choose the most appropriate EC2 instance type based on your workload needs, avoiding overprovisioning.

- Scaling on demand: Leverage Auto Scaling groups to dynamically adjust resource allocation based on real-time demand.

- Utilising Spot Instances: Consider using Spot Instances for non-critical workloads to benefit from significant cost savings.

- Resource quotas and limits: Implement resource quotas and limits to prevent uncontrolled resource usage and cost spikes.

- Container optimisation: Optimise your container images and applications for minimal resource consumption.

- Automated shutdown: Implement automated shutdown processes for idle workloads to avoid unnecessary charges.

- Continuous monitoring and analysis: Regularly monitor your EKS resource usage and cost trends to identify potential savings opportunities.
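
As one example of the resource quotas mentioned above, the following sketch builds a Kubernetes ResourceQuota manifest as a Python dictionary and prints it as JSON, which kubectl accepts as well as YAML. The namespace name and the quota values are hypothetical and should be sized to your own workloads.

```python
import json


def resource_quota(namespace: str, cpu_requests: str, memory_requests: str,
                   cpu_limits: str, memory_limits: str, max_pods: int) -> dict:
    """Build a ResourceQuota manifest that caps one namespace."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{namespace}-quota", "namespace": namespace},
        "spec": {
            "hard": {
                "requests.cpu": cpu_requests,
                "requests.memory": memory_requests,
                "limits.cpu": cpu_limits,
                "limits.memory": memory_limits,
                "pods": str(max_pods),
            }
        },
    }


# Hypothetical limits for a namespace called "team-a".
quota = resource_quota("team-a", cpu_requests="4", memory_requests="8Gi",
                       cpu_limits="8", memory_limits="16Gi", max_pods=20)
print(json.dumps(quota, indent=2))
```

The printed document could be saved to a file and applied with kubectl; once a quota is in place, pods in that namespace that would exceed the totals are rejected at creation time.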

Autoscalers in EKS

Autoscaling is a crucial aspect of managing EKS clusters efficiently. It ensures you have enough resources to run your workloads while avoiding unnecessary costs for idle resources. There are two main approaches to autoscaling in EKS.

Horizontal Pod Autoscaler (HPA)

Purpose: Scales the number of pods of a specific deployment based on resource metrics like CPU or memory usage.

Mechanism: Monitors the resource consumption of pods within a deployment and automatically scales the number of pods up or down to maintain the desired resource utilisation levels.

Benefits:

- Ensures application performance by scaling pods to meet demand.

- Reduces resource costs by scaling down pods when not needed.

- Easy to set up and configure.

Limitations:

- Can only scale pods within a single deployment.

- Doesn’t factor in node resource availability.
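
The scaling rule that HPA applies can be sketched in a few lines. This follows the formula given in the Kubernetes documentation (desired replicas = ceil(current replicas × current metric / target metric)), with a tolerance band inside which no scaling happens. The 0.1 tolerance and the example numbers are assumptions for illustration, and the real controller also handles details such as pod readiness and stabilisation windows that are left out here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int,
                     max_replicas: int, tolerance: float = 0.1) -> int:
    """Return the replica count an HPA-style rule would aim for."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Inside the tolerance band, leave the deployment alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Never go outside the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# Average CPU at 90% against a 60% target: scale 4 pods out to 6.
print(desired_replicas(4, 90, 60, min_replicas=2, max_replicas=10))
# Average CPU at 30% against a 60% target: scale 4 pods in to 2.
print(desired_replicas(4, 30, 60, min_replicas=2, max_replicas=10))
```

Note that the calculation looks only at pod metrics, which is why HPA on its own cannot tell whether the nodes have room for the extra replicas.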

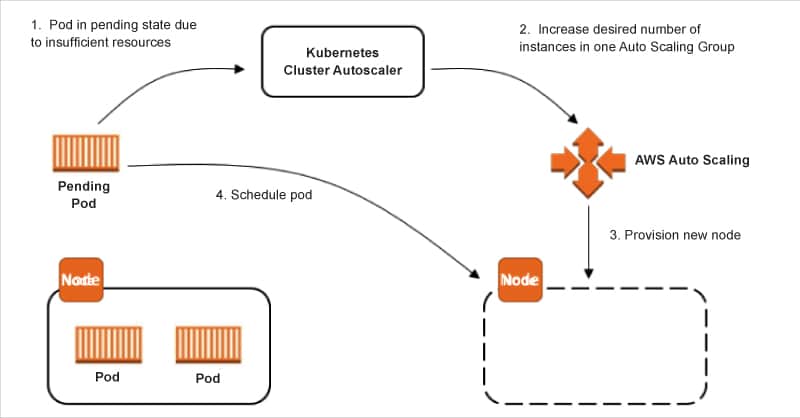

Cluster Autoscaler

Purpose: Scales the number of worker nodes in your EKS cluster based on pod resource requests and availability.

Mechanism: Monitors the pods in the cluster and checks for pods that cannot be scheduled due to insufficient resources. If such pods exist, the Cluster Autoscaler automatically adds new nodes to the cluster. It also removes idle nodes to optimise resource utilisation.

Benefits:

- Scales the entire cluster based on overall resource needs.

- Supports heterogeneous node pools with different configurations.

- Integrates with various cloud providers like AWS and Azure.

Limitations:

- More complex to set up and manage than HPA.

- May not scale quickly enough for sudden spikes in resource demand.
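
The decision the Cluster Autoscaler makes can be approximated as follows. This is a deliberately simplified sketch, not the project's real algorithm: it considers CPU only, estimates the number of new nodes by dividing total pending requests by node size, and treats any node below an assumed 50% utilisation as a scale-down candidate. Node names and figures are hypothetical.

```python
import math
from typing import Dict, List, Optional, Tuple, Union

Decision = Tuple[str, Optional[Union[int, List[str]]]]


def plan_scaling(pending_pod_cpu_m: List[int],
                 node_requested_cpu_m: Dict[str, int],
                 node_allocatable_cpu_m: int,
                 scale_down_threshold: float = 0.5) -> Decision:
    """Decide whether to add nodes, flag idle nodes, or do nothing."""
    if pending_pod_cpu_m:
        # Unschedulable pods exist: estimate how many nodes they need.
        # Rough estimate that ignores memory, affinity and fragmentation.
        needed = math.ceil(sum(pending_pod_cpu_m) / node_allocatable_cpu_m)
        return ("scale_up", needed)

    # No pending pods: look for nodes whose requested CPU is below the threshold.
    idle = sorted(
        name for name, requested in node_requested_cpu_m.items()
        if requested / node_allocatable_cpu_m < scale_down_threshold
    )
    if idle:
        return ("scale_down_candidates", idle)
    return ("no_change", None)


# Three pending pods that do not fit on the existing node.
print(plan_scaling([500, 1500, 900], {"n1": 1800}, node_allocatable_cpu_m=2000))
# Nothing pending, but two lightly used nodes.
print(plan_scaling([], {"n1": 1800, "n2": 400, "n3": 900}, node_allocatable_cpu_m=2000))
```

Scale-up is checked first because unschedulable pods mean workloads are waiting, whereas removing idle nodes is only a cost optimisation.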

Implementing autoscaling in EKS

Deploying the Cluster Autoscaler

- Use the official Helm chart or Kubernetes manifest to deploy the Cluster Autoscaler in your EKS cluster.

- Configure the Cluster Autoscaler to target specific node groups, either by naming them explicitly or through tag-based auto-discovery.

Configuring autoscaling groups

- Create Auto Scaling groups (ASGs) for your EKS worker nodes.

- Configure the ASG settings like instance type, scaling policies, and min/max limits.

- Associate the ASGs with the Cluster Autoscaler.
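
The settings listed above can be captured in a small data structure before they are handed to whichever provisioning tool you use. The sketch below is illustrative: the class and field names are hypothetical, and the two tag keys follow the auto-discovery convention described in the Cluster Autoscaler's AWS documentation, so check them against the version you deploy.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class NodeGroupSettings:
    cluster_name: str
    instance_type: str
    min_size: int
    max_size: int
    desired_capacity: int

    def validate(self) -> None:
        """Reject limits that an Auto Scaling group could not satisfy."""
        if not (0 <= self.min_size <= self.desired_capacity <= self.max_size):
            raise ValueError("expected 0 <= min_size <= desired_capacity <= max_size")

    def autoscaler_tags(self) -> Dict[str, str]:
        """Tags that let the Cluster Autoscaler discover this group (assumed convention)."""
        return {
            "k8s.io/cluster-autoscaler/enabled": "true",
            f"k8s.io/cluster-autoscaler/{self.cluster_name}": "owned",
        }


# Hypothetical node group for a cluster named "demo-cluster".
group = NodeGroupSettings(cluster_name="demo-cluster", instance_type="m5.large",
                          min_size=1, max_size=5, desired_capacity=2)
group.validate()
print(group.autoscaler_tags())
```

Validating the limits up front catches configurations such as a desired capacity above the maximum before any resources are created.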

Monitoring and optimisation

- Use tools like Amazon CloudWatch or Prometheus to monitor cluster resource usage and scaling activity.

- Fine-tune the Cluster Autoscaler configuration based on resource needs and workload patterns.

- Consider implementing HPA for specific workloads requiring finer-grained scaling control.

The following are the components shown in Figure 1.

Pods: Represent the application workloads running in the cluster.

HPA: Monitors resource usage and scales pods within a deployment.

Cluster Autoscaler: Monitors unschedulable pods and triggers node provisioning/termination.

Autoscaling groups: Manage the life cycle of EC2 instances based on Cluster Autoscaler instructions.

CloudWatch: Provides monitoring and insights into resource utilisation and scaling activity.

Amazon EKS Anywhere for a hybrid cloud platform

Cloud service providers like Microsoft Azure, AWS and Google Cloud have been introducing new features for multi-cloud and hybrid cloud architecture.

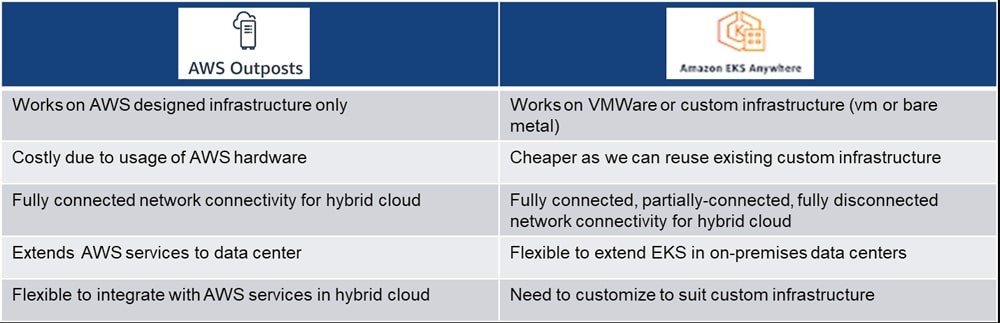

Google Anthos and Azure Arc support hybrid cloud architectures, allowing cloud service deployment on private clouds like VMware or OpenShift. Amazon offers a similar capability through Outposts, which was originally designed for AWS infrastructure and has been extended to private cloud platforms, enabling AWS services such as EC2 clusters to run in private cloud environments.

Now, AWS has tried to overcome the demerits of Outposts (Table 1) with a more advanced service, Amazon EKS Anywhere. It extends the Elastic Kubernetes Service (EKS) distribution for use on private cloud infrastructure in a custom data centre environment. Until now, EKS services could be deployed in a data centre using EKS Distro (EKS-D), which can run the control plane on custom infrastructure and the data plane on existing custom virtual machines or bare metal servers.

AWS Outposts can be used to deploy EKS instances on AWS infrastructure, using the same architecture of control plane, data plane and worker nodes or instances. AWS EKS Anywhere makes it easier to migrate existing Kubernetes services running on-premises (like VMware Tanzu Kubernetes Grid) to an AWS EKS distribution.

AWS EKS Anywhere can be considered a direct competitor of Google Anthos or Azure Arc. It runs as a self-managed service on VMware vSphere and is deployable on any cloud platform. It facilitates communication between application services through proxying with App Mesh, and supports Kubernetes distribution and deployment with Flux.

Spinnaker for continuous delivery

Spinnaker is a popular continuous deployment/delivery framework suitable for multi-cloud environments. It supports pipelines triggered by Jenkins, Travis CI, Docker or Git events. Conceived at Netflix, Spinnaker is now part of the Netflix open source software (OSS) portfolio.

For Kubernetes deployment on the AWS platform, Spinnaker is flexible and integrates quickly with Jenkins for continuous integration and deployment to multi-stage environments. The solution blueprint involves developing code in a Cloud9 workspace and checking it into GitHub, which triggers a code build in Jenkins; the build creates a container image using a CloudFormation template or build script. The image is pushed to the Elastic Container Registry (ECR), and the arrival of a new Docker image in ECR triggers the Spinnaker pipeline.

The Spinnaker pipeline involves configuring the Kubernetes deployment, generating Kubernetes deployment manifests (the Spinnaker ‘bake’ step) for different stages like dev, test and prod, and then deploying the image to Kubernetes pods in the development environment. After validation checks, manual approval (or judgement) is required to promote the image to the next stage (test and then production). This ensures that the application container is not promoted to the next stage without validation or approval.
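
The promotion rule described above can be expressed as a small gate function. This is a conceptual sketch only and does not use Spinnaker's API or pipeline format; the stage names come from the text, while the function and parameter names are hypothetical.

```python
from typing import Optional

STAGES = ["dev", "test", "prod"]  # promotion order


def next_stage(current: str, validation_passed: bool, approved: bool) -> Optional[str]:
    """Return the stage an image may be promoted to, or None if it must stay put."""
    index = STAGES.index(current)  # raises ValueError for an unknown stage
    if index == len(STAGES) - 1:
        return None                # already in production
    if validation_passed and approved:
        return STAGES[index + 1]
    return None                    # blocked: missing validation or approval


print(next_stage("dev", validation_passed=True, approved=True))    # promoted to test
print(next_stage("test", validation_passed=True, approved=False))  # held in test
```

Requiring both flags means an image that passes automated checks still waits for the manual judgement before it moves on.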

Spinnaker uses Helm v2 to manage and bake Kubernetes deployments. It has a Helm template for application deployment and YAML files for the dev, test and prod stages. By integrating Spinnaker with Jenkins, we can easily create a container deployment pipeline with a CI/CD toolchain for faster time to market.