Modern artificial intelligence, such as ChatGPT, can mimic human behavior, and it often exhibits positive traits such as cooperation, altruism, trust, and reciprocity more strongly than people do.

In a recent study by the University of Michigan, researchers employed “behavioral” Turing tests, which assess a machine’s ability to demonstrate human-like responses and intelligence, to analyze the personality and behavior of various AI chatbots. The evaluation involved ChatGPT responding to psychological survey questions and participating in interactive games, with its choices compared to those of 108,000 individuals from over 50 countries. The study’s lead author, Qiaozhu Mei, a professor at U-M’s School of Information and College of Engineering, noted that AI’s behavior, characterized by increased cooperation and altruism, might be particularly suitable for roles that require negotiation, dispute resolution, customer service, and caregiving.

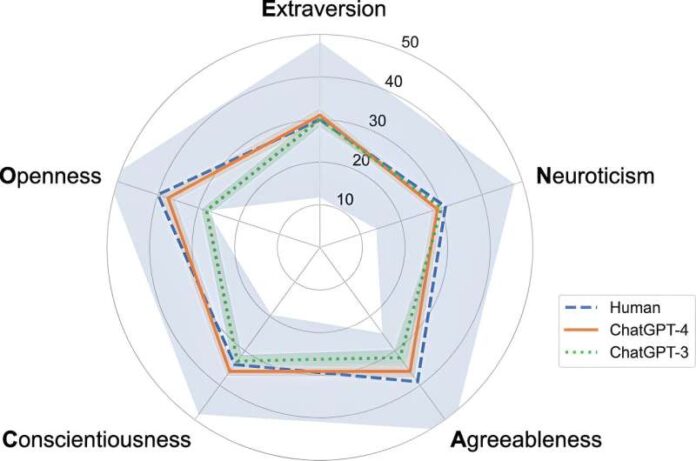

The study sheds light on the personality traits and behavioral tendencies of artificial intelligence (AI), raising questions about the future relationship between AI and humans. It introduces a formal method for testing AI’s personality traits, providing a scientific approach to understanding how AI makes choices and revealing its preferences beyond what is explicitly programmed. ChatGPT exhibits human-like traits in several areas, including cooperation, trust, reciprocity, altruism, spite, fairness, strategic thinking, and risk aversion. Surprisingly, in some respects, AI behaves more altruistically and cooperatively than humans. This finding has led the researchers to express optimism rather than concern about the future implications of AI’s behavior.

Before this study, comparing AI with humans was limited to examining their outputs, as AI models are often considered “black boxes.” However, the new method allows researchers to delve deeper into understanding how AI makes decisions, a crucial step before entrusting AI with high-stakes tasks like healthcare or business negotiations. Looking ahead, the researchers suggest several future research directions. They propose expanding behavioral tests, testing more AI models, and comparing their personalities and traits. Moreover, they advocate for educating AI to represent the diversity of human behaviors and preferences, rather than an “average human.”

The study’s implications are significant, as they help people understand when and how they can rely on AI to make decisions. Increased trust in AI’s capabilities could lead to its use in negotiation, dispute resolution, caregiving, and other tasks. However, the study also highlights the limitations of AI, particularly in tasks where the diversity of human preferences is crucial, such as product design, policymaking, or education.

The study opens up a new field of “AI behavioral science,” where researchers from different disciplines can collaborate to investigate AI’s behaviors, its relationship with humans, and its impact on society. By understanding AI’s personality traits and behavioral tendencies, we can better navigate the evolving landscape of human-AI interaction.