Generative AI and large language models (LLMs) are the future, and promise a revolution in data management. However, developing LLMs is still very costly and out of reach for smaller organisations. This is likely to change as AI becomes more commonplace.

AI technology is changing the way the world does business. Generative artificial intelligence (generative AI) refers to AI models, including LLMs, that create new content such as text, images, music, audio, and video.

LLMs are generative AI models built on a deep learning architecture known as the transformer. These models excel at natural language processing (NLP) tasks, including language translation, text classification, sentiment analysis, text generation, and question answering. LLMs are trained on vast data sets from various sources, and the largest models have hundreds of billions of parameters. They could fundamentally transform how we handle, interact with and master data.

Prominent examples of large language models include OpenAI’s GPT-3, which has a whopping 175 billion parameters, Google’s BERT, and XLNet.

Industry adoption of large language models

Generative AI is primed to make an increasingly strong impact on enterprises over the next five years.

- The market for generative AI-based LLMs is poised for remarkable growth, with estimates pointing to a staggering valuation of $188.62 billion by 2032. – Brainy Insights

- The world’s total stock of usable text data is estimated to be between 4.6 trillion and 17.2 trillion tokens. This includes all the world’s books, all scientific papers, all news articles, all of Wikipedia, all publicly available code, and much of the rest of the internet, filtered for quality (e.g., web pages, blogs, social media). Another recent estimate puts the total at 3.2 trillion tokens. One of today’s leading LLMs was trained on 1.4 trillion tokens. – Forbes

- By 2025, 30% of enterprises will implement an AI-augmented development and testing strategy, a substantial increase from 5% in 2021. – Gartner

- By 2026, generative design AI will automate 60% of the design effort for new websites and mobile apps. – Gartner

- By 2027, nearly 15% of new applications will be automatically generated by AI without human intervention. This is not happening at all today. – Gartner

- According to Accenture’s 2023 Technology Vision report, 97% of global executives agree that foundation models will enable connections across data types, revolutionising where and how AI is used.

Key capabilities of LLM technology include:

- LLMs can automate data cataloguing, enhancing speed and efficiency.

- They can continuously monitor and improve data quality, detecting inconsistencies and anomalies and automating checks against predefined quality standards, which makes quality control faster and more efficient.

- These models can generate insights from both structured and unstructured data.

- They can complete a given text coherently, translate text between languages, and summarise concisely.

- LLMs understand and process human language, allowing users to ask questions conversationally. They can present insights in natural language, making them accessible to non-technical stakeholders.

LLM data preparation

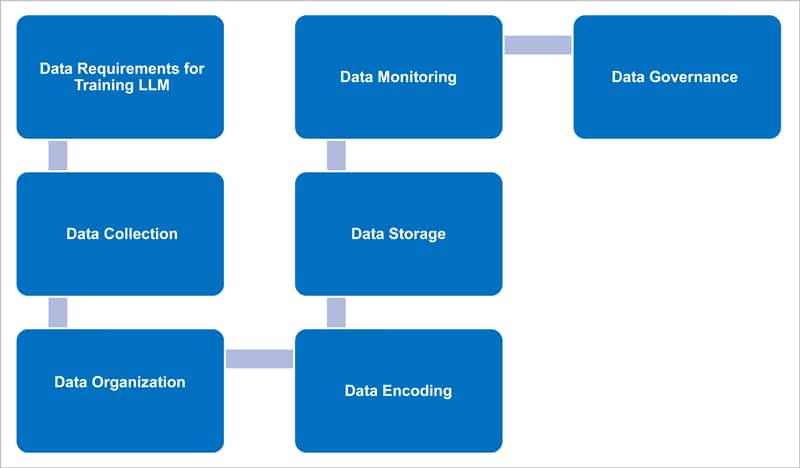

Figure 1 shows the steps involved in LLM data preparation.

Data requirements: Establish the AI strategy. Identify the areas where LLMs add value and the types of data needed to train them, and select the right tools and technology for generative AI and data management. Identify the data source systems, covering smart enterprise applications, machine learning tools and real-time analytics. Categorise data into structured, semi-structured, and unstructured types. Structured data is held in the enterprise’s databases in a fixed schema. Semi-structured data, such as JSON or XML files, carries tags or keys but has no rigid schema. Unstructured data comprises videos, images, text messages, etc.
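As a rough illustration of the categorisation step, the Python sketch below sorts a list of source files into structured, semi-structured and unstructured groups by file extension. The extension-to-category mapping and the `categorise` and `inventory` helpers are hypothetical; a real data inventory would also inspect database catalogues, APIs and file contents rather than relying on extensions alone.

```python
from pathlib import Path

# Hypothetical mapping from file extension to data category.
CATEGORY_BY_EXTENSION = {
    ".csv": "structured",
    ".parquet": "structured",
    ".sql": "structured",
    ".json": "semi-structured",
    ".xml": "semi-structured",
    ".yaml": "semi-structured",
    ".txt": "unstructured",
    ".pdf": "unstructured",
    ".png": "unstructured",
    ".jpg": "unstructured",
    ".mp4": "unstructured",
}


def categorise(path: str) -> str:
    """Return 'structured', 'semi-structured', 'unstructured' or 'unknown'."""
    return CATEGORY_BY_EXTENSION.get(Path(path).suffix.lower(), "unknown")


def inventory(paths: list[str]) -> dict[str, list[str]]:
    """Group source paths by data category, preserving input order."""
    groups: dict[str, list[str]] = {}
    for p in paths:
        groups.setdefault(categorise(p), []).append(p)
    return groups


if __name__ == "__main__":
    sources = ["sales/orders.csv", "crm/contacts.json", "support/chat_logs.txt", "media/demo.MP4"]
    for category, files in inventory(sources).items():
        print(category, files)
```

Unknown extensions are reported rather than guessed, so they can be reviewed by hand.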

Data collection: Data sources provide the insight required to solve business problems. Typical sources include the web, social media and text documents. The collected data is used to train LLMs.

For example, if the trained LLM is to perform sentiment analysis, the collected data should include a large number of reviews, comments, and social media posts.

Web scraping is the automated extraction of data from websites. Crawlee and Apify Universal scrapers are examples of web scraping tools.
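Tools such as Crawlee handle crawling, retries and scale. The minimal sketch below, using only Python's standard `html.parser` module, shows just the extraction step on a page that has already been downloaded: it keeps visible text (skipping `<script>` and `<style>` content) and collects links that a crawler could follow. The `TextExtractor` class and `extract` helper are illustrative names, not part of any scraping tool; fetching pages, honouring robots.txt and respecting site terms are deliberately left out.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text and hyperlinks from an HTML document."""

    SKIP_TAGS = {"script", "style", "noscript"}

    def __init__(self) -> None:
        super().__init__()
        self.text_chunks: list[str] = []
        self.links: list[str] = []
        self._skip_depth = 0  # greater than 0 while inside <script>/<style>

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP_TAGS:
            self._skip_depth += 1
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag in self.SKIP_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only non-blank text that is not inside a skipped tag.
        if self._skip_depth == 0 and data.strip():
            self.text_chunks.append(data.strip())


def extract(html: str) -> tuple[str, list[str]]:
    """Return (visible text joined by spaces, list of href values)."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.text_chunks), parser.links
```

For a review page, the returned text would be a candidate training record and the links would feed the crawler's queue.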

Data organisation: Preprocess data by cleansing, normalising, and tokenising it.

- Data cleansing involves identifying and removing inaccurate, incomplete, or irrelevant data: duplicates are removed and incorrect values are fixed.

- Data normalisation transforms data into a standard format for easy comparison and analysis.

- Data tokenisation breaks text into tokens, the units a language model processes. Tokenisation can happen at word level, character level or sub-word level; most modern LLMs use sub-word tokenisation.
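The preprocessing steps above can be sketched in plain Python. This is a minimal illustration under simple assumptions: normalisation means Unicode NFKC, lowercasing and collapsing whitespace; cleansing drops empty, very short (fewer than `min_words` words) and duplicate records; and the sub-word tokeniser does a greedy longest-match split against a tiny hand-written vocabulary, in the spirit of WordPiece but without its continuation markers. Production LLMs use learned sub-word vocabularies (for example, byte-pair encoding), so treat the helper names and the toy vocabulary as hypothetical.

```python
import re
import unicodedata


def normalise(text: str) -> str:
    """Unicode-normalise (NFKC), collapse whitespace and lowercase."""
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip().lower()


def cleanse(records: list[str], min_words: int = 3) -> list[str]:
    """Drop empty, too-short and duplicate records (compared after normalisation)."""
    seen: set[str] = set()
    kept: list[str] = []
    for record in records:
        norm = normalise(record)
        if len(norm.split()) < min_words or norm in seen:
            continue
        seen.add(norm)
        kept.append(norm)
    return kept


def word_tokens(text: str) -> list[str]:
    """Word-level: runs of word characters, plus each punctuation mark."""
    return re.findall(r"\w+|[^\w\s]", text)


def char_tokens(text: str) -> list[str]:
    """Character-level: every character is a token."""
    return list(text)


def subword_tokens(word: str, vocab: set[str]) -> list[str]:
    """Sub-word level: greedy longest-match split of one word against `vocab`.

    Falls back to single characters, so every word can be tokenised.
    """
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        while end > start + 1 and word[start:end] not in vocab:
            end -= 1
        pieces.append(word[start:end])
        start = end
    return pieces
```

For example, with the vocabulary `{"un", "happi", "ness"}`, `subword_tokens("unhappiness", ...)` yields `["un", "happi", "ness"]`, while a word with no matching pieces falls back to single characters. This is why sub-word schemes keep vocabularies small without ever hitting an unknown word.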

Data encoding: Feature engineering is crucial here. It involves creating features based on pre-processed data. Features are numerical representations of the text that the LLM can understand. The data is split, augmented, and encoded during this stage.

- Splitting divides the data into training, validation, and test sets. The training set is used to train the LLM, the validation set to tune it during development, and the test set to evaluate it on data it has not seen.

- Augmentation synthesises new data or transforms existing data, for example by paraphrasing, to enlarge and diversify the training set.

- Encoding converts tokens into numerical IDs, which the model then maps to vectors (embeddings).
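The split, augment and encode stages can be illustrated with the standard library alone. In this sketch the 80/10/10 split ratios, the word-dropout augmentation, the `<pad>`/`<unk>` special tokens and the randomly initialised embedding table are all assumptions chosen for clarity. In a real model the embedding vectors are learned during training, and building the vocabulary from the training split only keeps the held-out sets genuinely unseen.

```python
import random


def split(records, train=0.8, val=0.1, seed=42):
    """Shuffle reproducibly, then cut into training, validation and test sets."""
    items = list(records)
    random.Random(seed).shuffle(items)
    n_train = int(len(items) * train)
    n_val = int(len(items) * val)
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]


def augment_word_dropout(text, p=0.1, seed=0):
    """One simple augmentation: randomly drop words with probability p."""
    rng = random.Random(seed)
    words = text.split()
    kept = [w for w in words if rng.random() >= p]
    return " ".join(kept or words)  # never return an empty string


def build_vocab(token_lists, specials=("<pad>", "<unk>")):
    """Assign an integer ID to each distinct token, specials first."""
    vocab = {tok: i for i, tok in enumerate(specials)}
    for tokens in token_lists:
        for tok in tokens:
            vocab.setdefault(tok, len(vocab))
    return vocab


def encode(tokens, vocab):
    """Map tokens to IDs; unseen tokens map to <unk>."""
    unk = vocab["<unk>"]
    return [vocab.get(tok, unk) for tok in tokens]


def init_embeddings(vocab_size, dim, seed=0):
    """Random embedding table; training would learn these values."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(vocab_size)]


def embed(ids, table):
    """Look up one vector per token ID."""
    return [table[i] for i in ids]
```

For instance, with a vocabulary built from `[["great", "product"], ["great", "delivery"]]`, encoding `["great", "service"]` gives `[2, 1]`: "service" was never seen, so it becomes `<unk>`.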

Data storage: This step primarily involves the model hub, blob storage, and databases. The model hub holds trained and approved models that can be provisioned on demand, acting as a repository for model checkpoints, weights, and parameters. A comprehensive data architecture covering both structured and unstructured data sources is defined as part of the repository. The data is categorised and organised so that generative AI models can use it.
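To make the model hub idea concrete, here is a minimal in-memory registry: models are registered with a version and a checkpoint location, must be approved before use, and `provision` returns the latest approved version. Everything here is hypothetical (the class names, the `blob://` URIs, the dotted numeric version scheme); it is not the API of Hugging Face Hub or any other product, and a real hub would persist its entries and control access.

```python
from dataclasses import dataclass, field


@dataclass
class ModelEntry:
    name: str
    version: str                    # assumed dotted numeric, e.g. "1.2"
    checkpoint_uri: str             # hypothetical blob-storage location of weights
    params: dict = field(default_factory=dict)
    approved: bool = False


def _version_key(entry: ModelEntry) -> tuple[int, ...]:
    """Compare versions numerically, so "1.10" sorts after "1.2"."""
    return tuple(int(part) for part in entry.version.split("."))


class ModelHub:
    """Minimal in-memory model registry (illustrative only)."""

    def __init__(self) -> None:
        self._entries: dict[tuple[str, str], ModelEntry] = {}

    def register(self, entry: ModelEntry) -> None:
        self._entries[(entry.name, entry.version)] = entry

    def approve(self, name: str, version: str) -> None:
        self._entries[(name, version)].approved = True

    def provision(self, name: str) -> ModelEntry:
        """Return the latest approved version of `name`, or raise LookupError."""
        approved = [e for e in self._entries.values() if e.name == name and e.approved]
        if not approved:
            raise LookupError(f"no approved version of {name!r}")
        return max(approved, key=_version_key)
```

Separating registration from approval means a newly trained checkpoint cannot be served until someone signs it off.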

Data monitoring: This step involves monitoring the quality and relevance of the data, and updating it to improve the performance of the LLM.
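One simple way to monitor incoming data is to compare each new batch with the training data. The sketch below tracks two illustrative signals: the out-of-vocabulary (OOV) rate, meaning the share of tokens never seen in training, which tends to rise when content drifts, and the share of empty records. The thresholds and function names are assumptions to be tuned per dataset, not established standards.

```python
import re


def tokens(text: str) -> list[str]:
    """Lowercased word tokens."""
    return re.findall(r"\w+", text.lower())


def oov_rate(texts: list[str], vocab: set[str]) -> float:
    """Share of tokens in `texts` that never appeared in the training vocabulary."""
    toks = [t for text in texts for t in tokens(text)]
    if not toks:
        return 0.0
    return sum(t not in vocab for t in toks) / len(toks)


def empty_rate(texts: list[str]) -> float:
    """Share of records that are empty or whitespace only."""
    if not texts:
        return 0.0
    return sum(not t.strip() for t in texts) / len(texts)


def check_batch(texts, vocab, max_oov=0.2, max_empty=0.05) -> list[str]:
    """Return alert messages; the default thresholds are illustrative only."""
    alerts = []
    oov = oov_rate(texts, vocab)
    if oov > max_oov:
        alerts.append(f"OOV rate {oov:.0%} exceeds {max_oov:.0%}: data may have drifted")
    empty = empty_rate(texts)
    if empty > max_empty:
        alerts.append(f"empty-record rate {empty:.0%} exceeds {max_empty:.0%}")
    return alerts
```

An alert does not fix anything by itself; it signals that the batch should be reviewed, and possibly that the model needs retraining on fresher data.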

Data governance: This step automates the enforcement of data policies, guidelines, principles, and standards. Data governance must align with AI governance at the enterprise level to realise business objectives.
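Policy automation can be as simple as enforcing rules in code before data reaches training. This sketch assumes a hypothetical policy that e-mail addresses and phone numbers must be redacted, and it returns per-rule counts that could feed an audit log. The regular expressions are deliberately crude (the phone pattern, for instance, will also match some dates), so a production system would need dedicated PII-detection tooling.

```python
import re

# Hypothetical policy: patterns that must not reach training data.
POLICIES = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}


def apply_policies(text: str) -> tuple[str, dict[str, int]]:
    """Redact every policy match and return the redacted text plus per-rule counts."""
    counts: dict[str, int] = {}
    for label, pattern in POLICIES.items():
        text, n = pattern.subn(f"[{label}]", text)
        if n:
            counts[label] = n
    return text, counts
```

For example, `"Contact jane.doe@example.com or +44 20 7946 0958."` becomes `"Contact [EMAIL] or [PHONE]."` with counts `{"EMAIL": 1, "PHONE": 1}`. Keeping the rules in one table makes them easy to review alongside the written policy.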

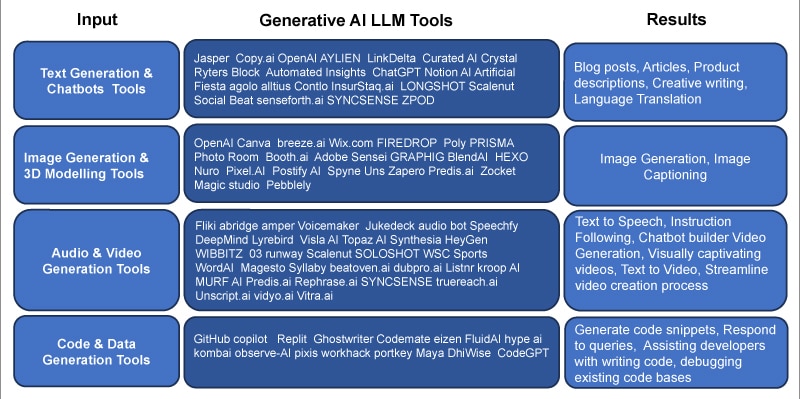

LLMs can be built on open source or proprietary models. Open source models are off-the-shelf and customisable, while proprietary models are offered as LLMs-as-a-service. Figure 2 shows a few LLM tools.

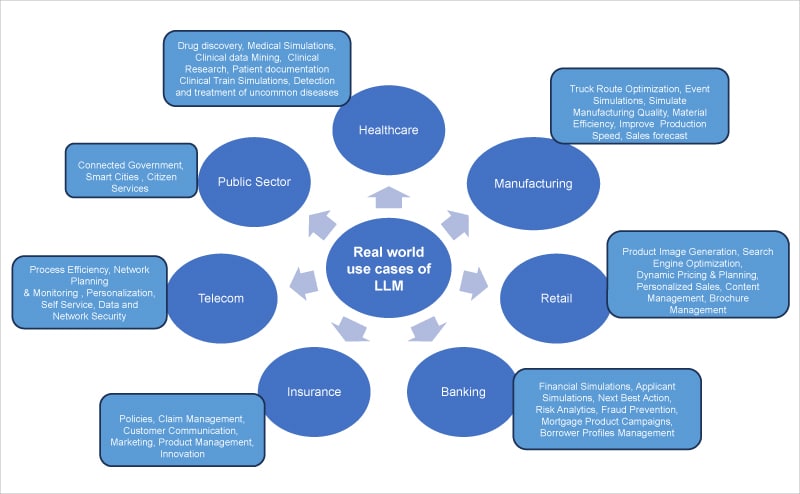

Let’s now take a look at the use cases of LLMs across industries.

Healthcare and pharma

Generative AI-based applications help healthcare professionals be more productive, identify potential issues upfront, and gain insights that support interconnected health. This helps in:

Better customer experience: Automating administrative tasks, such as processing claims, scheduling appointments, and managing medical records.

Patient health summary: Providing healthcare decision support by generating personalised patient health summaries, thus improving patient response times and experience.

Faster analysis of publications: Reducing the time it takes to create research publications on specific drugs by quickly analysing vast amounts of data from multiple sources. This can speed up care, improve its quality, and also improve drug adherence.

Personalised medicine: Creating individual treatment plans based on a patient’s genetic makeup, medical history, lifestyle, etc.

Healthcare virtual assistant: Providing end users with conversational and engaging access to the most relevant and accurate healthcare services and information.

Manufacturing

Generative AI enables manufacturers to do more with their data, leading to advancements in predictive maintenance and demand forecasting. It also helps in simulating manufacturing quality and in improving production speed and material efficiency.

Predictive maintenance: Helps in estimating the life of machines and their components, proactively providing information to technicians about repairs and replacement of parts and machines. This helps reduce downtime.

Performance efficiency: Anticipates production problems in real time, including the risks of production disruptions, bottlenecks, and safety incidents.

Other usages of generative AI in the manufacturing industry include:

- Yield, energy, and throughput optimisation

- Digital simulations

- Sales and demand forecasting

- Logistics network optimisation

Retail

Generative AI helps in personalising offerings and brand management, thus optimising marketing and sales activities. It enables retailers to tailor their offerings more precisely to customer demand, and supports dynamic pricing and planning.

Personalised offerings: Generative AI enables retailers to deliver customised experiences, offerings, pricing, and planning. It also modernises the online and physical buying experience.

Dynamic pricing and planning: It helps predict demand for different products, providing greater confidence for pricing and stocking decisions.

Other usages of generative AI in the retail industry include:

- Campaign management

- Content management

- Augmented customer support

- Search engine optimisation

Banking

Generative AI applications deliver personalised banking experiences to customers. They also improve financial simulations and risk analytics.

Risk mitigation and portfolio optimisation: Generative AI helps banks to build data foundations for developing risk models, identify events that are impacting the bank, mitigate that risk, and optimise portfolios.

Customer pattern analysis: It can analyse patterns in historic banking data at scale, helping relationship managers and customer representatives to identify customer preferences, anticipate needs, and create personalised banking experiences.

Customer financial planning: Generative AI can be used to automate customer service, identify trends in customer behaviour, and predict customer needs and preferences. This helps to understand the customer better and provide personalised advice.

Other usages of generative AI in the banking industry include:

- Anti-money laundering regulations

- Compliance

- Financial simulations

- Applicant simulations

- Next best action

- Risk analytics

- Fraud prevention

Insurance

Generative AI’s ability to analyse and process large amounts of data supports accurate risk assessments and effective claims processing.

Customer support: Generative AI can provide multilingual customer service by translating customer queries and responding to them in the preferred language.

Policy management: It analyses large amounts of unstructured data related to customer policies, such as policy documents, customer feedback, and social media posts, for better policy management.

Claims management: Generative AI helps in analysing various claims artefacts to enhance the overall efficiency and effectiveness of claims management.

Other uses of generative AI in the insurance industry are:

- Customer communications

- Coverage explanations

- Cross-sell and up-sell products

- Accelerate the product development life cycle

- Innovation of products

Education

Generative AI offers real-time collaboration between teachers, administrators, and technology innovators.

Student enablement: Generative AI helps students who speak different languages with real-time lesson translations, and improves classroom accessibility for visually impaired students.

Student success: It offers deep analytic insights into student success, and helps teachers to make informed decisions on how to improve results.

Telecommunications

Generative AI adoption improves operational efficiency and network performance in the telecom industry. It can be used to:

- Analyse customer purchasing patterns

- Personalise recommendations of services

- Enhance sales

- Manage customer loyalty

- Give insights into customer preferences

- Provide better data and network security, enhancing fraud detection

Public sector

Many governments aim to establish digital government and provide better citizen services. Generative AI enables smart cities and optimised service operations.

Smart cities: Generative AI helps in toll management, traffic optimisation, and sustainability.

Better citizen services: It offers easier access to connected government services through tracking, search, and conversational bots.

Other services that are enabled using generative AI are:

- Service operations optimisation

- Contact centre automation

Limitations of current LLMs

Enterprises face several challenges in implementing LLMs as a part of generative AI solutions.

Data preparation: Identifying data sources for LLMs, labelling data for training, and establishing data policies, security, storage and governance are the big challenges.

High volume data: LLMs are trained on huge amounts of data to generate meaningful output. Training and deploying them requires significant computational resources, putting them out of reach of smaller enterprises or researchers with limited budgets.

High cost: Training an LLM can cost anywhere from a couple of million dollars to ten million dollars or more, making it financially inaccessible for some organisations.

Data quality: Most LLMs have been trained on data obtained largely through web scraping, which can contain errors and biases. LLMs may also produce plausible but factually incorrect or nonsensical responses, leading to poor data quality.

Data security: Inputs submitted to publicly hosted LLM services may be stored or used for further training, so entering confidential information can expose enterprise secrets and pose data security risks.

Reliability: Trained models are often ‘black boxes’: they can produce false, harmful, or unsafe results, and it is hard to explain why.

Skills gap: Generative AI initiatives require expertise in machine learning, deep learning, prompt engineering, and large language models, which many enterprises lack in-house.
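To see why the volume and cost challenges bite, a widely used rule of thumb estimates training compute as roughly 6 floating-point operations per parameter per training token (C ≈ 6·N·D). The sketch applies it to GPT-3 (175 billion parameters, trained on about 300 billion tokens) and converts the result to GPU-hours. The per-GPU throughput (the 312 TFLOP/s BF16 peak of an NVIDIA A100) and the 40% utilisation are assumptions, and the estimate ignores failed runs, experimentation and inference, so treat it as an order-of-magnitude figure only.

```python
def training_flops(n_params: float, n_tokens: float) -> float:
    """Rule-of-thumb training compute: about 6 FLOPs per parameter per token."""
    return 6 * n_params * n_tokens


def gpu_hours(total_flops: float, peak_flops_per_gpu: float, utilisation: float) -> float:
    """GPU-hours needed if each GPU sustains `utilisation` times its peak throughput."""
    return total_flops / (peak_flops_per_gpu * utilisation) / 3600


if __name__ == "__main__":
    flops = training_flops(175e9, 300e9)    # GPT-3: 175B parameters, ~300B tokens
    hours = gpu_hours(flops, 312e12, 0.4)   # assumed: A100 BF16 peak, 40% utilisation
    print(f"{flops:.2e} FLOPs, about {hours:,.0f} GPU-hours")
```

This gives roughly 3.15 × 10^23 FLOPs, or about 700,000 GPU-hours under these assumptions; multiplying by a per-hour price shows how quickly costs reach millions of dollars.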

In summary, LLMs are revolutionising technology by helping to bridge the gap between human communication and machine understanding.

The major steps involved in building custom LLMs for an enterprise are:

- Data gathering and preparation

- Training the model

- Evaluating the model

- Integrating with applications

- Deploying the model

- Continuous retraining
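Evaluating the model usually combines task-specific metrics with measures of how well the model predicts held-out text. Perplexity, the exponential of the average negative log-probability the model assigns to each actual next token, is a common example: lower is better, and a model that guesses uniformly among k tokens has perplexity k. The sketch assumes the per-token log-probabilities have already been obtained from the model on the test split, and adds a plain accuracy helper for a labelled task such as sentiment analysis; human review and safety testing are still needed on top.

```python
import math


def perplexity(token_log_probs: list[float]) -> float:
    """exp(-mean natural-log probability assigned to each actual next token)."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_log_probs) / len(token_log_probs))


def accuracy(predictions: list[str], labels: list[str]) -> float:
    """Share of predictions that match the reference labels."""
    if len(predictions) != len(labels) or not labels:
        raise ValueError("predictions and labels must be non-empty and equal in length")
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


if __name__ == "__main__":
    # A model that spreads probability evenly over 4 candidates at every step.
    uniform = [math.log(0.25)] * 10
    print(round(perplexity(uniform), 3))
    print(accuracy(["positive", "negative", "positive"], ["positive", "negative", "negative"]))
```

Tracking the same metrics after each continuous retraining run shows whether new data actually improved the model.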

The future of LLMs is promising: they can power virtual assistants, improve machine translation quality and enable innovative applications across various industries. Organisations such as Google, Microsoft, Amazon, Facebook, IBM and OpenAI leverage LLMs for applications like NLP, chatbots, content generation, sentiment analysis, etc. However, LLM technology is very new and not well understood, and many applications remain exploratory.

Acknowledgements: The author would like to thank Santosh Shinde of BTIS, Enterprise Architecture division of HCL Technologies Ltd, for the support in bringing this article to fruition as part of architecture practice efforts.

Disclaimer: The views expressed in this article are those of the authors, and HCL does not subscribe to the substance, veracity, or truthfulness of the said opinion.