Neuromorphic computing transcends mere connectivity, creating a future where our tech mirrors human cognition. Get ready to embrace brain-inspired tech!

IoT is a rapidly evolving field, and recent developments in AI and, more recently, quantum computing have accelerated that evolution considerably. From smart homes to interconnected urban infrastructures, the IoT ecosystem thrives on continuous data exchange and instant processing. Yet, as vast as these networks become, there’s an inherent need to reimagine how we perceive and approach computing for them.

Enter neuromorphic computing—a revolutionary concept that takes inspiration from the most intricate and efficient computer known to us: the human brain.

At its core, neuromorphic computing seeks not only to replicate the structural intricacies of our neural networks but also to emulate the adaptability, efficiency, and real-time processing capabilities of the brain. In doing so, it promises to overcome many of the limitations that traditional computing architectures face when applied to the sprawling IoT networks of the future.

In this article, we’ll first look at the foundational architecture that has been the backbone of our computer systems: the von Neumann architecture. We’ll then delve into the evolving realm of neuromorphic computing, and explore why this shift is the next big step in the evolution of IoT.

The traditional von Neumann architecture in IoT

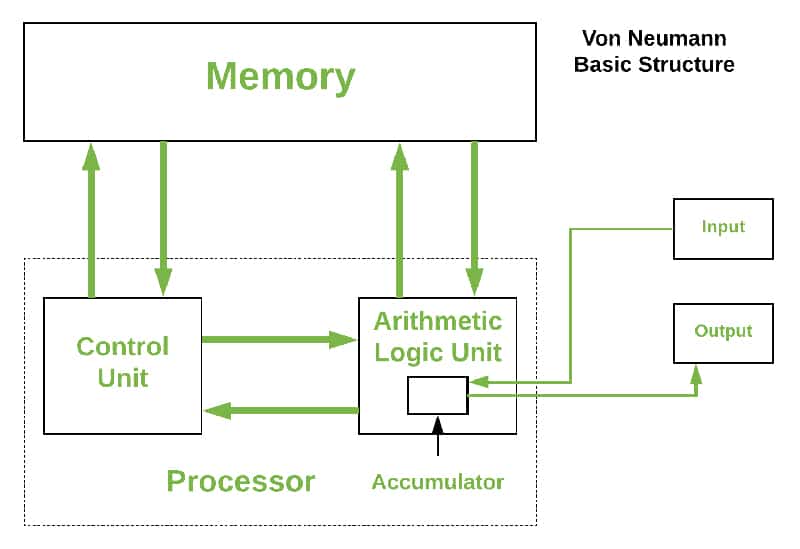

The von Neumann architecture has long been the cornerstone of modern computing. Named after the renowned mathematician and physicist John von Neumann, this design has dictated how computers process information for decades. Central to this architecture is the principle of storing both data and instructions (programs) in the same memory space and processing them sequentially. Figure 1 illustrates the von Neumann architecture.

The key components of von Neumann architecture are:

- Central processing unit (CPU): The brain of the computer, responsible for executing instructions

- Memory: Stores both the data and the program instructions

- Input/output (I/O) systems: Devices and interfaces that allow the computer to interact with the external world, like sensors in IoT devices

- Bus system: Communication pathways that transport data between different components

In the context of IoT, von Neumann’s architecture has played a pivotal role. IoT devices, at their heart, are miniaturised computers that interact with the physical world. They collect data through sensors, process this data, and then take actions or communicate findings. This sequence of operations—input (sensing), processing, and output (acting or communicating)—is a direct manifestation of von Neumann’s principles.

However, this architecture does come with its set of limitations.

- Bottleneck: Often termed the ‘von Neumann bottleneck’, there’s an inherent limitation in the speed at which data can be transferred between the CPU and memory. In real-time environments like IoT, this can cause latency issues.

- Power consumption: von Neumann architectures can be power-hungry, which is not ideal for IoT devices that often rely on battery power or energy harvesting.

- Parallel processing: The sequential nature of this architecture isn’t always best suited for tasks that demand parallel processing, a feature that’s becoming increasingly essential with the influx of large-scale data in IoT.
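The bottleneck in the first point can be made concrete with some back-of-the-envelope arithmetic. The sketch below compares the time a task spends moving data over the memory bus with the time it spends computing. All figures (bus bandwidth, operation rate, workload size) are hypothetical values chosen for illustration, not measurements of any real device.

```python
def transfer_time(bytes_moved: float, bandwidth_bytes_per_s: float) -> float:
    """Seconds spent moving data between memory and the CPU."""
    return bytes_moved / bandwidth_bytes_per_s


def compute_time(operations: float, ops_per_s: float) -> float:
    """Seconds the CPU needs for the arithmetic itself."""
    return operations / ops_per_s


def limiting_factor(bytes_moved, bandwidth_bytes_per_s, operations, ops_per_s) -> str:
    """Return which resource dominates the run time: 'memory' or 'compute'."""
    t_mem = transfer_time(bytes_moved, bandwidth_bytes_per_s)
    t_cpu = compute_time(operations, ops_per_s)
    return "memory" if t_mem > t_cpu else "compute"


# Hypothetical IoT workload: filter 1 MB of sensor samples with one
# operation per byte, on a device with a 50 MB/s memory bus and a CPU
# that sustains 100 million operations per second.
t_mem = transfer_time(1_000_000, 50_000_000)
t_cpu = compute_time(1_000_000, 100_000_000)
print(f"memory: {t_mem:.3f} s, compute: {t_cpu:.3f} s")
print(limiting_factor(1_000_000, 50_000_000, 1_000_000, 100_000_000))
```

With these assumed numbers the CPU would finish its arithmetic in half the time it takes to fetch the data, so a faster processor alone would not shorten the run.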

Despite these challenges, von Neumann’s architecture has been the foundation of many breakthroughs in IoT. But as the scale and complexity of IoT systems grow, and the demand for real-time, efficient processing increases, there’s a pressing need for architectures that can better handle these requirements. This sets the stage for the emergence of neuromorphic computing.

Transition to neuromorphic computing

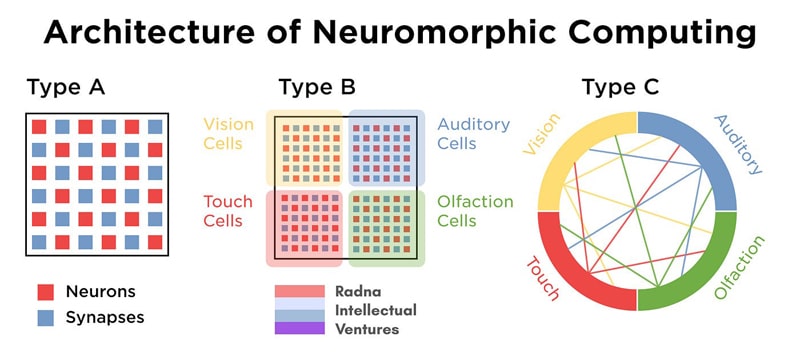

To transcend the constraints of traditional computing systems, researchers have turned to an unlikely yet profound source of inspiration: the human brain. The brain, with its billions of neurons and trillions of synaptic connections, operates in ways that no man-made machine can replicate, at least not yet. This realisation birthed the field of neuromorphic engineering, a domain that seeks to design algorithms, hardware, and systems inspired by the structure and functionality of biological neural systems. Figure 2 gives an architectural overview of neuromorphic computing.

Principles of neuromorphic computing

Parallelism over sequence: Unlike von Neumann architectures, which work predominantly in sequences, neuromorphic systems employ massively parallel operations, emulating the brain’s vast network of simultaneously active neurons.

Event-driven processing: Neuromorphic systems often use event-driven models, such as spiking neural networks (SNNs). Instead of continuous computations, these models rely on discrete events or ‘spikes’, mirroring how neurons either fire or remain inactive.

Adaptability and learning: In traditional architectures, hardware and software are distinct entities. In contrast, neuromorphic systems blur these lines, allowing the hardware itself to adapt and learn, akin to the plasticity of biological synapses.
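To make the event-driven principle more tangible, here is a minimal sketch of a leaky integrate-and-fire (LIF) neuron, one of the simplest neuron models used in spiking neural networks. It is a software illustration only, not how any particular neuromorphic chip implements its neurons, and the parameter names and values (`threshold`, `leak`, `reset`) are assumptions chosen for readability.

```python
def lif_spike_times(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    The membrane potential decays by `leak` each step, accumulates the
    incoming current, and emits a spike (then resets) once it reaches
    `threshold`. Returns the list of time steps at which spikes occurred.
    """
    potential = reset
    spikes = []
    for step, current in enumerate(input_current):
        potential = leak * potential + current  # leak, then integrate
        if potential >= threshold:              # fire
            spikes.append(step)
            potential = reset                   # reset after the spike
    return spikes


# A short burst of input, a quiet period, then a second burst.
print(lif_spike_times([0.5, 0.5, 0.5, 0.0, 0.0, 0.6, 0.6]))  # prints [2, 6]
```

The output is a sparse list of spike times rather than a value for every step, which is what allows downstream neurons to stay idle while nothing happens.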

Memristors: The synaptic mimic

One of the groundbreaking components in neuromorphic engineering is the memristor. Memristors, whose resistance varies based on the history of currents passed through them, are seen as ideal components to represent the synaptic weights in artificial neural networks. Their capability to retain memory even when powered off mirrors the long-term potentiation and depression in biological synapses.
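The behaviour described above can be sketched with a deliberately idealised model: a device whose conductance shifts in proportion to the charge that has passed through it and stays put when no current flows. Real memristors are non-linear and device-specific, so the linear update, the bounds, and the `rate` parameter below are all simplifying assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Memristor:
    """Idealised memristor used as a synaptic weight (illustrative only)."""
    conductance: float = 0.5  # current state, in arbitrary units
    g_min: float = 0.1        # lowest reachable conductance
    g_max: float = 1.0        # highest reachable conductance
    rate: float = 0.1         # conductance change per unit of charge

    def apply_current(self, current: float, duration: float) -> float:
        """Pass a current for some time; the state shifts with the charge."""
        charge = current * duration
        updated = self.conductance + self.rate * charge
        self.conductance = min(self.g_max, max(self.g_min, updated))
        return self.conductance

    def read(self, voltage: float) -> float:
        """Output current for a small read voltage (I = G * V)."""
        return self.conductance * voltage


synapse = Memristor()
synapse.apply_current(current=1.0, duration=2.0)  # positive charge strengthens
print(synapse.conductance)
# Nothing in the model decays over time: with no current applied, the
# stored conductance is retained, mirroring non-volatile memory.
print(synapse.read(voltage=0.2))
```

Reading the device multiplies an input voltage by the stored conductance, which is the same multiply step a synaptic weight performs in an artificial neural network.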

Why the shift matters for IoT

With billions of devices projected to be part of the IoT ecosystem, the demand is clear: low power consumption, real-time processing, and adaptive learning capabilities. Neuromorphic computing, with its energy efficiency and real-time adaptability, offers a promising avenue to address these needs. Devices can potentially learn and adapt on-the-fly, make real-time decisions at the edge without relying on centralised servers, and do so with remarkable power efficiency.

In essence, neuromorphic computing isn’t just an incremental improvement over existing architectures; it represents a fundamental paradigm shift in how we approach computing for complex, interconnected systems like IoT.

Cross-comparing von Neumann and neuromorphic architectures

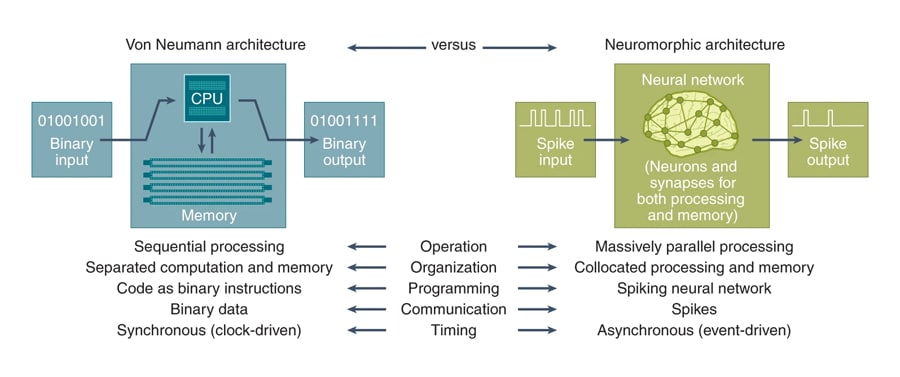

The architectural design behind any computing system not only defines its operational methodology but also its potential and limitations. So let us cross-compare the von Neumann architecture with the evolving architecture of neuromorphic computing. Figure 3 provides a cross-comparison between these architectures.

- von Neumann architecture

Sequential processing: At its core, the von Neumann model is built around the fetch-decode-execute cycle. Instructions and data, both stored in memory, are fetched and processed sequentially, leading to inherent delays.

Shared memory bottleneck: The shared memory for both data and instructions often becomes a bottleneck, especially when dealing with complex computations. This is the infamous ‘von Neumann bottleneck’ that can limit system performance.

Stateful design: von Neumann systems operate in a stateful manner—operations depend on a sequence of previously executed instructions, leading to potential inefficiencies in certain real-time or parallel processing tasks.
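The fetch-decode-execute cycle and the shared memory can be illustrated with a toy stored-program machine. The four-instruction set (`LOAD`, `ADD`, `STORE`, `HALT`) and the memory layout below are invented for this sketch and do not correspond to any real processor. The counter simply tallies how often the single memory is touched.

```python
def run(memory):
    """Run a program held in the same list as its data.

    Instructions are (opcode, address) tuples; data cells are plain numbers.
    The memory is modified in place. Returns the number of memory accesses.
    """
    pc = 0        # program counter
    acc = 0       # accumulator register
    accesses = 0  # every fetch, load and store hits the one shared memory
    while True:
        opcode, address = memory[pc]  # fetch the next instruction
        accesses += 1
        pc += 1
        if opcode == "LOAD":          # decode and execute
            acc = memory[address]
            accesses += 1
        elif opcode == "ADD":
            acc += memory[address]
            accesses += 1
        elif opcode == "STORE":
            memory[address] = acc
            accesses += 1
        elif opcode == "HALT":
            return accesses
        else:
            raise ValueError(f"unknown opcode: {opcode}")


# Cells 0-3 hold the program, cells 4-6 hold the data.
memory = [("LOAD", 4), ("ADD", 5), ("STORE", 6), ("HALT", 0), 2, 3, 0]
accesses = run(memory)
print(memory[6], accesses)  # prints 5 7
```

Adding two numbers takes seven trips to memory, one at a time, and four of them are instruction fetches rather than useful data movement.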

- Neuromorphic architecture

Parallelism at heart: Instead of a linear sequence, neuromorphic designs embrace massive parallelism, with artificial neurons operating concurrently. This mirrors the human brain, where billions of neurons can fire or stay silent simultaneously, allowing for multitasking and rapid sensory processing.

Event-driven modality: Neuromorphic systems, especially those using spiking neural networks (SNNs), operate based on events. A neuron either spikes (fires) or doesn’t, making the system inherently efficient as operations are based on events rather than continuous cycles.

Inherent adaptability: By using components like memristors that can adjust their resistance based on historical currents, neuromorphic designs allow for an adaptability that’s hardwired into the system. This is akin to how biological synapses can strengthen or weaken over time, allowing for learning and memory.
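One widely studied rule for this kind of strengthening and weakening is spike-timing-dependent plasticity (STDP). The article does not name a specific learning rule, so the following is only an illustrative sketch of pair-based STDP, with assumed constants (`a_plus`, `a_minus`, `tau`). A synapse is strengthened when the input neuron fires shortly before the output neuron and weakened when the order is reversed.

```python
import math


def stdp_delta(dt_ms, a_plus=0.05, a_minus=0.055, tau=20.0):
    """Weight change for one spike pair, where dt_ms = t_post - t_pre.

    Positive dt_ms (input fired first) strengthens the synapse; negative
    dt_ms weakens it. The effect fades exponentially as the gap grows.
    """
    if dt_ms > 0:
        return a_plus * math.exp(-dt_ms / tau)
    if dt_ms < 0:
        return -a_minus * math.exp(dt_ms / tau)
    return 0.0


def update_weight(weight, dt_ms, w_min=0.0, w_max=1.0):
    """Apply the STDP change and keep the weight inside its allowed range."""
    return min(w_max, max(w_min, weight + stdp_delta(dt_ms)))


weight = 0.5
weight = update_weight(weight, dt_ms=5.0)   # input spiked 5 ms before output
print(weight > 0.5)                          # prints True
weight = update_weight(weight, dt_ms=-5.0)  # input spiked 5 ms after output
print(weight)
```

In memristor-based hardware, the clamped weight would correspond to a conductance that can only move between the device's physical limits.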

Why it matters for IoT

The transition from von Neumann to neuromorphic isn’t just about mimicking the brain but about addressing real-world challenges. As IoT devices proliferate, the demands for low latency, real-time processing, and adaptive learning are increasing. The latency inherent in the von Neumann design, especially in large-scale data processing scenarios, can be a limiting factor for IoT devices needing real-time responses. Neuromorphic designs, with their parallelism and event-driven nature, can address these challenges, offering a more responsive and adaptable architecture for the next generation of IoT solutions.
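A rough sense of why event-driven operation suits IoT sensors can be had from a send-on-delta encoder, which turns a stream of readings into events only when the value has changed by a meaningful amount. This is a generic illustration rather than the encoding used by any specific neuromorphic sensor; the function name, threshold, and sample data are hypothetical.

```python
def delta_events(samples, threshold):
    """Convert periodic readings into sparse (time_step, direction) events.

    An event is emitted only when a reading differs from the last reported
    value by at least `threshold`; direction is +1 for a rise, -1 for a fall.
    """
    if not samples:
        return []
    events = []
    last_reported = samples[0]
    for step, value in enumerate(samples[1:], start=1):
        if abs(value - last_reported) >= threshold:
            events.append((step, 1 if value > last_reported else -1))
            last_reported = value
    return events


# Hypothetical temperature readings from a mostly stable environment.
readings = [20.0, 20.1, 20.1, 20.2, 21.0, 21.1, 21.0, 19.5]
events = delta_events(readings, threshold=0.5)
print(events)                                        # prints [(4, 1), (7, -1)]
print(f"{len(events)} events from {len(readings)} samples")
```

A clock-driven design would process all eight samples, whereas the event-driven version only does work twice, and the gap widens as the signal becomes quieter.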

Industry examples

As academia has unveiled the principles of neuromorphic computing, industry giants and innovative startups have taken the lead in actualising these concepts.

IBM’s TrueNorth

Developed by IBM under DARPA’s SyNAPSE program, TrueNorth stands as one of the pioneering neuromorphic chips.

Specifications: Comprising 5.4 billion transistors, the chip simulates over a million programmable neurons and 256 million programmable synapses, offering a snapshot of the brain’s complexity.

Applications: Beyond mere proof-of-concept, TrueNorth has been deployed in real-world scenarios, including real-time hand gesture recognition, optical character recognition, and even powering a prototype mobile eye-tracking system.

Intel’s Loihi

Intel’s foray into neuromorphic engineering, Loihi is a testament to the industry’s commitment to the paradigm. Designed for research purposes, it has been instrumental in fostering exploration and innovation in neuromorphic applications.

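Specifications: A single first-generation Loihi chip implements roughly 130,000 neurons and 130 million synapses across 128 cores, and is designed to provide high efficiency and scalability. Multi-chip systems extend this; Intel’s 64-chip Pohoiki Beach system reaches roughly 8 million neurons.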

Potential: With on-chip learning, Loihi represents a significant leap towards systems that can adapt and evolve. Intel’s research has showcased its capabilities in tasks ranging from path planning for robots to adaptive, real-time control systems for prosthetic limbs.

Neuralink

While not a neuromorphic company per se, Elon Musk’s Neuralink is weaving the narrative of blending advanced computing with neurobiology. Aimed at creating brain-machine interfaces, Neuralink’s endeavours underscore the potential of merging the biological with the artificial.

Relevance: Neuralink’s work is emblematic of the broader aspiration within neuromorphic computing: understanding, emulating, and eventually synergising with neural systems. As these interfaces evolve, they might incorporate neuromorphic principles to improve efficiency, adaptability, and biocompatibility.

The vision: Beyond medical applications, Neuralink’s long-term vision of symbiosis between the human brain and AI systems aligns with the essence of neuromorphic computing – building machines that resonate with the nuances of human cognition.

Why neuromorphic computing is the future of IoT

The future needs devices that not only communicate but also think, adapt, and evolve. Currently, two technologies are helping to turn this vision into reality: artificial intelligence (AI) and quantum computing.

Role of AI

- Demand for real-time processing: AI-driven applications in IoT, from voice assistants to anomaly detection in industrial setups, necessitate real-time data processing. Traditional architectures often grapple with latencies, whereas neuromorphic systems, with their parallelism and event-driven nature, are primed for instantaneous responses.

- Adaptive learning on the edge: IoT devices often operate at the edge – away from central servers or clouds. With neuromorphic chips, these devices can learn and adapt on-site without constantly needing data transfers to central servers, ensuring efficiency and data privacy.

- Synergy with deep learning: As deep learning models become more intricate, their computational demands skyrocket. Neuromorphic systems, with their inherent efficiency and brain-like processing, could become the preferred substrate for running these complex models, especially in power-constrained IoT environments.

Emergence of quantum computing

- Beyond classical computing: Quantum computing represents a leap beyond classical computing principles, offering exponentially faster computations for specific tasks. The intersection of quantum and neuromorphic computing remains largely uncharted, but it may open up computational capabilities that neither approach offers alone.

- Potential synergy: While neuromorphic systems emulate the brain’s structure and functionality, quantum systems could, more speculatively, capture any quantum mechanical effects that underlie it. If that proves feasible, this synergy could unlock new models of neural processing, further enhancing IoT capabilities.

- IoT and quantum: As quantum technologies mature, they’ll demand architectures that can seamlessly integrate with them. Neuromorphic systems, with their adaptability and bio-inspired designs, may offer the most compatible bridge to this quantum future.

In the confluence of AI and quantum computing, neuromorphic systems stand as potent allies. Their brain-inspired designs resonate with AI’s aspirations, and their adaptive architectures might dovetail with quantum’s complexities. As IoT devices become ubiquitous, smarter, and more integrated into our lives, neuromorphic computing’s relevance will only amplify, heralding a future where devices don’t just connect but also cogitate.

Neuromorphic computing is more than just another tech buzzword; it presents a genuine paradigm shift. By drawing inspiration from the most complex structure known to us, the human brain, we’re not merely aiming for better computing; we’re redefining what it means to compute.