MLOps is a fusion of DevOps and machine learning. It brings the principles that govern DevOps to the field of machine learning, helping teams build and run models that improve the functioning of industries as varied as healthcare and e-commerce. A number of platforms can be used for MLOps, and we take a look at a few of them here.

Machine learning (ML) and DevOps are two critical fields that have been increasingly converging in recent years. DevOps refers to the specialised set of practices that combine software development (Dev) and IT operations (Ops) to shorten the systems development life cycle and provide continuous delivery with high software quality. When applied to ML, this convergence is often referred to as ‘MLOps’ (machine learning operations). MLOps aims to bring the principles of DevOps to the world of machine learning, facilitating the seamless integration of ML models into production environments while ensuring reliability, scalability, and reproducibility.

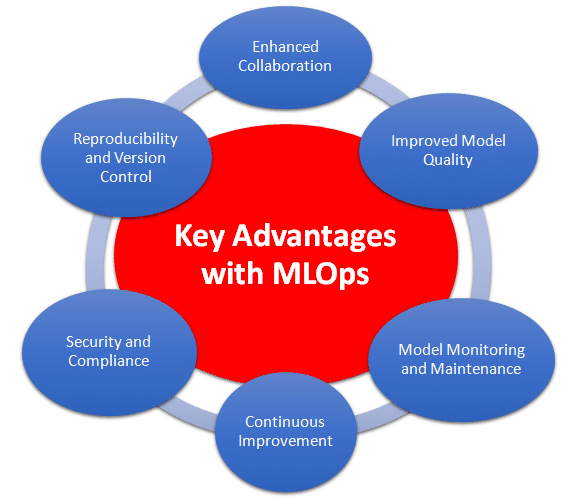

Key advantages of implementing MLOps

Using MLOps offers several advantages that significantly improve the development, deployment and management of machine learning models. Through streamlined processes and enhanced reproducibility, MLOps empowers organisations to harness the full potential of their models.

Faster development cycles: MLOps automates and streamlines the end-to-end machine learning life cycle, including model training, testing and deployment. This results in faster development cycles, allowing data scientists and engineers to iterate and improve models more rapidly.

Improved model quality: MLOps promotes continuous integration and continuous deployment (CI/CD) practices for machine learning models. Every change to code, data or configuration can trigger automated tests and evaluation before a model is promoted, so models are tested more thoroughly and deployed with greater reliability, leading to higher model quality and performance.

Reduced human errors: Automation in MLOps reduces the manual intervention required in the model deployment process, minimising the chances of human errors and ensuring consistency in deployments.

Enhanced collaboration: MLOps facilitates better collaboration between data scientists, software engineers, and operations teams. It creates a unified workflow where everyone can work together seamlessly, improving communication and knowledge sharing.

Reproducibility and version control: MLOps enables version control and reproducibility of models and experiments. This ensures that models can be tracked, compared, and reproduced, making it easier to roll back to previous versions if needed.

Scalability and resource efficiency: MLOps provides tools and practices to optimise resource allocation, for example by scaling training jobs and inference services up or down with demand, making model training and inference more scalable and efficient. It helps organisations make the most of their computational resources.

Model monitoring and maintenance: MLOps includes monitoring capabilities to track model performance in real-world environments. Monitoring can automatically detect data drift (a shift in the distribution of the input data) or concept drift (a change in the relationship between the inputs and the target) and trigger retraining or updates as needed, helping models remain accurate and relevant.

Faster time-to-market: With automated processes and efficient workflows, MLOps reduces the time it takes to deploy machine learning models into production. This faster time-to-market can provide a competitive advantage for organisations.

Cost optimisation: MLOps practices help optimise the cost of running machine learning workloads. By automating resource allocation and monitoring, organisations can reduce unnecessary expenses.

Security and compliance: MLOps addresses security concerns in model deployment, helping ensure that models and data are handled securely. It also helps organisations comply with industry regulations and data privacy requirements.

Flexibility in model deployment: MLOps allows organisations to deploy models across various platforms, including cloud, edge devices, and on-premises infrastructure, providing flexibility and adaptability to different deployment scenarios.

Continuous improvement: MLOps fosters a culture of continuous improvement. By integrating feedback from real-world usage, models can be continuously updated and enhanced, ensuring they remain effective and relevant over time.
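The CI/CD and version-control advantages above can be illustrated with a small Python sketch: a registry that numbers model versions, promotes a new version only if it clears an evaluation gate, and can roll back to the previous one. The `ModelRegistry` class, the accuracy metric, the `min_gain` margin and the file paths are all hypothetical. In practice, tools such as MLflow's model registry fill this role.

```python
"""Toy model registry: an evaluation gate plus versioning and rollback."""
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ModelRegistry:
    metric: str = "accuracy"  # comparison metric; higher is assumed better
    min_gain: float = 0.0  # improvement a candidate must show over the live model
    versions: List[Dict] = field(default_factory=list)
    live: Optional[int] = None  # version number currently serving traffic
    history: List[int] = field(default_factory=list)  # previously live versions

    def register(self, uri: str, metrics: Dict[str, float]) -> int:
        """Record a new model version; versions are numbered from 1."""
        self.versions.append({"uri": uri, "metrics": metrics})
        return len(self.versions)

    def promote_if_better(self, version: int) -> bool:
        """Gate: make `version` live only if it beats the live model by `min_gain`."""
        candidate = self.versions[version - 1]["metrics"][self.metric]
        if self.live is not None:
            current = self.versions[self.live - 1]["metrics"][self.metric]
            if candidate < current + self.min_gain:
                return False  # a CI job would fail here and block deployment
            self.history.append(self.live)
        self.live = version
        return True

    def rollback(self) -> int:
        """Restore the previously live version, e.g. after a bad release."""
        if not self.history:
            raise RuntimeError("no earlier version to roll back to")
        self.live = self.history.pop()
        return self.live


if __name__ == "__main__":
    registry = ModelRegistry(min_gain=0.01)
    v1 = registry.register("models/churn-v1.pkl", {"accuracy": 0.90})
    v2 = registry.register("models/churn-v2.pkl", {"accuracy": 0.905})
    v3 = registry.register("models/churn-v3.pkl", {"accuracy": 0.93})
    print(registry.promote_if_better(v1))  # True: nothing is live yet
    print(registry.promote_if_better(v2))  # False: gain below min_gain
    print(registry.promote_if_better(v3))  # True
    print(registry.rollback())  # 1
```

Keeping the gate and the version history together means a rejected candidate leaves the live model untouched, and a rollback is a metadata change rather than a retraining run.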

MLOps offers numerous benefits that boost the efficiency, reliability, and effectiveness of machine learning deployments. It empowers organisations to deliver high-quality machine learning solutions more rapidly, enhancing their ability to leverage data-driven insights for business value and innovation.
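Automatic drift detection, mentioned among the advantages above, often starts with a simple statistic. The sketch below computes the Population Stability Index (PSI), which compares how a feature's values fall into bins at training time and in production. The bin edges, the sample data and the 0.2 retraining threshold are assumptions (0.2 is a commonly quoted rule of thumb); real monitoring would track many features, and prediction quality as well.

```python
"""Population Stability Index (PSI) as a simple data-drift check."""
import math
from bisect import bisect_right
from typing import List, Sequence

EPS = 1e-6  # floor for empty bins so the logarithm stays finite


def bin_shares(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Fraction of values in each bin; k sorted edges define k + 1 bins."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1  # number of edges <= v picks the bin
    return [max(c / len(values), EPS) for c in counts]


def psi(reference: Sequence[float], live: Sequence[float], edges: Sequence[float]) -> float:
    """PSI = sum over bins of (live_share - ref_share) * ln(live_share / ref_share)."""
    ref, cur = bin_shares(reference, edges), bin_shares(live, edges)
    return sum((c - r) * math.log(c / r) for c, r in zip(cur, ref))


def needs_retraining(reference, live, edges, threshold: float = 0.2) -> bool:
    """Flag drift when PSI exceeds the (assumed) threshold."""
    return psi(reference, live, edges) > threshold


if __name__ == "__main__":
    edges = [0.25, 0.5, 0.75]
    training = [i / 100 for i in range(100)]  # spread evenly over [0, 1)
    production = [0.5 + i / 200 for i in range(100)]  # shifted towards higher values
    print(round(psi(training, training, edges), 3))  # 0.0
    print(needs_retraining(training, production, edges))  # True
```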

Integration of MLOps with smart cities

MLOps offers tremendous potential in transforming the development and management of smart cities. By harnessing the power of data and AI-driven insights, MLOps enables efficient, scalable, and real-time solutions for the challenges faced in modern urban environments.

One of the key applications of MLOps in smart cities is in the field of traffic management. Machine learning algorithms can analyse vast amounts of traffic data, including live feeds from cameras, GPS data, and historical patterns. MLOps facilitates the continuous training and deployment of traffic prediction models, which can accurately anticipate congestion, optimise traffic flow, and even predict potential accidents. This results in smoother traffic management, reduced commute times, and improved overall safety for citizens.

Another significant use case is in waste management. By integrating MLOps practices, cities can optimise waste collection routes based on real-time data, including the fill-level of trash bins. This ensures that waste collection vehicles follow the most efficient paths, reducing fuel consumption and carbon emissions while keeping the city clean and hygienic.
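Fill-level-driven routing can be illustrated with a deliberately simple heuristic: skip bins below a fill threshold and visit the rest nearest-first. The 70% threshold, the straight-line distances, the bin IDs and the data layout are assumptions. A real system would use road-network distances and a proper vehicle-routing solver, and the fill levels might themselves come from a forecasting model maintained through MLOps.

```python
"""Fill-level-aware collection route using a nearest-neighbour heuristic."""
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def plan_route(depot: Point, bins: Dict[str, Tuple[Point, float]],
               fill_threshold: float = 0.7) -> List[str]:
    """Return bin IDs in visiting order.

    Only bins at or above `fill_threshold` (0 to 1) are visited; from the current
    position the truck always drives to the nearest remaining bin.
    """
    pending = {bid: loc for bid, (loc, fill) in bins.items() if fill >= fill_threshold}
    route: List[str] = []
    position = depot
    while pending:
        nearest = min(pending, key=lambda bid: math.dist(position, pending[bid]))
        position = pending.pop(nearest)
        route.append(nearest)
    return route


if __name__ == "__main__":
    sensor_readings = {  # hypothetical bin locations (km) and fill levels
        "A": ((1.0, 0.0), 0.90),
        "B": ((5.0, 0.0), 0.20),
        "C": ((2.0, 0.0), 0.80),
        "D": ((0.0, 3.0), 0.75),
    }
    print(plan_route((0.0, 0.0), sensor_readings))  # ['A', 'C', 'D']
```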

MLOps can also be applied to enhance public safety and security in smart cities. Machine learning models can be trained to analyse video feeds from surveillance cameras and identify suspicious activities or potential security threats. With MLOps, these models can be continuously updated and deployed to provide real-time monitoring, enabling law enforcement to respond swiftly to incidents and ensure the safety of residents.

MLOps can also help optimise energy consumption in smart cities. Machine learning algorithms can predict energy demand patterns, enabling cities to allocate resources efficiently and make data-driven decisions for energy distribution. MLOps facilitates the automatic updating of these predictive models, ensuring they remain accurate as energy usage patterns evolve.

MLOps can also be leveraged for environmental monitoring and sustainability initiatives. By analysing data from various sensors placed throughout the city, machine learning models can provide insights into air quality, water usage, and noise pollution levels. This data-driven approach empowers policymakers to implement targeted strategies for environmental conservation and to create greener, more sustainable urban spaces.

The use of MLOps in smart cities empowers urban planners, administrators, and citizens to make informed decisions based on real-time data and predictive analytics. It enhances the efficiency of city services, optimises resource allocation, and fosters a more liveable, safe, and sustainable environment for the growing urban population. As smart cities continue to evolve, MLOps is likely to play a central role in shaping the future of urban living.

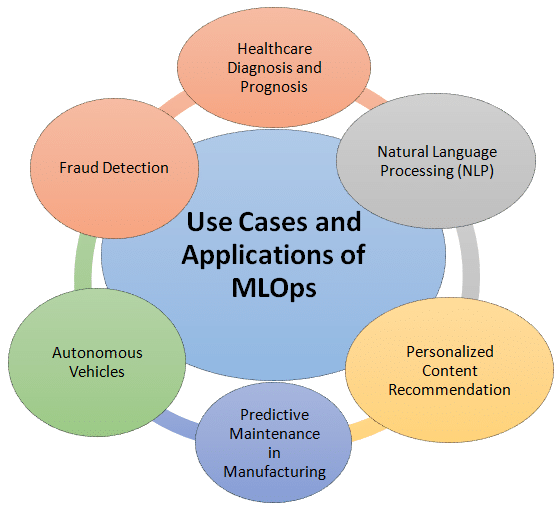

Real-world use cases and applications of MLOps

The practical application of MLOps across various industries demonstrates its transformative impact on diverse fields, from e-commerce and manufacturing to healthcare and finance. These real-world use cases exemplify how MLOps optimises processes, enhances decision-making, and ushers in a new era of efficiency and innovation.

Automated image classification in e-commerce: An e-commerce company utilises ML models to classify products automatically. MLOps helps in continuous training and deployment of these models as new product images are added or existing ones change. By automating the model deployment process, the company can maintain the accuracy of its classification system, ensuring customers receive relevant search results.

Predictive maintenance in manufacturing: A manufacturing plant employs ML models to predict machinery failures and schedule preventive maintenance. MLOps enables the seamless integration of these predictive models into their operational systems. This ensures that maintenance schedules are automatically updated based on real-time data, reducing downtime and increasing overall efficiency.

Fraud detection in finance: A financial institution uses ML models to detect fraudulent transactions. MLOps helps in automating the training and deployment of these models, ensuring that the fraud detection system remains up-to-date with the latest patterns and trends in fraudulent activities.

Healthcare diagnosis and prognosis: Hospitals implement ML models for medical image analysis, patient diagnosis, and treatment prognosis. MLOps ensures the continuous improvement and deployment of these models, making sure that medical professionals have access to the most accurate and reliable tools to aid in patient care.

Natural language processing (NLP) for customer support: Companies deploy NLP models to analyse and categorise customer support tickets. MLOps streamlines the process of updating and deploying these models as new customer queries and support ticket data are generated.

Autonomous vehicles: Companies working on autonomous vehicles require continuous development and deployment of ML models for perception, decision-making, and control systems. MLOps helps ensure that the most recent and optimised models are used, improving the safety and performance of autonomous vehicles.

Personalised content recommendation: Media and entertainment platforms utilise ML models to recommend personalised content to users. MLOps keeps these models retrained and redeployed as user behaviour changes, so relevant recommendations can be delivered in real time, enhancing user engagement and satisfaction.

In all these cases, MLOps plays a crucial role in automating the ML model life cycle, including model training, testing, deployment, monitoring, and updates. This streamlines the development process, reduces manual errors, enhances collaboration between teams, and allows organisations to deliver high-quality ML-powered applications more efficiently and reliably.

Prominent platforms for MLOps

Many platforms offer a robust ecosystem to streamline machine learning workflows. Two major ones are Kubeflow and TensorFlow Extended.

Kubeflow

URL: https://www.kubeflow.org/

This is an open source platform built on Kubernetes, designed to streamline the deployment, scaling and management of machine learning workloads. It provides tools for building, training, and deploying models in a consistent and reproducible manner. Kubeflow leverages Kubernetes’ container orchestration capabilities to enable seamless scalability of ML workloads. This means that as the demand for machine learning models grows, Kubeflow can efficiently allocate resources and scale the infrastructure to meet it. This elasticity ensures that ML models can handle various workloads, from small-scale experimentation to large-scale production deployments.

In MLOps, it is vital to have a well-documented and version controlled process for model training. Kubeflow provides tools and components that allow data scientists to package their ML code, dependencies, and configurations in a consistent manner using containers. This ensures that models can be easily reproduced and versioned, facilitating collaboration among teams and reducing the risk of model discrepancies in production.

TensorFlow Extended (TFX)

URL: https://www.tensorflow.org/tfx

Developed by Google, TFX is an end-to-end platform for deploying production machine learning pipelines. It includes components for data validation, preprocessing, model training, and serving. Because it is tightly integrated with TensorFlow, TFX supports the efficient development, deployment, and monitoring of machine learning models in real-world applications, which makes it well suited to implementing MLOps. At its core, TFX is designed to address the complexities of scaling and operationalising machine learning projects. It consists of modular components, each serving a specific function within the pipeline, and these components work together to automate the machine learning life cycle, from data ingestion to model serving and beyond.
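To picture what a data-validation component does, here is a plain-Python sketch that infers a simple schema from training rows and flags new rows that violate it. It is not the TFX or TensorFlow Data Validation API (in TFX, the SchemaGen and ExampleValidator components play this role), and the type-and-range rules, feature names and sample rows are simplifying assumptions.

```python
"""Infer a simple schema from training rows and validate new batches against it."""
from typing import Any, Dict, List

Row = Dict[str, Any]
Schema = Dict[str, Dict[str, Any]]


def infer_schema(rows: List[Row]) -> Schema:
    """Record each feature's type and, for numeric features, the observed range.

    Assumes every training row has the same features as the first one.
    """
    schema: Schema = {}
    for name in rows[0]:
        values = [row[name] for row in rows]
        spec: Dict[str, Any] = {"type": type(values[0]).__name__}
        if isinstance(values[0], (int, float)):
            spec["min"], spec["max"] = min(values), max(values)
        schema[name] = spec
    return schema


def validate_batch(rows: List[Row], schema: Schema) -> List[str]:
    """Return readable anomalies; an empty list means the batch passed."""
    anomalies: List[str] = []
    for i, row in enumerate(rows):
        for name, spec in schema.items():
            if name not in row:
                anomalies.append(f"row {i}: missing feature '{name}'")
                continue
            value = row[name]
            actual_type = type(value).__name__
            if actual_type != spec["type"]:
                anomalies.append(f"row {i}: '{name}' is {actual_type}, expected {spec['type']}")
            elif "min" in spec and not spec["min"] <= value <= spec["max"]:
                anomalies.append(f"row {i}: '{name}'={value} outside [{spec['min']}, {spec['max']}]")
    return anomalies


if __name__ == "__main__":
    training = [{"age": 34, "country": "IN"}, {"age": 58, "country": "US"}]
    schema = infer_schema(training)
    incoming = [{"age": 41, "country": "UK"}, {"country": "IN"}, {"age": "forty", "country": "US"}]
    for problem in validate_batch(incoming, schema):
        print(problem)
```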

Other platforms for MLOps

Several other platforms, some of them general-purpose tools that are not specific to machine learning, also play an important role in shaping the MLOps landscape.

MLflow

URL: https://mlflow.org/

This open source platform facilitates the management of the complete machine learning life cycle. MLflow allows you to track experiments, package code into reproducible runs, and share and deploy models.
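The core idea of experiment tracking can be sketched without MLflow itself: each run stores its parameters and metrics, and the best run can be looked up later. The `Tracker` class below is hypothetical and writes plain JSON files; with MLflow, the equivalent calls would be along the lines of `mlflow.start_run()`, `mlflow.log_param()` and `mlflow.log_metric()`. The accuracy values are made up for illustration.

```python
"""A tiny file-based experiment tracker (hypothetical, not MLflow's API)."""
import json
import tempfile
import uuid
from pathlib import Path
from typing import Dict, Optional


class Tracker:
    def __init__(self, root: str) -> None:
        self.root = Path(root)
        self.root.mkdir(parents=True, exist_ok=True)

    def log_run(self, params: Dict[str, object], metrics: Dict[str, float]) -> str:
        """Persist one run's parameters and metrics as JSON and return its run ID."""
        run_id = uuid.uuid4().hex
        record = {"run_id": run_id, "params": params, "metrics": metrics}
        (self.root / f"{run_id}.json").write_text(json.dumps(record))
        return run_id

    def best_run(self, metric: str, higher_is_better: bool = True) -> Optional[dict]:
        """Return the stored run with the best value of `metric`, or None if absent."""
        runs = [json.loads(p.read_text()) for p in self.root.glob("*.json")]
        runs = [r for r in runs if metric in r["metrics"]]
        if not runs:
            return None
        choose = max if higher_is_better else min
        return choose(runs, key=lambda r: r["metrics"][metric])


if __name__ == "__main__":
    tracker = Tracker(tempfile.mkdtemp())
    # Made-up results standing in for three training runs.
    for lr, acc in [(0.1, 0.82), (0.01, 0.88), (0.001, 0.85)]:
        tracker.log_run({"learning_rate": lr}, {"accuracy": acc})
    print(tracker.best_run("accuracy")["params"])  # {'learning_rate': 0.01}
```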

DVC (Data Version Control)

URL: https://dvc.org/

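This version control system is designed for managing machine learning models, data sets, and experiments efficiently. It works alongside Git: large files are kept in separate storage, while small metadata files that point to them are versioned in Git.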
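Data versioning of this kind rests on content addressing: a file is identified by a hash of its contents, so every distinct version of a data set is stored once and can be restored exactly. The sketch below illustrates the idea in plain Python. The cache layout (sharding by the first two hex characters of an MD5 hash) is similar in spirit to DVC's cache but is an assumption here, and the functions are not DVC's API.

```python
"""Content-addressed file cache: the idea behind versioning data outside Git."""
import hashlib
import shutil
import tempfile
from pathlib import Path


def file_md5(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large data sets need not fit in memory."""
    digest = hashlib.md5()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def cache_path(cache_dir: Path, file_hash: str) -> Path:
    # Shard by the first two hex characters to keep directories small.
    return cache_dir / file_hash[:2] / file_hash[2:]


def add_to_cache(path: Path, cache_dir: Path) -> str:
    """Store `path` under its hash (identical content is stored once); return the hash."""
    file_hash = file_md5(path)
    target = cache_path(cache_dir, file_hash)
    if not target.exists():
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(path, target)
    return file_hash  # this short pointer is what would be committed to Git


def checkout(file_hash: str, cache_dir: Path, dest: Path) -> None:
    """Restore the exact file version identified by `file_hash`."""
    shutil.copy2(cache_path(cache_dir, file_hash), dest)


if __name__ == "__main__":
    workdir = Path(tempfile.mkdtemp())
    cache, data = workdir / "cache", workdir / "train.csv"
    data.write_text("x,y\n1,2\n")
    v1 = add_to_cache(data, cache)
    data.write_text("x,y\n1,2\n3,4\n")  # the data set changes
    v2 = add_to_cache(data, cache)
    checkout(v1, cache, data)  # go back to the first version
    print(v1 != v2, data.read_text() == "x,y\n1,2\n")  # True True
```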

Apache Airflow

URL: https://airflow.apache.org/

While primarily used for data orchestration and workflow automation, Airflow can also be leveraged for MLOps to schedule and monitor model training and deployment tasks.
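Whatever the scheduler, an ML workflow is a directed acyclic graph (DAG) of tasks that must run in dependency order. The sketch below uses Python's standard `graphlib` module (Python 3.9+) to derive such an order; the task names are hypothetical, and Airflow itself adds scheduling, retries and monitoring on top. In Airflow, the same dependencies would be declared between operators with `>>`, for example `train >> evaluate >> deploy`, where those names stand for hypothetical operator variables.

```python
"""Run ML pipeline tasks in dependency order, as a workflow scheduler would."""
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Callable, Dict, List, Set

# Each (hypothetical) task maps to the tasks that must finish before it starts.
DEPENDENCIES: Dict[str, Set[str]] = {
    "extract_data": set(),
    "validate_data": {"extract_data"},
    "train_model": {"validate_data"},
    "evaluate_model": {"train_model"},
    "deploy_model": {"evaluate_model"},
    "refresh_report": {"validate_data"},
}


def run_pipeline(deps: Dict[str, Set[str]], tasks: Dict[str, Callable[[], None]]) -> List[str]:
    """Execute every task after its prerequisites and return the order used.

    TopologicalSorter raises graphlib.CycleError if the dependencies contain a cycle.
    """
    order = list(TopologicalSorter(deps).static_order())
    for name in order:
        tasks[name]()
    return order


if __name__ == "__main__":
    actions = {name: (lambda n=name: print(f"running {n}")) for name in DEPENDENCIES}
    run_pipeline(DEPENDENCIES, actions)
```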

Metaflow

URL: https://metaflow.org/

Developed by Netflix, Metaflow is a data science framework that assists in building and managing real-life data science projects. It allows easy integration of code, data, and models.

Knative

URL: https://knative.dev/

Not specific to MLOps, Knative is an open source platform that extends Kubernetes to support serverless workloads, making it useful for managing serverless machine learning deployments.
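Serverless model serving rests on scaling replicas with load, down to zero when a service is idle. The function below is a simplified, concurrency-based calculation in the spirit of Knative's autoscaler; the real autoscaler also averages metrics over time windows and reacts separately to sudden bursts, and the parameter values used here are assumptions.

```python
"""Simplified concurrency-based autoscaling with scale-to-zero (illustrative only)."""
import math


def desired_replicas(in_flight: float, target_per_replica: float,
                     min_replicas: int = 0, max_replicas: int = 10) -> int:
    """Replicas needed so each handles roughly `target_per_replica` concurrent requests.

    With min_replicas=0 an idle service scales to zero and holds no compute.
    """
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    wanted = math.ceil(in_flight / target_per_replica)
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    for load in (0, 4, 25, 500):  # hypothetical numbers of concurrent requests
        print(load, desired_replicas(load, target_per_replica=10))
```

Setting `min_replicas` to 1 trades a little idle cost for avoiding the cold-start delay of the first request after a quiet period.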

CML (Continuous Machine Learning)

URL: https://cml.dev/

An open source library that integrates with Git and GitHub Actions to enable continuous integration and delivery for machine learning projects.
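A typical CML workflow trains a model in CI and posts a metrics report on the pull request. The sketch below produces such a Markdown report comparing the main branch with a feature branch; the metric names and values are hypothetical. In a CI job its output could be redirected to a file (for example `python report.py > report.md`, where the script name is hypothetical) and posted with CML's `cml comment create report.md` command.

```python
"""Build a Markdown table comparing metrics from two runs (illustrative)."""
from typing import Dict


def metrics_report(main: Dict[str, float], branch: Dict[str, float]) -> str:
    """Render a Markdown table with the change in every metric both runs share."""
    lines = ["| Metric | main | branch | change |", "|---|---|---|---|"]
    for name in sorted(main.keys() & branch.keys()):
        delta = branch[name] - main[name]
        lines.append(f"| {name} | {main[name]:.3f} | {branch[name]:.3f} | {delta:+.3f} |")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical metrics; in CI they would be read from files the training step writes.
    print(metrics_report({"accuracy": 0.910, "f1": 0.880}, {"accuracy": 0.925, "f1": 0.870}), end="")
```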

Seldon Core

URL: https://www.seldon.io/tech/products/core/

Built on Kubernetes, Seldon Core helps deploy and manage machine learning models at scale, allowing you to create production-ready inference graphs.
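Seldon Core inference graphs can chain steps such as transformers, models and combiners. The plain-Python sketch below shows only the data flow of such a graph (normalise the input, query two models, average their outputs); it does not use Seldon's API, and both models are hypothetical stand-ins.

```python
"""A toy inference graph: transformer -> two models -> combiner (illustrative only)."""
from statistics import mean
from typing import Callable, List, Sequence

Vector = List[float]
Model = Callable[[Vector], float]


def normalise(features: Sequence[float]) -> Vector:
    """Transformer step: scale features to [0, 1] using their min and max."""
    lo, hi = min(features), max(features)
    span = (hi - lo) or 1.0  # avoid division by zero for constant input
    return [(x - lo) / span for x in features]


def model_a(x: Vector) -> float:
    # Hypothetical model: average of the features.
    return mean(x)


def model_b(x: Vector) -> float:
    # Hypothetical model: largest feature.
    return max(x)


def predict(features: Sequence[float], models: List[Model]) -> float:
    """Run the transformer, fan out to every model, then combine by averaging."""
    x = normalise(features)
    return mean(m(x) for m in models)


if __name__ == "__main__":
    print(predict([12.0, 30.0, 18.0], [model_a, model_b]))
```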

Apache Spark

URL: https://spark.apache.org/

While primarily a Big Data processing engine, Spark’s MLlib library provides various tools for building and managing machine learning pipelines.
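MLlib organises workflows as a `Pipeline` of stages, where estimators learn parameters with `fit()` and transformers apply them with `transform()`. The stdlib sketch below mirrors that pattern on a single numeric column so it runs without Spark; it is not the PySpark API, and the `Standardise` and `Clip` stages are hypothetical examples.

```python
"""A miniature fit/transform pipeline mirroring the estimator/transformer pattern."""
from statistics import mean, pstdev
from typing import List


class Standardise:
    """Estimator-like stage: learns mean and standard deviation during fit."""

    def fit(self, xs: List[float]) -> "Standardise":
        self.mu = mean(xs)
        self.sigma = pstdev(xs) or 1.0  # guard against constant columns
        return self

    def transform(self, xs: List[float]) -> List[float]:
        return [(x - self.mu) / self.sigma for x in xs]


class Clip:
    """Transformer-like stage: has nothing to learn, just bounds values."""

    def __init__(self, limit: float = 3.0) -> None:
        self.limit = limit

    def fit(self, xs: List[float]) -> "Clip":
        return self

    def transform(self, xs: List[float]) -> List[float]:
        return [max(-self.limit, min(self.limit, x)) for x in xs]


class Pipeline:
    def __init__(self, stages: list) -> None:
        self.stages = stages

    def fit(self, xs: List[float]) -> "Pipeline":
        """Fit each stage on the output of the previous one."""
        for stage in self.stages:
            xs = stage.fit(xs).transform(xs)
        return self

    def transform(self, xs: List[float]) -> List[float]:
        """Apply the fitted stages, e.g. to new data at serving time."""
        for stage in self.stages:
            xs = stage.transform(xs)
        return xs


if __name__ == "__main__":
    pipeline = Pipeline([Standardise(), Clip(limit=1.0)]).fit([1.0, 2.0, 3.0])
    print(pipeline.transform([2.0, 10.0, -10.0]))  # [0.0, 1.0, -1.0]
```

Because the fitted pipeline keeps the training mean and standard deviation, exactly the same transformation is applied to serving data, which helps avoid training/serving skew.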

Research in the domain of MLOps can delve into identifying and analysing the best practices, methodologies and frameworks for integrating machine learning and DevOps effectively. This could involve studying existing MLOps tools, platforms, and practices to understand their strengths, weaknesses, and areas of improvement. Research may also explore techniques for automating model training, testing, and deployment pipelines.

Research could also address the security and governance challenges associated with deploying ML models in production environments. This may involve exploring methods to secure models, data, and infrastructure, as well as adhering to regulatory compliance.

Researchers can contribute by improving the efficiency and reliability of deploying ML models in real-world settings and by addressing the ethical implications of such deployments, making ML more accessible and beneficial to society.