Generative AI is changing the face of technology. Let’s take a brief look at the history of AI and how it has evolved over the years, leading to the emergence of generative AI.

It’s a very exciting time to be a technologist. My own IT career has spanned massive transformations, from mainframes, client-server systems, enterprise apps, and the internet to the cloud and AI. In today’s digital economy, technology leaders are the bridge between business agendas and leadership strategies. They are uniquely positioned to challenge the status quo by setting the direction for an enterprise, which is driven largely by innovation in technology. For the past few months, many such leaders have been trying their best to realign their organisation’s technology strategy with generative AI.

Let’s look at what generative AI is, how it differs from traditional AI, why it’s gaining popularity now, and what open source technologies are available to take advantage of this bleeding-edge innovation. But before jumping into generative AI, it’s important to take a quick look at what AI truly is and how it has evolved over the years.

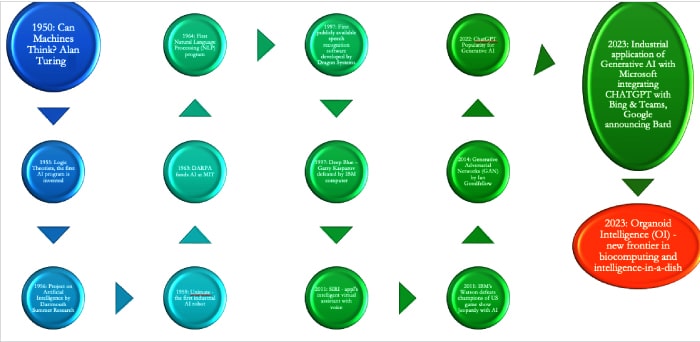

Brief history of AI

It was way back in 1950 that Alan Turing asked whether machines can think. That single question has led to the development of self-driving cars, self-healing applications, and much more. In the quest for a smart, highly productive world, the role of AI has been significant, especially in the past two decades. A major step from traditional AI towards generative AI came in 2014 with the introduction of generative adversarial networks (GANs), which can create convincingly authentic images, videos, and audio of real people.

AI has benefited significantly from advancements in machine learning (ML), natural language processing (NLP), deep learning (DL), and other data processing frameworks.

What is generative AI?

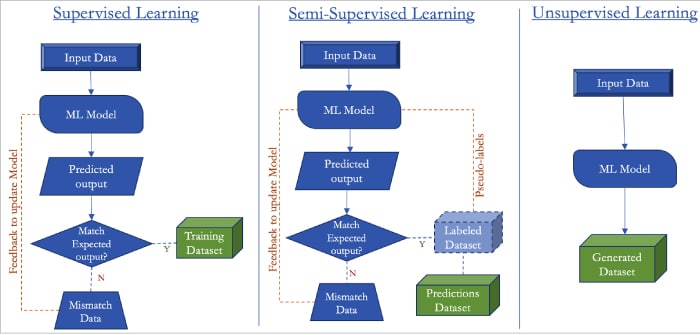

Generative AI refers to AI systems, typically built on unsupervised or semi-supervised machine learning algorithms, that learn a representation of artifacts from data and then use that representation to create entirely new artifacts that resemble the original data. The idea is to generate artifacts that look real. In other words, generative AI is a category of artificial intelligence that generates novel content rather than only analysing existing data. The data on which a model is trained serves as a reference from which it builds its own understanding, so that it can create new content such as articles, blog posts, images, and sounds.

Traditional AI, which has been around for decades, focuses on analysing existing data and relies largely on supervised machine learning. Generative AI, by contrast, uses existing text, image, audio, or video content as input to generate new content that is as realistic as possible.

A supervised machine learning algorithm learns from labelled data, in which the right answers are already included. Its job is then to predict the right answers for new data.
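As a minimal sketch of this idea, the example below trains a nearest-centroid classifier on a handful of labelled points and then predicts labels for new points. The classifier is chosen only because it is short; it is not one of the algorithms named in this article. The data and the names `fit_centroids` and `predict` are hypothetical.

```python
from collections import defaultdict
from math import dist


def fit_centroids(points, labels):
    """Learn one centroid (mean point) per label from labelled examples."""
    groups = defaultdict(list)
    for point, label in zip(points, labels):
        groups[label].append(point)
    return {
        label: tuple(sum(axis) / len(members) for axis in zip(*members))
        for label, members in groups.items()
    }


def predict(centroids, point):
    """Assign the label whose centroid is closest to the new point."""
    return min(centroids, key=lambda label: dist(centroids[label], point))


# Hypothetical labelled data: each point already comes with its right answer.
train_points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (8.0, 8.0), (9.0, 7.5), (8.5, 9.0)]
train_labels = ["healthy", "healthy", "healthy", "faulty", "faulty", "faulty"]

centroids = fit_centroids(train_points, train_labels)

# New, unseen points: the model supplies the answer.
print(predict(centroids, (1.2, 1.8)))  # healthy
print(predict(centroids, (7.9, 8.4)))  # faulty
```

The labels do all the work here: without `train_labels`, the model would have no notion of what "healthy" or "faulty" means.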

Unsupervised machine learning is the technique of uncovering hidden patterns in data. Using this method, a machine learning model looks for patterns, structure, similarities, and differences in the data on its own. No human labelling of the data is required.
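To illustrate, here is a minimal sketch of k-means clustering, one of the unsupervised algorithms listed in Table 1, written with the standard library only. The data is hypothetical, and the initialisation (taking the first `k` points as starting centroids) is a simplifying assumption; practical implementations use more careful seeding.

```python
from math import dist


def k_means(points, k, iterations=10):
    """Group unlabelled points into k clusters with plain k-means."""
    # Assumption: naive initialisation from the first k points.
    centroids = list(points[:k])
    assignments = []
    for _ in range(iterations):
        # Assignment step: attach every point to its nearest centroid.
        assignments = [
            min(range(k), key=lambda i: dist(centroids[i], p)) for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for i in range(k):
            members = [p for p, a in zip(points, assignments) if a == i]
            if members:
                centroids[i] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return centroids, assignments


# Hypothetical unlabelled data: no answers are supplied.
points = [(1.0, 1.0), (8.0, 8.0), (1.5, 2.0), (9.0, 7.5), (2.0, 1.5), (8.5, 9.0)]

cluster_centroids, cluster_ids = k_means(points, k=2)
print(cluster_ids)  # [0, 1, 0, 1, 0, 1]
```

The algorithm discovers two groups, but the cluster numbers carry no meaning of their own; interpreting what each group represents is left to a person or a later step.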

Supervised machine learning is typically associated with discriminative modelling, while unsupervised machine learning is more often used for generative modelling. A hybrid of the two approaches is known as semi-supervised machine learning.

Semi-supervised learning (SSL) trains a predictive model on a sizable amount of unlabelled data together with a modest amount of labelled data. The major benefit of this approach is that, starting from only a small labelled sample, one can quickly begin self-training with pseudo-labelling, in which the model's own confident predictions on unlabelled data are reused as labels. There are many variations, such as co-training, which trains two separate classifiers on two different views of the data. The choice among them depends on need and usage expectations.
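Self-training with pseudo-labelling can be sketched in a few lines. The example below is illustrative only: it assumes a nearest-centroid base model, a hypothetical confidence rule (the second-closest class must be at least `margin` times farther away than the closest), and made-up data with exactly two labelled points.

```python
from math import dist


def centroids_of(labelled):
    """Mean point per label from a list of (point, label) pairs."""
    groups = {}
    for point, label in labelled:
        groups.setdefault(label, []).append(point)
    return {
        label: tuple(sum(axis) / len(pts) for axis in zip(*pts))
        for label, pts in groups.items()
    }


def self_train(labelled, unlabelled, margin=2.0, max_rounds=10):
    """Repeatedly pseudo-label the points the current model is confident about."""
    labelled = list(labelled)
    pool = list(unlabelled)
    for _ in range(max_rounds):
        centroids = centroids_of(labelled)
        confident, remaining = [], []
        for point in pool:
            ranked = sorted(centroids, key=lambda lab: dist(centroids[lab], point))
            nearest, runner_up = ranked[0], ranked[1]
            # Hypothetical confidence rule: the runner-up must be much farther away.
            if dist(centroids[runner_up], point) >= margin * dist(centroids[nearest], point):
                confident.append((point, nearest))
            else:
                remaining.append(point)
        if not confident:
            break  # nothing new to learn from
        labelled.extend(confident)  # pseudo-labels join the training set
        pool = remaining
    return centroids_of(labelled), labelled, pool


# Hypothetical data: two labelled points and a larger unlabelled pool.
seed = [((1.0, 1.0), "a"), ((9.0, 9.0), "b")]
unlabelled = [(2.0, 1.5), (8.0, 8.5), (3.5, 3.0), (6.5, 7.0), (4.0, 3.6), (5.0, 5.0)]

final_centroids, final_labelled, leftover = self_train(seed, unlabelled)
print(len(final_labelled), leftover)
```

In this run, the point `(4.0, 3.6)` is too ambiguous in the first round and is only pseudo-labelled once the centroids have shifted, while `(5.0, 5.0)` sits midway between the classes and stays unlabelled. A stricter `margin` admits fewer pseudo-labels but reduces the risk of reinforcing early mistakes.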

| Learning scope | Supervised | Semi-supervised | Unsupervised |
| --- | --- | --- | --- |
| Input data | Labelled | Some labelled data with large amounts of unlabelled data | Unlabelled |
| Model feed | Input and output variables | Input and output variables with trained data set | Input variables only |
| Human involvement | Most involved | Some involvement | Least involved |
| Functional characteristics | Collection/preparation of qualitative data; Tedious time-consuming data labelling; More accurate results | Faster data preparation time; Self-training with minimal supervision | Most time-consuming learning model; Complexity increases with volume of data; Less accurate results |
| When to use | Look for known data pattern/analysis | Crawlers and content aggregation | Look for unknown data pattern/analysis |
| Popular algorithms | Support vector machine; Random forest; Naïve Bayes; Decision trees | FixMatch; MixMatch; Graph-based SSL algorithms | Gaussian mixture models; Principal component analysis; Frequent pattern growth; K-means |
| Common use cases | Demand forecasting; Price prediction; Sentiment analysis; Image recognition | Speech recognition; Content classification; Document stratification; Website annotation | Prep data for supervised learning; Anomaly detection; Recommendation systems; Customer segmentation |

Table 1: Characteristics of supervised, semi-supervised and unsupervised ML models

As their adoption and use cases evolve, each of these machine learning models is likely to advance considerably in its core capabilities. Semi-supervised learning is today being applied in many areas, from data aggregation to image and speech processing. Its ability to generalise, classifying data on the basis of a small number of labelled examples, is extremely attractive and has made it very popular among machine learning models. Table 1 shows how the three machine learning models differ and lists the types of algorithms that are typically used for various use cases. It should give an idea of where and how these models are most useful.

Most enterprise AI applications are written in popular languages with open source implementations, such as Python, Lisp, Java, C++, and Julia. Most enterprises embarking on the digital transformation journey find themselves leveraging AI in routine scenarios to increase operational efficiency and automate mundane processes. The good news is that several of the popular open source AI frameworks offer APIs in more than one language and support all three implementation models: supervised, semi-supervised, and unsupervised learning. Listed below are a few popular AI frameworks that are currently in use.

TensorFlow is perhaps the most popular AI framework. Developed by Google, it supports building and training neural networks in an easy and extensible setup, and it is among the most widely adopted deep learning frameworks.

PyTorch is a Python framework for building machine learning algorithms that can quickly evolve from prototype to production.

Keras is designed with the developer in mind. It offers a plug-and-play approach to building, training, and evaluating machine learning models quickly.

scikit-learn provides a high level of abstraction over common machine learning algorithms, making it suitable for prediction, classification, and statistical analysis of data.

Knowing what problem has to be solved, and which libraries provide the most support for it, will help decide which toolsets and frameworks to use to develop a machine learning module that gives the expected outcome.

Research on generative AI is ongoing, and the technology is today being applied in a wide range of industries, including the life sciences, healthcare, manufacturing, material sciences, media, entertainment, automotive, aerospace, military, and energy.

Whatever direction AI takes in the future, its influence is bound to last for a very long time. Today, researchers are also exploring organoid intelligence, an emerging field that aims to use organoids, which are three-dimensional lab-grown tissues derived from stem cells, for computing. As we embrace various forms of AI in our daily lives, one thing is certain: innovation in this field will continue to create cutting-edge technology.