Machine learning is everywhere and the field of art is no exception. This article explores applications of machine learning in painting and music.

Machine learning has emerged as a powerful tool for software developers. It has helped solve problems that were earlier considered unsolvable, and it has been adopted across a wide spectrum of domains. Besides the technical domains, many art forms have also benefited from ML. This article focuses on two of them: music and painting.

Various tools can assist in applying ML to the creative process. Some of them are:

- Magenta

- Google Deep Dream

- MuseNet

Many applications have been built with popular tools such as TensorFlow. This article has two major sections:

- The first section explores neural style transfer using TensorFlow.

- The second section explores Magenta and its application to music.

Neural style transfer

Composing one image in the style of another image is called neural style transfer. Using this technique, an ordinary picture can be rendered in the painting style of masters such as Picasso, Van Gogh or Monet. The major steps in neural style transfer are:

- Get an image

- Get a reference image

- Blend the images with ML

- Output the image with the transformed style

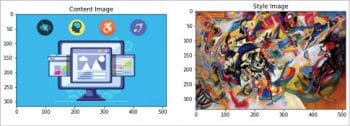

Figure 1 shows a source image (the banner image of an earlier article titled, ‘Is your website accessible and inclusive?’ in the March 2020 issue of OSFY available at https://www.opensourceforu.com/2020/03/is-your-website-accessible-and-inclusive/) and a reference style image (a Kandinsky painting).

The fast style transfer using TensorFlow Hub is shown in Figure 2.

The code snippet with TensorFlow Hub is as follows:

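    import tensorflow as tf
    import tensorflow_hub as hub

    # content_image, style_image and tensor_to_image() are assumed to be
    # defined beforehand, as in the complete demo linked later in this section.
    hub_module = hub.load('https://tfhub.dev/google/magenta/arbitrary-image-stylization-v1-256/1')
    stylized_image = hub_module(tf.constant(content_image), tf.constant(style_image))[0]
    tensor_to_image(stylized_image)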

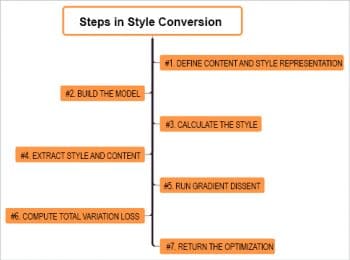

In the style conversion process, the following steps are involved:

- Defining content and style representations

- Building the model

- Calculating the style

- Extracting the style and content

- Running the gradient descent

- Total variation loss

- Re-running the optimisation
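
Two of the steps listed above, calculating the style and the total variation loss, reduce to short formulas. What follows is a minimal pure-Python sketch of both, not the TensorFlow implementation used in the demo. The function names `gram_matrix` and `total_variation` and the tiny hand-written inputs are illustrative assumptions. The sketch assumes the common formulation in which the style of a layer is summarised by the Gram matrix of its feature maps, and in which total variation sums the differences between neighbouring pixels so that noisy images are penalised.

```python
def gram_matrix(features):
    """Average outer product of channel vectors over all spatial positions.

    `features` is a list of positions, each holding C channel activations
    (a flattened H*W x C feature map; a hypothetical toy input here).
    """
    num_positions = len(features)
    num_channels = len(features[0])
    gram = [[0.0] * num_channels for _ in range(num_channels)]
    for position in features:
        for c in range(num_channels):
            for d in range(num_channels):
                gram[c][d] += position[c] * position[d]
    return [[value / num_positions for value in row] for row in gram]


def total_variation(image):
    """Sum of absolute differences between horizontally and vertically
    adjacent pixels of a 2-D, single-channel image."""
    height, width = len(image), len(image[0])
    horizontal = sum(abs(image[y][x + 1] - image[y][x])
                     for y in range(height) for x in range(width - 1))
    vertical = sum(abs(image[y + 1][x] - image[y][x])
                   for y in range(height - 1) for x in range(width))
    return horizontal + vertical


# Toy feature map: 2 spatial positions, 2 channels.
toy_features = [[1.0, 2.0], [3.0, 4.0]]
style = gram_matrix(toy_features)

# A flat image has no variation; a checkerboard changes at every neighbour.
flat = [[0.5, 0.5], [0.5, 0.5]]
checker = [[0.0, 1.0], [1.0, 0.0]]

print(style)
print(total_variation(flat), total_variation(checker))
```

During optimisation, the style loss would compare such Gram matrices for the generated image and the reference painting, while the total variation term would be added to the loss with a small weight.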

The final stylised output with the source image rendered using the reference style image is shown in Figure 4.

The complete code demo is available at https://colab.research.google.com/github/tensorflow/docs/blob/master/site/en/tutorials/generative/style_transfer.ipynb.

Magenta

Magenta is an open source project aimed at exploring the role of machine learning in the creative process (https://magenta.tensorflow.org/). There are two different distributions of Magenta, as listed below.

- Magenta – Python: This is a Python library that is built on TensorFlow. It has powerful methods to manipulate music and image data. Generating music by using ML models is the primary objective of Magenta – Python. It can be installed with pip.

- Magenta – JavaScript: This distribution is an open source API. It enables the use of pre-trained Magenta models inside the Web browser. TensorFlow.js is at the core of this API.

Magenta – Python

The first step is to install Magenta along with its dependencies. The commands below are meant for a notebook environment such as Colab, where a leading ! runs a shell command:

    print('Installing dependencies...')
    !apt-get update -qq && apt-get install -qq libfluidsynth1 fluid-soundfont-gm build-essential libasound2-dev libjack-dev
    !pip install -qU pyfluidsynth pretty_midi
    !pip install -qU magenta

Magenta fundamentally works on NoteSequences, which are abstract representations of a sequence of notes. For example, the following code snippet represents ‘Twinkle Twinkle Little Star’:

    import magenta.music as mm
    from magenta.music.protobuf import music_pb2

    twinkle_twinkle = music_pb2.NoteSequence()

    # Add the notes to the sequence.
    twinkle_twinkle.notes.add(pitch=60, start_time=0.0, end_time=0.5, velocity=80)
    twinkle_twinkle.notes.add(pitch=60, start_time=0.5, end_time=1.0, velocity=80)
    twinkle_twinkle.notes.add(pitch=67, start_time=1.0, end_time=1.5, velocity=80)
    twinkle_twinkle.notes.add(pitch=67, start_time=1.5, end_time=2.0, velocity=80)
    twinkle_twinkle.notes.add(pitch=69, start_time=2.0, end_time=2.5, velocity=80)
    twinkle_twinkle.notes.add(pitch=69, start_time=2.5, end_time=3.0, velocity=80)
    twinkle_twinkle.notes.add(pitch=67, start_time=3.0, end_time=4.0, velocity=80)
    twinkle_twinkle.notes.add(pitch=65, start_time=4.0, end_time=4.5, velocity=80)
    twinkle_twinkle.notes.add(pitch=65, start_time=4.5, end_time=5.0, velocity=80)
    twinkle_twinkle.notes.add(pitch=64, start_time=5.0, end_time=5.5, velocity=80)
    twinkle_twinkle.notes.add(pitch=64, start_time=5.5, end_time=6.0, velocity=80)
    twinkle_twinkle.notes.add(pitch=62, start_time=6.0, end_time=6.5, velocity=80)
    twinkle_twinkle.notes.add(pitch=62, start_time=6.5, end_time=7.0, velocity=80)
    twinkle_twinkle.notes.add(pitch=60, start_time=7.0, end_time=8.0, velocity=80)
    twinkle_twinkle.total_time = 8
    twinkle_twinkle.tempos.add(qpm=60)

    # This is a colab utility method that visualizes a NoteSequence.
    mm.plot_sequence(twinkle_twinkle)

    # This is a colab utility method that plays a NoteSequence.
    mm.play_sequence(twinkle_twinkle, synth=mm.fluidsynth)
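
The pitch values in the snippet above are MIDI note numbers, and the times are in seconds. As a rough illustration of what the numbers mean, the sketch below converts the opening phrase into note names and durations without needing Magenta at all. The `Note` dataclass and the `pitch_name` helper are hypothetical stand-ins, not part of the library, and the octave numbering assumes the widespread convention in which MIDI pitch 60 is written as C4.

```python
from dataclasses import dataclass

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


@dataclass
class Note:
    """Plain stand-in for one NoteSequence note (hypothetical helper type)."""
    pitch: int          # MIDI pitch number, 0-127
    start_time: float   # seconds
    end_time: float     # seconds
    velocity: int = 80  # loudness, same value as in the article's snippet


def pitch_name(pitch):
    """Name a MIDI pitch, assuming the convention that MIDI 60 is C4."""
    return f"{NOTE_NAMES[pitch % 12]}{pitch // 12 - 1}"


# Opening phrase of the melody, copied from the snippet above.
phrase = [
    Note(60, 0.0, 0.5), Note(60, 0.5, 1.0),
    Note(67, 1.0, 1.5), Note(67, 1.5, 2.0),
    Note(69, 2.0, 2.5), Note(69, 2.5, 3.0),
    Note(67, 3.0, 4.0),
]

names = [pitch_name(note.pitch) for note in phrase]
durations = [note.end_time - note.start_time for note in phrase]

print(names)      # note names of the phrase
print(durations)  # length of each note in seconds
```

Read this way, the phrase is C C G G A A G, with the last note held twice as long as the others. At the tempo of 60 quarter notes per minute set in the snippet, a half-second note is an eighth note.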

Machine learning with Magenta

The magenta.music library enables the developer to do the following:

- Create music using utilities in the Magenta library, by using abstractions.

- Generate music using ML models.

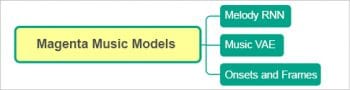

This library incorporates various ML models that are built using TensorFlow. Three of the prominent models are listed below.

- MelodyRNN: Given a note sequence, it will continue in the original style.

- MusicVAE: This is used to build novel NoteSequences. It can also be used to interpolate between sequences.

- Onsets and frames: This transcribes piano audio.

Melody RNN

Melody RNN is an LSTM-based language model for musical notes. Its objective is to continue a given sequence in the original style. There are two steps to using it:

- Initialising the model

- Continuing a sequence

The code snippet for initialising the model follows:

    print('Downloading model bundle. This will take less than a minute...')
    mm.notebook_utils.download_bundle('basic_rnn.mag', '/content/')

    # Import dependencies.
    from magenta.models.melody_rnn import melody_rnn_sequence_generator
    from magenta.models.shared import sequence_generator_bundle
    from magenta.music.protobuf import generator_pb2
    from magenta.music.protobuf import music_pb2

    # Initialize the model.
    print("Initializing Melody RNN...")
    bundle = sequence_generator_bundle.read_bundle_file('/content/basic_rnn.mag')
    generator_map = melody_rnn_sequence_generator.get_generator_map()
    melody_rnn = generator_map['basic_rnn'](checkpoint=None, bundle=bundle)
    melody_rnn.initialize()

Sequence continuation is controlled by two parameters: the number of steps and the temperature. Tweak them and observe how the resulting output changes:

    # Model options. Change these to get different generated sequences!
    input_sequence = twinkle_twinkle
    num_steps = 128  # change this for shorter or longer sequences
    temperature = 1.0  # the higher the temperature the more random the sequence.

    # Set the start time to begin on the next step after the last note ends.
    last_end_time = (max(n.end_time for n in input_sequence.notes)
                     if input_sequence.notes else 0)
    qpm = input_sequence.tempos[0].qpm
    seconds_per_step = 60.0 / qpm / melody_rnn.steps_per_quarter
    total_seconds = num_steps * seconds_per_step

    generator_options = generator_pb2.GeneratorOptions()
    generator_options.args['temperature'].float_value = temperature
    generate_section = generator_options.generate_sections.add(
        start_time=last_end_time + seconds_per_step,
        end_time=total_seconds)

    # Ask the model to continue the sequence.
    sequence = melody_rnn.generate(input_sequence, generator_options)

    mm.plot_sequence(sequence)
    mm.play_sequence(sequence, synth=mm.fluidsynth)
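
The timing arithmetic in the snippet above is easy to check by hand. The sketch below repeats it as a standalone function so the numbers can be inspected without loading a model. The function name `generation_window` is a hypothetical helper, and the value of 4 steps per quarter note is an assumption made for illustration; in the real code the value comes from `melody_rnn.steps_per_quarter`.

```python
def generation_window(last_end_time, qpm, steps_per_quarter, num_steps):
    """Reproduce the article's timing arithmetic.

    Returns (start_time, end_time, seconds_per_step) of the section the
    model is asked to generate.
    """
    seconds_per_step = 60.0 / qpm / steps_per_quarter
    total_seconds = num_steps * seconds_per_step
    start_time = last_end_time + seconds_per_step
    return start_time, total_seconds, seconds_per_step


# Values from the article: the melody ends at 8 s, tempo is 60 qpm, 128 steps.
# steps_per_quarter=4 is an assumed value for this illustration.
start, end, step = generation_window(
    last_end_time=8.0, qpm=60, steps_per_quarter=4, num_steps=128)

# Number of steps that fall inside the generated section.
new_steps = (end - start) / step

print(start, end, step)
print(new_steps)
```

Under these assumptions one step lasts 0.25 seconds and the section runs from 8.25 to 32 seconds. Because the end time is measured from the start of the whole sequence, the 8-second input melody counts towards `num_steps`, so fewer than 128 steps are newly generated.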

Music VAE

Music VAE (variational autoencoder) is a generative model that can be used to create novel sequences or to interpolate between existing sequences.
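
Interpolating between two sequences with a variational autoencoder generally means encoding each sequence into a latent vector, blending the two vectors, and decoding the blends back into music. The sketch below shows only the blending step, using plain linear interpolation on hand-written vectors. It is an illustration of the idea rather than Music VAE's own code: the function name `interpolate_latents` and the three-dimensional vectors are assumptions, and a library may well use a different blending scheme internally.

```python
def interpolate_latents(z_start, z_end, num_points):
    """Return num_points vectors evenly spaced on the line from z_start to z_end."""
    if num_points < 2:
        raise ValueError("num_points must be at least 2")
    points = []
    for i in range(num_points):
        t = i / (num_points - 1)  # 0.0 at the start, 1.0 at the end
        points.append([(1.0 - t) * a + t * b for a, b in zip(z_start, z_end)])
    return points


# Hypothetical latent codes of two melodies (real ones have many more dimensions).
z_a = [0.0, 2.0, -4.0]
z_b = [4.0, 0.0, 4.0]

path = interpolate_latents(z_a, z_b, 5)
for point in path:
    print(point)
```

Decoding each intermediate vector would yield a melody that gradually morphs from the first sequence into the second.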

The model initialisation can be done with the following code snippet:

    # Import dependencies.
    from magenta.models.music_vae import configs
    from magenta.models.music_vae.trained_model import TrainedModel

    # Initialize the model.
    print("Initializing Music VAE...")
    music_vae = TrainedModel(
        configs.CONFIG_MAP['cat-mel_2bar_big'],
        batch_size=4,
        checkpoint_dir_or_path='/content/mel_2bar_big.ckpt')

New sequence creation may be carried out as shown below:

    generated_sequences = music_vae.sample(n=2, length=80, temperature=1.0)

    for ns in generated_sequences:
        mm.plot_sequence(ns)
        mm.play_sequence(ns, synth=mm.fluidsynth)
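
Both the Melody RNN continuation and the Music VAE sampling shown in this article take a temperature parameter. A common way to apply temperature in generative models is to divide the model's raw scores by it before turning them into probabilities. The sketch below demonstrates that standard technique on made-up scores. Whether Magenta applies temperature in exactly this form is an assumption, and `softmax_with_temperature` is a hypothetical helper name.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores into probabilities, scaled by a temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


# Hypothetical scores for three candidate next notes.
logits = [2.0, 1.0, 0.0]

cool = softmax_with_temperature(logits, 0.5)     # sharper distribution
neutral = softmax_with_temperature(logits, 1.0)  # unchanged softmax
hot = softmax_with_temperature(logits, 2.0)      # flatter distribution

for name, probs in (("0.5", cool), ("1.0", neutral), ("2.0", hot)):
    print(name, [round(p, 3) for p in probs])
```

A low temperature concentrates probability on the highest-scoring note, which gives more predictable output. A high temperature spreads probability more evenly, which gives more surprising output.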

Magenta – JavaScript

As stated earlier, the JavaScript distribution of Magenta can be used right inside your Web browser. It provides many powerful features to manipulate music in the Web browser.

The Magenta music library can be included in a Web page as follows:

    <script src="https://cdn.jsdelivr.net/npm/@magenta/music@^1.0.0"></script>

Managing NoteSequences and playing them is rather simple in JavaScript:

    player = new mm.Player();
    player.start(TWINKLE_TWINKLE);
    player.stop();

The three machine learning models explained earlier with Magenta – Python are available in the JavaScript version as well, where the recurrent model is exposed as MusicRNN alongside MusicVAE and onsets and frames. A sample code snippet for MusicRNN is shown below:

    // Initialize the model.
    music_rnn = new mm.MusicRNN('https://storage.googleapis.com/magentadata/js/checkpoints/music_rnn/basic_rnn');
    music_rnn.initialize();

    // Create a player to play the sequence we'll get from the model.
    rnnPlayer = new mm.Player();

    function play() {
      if (rnnPlayer.isPlaying()) {
        rnnPlayer.stop();
        return;
      }

      // The model expects a quantized sequence, and ours was unquantized:
      const qns = mm.sequences.quantizeNoteSequence(ORIGINAL_TWINKLE_TWINKLE, 4);
      music_rnn
        .continueSequence(qns, rnn_steps, rnn_temperature)
        .then((sample) => rnnPlayer.start(sample));
    }

The values of rnn_steps and rnn_temperature can be used to modify the output.
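
The quantisation step in the snippet above converts note times from seconds into positions on a fixed grid, with the second argument (4) giving the number of steps per quarter note. The following Python sketch illustrates the idea with simple nearest-step rounding. It is a simplification, not the library's implementation: `quantize_time` is a hypothetical helper, and the slightly off-grid start time of the last note is invented to show the snapping.

```python
def quantize_time(seconds, qpm, steps_per_quarter):
    """Map a time in seconds to the nearest step index on the grid."""
    steps_per_second = qpm / 60.0 * steps_per_quarter
    return int(seconds * steps_per_second + 0.5)


# (pitch, start_time, end_time) in seconds at 60 quarter notes per minute.
# The start time 1.52 is deliberately a little late (made-up value).
notes = [(60, 0.0, 0.5), (60, 0.5, 1.0), (67, 1.0, 1.5), (67, 1.52, 2.0)]

quantized = [
    (pitch, quantize_time(start, 60, 4), quantize_time(end, 60, 4))
    for pitch, start, end in notes
]

for note in quantized:
    print(note)  # (pitch, start_step, end_step)
```

With four steps per quarter note at this tempo, each step lasts a quarter of a second, so a half-second note occupies two steps and the late note is pulled back onto the grid.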

There are many demos available at https://magenta.tensorflow.org/demos.

Magenta Studio provides a collection of music plugins that are built using Magenta models (https://magenta.tensorflow.org/studio). The variety of demos gives a good sense of what Magenta can do.

With the advent of advanced libraries such as Magenta, handling art with machine learning is becoming simpler and more effective. We can surely expect more to come in the near future.

To conclude, as the official documentation says, Magenta can really make your browser sing.