An understanding of multimedia applications is a must in today’s world, because many products are built on them: IP phones, IP TV, video conferencing applications, network video recorders and so on. These products have become essential to modern communication.

Multimedia applications have different requirements, and there are many challenges to be addressed. Broadly, multimedia applications fall into two types: interactive applications and non-interactive applications.

Interactive applications: These are more time-critical. They are more sensitive to network jitter and end-to-end delay, but some packet loss may be acceptable. Examples include Voice over IP and video conferencing apps.

Non-interactive applications: These are less time-critical. They are less sensitive to jitter and delay, but they tolerate packet loss less well. Applications like live streaming and streaming of stored video/audio fall into this category. Such applications buffer data on the client side to absorb network jitter, at the cost of some extra delay, so that the video plays smoothly. Many media players use this design.
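To make client-side buffering concrete, here is a minimal Python sketch of a fixed-delay playout buffer. It assumes each packet carries an RTP sequence number and timestamp (both covered in the RTP header section below) and that the timestamp clock rate is known; the class `PlayoutBuffer` and its methods are hypothetical names, not taken from any real player. Each packet is scheduled for playout a fixed delay after the first packet arrived, offset by its timestamp, so packets that arrive unevenly or out of order still come out evenly spaced and in order.

```python
from __future__ import annotations

import heapq


class PlayoutBuffer:
    """Hypothetical fixed-delay playout buffer (a simplified sketch).

    Playout time of a packet =
        arrival time of the first packet + delay
        + (packet timestamp - first packet timestamp) / clock_rate
    Timestamp and sequence-number wraparound are ignored for brevity.
    """

    def __init__(self, delay: float, clock_rate: int) -> None:
        self.delay = delay              # extra buffering delay, in seconds
        self.clock_rate = clock_rate    # RTP timestamp ticks per second
        self.late = 0                   # packets dropped for arriving too late
        self._heap: list[tuple[int, int, bytes]] = []
        self._base: tuple[float, int] | None = None  # (first arrival, first timestamp)

    def _playout_time(self, timestamp: int) -> float:
        base_arrival, base_ts = self._base
        return base_arrival + self.delay + (timestamp - base_ts) / self.clock_rate

    def push(self, seq: int, timestamp: int, arrival: float, payload: bytes) -> bool:
        """Queue a packet; return False (and drop it) if its playout time has passed."""
        if self._base is None:
            self._base = (arrival, timestamp)
        if arrival > self._playout_time(timestamp):
            self.late += 1
            return False
        # The heap key (timestamp, seq) restores the sender's order for reordered packets.
        heapq.heappush(self._heap, (timestamp, seq, payload))
        return True

    def pop_ready(self, now: float) -> list[bytes]:
        """Return, in order, the payloads of all packets due for playout at `now`."""
        ready = []
        while self._heap and self._playout_time(self._heap[0][0]) <= now:
            ready.append(heapq.heappop(self._heap)[2])
        return ready
```

A larger `delay` absorbs more jitter but adds latency, which is why interactive applications keep this buffer small while players of stored media can afford a generous one. Real players usually adapt the delay on the fly, which this sketch does not attempt.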

Real-time Transport Protocol (RTP)

RTP is an Internet standard protocol (RFC 3550) for the transport of real-time data such as audio and video over a network. RTP itself does not reserve bandwidth or guarantee quality of service. Its companion, RTCP (Real-time Transport Control Protocol), is a control protocol used to monitor quality of service, to synchronise different media streams and to convey information about the participants in a group session. RTP and RTCP traditionally use consecutive transport layer ports: an even port for RTP and the next higher, odd port for RTCP. RTP is mostly used over UDP/IP.
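The port convention can be illustrated with a minimal Python sketch that binds a UDP socket on an even port for RTP and a second one on the next (odd) port for RTCP. The function name `bind_rtp_rtcp_pair`, the loopback address and the 50000 to 50100 search range are arbitrary choices made for illustration.

```python
import socket


def bind_rtp_rtcp_pair(host: str = "127.0.0.1", start: int = 50000, end: int = 50100):
    """Hypothetical helper: bind RTP on an even UDP port and RTCP on the next one.

    Scans [start, end) for the first free even/odd pair and returns both sockets.
    """
    first = start + (start % 2)  # round up to an even port number
    for rtp_port in range(first, end, 2):
        rtp = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
        rtcp = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
        try:
            rtp.bind((host, rtp_port))       # even port: RTP data
            rtcp.bind((host, rtp_port + 1))  # next odd port: RTCP
            return rtp, rtcp
        except OSError:
            # One of the two ports is taken; release both and try the next pair.
            rtp.close()
            rtcp.close()
    raise OSError(f"no free RTP/RTCP port pair in {start}-{end}")
```

Binding the two ports together matters because a client typically advertises them as a single range (for example `50000-50001`) when it negotiates transport with a server.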

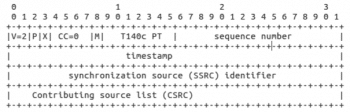

Figure 1 shows the layout of an RTP header.

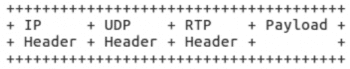

Figure 2 shows what the raw data looks like.

V (version) 2 bits: The first two bits identify the RTP version. The version in use today, defined in RFC 3550, is 2.

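P (padding) 1 bit: If the padding bit is set, the packet contains one or more padding bytes at the end, after the payload; they are not part of the payload. The last padding byte holds the number of padding bytes, including itself. Padding is needed, for example, by encryption algorithms that work on fixed block sizes.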

X (extension) 1 bit: If this bit is set, the fixed header is followed by exactly one header extension. Application developers use it when they need to pass extra, application-specific information to their clients.

M (marker) 1 bit: This bit flags significant events in the stream to the receiver; its exact meaning is defined by the payload format. For video, for example, it commonly marks the last packet of a frame.

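PT (Payload Type) 7 bits: PT identifies the format of the payload, such as the codec. If the payload format changes, the receiver can detect it from this field and take appropriate action. Static payload type numbers are listed in the RTP audio/video profile, RFC 3551; numbers 96 to 127 are dynamic and are bound to a codec out of band, typically through SDP.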

Sequence number 16 bits: This number increments by one for each RTP packet sent, starting from a random value. Because RTP mostly runs over UDP, which can lose and reorder packets, the receiver uses it to put packets back in sequence before reconstructing frames, and to detect loss. RTP itself does not retransmit lost packets; how loss is handled depends on the receiver’s implementation.

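Timestamp 32 bits: This reflects the sampling instant of the first byte of media in the packet. It is not wall clock time: it counts ticks of a media-specific clock (for example, 90 kHz for video and 8 kHz for G.711 audio), starting from a random value. The mapping to wall clock time is carried separately, in RTCP sender reports. The timestamp matters most when network jitter is high: a receiver can buffer a few frames and play them out according to their timestamp differences, so playback stays smooth even though packets arrive unevenly. Many players, including VLC, work this way.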

SSRC (Synchronisation source) 32 bits: This identifies the source of the received data. A receiver may receive packets from many sources in the same session, and each source has its own identifier, chosen randomly so that no two sources in a session share it. This is used mainly in applications such as video conferencing.

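CSRC (Contributing source list) 0 to 15 items, 32 bits each: When a mixer combines several streams into one, this list carries the SSRCs of the sources that contributed to the payload. The number of entries is given by the 4-bit CC (CSRC count) field, which sits between the X and M bits.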
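The field layout above can be checked with a short Python parser. This is a sketch that assumes the input is one complete RTP packet as defined in RFC 3550 (for example, the payload of a single UDP datagram); `RtpPacket` and `parse_rtp` are hypothetical names, and the contents of a header extension are skipped rather than interpreted.

```python
from __future__ import annotations

import struct
from dataclasses import dataclass


@dataclass
class RtpPacket:
    version: int
    padding: bool
    extension: bool
    marker: bool
    payload_type: int
    sequence: int
    timestamp: int
    ssrc: int
    csrcs: list[int]
    payload: bytes


def parse_rtp(data: bytes) -> RtpPacket:
    """Parse an RTP packet laid out as in RFC 3550, section 5.1 (sketch)."""
    if len(data) < 12:
        raise ValueError("shorter than the 12-byte fixed header")
    # Network byte order: two single bytes, 16-bit sequence, 32-bit timestamp, 32-bit SSRC.
    b0, b1, seq, ts, ssrc = struct.unpack("!BBHII", data[:12])
    version = b0 >> 6              # V: top two bits
    padding = bool(b0 & 0x20)      # P
    extension = bool(b0 & 0x10)    # X
    cc = b0 & 0x0F                 # CC: number of CSRC entries
    marker = bool(b1 & 0x80)       # M
    payload_type = b1 & 0x7F       # PT
    if version != 2:
        raise ValueError(f"unexpected RTP version {version}")

    offset = 12
    if len(data) < offset + 4 * cc:
        raise ValueError("truncated CSRC list")
    csrcs = list(struct.unpack(f"!{cc}I", data[offset:offset + 4 * cc]))
    offset += 4 * cc

    if extension:
        # Extension header: 16 profile-defined bits, then its length in 32-bit words.
        if len(data) < offset + 4:
            raise ValueError("truncated header extension")
        _profile_bits, words = struct.unpack("!HH", data[offset:offset + 4])
        offset += 4 + 4 * words

    end = len(data)
    if padding:
        end -= data[-1]            # last byte counts the padding bytes, itself included
    if end < offset:
        raise ValueError("header or padding longer than the packet")
    return RtpPacket(version, padding, extension, marker, payload_type,
                     seq, ts, ssrc, csrcs, data[offset:end])
```

The masks show how the fields are packed: V, P, X and CC share the first byte, M and PT share the second, and everything after the fixed 12 bytes has a variable length that the parser must compute.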

Real-time Transport Control Protocol (RTCP)

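This is the control protocol used alongside RTP. RTCP packets include sender reports, which carry the sender’s packet and byte counts and the correspondence between RTP timestamps and wall clock time, and receiver reports, which carry reception statistics such as packet loss, the highest sequence number received and inter-arrival jitter. RTCP does not change SSRC values. Instead, its source description (SDES) packets carry a persistent canonical name (CNAME) that lets a receiver recognise when several SSRCs, for example the audio and video streams one participant sends at the same time, belong to the same source, so that they can be synchronised.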
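The receiver-side statistics behind a receiver report can be sketched in Python along the lines of the algorithms in the appendix of RFC 3550: loss is derived from sequence numbers (allowing for 16-bit wraparound), and inter-arrival jitter is a running average of the change in transit time, J = J + (|D| - J)/16, measured in timestamp units. `ReceiverStats` is a hypothetical name; the sketch tracks a single SSRC, assumes the media clock rate is known, and leaves out the RFC’s probation and resynchronisation logic.

```python
from __future__ import annotations


class ReceiverStats:
    """Hypothetical per-SSRC reception statistics, simplified from RFC 3550."""

    def __init__(self, clock_rate: int) -> None:
        self.clock_rate = clock_rate       # RTP timestamp ticks per second
        self.base_seq: int | None = None   # first sequence number seen
        self.max_seq = 0                   # highest sequence number seen (16-bit)
        self.cycles = 0                    # 65536 * number of sequence wraparounds
        self.received = 0
        self.jitter = 0.0                  # in timestamp units, as RTCP reports it
        self._last_transit: float | None = None

    def on_packet(self, seq: int, rtp_timestamp: int, arrival: float) -> None:
        """Record one packet; `arrival` is the local receive time in seconds."""
        if self.base_seq is None:
            self.base_seq = self.max_seq = seq
        else:
            # Forward distance modulo 2^16; small forward steps advance max_seq.
            delta = (seq - self.max_seq) & 0xFFFF
            if 0 < delta < 0x8000:
                if seq < self.max_seq:     # the 16-bit counter wrapped around
                    self.cycles += 1 << 16
                self.max_seq = seq
            # Otherwise it is a duplicate or a late, reordered packet.
        self.received += 1

        # Relative transit time in timestamp units; D is its change between packets.
        transit = arrival * self.clock_rate - rtp_timestamp
        if self._last_transit is not None:
            d = abs(transit - self._last_transit)
            self.jitter += (d - self.jitter) / 16
        self._last_transit = transit

    @property
    def expected(self) -> int:
        # Extended highest sequence number minus the first one, plus one.
        return self.cycles + self.max_seq - self.base_seq + 1

    @property
    def lost(self) -> int:
        # Cumulative packets lost (may go negative if duplicates arrive).
        return self.expected - self.received
```

For example, with an 8 kHz clock, packets 65534, 65535, 1 and 2 (packet 0 never arrives, the last one 20 ms late) give 5 expected, 1 lost, and a jitter of 10 timestamp units after the late packet.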

Senders and receivers can use this information to build adaptive streaming features: for example, a sender that sees rising loss or jitter in the receiver reports can lower its bit rate. For this to work, both sides have to monitor network conditions and adjust accordingly.

RTSP (Real Time Streaming Protocol)

RTSP is an application layer protocol used to control the delivery of data with real-time properties. It works like a remote control for streaming: it is a client-server protocol for multimedia applications, with a set of standard methods for starting, pausing, fast-forwarding and rewinding a stream, depending on what the server supports. RTSP controls the stream but leaves the delivery of the media to protocols such as RTP and RTCP. RTSP messages can travel over both TCP and UDP, but TCP is by far the most common choice.

Some of the methods RTSP supports are listed below.

OPTIONS: The client asks the server which methods it supports, so that the client knows which RTSP requests it can use.

DESCRIBE: The client asks the server for a description of the media identified by the URL. This is usually where authentication takes place: a protected server answers the first DESCRIBE with 401 Unauthorized, and the client retries with Basic or Digest credentials. The response body is typically an SDP (Session Description Protocol) description, which tells the client what the presentation contains: the video and audio tracks, the transport profile to use (such as RTP/AVP), their payload formats and codecs, and codec-specific parameters such as bit rate.

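SETUP: The client tells the server, in the Transport header, how it wants a media stream to be delivered, for example RTP over UDP to a given pair of client ports. The server creates a session, allocates resources for it such as sockets on the chosen ports, and replies with a Session identifier and the transport parameters it settled on, including source and destination addresses and ports.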

PLAY: This command asks the server to start sending video and audio packets. The media can be delivered either on separate RTP/RTCP ports negotiated during SETUP, or interleaved on the same TCP connection that carries the RTSP requests.

PAUSE: The server temporarily stops sending packets, but the RTSP session and its resources are kept on both the server and the client, so a later PLAY can resume the stream.

TEARDOWN: This command closes the session and frees its resources on both sides.
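To see what these methods look like on the wire, here is a small Python sketch of the client side of an RTSP/1.0 exchange. It formats requests with an incrementing CSeq header, echoes the Session identifier returned by SETUP, and tracks a simplified version of the client state machine from RFC 2326 (Init, Ready, Playing). `RtspClientSession`, `build_request` and `parse_response` are hypothetical names, and there is no networking: a real client would send the bytes from `request()` over a TCP connection to the server and feed each reply to `on_response()`.

```python
from __future__ import annotations

# Simplified client-side transitions after RFC 2326, Appendix A.
# Methods not listed (OPTIONS, DESCRIBE, ...) leave the state unchanged.
TRANSITIONS = {
    ("Init", "SETUP"): "Ready",
    ("Init", "TEARDOWN"): "Init",
    ("Ready", "SETUP"): "Ready",
    ("Ready", "PLAY"): "Playing",
    ("Ready", "TEARDOWN"): "Init",
    ("Playing", "PLAY"): "Playing",
    ("Playing", "PAUSE"): "Ready",
    ("Playing", "TEARDOWN"): "Init",
}


def build_request(method: str, url: str, cseq: int, headers: dict[str, str]) -> bytes:
    """Format an RTSP/1.0 request: request line, CSeq, extra headers, blank line."""
    lines = [f"{method} {url} RTSP/1.0", f"CSeq: {cseq}"]
    lines += [f"{name}: {value}" for name, value in headers.items()]
    return ("\r\n".join(lines) + "\r\n\r\n").encode("ascii")


def parse_response(raw: bytes) -> tuple[int, dict[str, str], bytes]:
    """Split an RTSP response into status code, lower-cased headers and body."""
    head, _, body = raw.partition(b"\r\n\r\n")
    status_line, *header_lines = head.decode("ascii").split("\r\n")
    code = int(status_line.split(" ", 2)[1])      # "RTSP/1.0 200 OK" -> 200
    headers = {}
    for line in header_lines:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    return code, headers, body


class RtspClientSession:
    """Hypothetical client-side bookkeeping: CSeq numbering, Session id, state."""

    def __init__(self, url: str) -> None:
        self.url = url
        self.cseq = 0
        self.session_id: str | None = None
        self.state = "Init"

    def request(self, method: str, headers: dict[str, str] | None = None,
                url: str | None = None) -> bytes:
        self.cseq += 1                     # every request gets a new CSeq
        hdrs = dict(headers or {})
        if self.session_id is not None:    # echo the Session id after SETUP
            hdrs.setdefault("Session", self.session_id)
        return build_request(method, url or self.url, self.cseq, hdrs)

    def on_response(self, method: str, raw: bytes) -> tuple[int, dict[str, str], bytes]:
        code, headers, body = parse_response(raw)
        if 200 <= code < 300:
            if "session" in headers:       # e.g. "12345678;timeout=60"
                self.session_id = headers["session"].split(";")[0]
            self.state = TRANSITIONS.get((self.state, method), self.state)
            if method == "TEARDOWN":
                self.session_id = None
        return code, headers, body
```

The optional `url` argument exists because clients usually send SETUP to a per-track URL taken from the DESCRIBE response rather than to the presentation URL.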

I have covered only a minimal set of methods. RTSP defines several others, such as ANNOUNCE, GET_PARAMETER, SET_PARAMETER, RECORD and REDIRECT, which you can explore as your requirements demand.
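The SDP body returned by DESCRIBE is plain text with one `<type>=<value>` field per line. The Python sketch below extracts only what a simple client needs before sending SETUP: each `m=` (media) line and its `a=rtpmap` and `a=control` attributes. `SdpMedia` and `parse_sdp_media` are hypothetical names, and everything else in SDP (connection lines, session-level attributes, `a=fmtp` codec parameters) is ignored.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class SdpMedia:
    kind: str                    # "video", "audio", ...
    port: int
    protocol: str                # e.g. "RTP/AVP"
    payload_types: list[int]
    rtpmap: dict[int, str] = field(default_factory=dict)  # PT -> "encoding/clock rate"
    control: str | None = None   # a=control URL (often relative) used for this track's SETUP


def parse_sdp_media(sdp: str) -> list[SdpMedia]:
    """Extract m= sections and their a=rtpmap / a=control attributes (sketch)."""
    media: list[SdpMedia] = []
    for line in sdp.splitlines():
        if len(line) < 2 or line[1] != "=":
            continue                       # not a "<type>=<value>" line
        kind, value = line[0], line[2:]
        if kind == "m":
            # m=<media> <port>[/<count>] <proto> <fmt> ...
            fields = value.split()
            port = int(fields[1].split("/")[0])
            media.append(SdpMedia(fields[0], port, fields[2],
                                  [int(pt) for pt in fields[3:]]))
        elif kind == "a" and media:        # session-level attributes are skipped
            name, _, rest = value.partition(":")
            if name == "rtpmap":
                pt, _, encoding = rest.partition(" ")
                media[-1].rtpmap[int(pt)] = encoding
            elif name == "control":
                media[-1].control = rest
    return media
```

For instance, a track described by `m=video 0 RTP/AVP 96` followed by `a=rtpmap:96 H264/90000` is reported as dynamic payload type 96 carrying H.264 with a 90 kHz timestamp clock.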

Configuring the media server

This is a simple tutorial for setting up your own RTSP server; anything more advanced requires writing code. Live555 is an open source library for RTSP streaming that is widely used in industry. VLC’s RTSP client is built on Live555. The library is single-threaded (everything runs from one event loop) and is not thread-safe. Because it is open source, you can download the source code and compile it for your environment.

How to compile source code for a Linux workstation

Download and extract the latest tarball of live555 from http://www.live555.com/. Move to the extracted folder and run the following commands.

- ./genMakefiles linux

- make all

- sudo make install (optional; installs the libraries and binaries system-wide)

- cd mediaServer && ./live555MediaServer

Before running live555MediaServer, place the files you want to stream in the directory you run it from (the mediaServer folder, next to its Makefile). That directory becomes the media server’s root. Once the server is running, you will find that it can stream only a limited set of file formats, but that is enough to understand how things work. To stream any other format, you have to write the code for it yourself.

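VLC includes an RTSP client, so you can test the server by opening the stream URL in VLC (Media > Open Network Stream), e.g., rtsp://<your IP address>/<filename>. The server prints the exact URL when it starts; if it cannot use the default RTSP port 554 (for example, when run without root privileges), it falls back to port 8554, so the URL becomes rtsp://<your IP address>:8554/<filename>.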