Facebook says these algorithms were designed based on its experience detecting abuse across billions of posts on its platform.

Facebook is open sourcing two algorithms that it uses to detect harmful content, such as child exploitation, terrorist propaganda, or graphic violence.

PDQ, a photo-matching algorithm, and TMK+PDQF, a video-matching technology, have been released on GitHub.

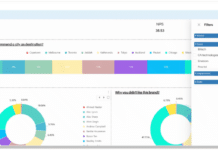

“These technologies create an efficient way to store files as short digital hashes that can determine whether two files are the same or similar, even without the original image or video,” Facebook explains in a blog post.
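To illustrate how short hashes can flag "the same or similar" files without the originals, here is a minimal sketch. It assumes each file has already been reduced to a fixed-length perceptual hash stored as a hex string. PDQ hashes are commonly described as 256-bit values compared by Hamming distance, the number of bits that differ. The function names and the `MATCH_THRESHOLD` value are hypothetical, for illustration only. This does not call Facebook's released code, and a real deployment would tune the threshold.

```python
# Minimal sketch: comparing two perceptual hashes by Hamming distance.
# Assumption: hashes are equal-length hex strings (e.g. 64 hex chars = 256 bits).
# Function names and MATCH_THRESHOLD are hypothetical, not part of PDQ's API.

MATCH_THRESHOLD = 31  # hypothetical cutoff; real systems tune this value


def hamming_distance(hex_a: str, hex_b: str) -> int:
    """Count the bits that differ between two equal-length hex-encoded hashes."""
    if len(hex_a) != len(hex_b):
        raise ValueError("hashes must have the same length")
    # XOR leaves a 1 bit wherever the two hashes disagree; count those bits.
    return bin(int(hex_a, 16) ^ int(hex_b, 16)).count("1")


def is_similar(hex_a: str, hex_b: str, threshold: int = MATCH_THRESHOLD) -> bool:
    """Treat two hashes as a match if they differ in at most `threshold` bits."""
    return hamming_distance(hex_a, hex_b) <= threshold


if __name__ == "__main__":
    original = "f" * 64            # a made-up 256-bit hash
    near_copy = "f" * 63 + "0"     # differs in the last 4 bits
    unrelated = "0" * 64           # differs in every bit
    print(hamming_distance(original, near_copy))  # 4
    print(is_similar(original, near_copy))        # True
    print(is_similar(original, unrelated))        # False
```

Because only the hashes are compared, two organizations can check content against each other's lists of known harmful material without exchanging the underlying images or videos.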

Facebook hopes that these algorithms will help other tech companies, nonprofit organizations and individual developers “to more easily identify abusive content and share hashes — or digital fingerprints — of different types of harmful content.”

Partnership with academic institutions

Over the years, Facebook has contributed hundreds of open-source projects, but this is the first time it has shared any photo- or video-matching technology.

In addition to the open-source release, Facebook has formed a partnership with the University of Maryland, Cornell University, the Massachusetts Institute of Technology and the University of California, Berkeley to research new techniques for detecting intentional adversarial manipulations of videos and photos designed to circumvent safety systems.

Facebook announced the news at its fourth annual cross-industry Child Safety Hackathon in Menlo Park, California.

The two-day event brings together nearly 80 engineers and data scientists from Technology Coalition partner companies and others to develop new technologies that help safeguard children.