
A research group at the Stanford Intelligent Systems Laboratory (SISL) has open-sourced its NeuralVerification.jl project. The library helps verify properties of trained deep neural networks, such as robustness and safety.

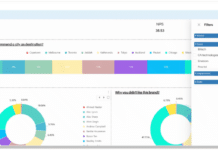

The library is now available on GitHub and contains implementations of various methods for verifying deep neural networks. In general, these methods check whether a neural network satisfies certain input-output constraints, that is, whether every input in a specified input set produces an output inside a specified output set. The verification methods are divided into five categories:

- Reachability methods: ExactReach, MaxSens, Ai2
- Primal optimization methods: NSVerify, MIPVerify, ILP
- Dual optimization methods: Duality, ConvDual, Certify
- Search and reachability methods: ReluVal, DLV, FastLin, FastLip
- Search and optimization methods: Sherlock, BaB, Planet, Reluplex

The implementations run in Julia 1.0.
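To make "input-output constraints" concrete, here is a minimal sketch of the simplest reachability idea. It is written in Python rather than Julia and does not use NeuralVerification.jl's API. The sketch pushes a box of inputs through a small ReLU network with interval arithmetic and checks whether the resulting output box lies inside the allowed output box. If that check fails, it bisects the widest input dimension and retries. The function names, the three verdicts ("holds", "violated", "unknown") and the bisection rule are illustrative assumptions. They are loosely in the spirit of the search-and-reachability family, not a reimplementation of any method listed above.

```python
# Hypothetical sketch: interval-based reachability with input bisection.
# A network is a list of (W, b) layers; ReLU follows every layer except the last.

def affine_bounds(lo, hi, W, b):
    """Propagate the input box [lo, hi] through x -> W x + b."""
    new_lo, new_hi = [], []
    for row, bias in zip(W, b):
        # A positive weight pairs with the same bound; a negative weight swaps them.
        l = bias + sum(w * (lj if w >= 0 else hj) for w, lj, hj in zip(row, lo, hi))
        h = bias + sum(w * (hj if w >= 0 else lj) for w, lj, hj in zip(row, lo, hi))
        new_lo.append(l)
        new_hi.append(h)
    return new_lo, new_hi

def output_bounds(layers, lo, hi):
    """Over-approximate the set of outputs reachable from the input box."""
    for i, (W, b) in enumerate(layers):
        lo, hi = affine_bounds(lo, hi, W, b)
        if i < len(layers) - 1:
            # ReLU is monotone, so applying it to both bounds stays sound.
            lo = [max(v, 0.0) for v in lo]
            hi = [max(v, 0.0) for v in hi]
    return lo, hi

def forward(layers, x):
    """Evaluate the network on one concrete input."""
    for i, (W, b) in enumerate(layers):
        x = [bias + sum(w * xj for w, xj in zip(row, x)) for row, bias in zip(W, b)]
        if i < len(layers) - 1:
            x = [max(v, 0.0) for v in x]
    return x

def verify(layers, in_lo, in_hi, out_lo, out_hi, depth=10):
    """Check that every input in [in_lo, in_hi] maps into [out_lo, out_hi]."""
    lo, hi = output_bounds(layers, in_lo, in_hi)
    if all(l >= ol for l, ol in zip(lo, out_lo)) and all(h <= oh for h, oh in zip(hi, out_hi)):
        return "holds"  # the whole over-approximation fits inside the output box
    # Sample the box center: a concrete output outside the box is a counterexample.
    center = [(l + h) / 2.0 for l, h in zip(in_lo, in_hi)]
    y = forward(layers, center)
    if any(v < ol or v > oh for v, ol, oh in zip(y, out_lo, out_hi)):
        return "violated"
    if depth == 0:
        return "unknown"  # bounds too loose and the search budget is spent
    # Split the widest input dimension and verify both halves.
    d = max(range(len(in_lo)), key=lambda j: in_hi[j] - in_lo[j])
    left_hi = list(in_hi)
    left_hi[d] = center[d]
    right_lo = list(in_lo)
    right_lo[d] = center[d]
    left = verify(layers, in_lo, left_hi, out_lo, out_hi, depth - 1)
    if left == "violated":
        return left
    right = verify(layers, right_lo, in_hi, out_lo, out_hi, depth - 1)
    if right == "violated":
        return right
    return "holds" if left == right == "holds" else "unknown"

# Example network: y = relu(x) + relu(-x), i.e. |x|.
layers = [([[1.0], [-1.0]], [0.0, 0.0]), ([[1.0, 1.0]], [0.0])]
print(output_bounds(layers, [-1.0], [1.0]))             # ([0.0], [2.0])
print(verify(layers, [-1.0], [1.0], [0.0], [1.0]))      # holds
print(verify(layers, [-1.0], [1.0], [0.0], [0.5]))      # violated
```

On inputs in [-1, 1], a single interval pass bounds |x| only by [0, 2], so the property y ≤ 1 is proven after one bisection. The property y ≤ 0.5 is reported as violated once the sampled center x = -0.75 gives y = 0.75. A "holds" verdict is a proof for the whole input box. "Unknown" only means the interval bounds stayed too loose within the chosen depth, which is the gap that tighter reachability and optimization methods try to close.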

A related research paper is expected to be published at the end of January 2019 at the earliest, as reported by Synced.

Upcoming software to help drones make decisions in milliseconds

Research at the Stanford Intelligent Systems Laboratory (SISL) focuses on advanced algorithms and analytical methods for the design of robust decision-making systems. The lab is supported by numerous external sponsors, including the Federal Aviation Administration (FAA), MIT Lincoln Laboratory, NASA, the National Science Foundation, Bosch and SAP.

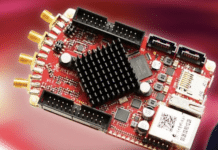

SISL has worked with Suave to build drones and other aircraft. The team is reportedly developing software that lets drones make decisions within milliseconds while flying through crowded spaces such as urban areas. If successful, this technology could benefit large companies like Amazon and Google, which could use drones to deliver packages from their online stores.