Intel has released a Kubernetes-native deep learning platform, dubbed Nauta, on GitHub under the Apache 2.0 licence.

Intel describes Nauta as a multi-user, distributed computing environment for running deep learning (DL) model training experiments on Intel Xeon Scalable processor-based systems.

The new open-source platform lets data scientists and developers use Kubernetes and Docker to run distributed deep learning at scale, CBR reported.

Intel Nauta’s client software runs on Ubuntu 16.04, Red Hat 7.5, macOS High Sierra and Windows 10. According to its GitHub repo, the platform supports both batch and streaming inference within a single environment.

Intel’s Jason Knight, speaking at the company’s AI DevCon in Munich on Wednesday, described Nauta as “Kubernetes-native” and said it had been built for ease of use. He also announced the open sourcing of nGraph, a C++ library and runtime/compiler suite for deep learning ecosystems.

Intel Nauta

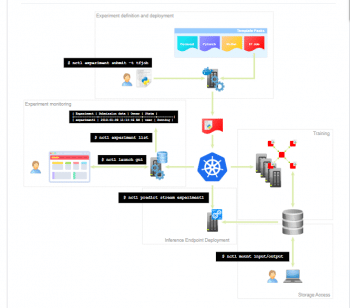

The Nauta software provides a multi-user, distributed computing environment for running deep learning model training experiments. Intel says experiment results can be viewed and monitored through a command-line interface, a web UI and/or TensorBoard.

Developers can work with existing data sets, proprietary data or data downloaded from online sources, and can create public or private folders to make collaboration between teams easier.

Nauta runs on the industry-leading Kubernetes and Docker platforms for scalability and ease of management.

Template packs for various DL frameworks and tools are available on the platform and can be customised. This means developers can run multi-node deep learning training experiments “without all the systems overhead and scripting needed with standard container environments.”