ML.NET is an open source, cross-platform machine learning (ML) framework for .NET. It lets you use C# or F# to integrate custom machine learning models into your .NET applications. The advantage of using it is that you don’t need prior expertise in developing or tuning machine learning models to get started!

Artificial intelligence (AI) is helping industries and consumers transform the way they work. Ubiquitous data, connectivity and the emergence of hyper-scale cloud platforms have opened the doors to innovative ways of solving business challenges. However, for AI to transform businesses, experts and developers from all backgrounds need technologies that let them leverage its power.

Microsoft has been working towards the democratisation of AI technologies by aligning its research efforts towards solving problems that empower developers and data scientists to achieve more. The central theme of Microsoft’s cloud and AI products is to deliver a platform that is productive, hybrid, intelligent, trusted and open. The Microsoft AI ecosystem spans intelligent apps (like Cortana) as well as deep learning toolkits and services, which developers can use to create bespoke intelligent solutions.

Why should developers care about AI?

Cloud technologies allow APIs for intelligent services to be invoked from applications, regardless of the programming language they are written in. This is a faster and more productive way for applications to tap into the power of intelligent platforms. Developers are uniquely positioned to integrate AI with their solutions, because they can identify the key integration points where AI adds value and makes apps feature-rich. Features like locating certain objects in an image or scanning for text in a mobile app can be implemented through API calls to cloud AI services. It is therefore also important to identify which libraries and APIs are best suited to app development and can enhance productivity without requiring a lot of code to be refactored.

Machine learning libraries and APIs have always appealed to data scientists and developers, as they help achieve results through the configuration of the APIs being invoked rather than writing the code from the ground up, thus saving time.

While the majority of AI-specific solutions are written in Python and R, a lot of SDKs allow developers to create and/or consume ML models in .NET Core (Microsoft’s open source version of .NET), Java, JavaScript and other languages. This presents a significant opportunity for application developers to leverage these platforms to learn about ML and AI through their favourite languages.

Dotnet Core and machine learning

Dotnet Core (aka .NET Core) is a free, cross-platform, open source developer platform for building many different types of applications. It supports writing apps for Windows, Linux and macOS, for mobile devices, the Web, gaming, IoT and ML, in a language of your choice: C#, F# or Visual Basic. These languages have been around for a while and remain popular with developers as application development platforms. While .NET has predominantly been used in the frontend, backend or middleware layers of a solution, the introduction of .NET for ML adds a new dimension to how application development platforms are evolving.

If you are skilled in open source platforms or have always been a .NET developer on Windows, this is the right time for you to start exploring this cross-platform, open source platform through proven programming languages in the stack.

While AI solutions have mostly been developed in Python and R, .NET Core introduces a new paradigm in which developing and using models is powered by a framework called ML.NET. The framework also has built-in implementations of popular algorithms such as logistic regression, linear regression, support vector machines (SVMs) and many others that are commonly used for model development in ML.

ML.NET enables you to develop and integrate custom ML models into your applications even while you are still learning the basics of ML. An open source, cross-platform and extensible framework for .NET developers, ML.NET also powers Microsoft features such as Windows Hello, Bing Ads and PowerPoint Design Ideas, among others. This article focuses on ML.NET version 0.6.

ML.NET capabilities and features

There are primarily three tasks in any ML experiment (or application).

- Data processing

- Creating a training workflow

- Creating a scoring workflow

ML.NET provides namespaces with built-in classes and functions for each of these tasks. For example, the Microsoft.ML.Runtime.Data and Microsoft.ML.Core.Data namespaces contain classes that help you to extract, load and transform data into the program’s memory for further processing by the ML engine. Similarly, classes in the Microsoft.ML.StaticPipe namespace help you to build a pipeline of the data transformation and training steps that produce a model. Finally, there are classes that help you to score, or make predictions, on the basis of the trained model. The Microsoft.ML.Runtime.Data namespace also contains classes that output performance metrics for the trained model, which help you to understand how well it performs against training and test data. For example, to measure the performance of a binary logistic regression classifier (a model that outputs only two classes), we look at metrics such as the F1 score, accuracy and AUC.
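Computing the binary metrics mentioned above by hand from predicted and actual labels can make it easier to interpret what ML.NET reports. The sketch below is only an illustration. The BinaryMetrics class is hypothetical and not part of ML.NET. AUC is left out because it needs the model’s raw scores rather than only the predicted labels.

```csharp
using System;

// Hypothetical helper (not ML.NET API): computes accuracy, precision,
// recall and F1 score from actual and predicted binary labels.
public static class BinaryMetrics
{
    public static (double Accuracy, double Precision, double Recall, double F1) Compute(
        bool[] actual, bool[] predicted)
    {
        if (actual.Length != predicted.Length || actual.Length == 0)
            throw new ArgumentException("Inputs must be non-empty and of equal length.");

        int tp = 0, tn = 0, fp = 0, fn = 0;
        for (int i = 0; i < actual.Length; i++)
        {
            if (predicted[i] && actual[i]) tp++;        // true positive
            else if (!predicted[i] && !actual[i]) tn++; // true negative
            else if (predicted[i]) fp++;                // predicted positive, actually negative
            else fn++;                                  // predicted negative, actually positive
        }

        double accuracy = (double)(tp + tn) / actual.Length;
        double precision = tp + fp == 0 ? 0 : (double)tp / (tp + fp);
        double recall = tp + fn == 0 ? 0 : (double)tp / (tp + fn);
        // F1 is the harmonic mean of precision and recall.
        double f1 = precision + recall == 0 ? 0 : 2 * precision * recall / (precision + recall);
        return (accuracy, precision, recall, f1);
    }
}
```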

Given that ML.NET is built on the same platform as other .NET Core technologies, you can use it inside any of your .NET Core apps: console apps, Web apps (ASP.NET Core), class libraries, or apps running inside Docker containers on Linux.

Time to get your hands dirty!

The ML.NET documentation on Microsoft Docs has a few walk-throughs to get started with some of the scenarios. We will look at using the Logistic Regression classifier on the MNIST data set.

The MNIST data set is like the ‘Hello World!’ of your machine learning journey. In almost any ML technology that you pick, you will come across an exercise that helps you understand the process using this data set.

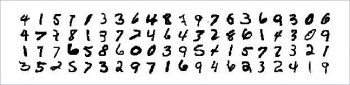

The MNIST data set contains images of handwritten digits from 0 to 9. An example of what the source images look like is shown in Figure 1.

Identifying the digit in an image is a multi-class classification task, and algorithms such as the Random Forest and Naïve Bayes classifiers handle multi-class classification natively. Logistic Regression, on the other hand, is at its core a binary classifier. It models the relationship between the label and one or more features in the data set and outputs a probability between 0 and 1. That probability is thresholded to give a binary outcome. Because it is simple and easy to implement, it makes a good baseline for benchmarking your ML tasks.
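As a minimal sketch of that idea, assuming plain batch gradient descent on the log loss, binary logistic regression can be written in a few lines of C#. ML.NET’s logistic regression trainers use the L-BFGS optimizer instead. The BinaryLogisticRegression class, its learning rate and its epoch count are illustrative choices and not part of ML.NET.

```csharp
using System;

// Hypothetical illustration (not ML.NET API): binary logistic regression
// trained with batch gradient descent on the average log loss.
public class BinaryLogisticRegression
{
    private readonly double[] _weights;
    private double _bias;

    public BinaryLogisticRegression(int featureCount)
    {
        _weights = new double[featureCount];
    }

    // The logistic (sigmoid) function squashes any real number into (0, 1).
    public static double Sigmoid(double z) => 1.0 / (1.0 + Math.Exp(-z));

    // Estimated probability that the label is true for these features.
    public double PredictProbability(double[] x)
    {
        double z = _bias;
        for (int j = 0; j < _weights.Length; j++) z += _weights[j] * x[j];
        return Sigmoid(z);
    }

    // Thresholding the probability at 0.5 gives the binary outcome.
    public bool Predict(double[] x) => PredictProbability(x) >= 0.5;

    public void Train(double[][] xs, bool[] ys, double learningRate = 0.5, int epochs = 1000)
    {
        int n = xs.Length;
        for (int epoch = 0; epoch < epochs; epoch++)
        {
            var gradW = new double[_weights.Length];
            double gradB = 0;
            for (int i = 0; i < n; i++)
            {
                // For log loss, the gradient with respect to z is (probability - label).
                double error = PredictProbability(xs[i]) - (ys[i] ? 1.0 : 0.0);
                for (int j = 0; j < _weights.Length; j++) gradW[j] += error * xs[i][j];
                gradB += error;
            }
            // Step against the average gradient.
            for (int j = 0; j < _weights.Length; j++) _weights[j] -= learningRate * gradW[j] / n;
            _bias -= learningRate * gradB / n;
        }
    }
}
```

For example, suppose you train it on the one-feature points 0, 1, 2 and 3, labelled false, false, true and true. The resulting model predicts false for 0 and true for 3.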

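Prima facie, because logistic regression is a binary classifier, it doesn’t seem suitable for classifying the MNIST data set. However, when it is trained using the One-vs-All (OvA) method, you get one classifier per class, each trained to separate that class from all the others. To make a prediction, you run every classifier and pick the class whose classifier returns the highest score. The MultiClassLogisticRegression trainer used later in this article takes a closely related approach. It is a multinomial (softmax) logistic regression that scores all ten classes within a single model.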
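The OvA prediction step described above boils down to an argmax over the per-class scores. The sketch below assumes the ten binary classifiers have already produced their scores. The OneVsAll class and its methods are hypothetical. BinaryLabelsFor shows how the training labels for classifier k are derived: every example of class k becomes positive, and everything else becomes negative.

```csharp
using System;
using System.Linq;

// Hypothetical illustration (not ML.NET API) of one-vs-all classification.
public static class OneVsAll
{
    // scores[k] is the score from the binary classifier trained to recognize class k.
    // The predicted class is the one whose classifier scored highest.
    public static int PredictClass(double[] scores)
    {
        if (scores == null || scores.Length == 0)
            throw new ArgumentException("At least one score is required.");

        int best = 0;
        for (int k = 1; k < scores.Length; k++)
            if (scores[k] > scores[best]) best = k;
        return best;
    }

    // Training labels for classifier k: true for class k, false for every other class.
    public static bool[] BinaryLabelsFor(int k, int[] labels) =>
        labels.Select(label => label == k).ToArray();
}
```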

Preparing yourself

If you have not yet installed the .NET Core SDK, do so before proceeding any further. You can find compatibility and support information at https://www.microsoft.com/net/download/dotnet-core/2.1.

Let’s create a directory called ‘mnist’ anywhere you like, and execute the following commands to create a .NET Core console app inside that directory and to add the ML.NET framework to our project:

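```shell
dotnet new console
# Pin ML.NET to 0.6.0, the version this article targets; later versions changed the API
dotnet add package Microsoft.ML --version 0.6.0
```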

We can now move ahead on our journey to machine learning in .NET. Fire up your favourite text editor and open the ‘Program.cs’ file in your project directory. You will see the following ‘Hello World!’ code. Let’s start with this and change it as we move forward:

```csharp
using System;

namespace mnist
{
    class Program
    {
        static void Main(string[] args)
        {
            Console.WriteLine("Hello World!");
        }
    }
}
```

If this is the first time you are building a .NET Core app, you can build and run it by executing ‘dotnet run’ in the project directory. Moving forward, the first order of business is to add using directives for all the namespaces that we will need to get this project running. Replace the existing using System; line at the top of the Program.cs file with the following lines:

```csharp
using System;
using System.IO;
using System.Linq;
using System.Threading.Tasks;
using Microsoft.ML;
using Microsoft.ML.Core.Data;
using Microsoft.ML.Runtime.Api;
using Microsoft.ML.Runtime.Data;
using Microsoft.ML.StaticPipe;
```

The next thing we need to set up is a few variables pointing to our training and test data sets, as well as to the path where we will save our ML model once we are done. Add the following lines inside the Program class, just before the Main() method:

```csharp
// Paths to the training data, the test data and the saved model, all under ./Data
static readonly string _datapath = Path.Combine(Environment.CurrentDirectory, "Data", "optdigits-train.csv");
static readonly string _testdatapath = Path.Combine(Environment.CurrentDirectory, "Data", "optdigits-test.csv");
static readonly string _modelpath = Path.Combine(Environment.CurrentDirectory, "Data", "Model.zip");
```

Data processing

You may have noticed that we have referred to a directory called Data in the code snippet above. This directory will host our MNIST data files as well as the trained model once we are done. Please download the MNIST 8×8 data set* from the UCI Machine Learning Repository at http://archive.ics.uci.edu/ml/datasets/Optical+Recognition+of+Handwritten+Digits

Click on the ‘Data Folder’ link on the page and download the following files into a directory called Data inside your project directory:

- optdigits.tra

- optdigits.tes

Just to make it easier for us, we will rename these files to optdigits-train.csv and optdigits-test.csv. If you haven’t yet looked at them, this is a good time to do so.

The MNIST data set we are using contains 65 columns of numbers. The first 64 columns in each row are integer values in the range 0 to 16. These values were calculated by dividing 32 x 32 bitmaps into non-overlapping blocks of 4 x 4 and counting the ON pixels in each block, which produces an 8 x 8 input matrix. The last column in each row is the digit represented by the values in the first 64 columns. The first 64 columns are our features, and the last column is our label, which we will predict using our ML model once we are finished with this project.
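The preprocessing that produced these features can be sketched as follows. The sketch assumes the original 32 x 32 bitmap is available as a two-dimensional bool array, with true marking an ON pixel. The DigitPreprocessing class is hypothetical, and you do not need it for this project, because the downloaded files are already preprocessed. It returns 64 counts stored row by row, each between 0 and 16.

```csharp
using System;

// Hypothetical illustration: reduce a 32 x 32 binary bitmap to 8 x 8 block counts.
public static class DigitPreprocessing
{
    public static int[] ToBlockCounts(bool[,] bitmap)
    {
        const int Size = 32, Block = 4, Grid = Size / Block; // Grid == 8
        if (bitmap.GetLength(0) != Size || bitmap.GetLength(1) != Size)
            throw new ArgumentException("Expected a 32 x 32 bitmap.");

        var features = new int[Grid * Grid]; // 64 counts, stored row by row
        for (int row = 0; row < Size; row++)
            for (int col = 0; col < Size; col++)
                if (bitmap[row, col])
                    // Each pixel belongs to exactly one non-overlapping 4 x 4 block.
                    features[(row / Block) * Grid + col / Block]++;
        return features;
    }
}
```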

It’s time to set up the data classes that we will use when training and testing our ML model. To do that, add the following classes inside the mnist namespace in our Program.cs file:

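```csharp
// Input data: 64 pixel-count features followed by the digit label
public class MNISTData
{
    [Column("0-63")]
    [VectorType(64)]
    public float[] PixelValues;

    [Column("64")]
    public string Number;
}

// Model output: the predicted digit and the per-class scores
public class NumberPrediction
{
    [ColumnName("PredictedLabel")]
    public string NumberLabel;

    [ColumnName("Score")]
    public float[] Score;
}
```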

The MNISTData class is our input data class. It contains the definitions of our features and the label. We use the Column attribute with a range of 0-63 to treat the first 64 columns as an array of floats called PixelValues. We also use the VectorType attribute to declare a size of 64 for this vector, since ML.NET trainers require vectors of a known size. Next up is the label in the last column, which we call Number.

The NumberPrediction class is used for the output of our ML model. The PredictedLabel column is of string type and is mapped to the NumberLabel field. The Score column holds one score per class and is mapped to a float array called Score.
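To inspect the data files outside ML.NET, you can parse a single line into the same shape as MNISTData. This is a minimal sketch: the OptDigitsRow helper is hypothetical and is not used by the rest of the project. Splitting on commas, rather than relying on fixed character offsets, keeps working even if a label has more than one character.

```csharp
using System;
using System.Globalization;
using System.Linq;

// Hypothetical helper: parse one line of optdigits-train.csv or optdigits-test.csv.
public static class OptDigitsRow
{
    public static (float[] PixelValues, string Number) Parse(string line)
    {
        string[] parts = line.Trim().Split(',');
        if (parts.Length != 65)
            throw new FormatException($"Expected 65 columns but found {parts.Length}.");

        // Columns 0-63 are the features; parse them culture-invariantly.
        float[] pixels = parts.Take(64)
            .Select(p => float.Parse(p, CultureInfo.InvariantCulture))
            .ToArray();

        // Column 64 is the label, kept as text just like MNISTData.Number.
        return (pixels, parts[64]);
    }
}
```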

Creating a training workflow

With these basics in place, we can move ahead with building our ML pipeline. Remove or comment out the ‘Hello World!’ line in the Main() method and add the following lines:

```csharp
// Create an ML.NET environment and train the model with it
var env = new LocalEnvironment();
var model = Train(env);
```

We are creating an ML environment using LocalEnvironment and passing that to the Train method. Add the Train() method after the Main() method with the following code:

```csharp
public static dynamic Train(LocalEnvironment env)
{
    try
    {
        var classification = new MulticlassClassificationContext(env);

        // Describe the CSV layout: columns 0-63 are float features, column 64 is the label text
        var reader = TextLoader.CreateReader(env, ctx => (
                PixelValues: ctx.LoadFloat(0, 63),
                Number: ctx.LoadText(64)),
            separator: ',',
            hasHeader: false);

        var learningPipeline = reader.MakeNewEstimator()
            // Convert the text label to a key and expose the pixels as Features
            .Append(r => (
                label: r.Number.ToKey(),
                Features: r.PixelValues))
            // Train a multiclass logistic regression model
            .Append(r => (
                r.label,
                Predictions: classification.Trainers.MultiClassLogisticRegression(r.label, r.Features)))
            // Map the predicted key back to its original text value
            .Append(r => (
                PredictedLabel: r.Predictions.predictedLabel.ToValue(),
                Score: r.Predictions.score));

        // Read the training data and fit the pipeline to it
        var data = reader.Read(new MultiFileSource(_datapath));
        var model = learningPipeline.Fit(data).AsDynamic;
        return model;
    }
    catch (Exception ex)
    {
        Console.WriteLine(ex.Message);
        return null;
    }
}
```

LocalEnvironment is a class that creates an ML.NET environment for local execution. In the Train() method, we create a classification context of type MulticlassClassificationContext, since our little project deals with classifying each image as one of ten digits. We then use the TextLoader class to describe the structure of our data files, as explained in the Data processing section of this article. The reader is lazy: creating it reads nothing from disk, and the data is only loaded once we call Read() and train on it.
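To make the idea of lazy reading concrete, here is a plain C# analogy. It is not how TextLoader works internally. An iterator method written with yield return runs none of its body, not even the line that opens the file, until something enumerates it. The LazyReading class and its ReadRows method are hypothetical names used only for this illustration.

```csharp
using System.Collections.Generic;
using System.IO;

// Hypothetical illustration of deferred (lazy) reading with a C# iterator.
public static class LazyReading
{
    public static IEnumerable<string[]> ReadRows(string path)
    {
        // None of this runs when ReadRows is called; it runs on the first MoveNext().
        using (var reader = new StreamReader(path))
        {
            string line;
            while ((line = reader.ReadLine()) != null)
                yield return line.Split(','); // one row at a time, on demand
        }
    }
}
```

Calling ReadRows with a path that does not exist therefore succeeds, and the FileNotFoundException only surfaces once the rows are enumerated.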

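The learning pipeline is built by calling the reader’s MakeNewEstimator() method. In ML.NET, an estimator represents a step that has not been trained yet, such as a data transformation or an ML algorithm. Calling Fit() on it with data produces a transformer that can then be applied to new data. We start by mapping the Number and PixelValues columns of our input data class to a label column (converted to a key type with ToKey()) and a Features column, respectively. Next, we add the MultiClassLogisticRegression trainer to the pipeline. Finally, we define the structure of the predictions we expect, converting the predicted key back to its original text value with ToValue().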
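The estimator/transformer relationship can be sketched in plain C# under clearly stated assumptions. The KeyMappingEstimator and KeyMappingTransformer types below are hypothetical and are not ML.NET’s API. When Fit is called, the estimator learns the distinct label values. The transformer it returns then maps labels to integer keys and back, similar in spirit to the ToKey() and ToValue() calls in the Train() method. For simplicity, this sketch sorts the labels and uses zero-based keys.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical "estimator": Fit learns the distinct label values from data.
public sealed class KeyMappingEstimator
{
    public KeyMappingTransformer Fit(IEnumerable<string> labels) =>
        new KeyMappingTransformer(labels.Distinct().OrderBy(l => l, StringComparer.Ordinal).ToArray());
}

// Hypothetical "transformer": applies the learned mapping in both directions.
public sealed class KeyMappingTransformer
{
    private readonly string[] _values;               // key -> label text
    private readonly Dictionary<string, int> _keys;  // label text -> key

    public KeyMappingTransformer(string[] values)
    {
        _values = values;
        _keys = new Dictionary<string, int>();
        for (int i = 0; i < values.Length; i++) _keys[values[i]] = i;
    }

    public int KeyCount => _values.Length;
    public int ToKey(string value) => _keys[value];
    public string ToValue(int key) => _values[key];
}
```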

After this is done, we read the data from our training data file and perform the training task by calling the Fit method. This step will produce a model that we can save and reuse for making predictions with our testing and/or real-world inputs.

You can run this program now to see how the training task is performed by running dotnet run in the project directory. If no errors pop up during the execution of this program, our training was successful; our model will be returned, and can be saved for reuse later.

You can add the following code to the Main() method to save the model to disk:

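```csharp
// Save the trained model (skipped if training failed and Train returned null)
if (model != null)
{
    using (var stream = File.Create(_modelpath))
    {
        model.SaveTo(env, stream);
    }
}
```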

After these changes, you should be able to run the program and see the model file (Model.zip) saved in the Data directory for reuse, if needed.

Testing the model

Continuing with this program, we will use our test data from optdigits-test.csv to see how the predictions from our model turn out. Add the following line to the Main() method, after the code that saves the model:

```csharp
Test(env);
```

Then, add the following Test() method to your project:

```csharp
public static void Test(LocalEnvironment env)
{
    // Load the model that was saved after training
    ITransformer loadedModel;
    using (var f = new FileStream(_modelpath, FileMode.Open))
        loadedModel = TransformerChain.LoadFrom(env, f);

    // Create the prediction function once and reuse it for every test row
    var predictor = loadedModel.MakePredictionFunction<MNISTData, NumberPrediction>(env);

    using (var reader = new StreamReader(_testdatapath))
    {
        int iCount = 0, cCount = 0;
        while (!reader.EndOfStream)
        {
            var line = reader.ReadLine();
            // Everything before the last comma is pixel data; the last character is the label
            var pixels = line.Substring(0, line.Length - 2);
            var number = line.Substring(line.Length - 1, 1);
            var testValue = Array.ConvertAll(pixels.Split(','), float.Parse);

            var prediction = predictor.Predict(new MNISTData() { PixelValues = testValue, Number = number });
            var predictedNum = int.Parse(prediction.NumberLabel);

            if (predictedNum != int.Parse(number))
            {
                iCount++;
                Console.WriteLine($"{iCount} Incorrect prediction {predictedNum} for {number}");
            }
            else
            {
                cCount++;
                Console.WriteLine($"{cCount} Correct prediction {predictedNum} for {number}");
            }
        }
        Console.WriteLine($"Incorrect predictions: {iCount}");
        Console.WriteLine($"Correct predictions: {cCount}");
    }
}
```

In this method, we load the saved model and create a prediction function from it. We then read the test data set line by line, converting each line into an instance of the MNISTData input data class defined earlier. The prediction is returned as a predicted label, and since that value is a string, we convert it to an int to get the actual digit the model is predicting.

In the rest of the code in this method, we compare the predicted value against the actual number in the last column of our data set to check whether each prediction is correct. We also write each result to the console, so it is easy to see how our model is performing. The following is truncated output from one of our runs, for your reference:

```text
$ cd mnist
$ dotnet run
...
1713 Correct prediction 8 for 8
1714 Correct prediction 9 for 9
1715 Correct prediction 8 for 8
Incorrect predictions: 82
Correct predictions: 1715
```

Our test data set has 1,797 samples in total, so these numbers indicate an accuracy of about 95.4 per cent (1,715 ÷ 1,797), which is a good result.
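A single accuracy figure hides which digits the model confuses. As a minimal sketch, the hypothetical ConfusionMatrix class below tallies (actual, predicted) pairs in a 10 x 10 grid. In the Test() method, you could call Add() at the point where the correct and incorrect counters are updated.

```csharp
using System;

// Hypothetical helper: a 10 x 10 confusion matrix for the digits 0-9.
public sealed class ConfusionMatrix
{
    private readonly int[,] _counts = new int[10, 10]; // indexed [actual, predicted]
    public int Total { get; private set; }

    public void Add(int actual, int predicted)
    {
        _counts[actual, predicted]++;
        Total++;
    }

    // The diagonal holds the correct predictions.
    public int Correct
    {
        get
        {
            int sum = 0;
            for (int d = 0; d < 10; d++) sum += _counts[d, d];
            return sum;
        }
    }

    public double Accuracy => Total == 0 ? 0 : (double)Correct / Total;

    // Fraction of images of this digit that were classified correctly (its recall).
    public double DigitAccuracy(int digit)
    {
        int row = 0;
        for (int p = 0; p < 10; p++) row += _counts[digit, p];
        return row == 0 ? 0 : (double)_counts[digit, digit] / row;
    }

    public int Count(int actual, int predicted) => _counts[actual, predicted];
}
```

For the run shown above, 1,715 correct predictions out of 1,797 would give an Accuracy of about 0.954. The off-diagonal counts would show which digits account for the 82 mistakes.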

We have taken care to ensure you are able to follow the steps in this article, build this model from scratch and test it successfully.

Going forward, you could find ways to push the limits and increase the accuracy of this model, for example by trying a different classifier and/or using the higher-resolution MNIST data set.

*This dataset was made available courtesy of E. Alpaydin and C. Kaynak, Department of Computer Engineering, Bogazici University, 80815, Istanbul, Turkey. We would also like to acknowledge Dua, D. and Karra Taniskidou, E. (2017). UCI Machine Learning Repository [http://archive.ics.uci.edu/ml]. Irvine, CA: University of California, School of Information and Computer Science.