Docker is an open source platform that’s used to build, ship and run distributed services. Kubernetes is an open source orchestration platform for automating deployment, scaling and the operations of application containers across clusters of hosts. Microservices structure an application into several modular services. Here’s a quick look at why these are so useful today.

The Linux operating system is now very stable and can cleanly sandbox processes so that they execute in isolation; it also offers better namespace control. This has led to the development and enhancement of various container technologies. Some of the features/characteristics of the Linux OS that help container development are:

- Only the required libraries get installed in their respective containers.

- Custom containers can be built easily.

- In the early days, LXC (Linux Containers) was very popular and laid the foundation for the development of various other container technologies.

- Namespaces and control groups (cgroups) provide process-level isolation.

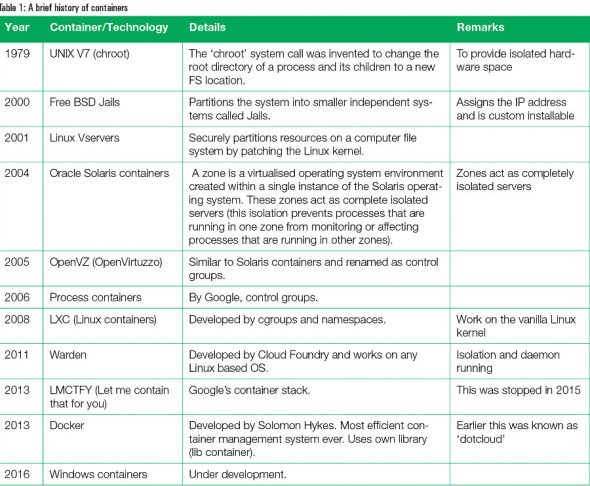

A brief history of containers is outlined in Table 1.

Some of the advantages of containers are:

- Containers are more lightweight compared to virtual machines (VMs).

- Docker, one of the de facto container standards, builds on this container platform; it runs as a daemon inside the Linux OS.

- Containers make our applications portable.

- Containers can be easily shipped, built and deployed.

Containers

Containers are an encapsulation of an application with its dependencies. They look like lightweight VMs, but they are not: a container shares the kernel of its host and holds only an isolated user-space environment, in which the application runs.

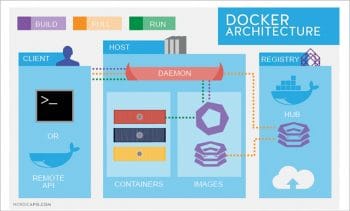

The architecture diagram of the Docker container platform in Figure 1 shows how the individual components are interconnected.

Various components of the Docker-container architecture

The Docker daemon (generally referred to as dockerd) listens for Docker API requests and manages Docker objects such as images, containers, networks and volumes. A daemon can also communicate with other daemons to manage Docker services.

The Docker client (the ‘docker’ command) is the primary way in which many users interact with Docker. A single client can communicate with more than one daemon.

A Docker registry stores Docker images. Docker Hub and Docker Cloud are public registries that anybody can use, and Docker is configured to look for images on Docker Hub, by default.

Note: When we use the docker pull or docker run commands, the required images are pulled from the configured registry. When we use the docker push command, the image is pushed to our configured registry.
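As a rough illustration of this note, the sketch below pulls an image, gives it a second name and pushes it. The image alpine:3.19 and the registry host registry.example.com are assumptions chosen for illustration, and the push assumes a prior docker login against that registry.

```shell
# Illustrative names only: a public image and a hypothetical private registry.
IMAGE="alpine:3.19"
TARGET="registry.example.com/demo/alpine:3.19"

if command -v docker >/dev/null 2>&1; then
  docker pull "$IMAGE"            # fetched from the configured registry (Docker Hub by default)
  docker tag "$IMAGE" "$TARGET"   # add a name that points at the hypothetical registry
  docker push "$TARGET"           # upload; needs a prior 'docker login' to that registry
else
  echo "docker not found, skipping the registry example"
fi
```

The registry that receives the push is determined by the host part of the image name, which is why the image is retagged before pushing.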

When we use Docker, we create and use images, containers, networks, volumes, plugins and various other such objects. These are called Docker objects.

Thus containers are fundamentally changing the way we develop, distribute and run software on a daily basis. These developments and advantages of containers help in the advancement of microservices technology. Microservices are small services running as separate processes, where each service is aligned with a separate business capability. Listing the advantages of microservices over monolithic applications helps to show why they are attractive. In microservices:

- One single application is broken down into a network of processes.

- All these services communicate using REST or message queues (MQ).

- Applications are loosely coupled.

- The scaling up of applications is a lot easier.

- There is very good isolation between these services, so when one fails, the others can continue.

What is Kubernetes and why should one use it?

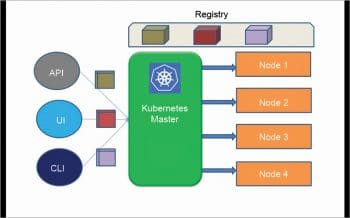

Kubernetes is an open source orchestrator for deploying containerised applications (microservices). It is also defined as a platform for creating, deploying and managing various distributed applications. These applications may be of different sizes and shapes. Kubernetes was originally developed by Google to deploy scalable, reliable systems in containers via application-oriented APIs. Kubernetes is suitable not only for Internet-scale companies but also for cloud-native companies, of all sizes. Some of the advantages of Kubernetes are listed below:

- Kubernetes provides the software necessary to build and deploy reliable and scalable distributed systems.

- Kubernetes supports container APIs with the following benefits:

  - Velocity: a number of things can be shipped quickly, while also staying available.

  - Scaling: a decoupled architecture, built around load balancers, makes it possible to scale services consistently.

  - Abstraction: applications built and deployed on top of Kubernetes can be ported across different environments, because developers are decoupled from specific machines. This reduces the overall number of machines required, and thus the cost of CPUs and RAM.

  - Efficiency: a developer’s test environment can be created cheaply and quickly on a Kubernetes cluster, and the cluster can be shared, thus reducing the cost of development.

- Kubernetes continuously takes action to ensure that the current state matches the desired state.
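The idea of a desired state can be sketched with a Deployment manifest. The example below only writes the manifest to a file; the name kuard, the label app: kuard, the replica count and the image reference are all assumptions for illustration.

```shell
# Write a Deployment that declares a desired state of three replicas.
cat > kuard-deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kuard
spec:
  replicas: 3                # desired state: three running pods
  selector:
    matchLabels:
      app: kuard
  template:
    metadata:
      labels:
        app: kuard
    spec:
      containers:
        - name: kuard
          image: gcr.io/kuar-demo/kuard-amd64:blue   # assumed image reference
          ports:
            - containerPort: 8080
EOF

# Against a running cluster, the manifest would be submitted with:
#   kubectl apply -f kuard-deployment.yaml
```

Once such a Deployment is applied, deleting one of its pods should lead Kubernetes to start a replacement, because the current state (two pods) no longer matches the declared one (three).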

The various components involved in Kubernetes

Pods: These are groups of containers; a pod can bring together container images developed by different teams into a single deployable unit.

Namespaces: These provide isolation and access control for each microservice, to control the degree to which other services interact with it.

Kubernetes services: These provide load balancing, discovery, isolation and naming of microservices.

Ingress: These are objects that provide an easy-to-use front-end (an externalised API surface area).
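To make two of these components concrete, the sketch below writes a manifest that declares a namespace and a service inside it. The names demo and kuard, the app: kuard selector and the port numbers are assumptions for illustration.

```shell
# Write a Namespace and a Service that load-balances across pods labelled app=kuard.
cat > kuard-service.yaml <<'EOF'
apiVersion: v1
kind: Namespace
metadata:
  name: demo                 # hypothetical namespace
---
apiVersion: v1
kind: Service
metadata:
  name: kuard
  namespace: demo
spec:
  selector:
    app: kuard               # traffic goes to pods carrying this label
  ports:
    - port: 80               # port exposed by the service
      targetPort: 8080       # port the containers listen on
EOF

# Against a running cluster, the manifest would be submitted with:
#   kubectl apply -f kuard-service.yaml
```

Inside the cluster, other pods in the same namespace could then reach the service by its name, kuard, rather than by the address of any individual pod.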

Running and managing containers using Kubernetes

As described earlier, Kubernetes is a platform for creating, deploying and managing distributed applications. Most of these applications take an input, process the data and provide the results as output. Most of them consist of a language runtime, libraries (such as libc and libssl) and source code.

A container image is a binary package that encapsulates all of the files necessary to run an application inside an OS container. The most widely used format is the Docker image format, which has been standardised by the Open Container Initiative (OCI) as the OCI image format.

The container types are of two categories:

- System containers, which try to imitate virtual machines and may run the full boot processes

- Application containers, which run single applications

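These images can be run from the CLI using the docker run command, for example with the -d (detached) and --name options.

Docker was historically the default container runtime used by Kubernetes, as it provides an API for creating application containers on both Linux and Windows based operating systems. Since version 1.24, Kubernetes works with CRI-compatible runtimes such as containerd instead, which run the same images.

kuard is a demo application that listens on port 8080, and can also be explored using a Web interface.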
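A detached, named container with a published port might be started as sketched below. The image reference is an assumption (it is the one commonly used for the kuard demo), and the host port 8080 is chosen only to mirror the container port.

```shell
NAME="kuard"
IMAGE="gcr.io/kuar-demo/kuard-amd64:blue"   # assumed image reference

if command -v docker >/dev/null 2>&1; then
  # -d runs the container in the background, --name gives it a stable name,
  # --publish maps host port 8080 to container port 8080.
  docker run -d --name "$NAME" --publish 8080:8080 "$IMAGE"
  docker ps --filter "name=$NAME"           # confirm that it is running
  # Clean up when done:
  #   docker stop "$NAME" && docker rm "$NAME"
else
  echo "docker not found, skipping the docker run example"
fi
```

With the container running, the Web interface should be reachable at http://localhost:8080 on the host.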

Docker provides many features by exposing the underlying ‘cgroups’ technology provided by the Linux kernel. With this, the following resource usage can be managed and monitored:

- Memory resources management and limitation

- CPU resources management and limitation
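As a minimal sketch of these limits, the docker run options --memory and --cpus cap what a container may consume. The container name, the limit values and the image reference below are assumptions for illustration.

```shell
NAME="kuard-limited"
IMAGE="gcr.io/kuar-demo/kuard-amd64:blue"   # assumed image reference
MEMORY_LIMIT="200m"                         # at most 200 MB of memory
CPU_LIMIT="0.5"                             # at most half of one CPU

if command -v docker >/dev/null 2>&1; then
  docker run -d --name "$NAME" \
    --memory "$MEMORY_LIMIT" \
    --cpus "$CPU_LIMIT" \
    "$IMAGE"
  docker stats --no-stream "$NAME"          # one-off snapshot of CPU and memory usage
else
  echo "docker not found, skipping the resource limit example"
fi
```

Docker translates these options into cgroup settings for the container, so a process that exceeds the memory limit is stopped by the kernel rather than starving its neighbours.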

Deploying a Kubernetes cluster

Kubernetes can be installed on the three major cloud providers: Amazon’s AWS, Microsoft’s Azure and Google’s Cloud Platform (GCP). Each cloud provider also offers its own container service platform.

Kubernetes can also be installed locally using Minikube. Minikube runs a single-node Kubernetes cluster on a local machine, and is intended mainly for experimentation, local development and learning.

Kubernetes can also be run on IoT platforms like Raspberry Pi for IoT applications and for low-cost projects.

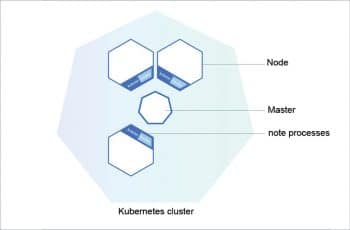

The Kubernetes cluster has multiple components such as:

- Kubernetes Proxy: routes network traffic to load-balanced services (https://kubernetes.io/docs/getting-started-guides/scratch/)

- Kubernetes DNS: a DNS server that provides naming and discovery for the services defined in the cluster

- Kubernetes UI: the GUI used to manage the cluster

Pods in Kubernetes

A pod is a collection of application containers and volumes running in the same execution environment. Pods are the smallest deployable artifacts in a Kubernetes cluster. Every container within a pod runs in its own cgroup but shares a number of Linux namespaces. A pod can be created from the CLI with kubectl run, which also needs the image to run (shown here as a placeholder):

kubectl run kuard --image=<image>

Pod manifests are written in either YAML or JSON. YAML is generally preferred as it is easier for humans to read and edit.

kubectl offers various commands and options to run, list and inspect pods.
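A pod manifest in YAML might look like the sketch below, which only writes the file. The pod name, the port name and the image reference are assumptions for illustration.

```shell
# Write a minimal pod manifest with a single container.
cat > kuard-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: kuard
spec:
  containers:
    - name: kuard
      image: gcr.io/kuar-demo/kuard-amd64:blue   # assumed image reference
      ports:
        - containerPort: 8080
          name: http
          protocol: TCP
EOF

# Against a running cluster, the pod would be created with:
#   kubectl apply -f kuard-pod.yaml
```

Keeping the manifest in a file, rather than passing everything on the command line, means that it can be reviewed and versioned like source code.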
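A few of the more common kubectl commands for pods are sketched below. The pod name kuard is an assumption, and the commands only do something useful against a cluster in which such a pod exists.

```shell
POD="kuard"   # hypothetical pod name

if command -v kubectl >/dev/null 2>&1; then
  kubectl get pods                 # list pods in the current namespace
  kubectl get pod "$POD" -o wide   # one pod, with node and IP columns
  kubectl describe pod "$POD"      # detailed state and recent events
  kubectl logs "$POD"              # output of the container in the pod
  # Remove the pod when it is no longer needed:
  #   kubectl delete pod "$POD"
else
  echo "kubectl not found, skipping the pod commands"
fi
```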

Labels, annotations and service discovery

Labels are key-value pairs that can be attached to Kubernetes objects such as pods and replica sets. They provide identifying metadata for objects, and are used to find, select and group them.

Annotations provide a place to store additional, non-identifying metadata for Kubernetes objects, intended to assist tools and libraries.

Labels and annotations go hand-in-hand; however, annotations are used to provide extra information about where an object came from, how it was created and what its policies are.
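The difference can be sketched with a pod that carries both. In the manifest below, the pod name, the label keys and values, the annotation key example.com/owner and the image reference are all assumptions for illustration.

```shell
# Write a pod manifest with labels (for selection) and an annotation (for extra context).
cat > kuard-labelled.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: kuard-labelled
  labels:
    app: kuard               # used by selectors to group objects
    env: test
  annotations:
    example.com/owner: "team-a"   # hypothetical key; free-form information for tools
spec:
  containers:
    - name: kuard
      image: gcr.io/kuar-demo/kuard-amd64:blue   # assumed image reference
EOF

# Against a running cluster:
#   kubectl apply -f kuard-labelled.yaml
#   kubectl get pods -l app=kuard,env=test     # select by label
#   kubectl get pods --show-labels             # display labels in the listing
```

Only labels can be used in a selector such as -l app=kuard,env=test; annotations are not queryable in this way, which is why they suit longer, descriptive values.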

A comparison between Docker Swarm and Kubernetes

Both Kubernetes and Docker Swarm are popular container orchestration platforms. Docker has also started supporting and shipping Kubernetes in its CE (community edition) and EE (enterprise edition) releases.

Docker Swarm is the native clustering solution for Docker. Originally, it did not provide much by way of container automation, but since Docker Engine 1.12, container orchestration has been built into its core with first-party support.

It takes some effort to get Kubernetes installed and running, as compared to the faster and easier Docker Swarm installation. Both have good scalability and high availability features built into them. Hence, one has to choose the right one based on the need of the hour. For more details, do refer to https://www.upcloud.com/blog/docker-swarm-vs-kubernetes/.