Social media networks like Facebook have a large user base and an even larger accumulation of data, both visual and otherwise. Face recognition is an important feature of such sites, and has been made possible by deep learning. Let’s find out how.

Deep learning is a type of machine learning in which a model learns to perform classification tasks directly from images, text or sound. Deep learning is usually implemented using a neural network architecture. The term “deep” refers to the number of layers in the network: the more layers, the deeper the network. Traditional neural networks typically contain only two or three layers, while deep networks can have hundreds.

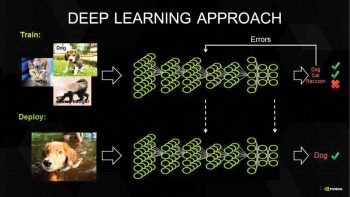

Figure 1 shows the system being trained to classify three animals: a dog, a cat and a honey badger.

A few applications of deep learning

Here are just a few examples of deep learning at work:

- A self-driving vehicle slows down as it approaches a pedestrian crossing.

- An ATM rejects a counterfeit bank note.

- A smartphone app gives an instant translation of a street sign in a foreign language.

Deep learning is especially well-suited to identification applications such as face recognition, text translation, voice recognition, and advanced driver assistance systems, including lane classification and traffic sign recognition.

The learning process of deep neural networks

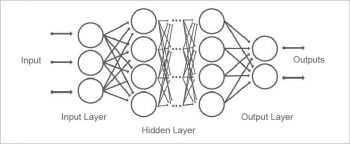

A deep neural network combines multiple non-linear processing layers built from simple elements that operate in parallel. Inspired by the biological nervous system, it consists of an input layer, several hidden layers, and an output layer. Each layer is made up of nodes, or neurons, and each hidden layer takes the output of the previous layer as its input.
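To make the layer-by-layer flow concrete, here is a minimal MATLAB sketch of a forward pass through a tiny fully connected network with two hidden layers. The layer sizes, the input vector and the random (untrained) weights are assumptions chosen purely for illustration. Each matrix multiplication computes every neuron of a layer at once, which mirrors the idea of simple elements operating in parallel.

```matlab
% Minimal forward pass through a small fully connected network.
% Layer sizes and weights are illustrative only: the weights are random, not trained.
relu = @(z) max(z, 0);                  % element-wise non-linearity used in hidden layers
layerSizes = [4, 8, 8, 3];              % input, hidden layer 1, hidden layer 2, output
x = [0.5; -1.2; 3.0; 0.7];              % hypothetical input with 4 features

a = x;                                  % activations of the input layer
numLayers = numel(layerSizes);
for k = 1:numLayers - 1
    W = 0.5 * randn(layerSizes(k+1), layerSizes(k));  % weights from layer k to layer k+1
    b = zeros(layerSizes(k+1), 1);                      % one bias per neuron
    z = W * a + b;                      % this layer uses the previous layer's output as input
    if k < numLayers - 1
        a = relu(z);                    % hidden layers apply the non-linearity
    else
        e = exp(z - max(z));            % output layer: softmax, shifted for numerical stability
        a = e / sum(e);
    end
end
disp(a.')                               % three class scores that sum to 1
```

Training, which this sketch leaves out, consists of adjusting `W` and `b` in every layer so that the output scores match the labels.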

How a deep neural network learns

Suppose we have a set of images, each containing an object from one of four categories, and we want the deep learning network to recognise automatically which object is in each image. We label the images so that the network has training data to learn from.
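Internally, a classification network compares its output scores against numeric targets rather than text labels. The sketch below, which assumes made-up category names for six images, converts text labels into a one-hot matrix: one column per image, with a 1 in the row of that image's category. MATLAB's training functions accept category labels directly, so you would not normally write this yourself; it only shows what the labels become. It relies on implicit expansion, available from R2016b.

```matlab
% Hypothetical labels for six training images, each showing one of four object categories
labels = {'car', 'dog', 'flower', 'car', 'cup', 'dog'};

[classNames, ~, idx] = unique(labels);       % sorted category names and a class index per image
idx = idx(:).';                              % force a row vector: one index per image
Y = double((1:numel(classNames)).' == idx);  % 4 x 6 one-hot targets (implicit expansion)

disp(classNames)                             % car, cup, dog, flower
disp(Y)
```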

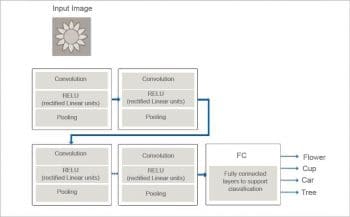

Using this training data, the network can begin to learn the specific features of each object and associate them with the corresponding category. Each layer in the network takes in data from the previous layer, transforms it and passes it on, so what the network learns becomes more complex and detailed from layer to layer. Notice that the network learns directly from the data: we have no direct control over which features are learned.
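The idea of learning directly from the data can be illustrated with the smallest possible case: a single softmax layer (a deliberate simplification, not a deep network) trained by gradient descent on made-up 2-D points from two classes. Nothing in the code says which feature matters; the weights start at zero and are adjusted only from the labelled examples. The data, learning rate and number of iterations are assumptions for illustration, and the code needs R2016b or later for implicit expansion.

```matlab
% Toy training data: 2-D points from two classes, placed symmetrically about the origin
t = linspace(0, 1, 50);
X = [-1 - t, 1 + t;                        % feature 1
     -1 - t, 1 + t];                       % feature 2 (X is 2 x 100)
y = [ones(1, 50), 2 * ones(1, 50)];        % class labels: 1 or 2
Y = double((1:2).' == y);                  % one-hot targets, 2 x 100
n = size(X, 2);

W = zeros(2, 2);                           % start with no knowledge at all
b = zeros(2, 1);
lr = 0.5;                                  % learning rate
for epoch = 1:100
    Z = W * X + b;                         % class scores for every sample
    E = exp(Z - max(Z, [], 1));            % softmax, shifted for numerical stability
    P = E ./ sum(E, 1);                    % predicted class probabilities
    G = (P - Y) / n;                       % gradient of mean cross-entropy w.r.t. Z
    W = W - lr * G * X.';                  % gradient-descent update of the weights
    b = b - lr * sum(G, 2);                % ... and of the biases
end

[~, predicted] = max(W * X + b, [], 1);    % most probable class for each point
accuracy = mean(predicted == y);
fprintf('Training accuracy: %.2f\n', accuracy);
```

A deep network is trained on the same principle, except that the gradient is propagated back through many layers and the inputs are images rather than two numbers.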

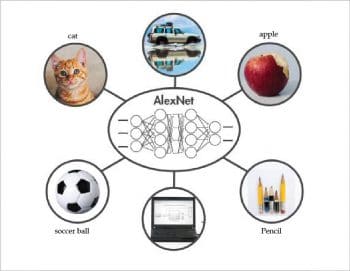

Implementation: An example using AlexNet

If you’re new to deep learning, a quick and easy way to get started is to use an existing network such as AlexNet, a convolutional neural network (CNN) trained on more than a million images. AlexNet is commonly used for image classification: it can classify images into 1,000 categories, including keyboards, computer mice, pencils and other office equipment, as well as various breeds of dogs, cats, horses and other animals.
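The “convolutional” in CNN refers to layers that slide small filters across the image. The sketch below shows one such step on a hypothetical 6 x 6 image, followed by a ReLU and 2 x 2 max pooling, which are operations of the kind AlexNet stacks many times. The filter is a hand-picked vertical-edge detector, an assumption for illustration; in AlexNet the filter values are learned during training and each layer has many filters.

```matlab
% Hypothetical 6x6 single-channel "image": dark left half, bright right half
img = [zeros(6, 3), ones(6, 3)];

% A 3x3 vertical-edge filter. It is fixed here for illustration; in a CNN such as
% AlexNet the filter values are learned during training.
kernel = [-1 0 1;
          -1 0 1;
          -1 0 1];

featureMap = filter2(kernel, img, 'valid');   % slide the filter over the image (correlation): 4x4
featureMap = max(featureMap, 0);              % ReLU non-linearity

% 2x2 max pooling with stride 2 keeps the strongest response in each block: 2x2
pooled = zeros(2, 2);
for r = 1:2
    for c = 1:2
        block = featureMap(2*r-1:2*r, 2*c-1:2*c);
        pooled(r, c) = max(block(:));
    end
end
disp(pooled)
```

Because the image changes from dark to bright between columns 3 and 4, the filter responds only around that boundary, and pooling keeps the strongest response from each 2 x 2 block.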

You will require the following software:

1. MATLAB R2016b or later

2. Neural Network Toolbox

3. The support package for using webcams in MATLAB (https://in.mathworks.com/matlabcentral/fileexchange/45182-matlab-support-package-for-usb-webcams)

4. The support package for using AlexNet (https://in.mathworks.com/matlabcentral/fileexchange/59133-neural-network-toolbox-tm--model-for-alexnet-network)

With the software installed, connect to the webcam, load AlexNet and capture a live image.

Step 1: First, give the following commands:

```matlab
camera = webcam;            % Connect to the camera
nnet = alexnet;             % Load the neural net
picture = camera.snapshot;  % Take a picture
```

Step 2: Next, resize the image to 227 x 227 pixels, the size required by AlexNet:

```matlab
picture = imresize(picture, [227, 227]);  % Resize the picture
```
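Resizing a non-square webcam frame straight to 227 x 227 stretches the scene. One optional refinement, not part of the original steps, is to crop the centre square first and make sure the image has three colour channels, since AlexNet expects 227 x 227 x 3 input. The first line creates a random stand-in frame (an assumption) so the snippet runs on its own; in the real script you would apply the remaining lines to the captured `picture` in place of the single `imresize` call above.

```matlab
% Stand-in for a camera frame (hypothetical): a 480x640 grayscale image
picture = uint8(randi([0 255], 480, 640));

% Centre-crop to a square so that resizing does not stretch the scene
[h, w, ~] = size(picture);
side = min(h, w);
top  = floor((h - side) / 2) + 1;
left = floor((w - side) / 2) + 1;
picture = picture(top:top + side - 1, left:left + side - 1, :);

% AlexNet expects three colour channels; replicate a grayscale channel if needed
if size(picture, 3) == 1
    picture = repmat(picture, [1 1 3]);
end

picture = imresize(picture, [227 227]);   % final 227 x 227 x 3 input
```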

Step 3: AlexNet can now classify our image:

```matlab
label = classify(nnet, picture);  % Classify the picture
image(picture);                   % Show the picture
title(char(label));               % Show the label
```
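`classify` can also return a second output holding a score for every class, for example `[label, scores] = classify(nnet, picture);`. Sorting those scores gives the network's top few guesses rather than a single label. The sketch below uses five hypothetical class names and scores so it runs without a camera or the toolbox; with AlexNet, `scores` has 1000 entries and the class names come from the final layer (`nnet.Layers(end).ClassNames` in releases around R2016b, `Classes` in later ones; check the documentation for your release).

```matlab
% Hypothetical values standing in for real AlexNet output: in practice the scores
% would come from [label, scores] = classify(nnet, picture); (1 x 1000)
classNames = {'keyboard', 'mouse', 'pencil', 'tabby', 'beagle'};
scores = [0.05 0.62 0.03 0.20 0.10];

k = 3;                                         % number of guesses to report
[sortedScores, order] = sort(scores, 'descend');
for i = 1:k
    fprintf('%-10s %5.1f%%\n', classNames{order(i)}, 100 * sortedScores(i));
end
```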

Step 4: Give the following command to get the output:

Output: