Container management and automation tools are a necessity today, since IT organisations need to manage and monitor distributed and cloud-native applications. Let’s take a look at some of the best open source tools available today for containerisation.

LXC (Linux Containers), the building block that sparked the development of containerisation technologies, appeared in 2008, the same year that cgroups were merged into the Linux kernel. LXC combined two kernel features to implement lightweight process isolation: cgroups, which limit and account for the resources a group of processes can use, and namespaces, which separate groups of processes so that they cannot “see” each other.

Docker has since evolved into a powerful way of simplifying the tooling required to create and manage containers. Early versions of Docker used LXC as their default execution driver, until Docker switched to its own libcontainer library as the default in version 0.9. With Docker, containers have become accessible to novice developers and systems administrators, as it uses simple processes and standard interfaces.

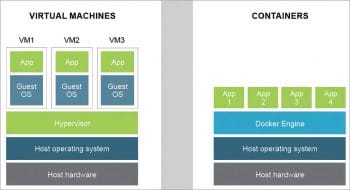

Containerisation is regarded as a lightweight alternative to full machine virtualisation: it involves encapsulating an application in a container with its own operating environment. This provides many of the benefits of running an application in a virtual machine, since the application can run on any suitable physical machine without worrying about dependencies.

Docker made containerisation popular. Docker containers are designed to run on everything from physical and virtual machines to OpenStack cloud clusters and all sorts of servers.

The following points highlight the unique advantages of containers.

- Host system abstraction: Containers are standardised, which means that they connect to the host machine, and to anything outside the container, through standard interfaces. Containerised applications therefore need to know little about the host machine’s resources and configuration.

- Scalability: The abstraction between the host system and containers makes for a clean application design and an environment that is easy to operate and scale. Combining service-oriented design with containerised applications provides high scalability.

- Easy dependency management: Containers give developers a powerful edge, enabling them to package an application, or all of its components, together with their dependencies as one unit. The host system does not need to provide the dependencies required to run the application: as long as the host can run Docker, everything can run in Docker containers.

- Lightweight and isolated execution environments: Though containers do not provide isolation and resource management as strong as full virtualisation, they have a clear edge as lightweight execution environments. Containers are isolated at the process level and share the host machine’s kernel, which means that a container doesn’t include a complete operating system, leading to faster start-up times.

- Layering: Container images are built from layers, each of which records one set of changes, such as installing a package or adding application code. Because images can share common layers, such as a base operating system layer, they take up minimal disk space.
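To make the layering idea concrete, here is a minimal Python sketch. It assumes a simplified model in which every layer is identified by the SHA-256 hash of its contents and a local store keeps each distinct layer only once; LayerStore and its methods are hypothetical names, and real image formats carry far more metadata than this.

```python
import hashlib


class LayerStore:
    """Toy content-addressed store: each distinct layer is kept only once."""

    def __init__(self) -> None:
        self.layers: dict[str, bytes] = {}      # digest -> layer contents
        self.images: dict[str, list[str]] = {}  # image name -> ordered digests

    def add_image(self, name: str, layers: list[bytes]) -> None:
        digests = []
        for content in layers:
            digest = hashlib.sha256(content).hexdigest()
            # A layer that is already present (e.g. a shared base) is not stored again.
            self.layers.setdefault(digest, content)
            digests.append(digest)
        self.images[name] = digests

    def disk_usage(self) -> int:
        """Bytes actually stored, counting each shared layer once."""
        return sum(len(content) for content in self.layers.values())


base = b"base OS files" * 1000  # the shared base layer
store = LayerStore()
store.add_image("web", [base, b"web server binary"])
store.add_image("worker", [base, b"worker script"])
```

Because the web and worker images start from the same base layer, the store holds that layer once, so disk usage is one base layer plus two small application layers rather than two full copies.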

With the general adoption of cloud computing platforms, integrating and monitoring container based technologies has become essential.

Containers can be seen as the future of virtualisation, and strong adoption of containers is already visible in cloud and Web servers. So it is very important for systems administrators to be aware of the various open source container management tools. Let’s take a look at what I consider to be the best tools in this domain.

Apache Aurora

Apache Aurora is a service scheduler that runs on top of Apache Mesos, enabling users to run long-running services and to take full advantage of Mesos’ scalability, fault tolerance and resource isolation. Aurora runs applications and services across a shared pool of host machines and is responsible for keeping them up and running 24×7. When a machine fails, Aurora automatically reschedules the affected tasks onto other healthy machines.
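The rescheduling behaviour can be pictured with a small Python model. This is only a sketch under simplifying assumptions: each host offers a fixed number of task slots, and orphaned tasks go to the healthy host with the most free slots. Host and reschedule_failed are hypothetical names, and Aurora’s real scheduler, which works through Mesos resource offers, is considerably more sophisticated.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    slots: int                      # how many tasks this host can run
    healthy: bool = True
    tasks: list[str] = field(default_factory=list)

    def free(self) -> int:
        return self.slots - len(self.tasks)


def reschedule_failed(hosts: list[Host]) -> list[str]:
    """Move tasks off unhealthy hosts onto healthy hosts with free slots.

    Returns the names of tasks that could not be placed anywhere.
    """
    unplaced = []
    for host in hosts:
        if host.healthy:
            continue
        orphaned, host.tasks = host.tasks, []
        for task in orphaned:
            candidates = [h for h in hosts if h.healthy and h.free() > 0]
            if not candidates:
                unplaced.append(task)
                continue
            # Prefer the healthy host with the most spare capacity.
            target = max(candidates, key=Host.free)
            target.tasks.append(task)
    return unplaced


hosts = [
    Host("a", slots=2, tasks=["web-0"]),
    Host("b", slots=2, tasks=["web-1", "web-2"]),
    Host("c", slots=3),
]
hosts[1].healthy = False  # simulate a machine failure
leftover = reschedule_failed(hosts)
```

Both tasks from the failed host b end up on host c, which had the most spare slots, and nothing is left unplaced.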

Components

- Scheduler: This is the primary interface for the user to work on the cluster. It runs as a Mesos framework and performs various tasks, such as running jobs.

- Client: This is a command line tool (aurora) that exposes primitives, enabling the user to interact with the scheduler. Aurora also provides an admin client (aurora_admin) with commands intended for cluster administrators.

- Executor: This is responsible for carrying out workloads, executing user processes, performing health checks on tasks and registering tasks in ZooKeeper for dynamic service discovery.

- Observer: This provides browser based access to individual tasks running on worker machines and gives detailed insight into the processes being executed. It also lets the user browse task sandbox directories.

- ZooKeeper: This is used for service discovery.

- Mesos Master: This tracks all worker machines and ensures resource accountability. It acts as the central node that controls the entire cluster.

- Mesos Agent: This receives tasks from the scheduler and executes them. It interfaces with Linux isolation mechanisms such as cgroups and namespaces, as well as Docker, to manage resource consumption.

Features

- Scheduling and deployment of jobs.

- Resource quota and multi-user support.

- Resource isolation, multi-tenancy, service discovery and Cron jobs.

Latest version: 0.18.0

Apache Mesos

Apache Mesos is an open source cluster manager, originally developed at the University of California, Berkeley, that provides efficient resource isolation and sharing across distributed applications or frameworks. Apache Mesos abstracts CPU, memory, storage and other compute resources away from physical or virtual machines, enabling fault-tolerant distributed systems to be built and run effectively.

Apache Mesos is built on the same principles as the Linux kernel, only at a different level of abstraction. The Mesos kernel runs on every machine and provides applications such as Apache Hadoop, Spark, Kafka and Elasticsearch with APIs for resource management and scheduling across entire data centres and cloud environments.

Components

- Master: This enables fine-grained resource sharing across frameworks by making resource offers.

- Scheduler: Registers with the Master to be offered resources.

- Executor: It is launched on agent nodes to run the framework tasks.
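The offer cycle described by these components can be sketched in Python. Everything here is a hypothetical, single-threaded simplification: Offer, Framework and run_offer_cycle are illustrative names rather than the Mesos API, and resources are reduced to CPUs and memory. The point is the division of labour: the Master decides what to offer, while each framework’s scheduler decides what to accept.

```python
from dataclasses import dataclass


@dataclass
class Offer:
    agent: str
    cpus: float
    mem: int  # MB


class Framework:
    """Hypothetical framework scheduler whose tasks all have a fixed size."""

    def __init__(self, name: str, task_cpus: float, task_mem: int, pending: int):
        self.name = name
        self.task_cpus = task_cpus
        self.task_mem = task_mem
        self.pending = pending              # tasks still waiting to launch
        self.launched: list[str] = []       # agent of each launched task

    def resource_offer(self, offer: Offer) -> Offer:
        """Launch as many tasks as fit; return (decline) the unused remainder."""
        cpus, mem = offer.cpus, offer.mem
        while self.pending and cpus >= self.task_cpus and mem >= self.task_mem:
            cpus -= self.task_cpus
            mem -= self.task_mem
            self.pending -= 1
            self.launched.append(offer.agent)
        return Offer(offer.agent, cpus, mem)


def run_offer_cycle(free: dict[str, Offer], frameworks: list[Framework]) -> None:
    """Master side: offer each agent's free resources to frameworks in turn."""
    for agent, offer in free.items():
        for fw in frameworks:
            offer = fw.resource_offer(offer)  # the framework decides what to use
        free[agent] = offer                   # whatever is declined stays free


free = {"agent1": Offer("agent1", 4.0, 8192), "agent2": Offer("agent2", 2.0, 2048)}
spark = Framework("spark", task_cpus=2.0, task_mem=4096, pending=3)
web = Framework("web", task_cpus=1.0, task_mem=512, pending=2)
run_offer_cycle(free, [spark, web])
```

The hypothetical spark framework takes both task-sized slices of agent1 but declines agent2, whose memory is too small for its tasks; the smaller web tasks then use agent2, and the leftover 1024MB stays free for a later cycle. Leaving the accept or decline decision to each framework is what makes the scheduling model decentralised.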

Features

- Isolation of computing resources like the CPU, memory and I/O in the entire cluster.

- Scales linearly to thousands of nodes.

- Is fault-tolerant and provides high availability.

- Has the capability to share resources across multiple frameworks and implement a decentralised scheduling model.
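How resources are shared fairly across frameworks is decided by the Mesos allocator, whose default policy is based on Dominant Resource Fairness (DRF): a framework’s dominant share is the largest fraction of any single resource it holds, and the next allocation goes to the framework with the lowest dominant share. The Python sketch below applies that rule by progressive filling to the textbook two-framework example; it illustrates the DRF idea rather than reproducing the code Mesos runs, and drf_allocate is a hypothetical name.

```python
from fractions import Fraction


def drf_allocate(capacity: dict[str, int], demands: dict[str, dict[str, int]]) -> dict[str, int]:
    """Progressive-filling DRF: repeatedly launch one task for the framework
    with the lowest dominant share, until no framework's next task fits.

    Assumes every framework's per-task demand is non-zero for some resource;
    otherwise the loop would never terminate.
    """
    used = {r: 0 for r in capacity}
    tasks = {fw: 0 for fw in demands}

    def dominant_share(fw: str) -> Fraction:
        return max(Fraction(tasks[fw] * demands[fw][r], capacity[r]) for r in capacity)

    def fits(fw: str) -> bool:
        return all(used[r] + demands[fw][r] <= capacity[r] for r in capacity)

    while True:
        candidates = [fw for fw in demands if fits(fw)]
        if not candidates:
            return tasks
        fw = min(candidates, key=dominant_share)  # ties go to the first listed
        for r in capacity:
            used[r] += demands[fw][r]
        tasks[fw] += 1


alloc = drf_allocate(
    capacity={"cpus": 9, "mem_gb": 18},
    demands={"A": {"cpus": 1, "mem_gb": 4}, "B": {"cpus": 3, "mem_gb": 1}},
)
# {'A': 3, 'B': 2}: both end with a dominant share of 2/3
```

Framework A is memory-heavy and B is CPU-heavy, so DRF equalises A’s memory share (12 of 18 GB) with B’s CPU share (6 of 9 CPUs) instead of giving both the same number of tasks.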

Latest version: 1.3.0

Docker Engine

Docker Engine creates and runs Docker containers. A Docker container is a live instance of a Docker image, which is a read-only template, built from layers, that packages a specific service or program along with everything it needs to run. Docker is an effective platform for developers and administrators to develop, share and run applications, and to ship, test and deploy code as fast as possible.

Docker Engine is Docker’s open source containerisation technology combined with a workflow for building and containerising applications. Docker containers can run on desktops, servers and virtual machines, and in data centres and the cloud.
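The idea of a container as a live instance of an image can be sketched as a copy-on-write filesystem: the image supplies read-only layers and each container adds a thin writable layer on top. The Python sketch below models this with collections.ChainMap, whose writes always go to its first mapping; Container, read and write are hypothetical names, and Docker’s storage drivers work on real files rather than dictionaries.

```python
from collections import ChainMap


class Container:
    """Toy container: read-only image layers plus one private writable layer."""

    def __init__(self, image_layers: list[dict[str, str]]):
        self.writable: dict[str, str] = {}
        # Lookups search the writable layer first, then image layers top-down
        # (image_layers is ordered bottom layer first, so it is reversed).
        self.fs = ChainMap(self.writable, *reversed(image_layers))

    def read(self, path: str) -> str:
        return self.fs[path]

    def write(self, path: str, data: str) -> None:
        # ChainMap writes only touch the first mapping (the writable layer),
        # so the shared image layers are never modified.
        self.fs[path] = data


base = {"/etc/os-release": "debian", "/app/config": "default"}
app = {"/app/main.py": "print('hi')"}
image = [base, app]  # bottom layer first
c1, c2 = Container(image), Container(image)
c1.write("/app/config", "tuned")
```

Both containers start from the same image, but the write made in c1 lands only in its own writable layer, so c2 and the shared image layers still see the original file.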

Features

- Faster delivery of applications, as new containers are very easy to build. This enables rapid iteration, so the effect of changes can be seen quickly.

- Easy to deploy and highly scalable, as Docker containers run on almost any platform, enabling users to move applications around, from testing servers to production environments.

- Docker containers don’t require a hypervisor, so more containers can be packed onto each host. This gives greater value from every server and reduces costs.

- Overall, Docker speeds up work, as changes can be shipped as small, incremental updates rather than huge ones.

Latest version: 17.06

Docker Swarm

Docker Swarm is an open source native clustering tool for Docker. It converts a pool of Docker hosts into a single, virtual Docker host. As Docker Swarm serves the standard Docker API, any tool communicating with the Docker daemon can use Swarm to scale to multiple hosts.
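To clients, the pool looks like a single Docker host, so the Swarm manager has to decide which real host receives each new container. The original standalone Swarm offered placement strategies such as ‘spread’, which favours the node running the fewest containers. Below is a minimal Python sketch of a spread-style placement, assuming load is measured only by container count; spread_place is a hypothetical function, not Swarm’s scheduler.

```python
def spread_place(hosts: dict[str, int], containers: list[str]) -> dict[str, str]:
    """Assign each container to the host currently running the fewest containers.

    `hosts` maps host name -> number of running containers and is updated
    in place as containers are placed. Ties go to the first host listed.
    """
    placement = {}
    for name in containers:
        target = min(hosts, key=hosts.get)
        hosts[target] += 1
        placement[name] = target
    return placement


cluster = {"node1": 2, "node2": 0, "node3": 1}
placement = spread_place(cluster, ["web-1", "web-2", "web-3"])
```

The client simply asks for three containers; the placement step evens out the load so that every node ends up running two.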

The following tools support Docker Swarm:

- Dokku

- Docker Compose

- Docker Machine

- Jenkins

Like other Docker projects, Swarm follows the ‘swap, plug and play’ principle. This means that users can swap the scheduling backend that Docker Swarm uses out-of-the-box for any other one that they prefer.

Features

- Integrated cluster management via Docker Engine.

- Decentralised design: Users can deploy both kinds of nodes, managers and workers, via Docker Engine.

- Declarative service model: Users define the desired state of the various services in the application stack.

- Scaling: For each service, users declare the number of tasks to run; when this number changes, the swarm manager automatically adds or removes tasks to maintain the desired state of the application.

- Multi-host networking, service discovery and load balancing.

- Docker Swarm uses TLS mutual authentication and encryption to secure communications between the nodes.

- It supports rolling updates.
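The declarative service model and automatic scaling both rest on a reconciliation loop: the manager compares the declared state with what is actually running and starts or stops tasks to close the gap. Here is a minimal Python sketch of one such pass; reconcile and the task-ID format are hypothetical, and a real swarm manager also has to pick nodes, track task health and replicate its state among managers.

```python
import itertools

# Hypothetical task-ID generator; real swarm task IDs look nothing like this.
_task_ids = itertools.count(1)


def reconcile(desired: dict[str, int], running: dict[str, list[str]]) -> list[str]:
    """One pass of a desired-state loop.

    `desired` maps service -> replica count; `running` maps service -> task
    IDs and is updated in place. Returns a log of the actions taken.
    """
    actions = []
    for service, replicas in desired.items():
        tasks = running.setdefault(service, [])
        while len(tasks) < replicas:  # too few: start new tasks
            task = f"{service}.{next(_task_ids)}"
            tasks.append(task)
            actions.append(f"start {task}")
        while len(tasks) > replicas:  # too many: stop the surplus
            actions.append(f"stop {tasks.pop()}")
    for service in list(running):  # services no longer declared at all
        if service not in desired:
            actions.extend(f"stop {task}" for task in running.pop(service))
    return actions


# "web" should have 3 replicas but a node failure left only 2; "cache" was
# scaled down to 1; "old" has been removed from the declaration entirely.
running = {"web": ["web.a", "web.b"], "cache": ["cache.a", "cache.b"], "old": ["old.a"]}
desired = {"web": 3, "cache": 1}
log = reconcile(desired, running)
```

Running reconcile a second time on the same inputs returns an empty list, because the actual state now matches the declared one; that property is what allows a manager to run the loop continuously.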

Version: Swarm mode is built into Docker Engine from v1.12.0 onwards

Kontena

Kontena is an open source project for organising and running containerised workloads on a cluster. A Kontena cluster is composed of a number of nodes (virtual machines) and a Master that monitors the nodes.

Applications are built from Kontena services, each of which describes the container image, networking, scaling and other attributes of part of the application. Services are highly dynamic and can be combined to create any sort of architecture. Every service is assigned a DNS address, which can be used for inter-service communication.
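Name-based discovery of this kind can be pictured as a registry that maps each service name to the addresses of its running instances. In the Python sketch below, the name format service.grid.kontena.local is an assumption made for illustration, as are the ServiceRegistry class and the round-robin choice of instance; it is not Kontena’s DNS implementation.

```python
import itertools


class ServiceRegistry:
    """Toy DNS-style registry: each service name resolves to its instances."""

    def __init__(self, grid: str):
        self.grid = grid
        self._instances: dict[str, list[str]] = {}
        self._cursors: dict[str, itertools.cycle] = {}

    def register(self, service: str, ips: list[str]) -> str:
        name = f"{service}.{self.grid}.kontena.local"  # assumed naming scheme
        self._instances[name] = list(ips)
        self._cursors[name] = itertools.cycle(self._instances[name])
        return name

    def resolve(self, name: str) -> str:
        """Return the next instance address in round-robin order."""
        return next(self._cursors[name])


registry = ServiceRegistry("demo")
redis = registry.register("redis", ["10.81.0.2", "10.81.0.3"])
first, second, third = (registry.resolve(redis) for _ in range(3))
```

Other services only need to know the name, not the addresses, so instances can be added, moved or replaced without reconfiguring the services that call them.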

Features

- In-built private Docker image registry.

- Built-in load balancing service for distributing traffic across services.

- Access control and roles for secure communication.

- Intelligent scheduler with affinity filtering.
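Affinity filtering narrows down the nodes a service may be scheduled on before any other choice is made. The Python sketch below assumes a hypothetical rule syntax of key==value and key!=value matched against node labels; matches and filter_nodes are illustrative names, and Kontena’s actual affinity syntax and scheduler may differ.

```python
def matches(node: dict[str, str], rule: str) -> bool:
    """Evaluate one hypothetical affinity rule such as 'region==eu' or 'disk!=hdd'.

    A rule containing neither '==' nor '!=' raises ValueError.
    """
    if "!=" in rule:
        key, value = rule.split("!=", 1)
        return node.get(key) != value
    key, value = rule.split("==", 1)
    return node.get(key) == value


def filter_nodes(nodes: list[dict[str, str]], rules: list[str]) -> list[str]:
    """Keep only the nodes that satisfy every rule; return their names."""
    return [n["name"] for n in nodes if all(matches(n, r) for r in rules)]


nodes = [
    {"name": "node-1", "region": "eu", "disk": "ssd"},
    {"name": "node-2", "region": "eu", "disk": "hdd"},
    {"name": "node-3", "region": "us", "disk": "ssd"},
]
eligible = filter_nodes(nodes, ["region==eu", "disk!=hdd"])
```

Only node-1 satisfies both rules here; a scheduler would then choose among the surviving nodes, for example by current load.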

Latest version: 1.3.3

oVirt

oVirt is an open source virtualisation platform, developed by Red Hat, primarily for the centralised management of virtual machines as well as compute, storage and networking resources, via an easy-to-use Web based GUI that is accessible from any platform. oVirt is built on the Kernel-based Virtual Machine (KVM) hypervisor and is written in Java, with its Web interface built using the Google Web Toolkit (GWT).

Components

- oVirt Node: A highly scalable, image based, small-footprint host that runs the KVM hypervisor. Its host agent, VDSM, is written in Python.

- oVirt Engine: A centralised virtualisation management engine with a professional GUI for administering a cluster of nodes.

Features

- High availability and load balancing.

- Live migration and Web based administration.

- State-of-the-art security via SELinux, plus access controls for virtual machines and the hypervisor.

- Powerful integration with varied open source projects like OpenStack Glance, Neutron and Katello for provisioning and overall administration.

- Highly scalable and self-hosted engine.

Latest version: 4.1.2

Weaveworks

Weaveworks offers a set of tools for clustering, viewing and deploying microservices and cloud-native applications across intranets and the Internet.

Tools

- Weave Scope: Provides a visual monitoring interface for applications running across containers.

- Weave Cortex: An open source, Prometheus-as-a-Service system for monitoring Kubernetes based clusters.

- Weave Flux: Facilitates the deployment of containerised applications to Kubernetes clusters.

- Weave Cloud: Combines various open source projects to be delivered as Software-as-a-Service.

Features

- A powerful GUI for viewing processes, containers and hosts, and for performing all sorts of operations on them and on microservices.

- Real-time monitoring of containers with a single click on a node.

- Easy integration, with no coding required.

Wercker

Wercker is an open source automation platform for creating and deploying containers for multi-tiered, cloud-native applications. It can build containers automatically and deploy them to public and private Docker registries. Wercker provides a CLI that lets developers create Docker containers, build and deploy them, and run them on various cloud platforms, ranging from Heroku to AWS and Rackspace.

It is tightly integrated with Docker, and its containers include the application code, which makes it easy to move applications between servers. It works on the concept of pipelines, which are automated workflows. Its API provides programmatic access to information on applications, builds and deployments.
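A pipeline is essentially an ordered list of steps that stops at the first failure. The Python sketch below models that behaviour with plain functions sharing a context dictionary; run_pipeline and the build, unit_tests and deploy steps are hypothetical, and this is not Wercker’s configuration format or API.

```python
from typing import Callable

# A step is any function that reads or updates the shared context;
# raising an exception signals that the step failed.
Step = Callable[[dict], None]


def run_pipeline(steps: list[tuple[str, Step]], ctx: dict) -> list[str]:
    """Run named steps in order, stopping at the first failure.

    Returns one status line per step that was attempted.
    """
    report = []
    for name, step in steps:
        try:
            step(ctx)
        except Exception as exc:  # any exception marks the step as failed
            report.append(f"{name}: failed ({exc})")
            break
        report.append(f"{name}: passed")
    return report


# Hypothetical steps for illustration.
def build(ctx: dict) -> None:
    ctx["artifact"] = "app.tar"


def unit_tests(ctx: dict) -> None:
    if ctx.get("break_tests"):
        raise RuntimeError("2 tests failed")


def deploy(ctx: dict) -> None:
    ctx["deployed"] = ctx["artifact"]


steps = [("build", build), ("test", unit_tests), ("deploy", deploy)]
ok = run_pipeline(steps, {})
broken_ctx = {"break_tests": True}
broken = run_pipeline(steps, broken_ctx)
```

When the test step fails, the deploy step never runs, which is the main safety property a build-test-deploy pipeline provides.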

Features

- Tight integration with GitHub and Bitbucket.

- Automates builds, tests and deployments via pipelines and workflows.

- Runs tests in parallel, reducing wait times.

- Works with private containers and container registries.
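The time saved by running tests in parallel is easy to demonstrate. In the Python sketch below, the tests are hypothetical stand-ins that just sleep, which is why a thread pool is enough; real CPU-bound suites would more likely be split across processes or separate containers, as a CI service does. run_tests and fake_test are illustrative names.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_test(name: str, seconds: float) -> tuple[str, bool]:
    """Hypothetical stand-in for a slow test: sleeps, then reports a pass."""
    time.sleep(seconds)
    return name, True


def run_tests(tests: dict[str, float], workers: int) -> tuple[dict[str, bool], float]:
    """Run all tests on a pool of `workers` threads; return results and elapsed time."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = dict(pool.map(lambda item: fake_test(*item), tests.items()))
    return results, time.perf_counter() - start


suite = {"test_api": 0.1, "test_db": 0.1, "test_ui": 0.1, "test_auth": 0.1}
serial_results, serial_time = run_tests(suite, workers=1)
parallel_results, parallel_time = run_tests(suite, workers=4)
```

With four workers, the four tests finish in roughly the time of one, whereas a single worker takes roughly the sum of all four.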