This is a discussion on the role of Docker in software development and how it scores over virtual machines. As it becomes increasingly popular, let’s look at what the future holds for Docker.

We all know that Docker is simple to get up and running on our local machines. But seamlessly transitioning our honed application stacks from development to production is problematic.

Docker Cloud makes it easy to provision nodes from existing cloud providers. If you already have an account with an Infrastructure-as-a-Service (IaaS) provider, you can provision new nodes directly from within Docker Cloud, which can play a crucial role in digital transformation.

For many hosting providers, the easiest way to deploy and manage containers is via Docker Machine drivers. Today we have native support for nine major cloud providers:

- Amazon Web Services

- Microsoft Azure

- DigitalOcean

- Exoscale

- Google Compute Engine

- OpenStack

- Rackspace

- IBM SoftLayer

- Packet.net

AWS is the biggest cloud-hosting service on the planet and offers support for Docker across most of its standard EC2 machines. Google’s container hosting and management service is underpinned by Kubernetes, its own open source project that powers many large container-based infrastructures. More are likely to follow soon, and you may be able to use the generic driver for other hosts.
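As a rough sketch of how provisioning through Docker Machine drivers looks in practice, the snippet below only builds the `docker-machine create` command for a given provider; it does not run it. The driver names are the ones the `docker-machine` CLI uses for the providers listed above (Packet is left out because its driver name is not given here), and the node name `demo-node` is a made-up example. Each driver also needs provider-specific credential flags, which are omitted.

```python
# Map the providers named in the article to docker-machine driver names.
# Packet is intentionally omitted; unknown hosts fall back to "generic".
DRIVERS = {
    "Amazon Web Services": "amazonec2",
    "Microsoft Azure": "azure",
    "DigitalOcean": "digitalocean",
    "Exoscale": "exoscale",
    "Google Compute Engine": "google",
    "OpenStack": "openstack",
    "Rackspace": "rackspace",
    "IBM SoftLayer": "softlayer",
}


def create_node_command(provider, node_name):
    """Return the argv list that would provision one node (not executed)."""
    driver = DRIVERS.get(provider, "generic")
    return ["docker-machine", "create", "--driver", driver, node_name]


if __name__ == "__main__":
    # "demo-node" is a hypothetical node name used only for illustration.
    print(" ".join(create_node_command("Amazon Web Services", "demo-node")))
```

Returning the command as a list rather than running it keeps the sketch safe to try on a machine that has no cloud credentials configured.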

Docker Cloud provides a hosted registry service with build and testing facilities for Dockerised application images, tools to help you set up and manage host infrastructure, and application life cycle features to automate deploying (and redeploying) services created from images. It also allows you to publish Dockerised images on the Internet either publicly or privately. Docker Cloud can also store pre-built images, or link to your source code so it can build the code into Docker images, and optionally test the resulting images before pushing them to a repository.

Virtual machines (VM) vs Docker

Some of the companies investing in Docker and containers are Google, Microsoft and IBM. But just because containers are extremely popular, that doesn’t mean virtual machines are out of date. Which of the two is selected depends entirely on the specific needs of the end user.

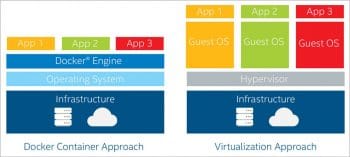

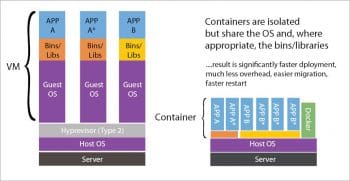

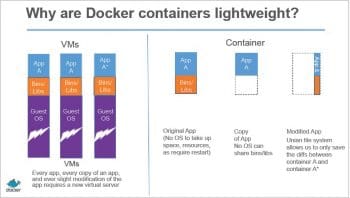

Virtual machines (VMs) run on top of a hypervisor, each with a fully virtualised and totally isolated OS. They take up a lot of system resources and are slow to move around. Each VM runs not just a full copy of an operating system, but a virtual copy of all the hardware that the operating system needs to run. This quickly adds up to a lot of RAM and CPU cycles. Containers, by contrast, let your company pack many more applications onto a single physical server than VMs can. On this measure, container technologies such as Docker beat VMs in the cloud or data centre.

Virtual machines are based on the concept of virtualisation, which is the emulation of computer hardware such as the CPU, RAM and I/O devices. The software that performs this emulation is called a hypervisor. Every VM interacts with the hypervisor through the operating system installed on the VM, which could be a typical desktop or laptop OS. Many products provide virtualised environments, such as Oracle VirtualBox, VMware Player, Parallels Desktop, Hyper-V, Citrix XenClient, etc.

Docker is based on the concept of containerisation. A container runs as an isolated partition on top of a Linux kernel that it shares with the host and with other containers. There is no concept of emulation or a hypervisor in containerisation. Linux namespaces and cgroups enable Docker to run applications inside the container. In contrast to VMs, all that a container requires is enough of an operating system, supporting programs and libraries, and system resources to run a specific program. This means that, practically, you can put two to three times as many applications on a single server with containers as you can with VMs. In addition, with containers you can create a portable, consistent operating environment for development, testing and deployment. That’s a winning triple whammy.
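To make the mention of namespaces and cgroups a little more concrete, here is a minimal sketch that inspects the kernel's view of the current process through `/proc`. It assumes a Linux system; on other platforms the functions simply return empty results. If you run it once on the host and once inside a container, the namespace identifiers should typically differ for the namespaces Docker isolates, while the kernel underneath is the same.

```python
import os


def list_namespaces(pid="self"):
    """Map namespace type -> kernel identifier for a process (Linux only)."""
    ns_dir = f"/proc/{pid}/ns"
    namespaces = {}
    try:
        entries = sorted(os.listdir(ns_dir))
    except OSError:
        return namespaces  # not Linux, or the process does not exist
    for name in entries:
        try:
            # Each entry is a symlink whose target looks like "pid:[...]".
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # entry not readable for this process
    return namespaces


def read_cgroups(pid="self"):
    """Return the cgroup membership lines for a process (Linux only)."""
    try:
        with open(f"/proc/{pid}/cgroup") as handle:
            return [line.strip() for line in handle if line.strip()]
    except OSError:
        return []


if __name__ == "__main__":
    for kind, ident in list_namespaces().items():
        print(kind, ident)
    for line in read_cgroups():
        print(line)
```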

Why Docker instead of VMs?

• Faster delivery of applications.

• Portable and scales more easily.

• Higher density, so more workloads run on the same hardware.

• Faster deployment leads to easier management.

Docker features

• VE (Virtual Environments) originally based on LXC (since version 0.9, Docker uses its own libcontainer runtime by default).

• Portable deployment across machines.

• Versioning: Docker includes Git-like capabilities for tracking versions of a container.

• Component reuse: It allows building or stacking of already created packages. You can create ‘base images’ and then run more machines based on the image.

• Shared images: Docker Hub is a public registry that hosts a large number of ready-made images.

• Docker containers are very lightweight.

Who uses Docker and containers?

Many industries and companies have today shifted their infrastructure to containers or use containers in some other way.

The leading industries using Docker are energy, entertainment, financial, food services, life sciences, e-payments, retail, social networking, telecommunications, travel, healthcare, media, e-commerce, transportation, education and technology.

Some of the companies and organisations using Docker include The New York Times, PayPal, Business Insider, Cornell University, Indiana University, Splunk, The Washington Post, Swisscom, GE, Groupon, Yandex, Uber, Shopify, Spotify, New Relic, Yelp, Quora, eBay, BBC News, and many more. Many other companies are planning to migrate their existing infrastructure to containers.

Integration of different tools

Docker integrates with many of the major tools available in the market today, which allows developers and IT operations teams to collaborate and build more software faster while remaining secure. These integrations span service provider tools, dev tools, official repositories, orchestration tools, systems integration tools, service discovery tools, Big Data, security tools, monitoring and logging tools, configuration management tools and continuous integration tools.

Continuous integration (CI) is another big area for Docker. Traditionally, CI services have used VMs to create the isolation you need to fully test a software app. Docker’s containers let you do this without using a lot of resources, which means your CI and your build pipeline can move more quickly.

Continuous integration and continuous deployment (CD) have become one of the most common use cases among early Docker adopters. CI/CD merges development with testing, allowing developers to build code collaboratively, submit it to the master branch and check for issues. Developers can not only build their code, but also test it in any environment type, as often as possible, to catch bugs early in the application development life cycle. Since Docker can integrate with tools like Jenkins and GitHub, developers can submit code to GitHub, have Jenkins automatically trigger a build and run the tests. Then, once the image is complete, it can be added to Docker registries. This streamlines the process and saves time on build and set-up, while allowing developers to run tests in parallel and automate them, so that they can continue to work on other projects while tests are being run. Since Docker runs in cloud and virtualised environments and supports both Linux and Windows, enterprises no longer have to deal with inconsistencies between different environments, which is perhaps one of the most widely known benefits of the Docker CaaS (Containers as a Service) platform.
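The build, test and push flow described above can be sketched as three `docker` CLI invocations. This is only an illustration of the sequence a CI job might run, not how Jenkins or any particular CI service implements it; the image name `example/app`, the tag and the `pytest` test command are assumptions made up for the example. By default the sketch prints the commands instead of executing them.

```python
import subprocess


def pipeline_commands(image, tag, test_command):
    """Return the docker commands for a build -> test -> push sequence."""
    ref = f"{image}:{tag}"
    return [
        ["docker", "build", "-t", ref, "."],              # build the image
        ["docker", "run", "--rm", ref] + list(test_command),  # run the tests
        ["docker", "push", ref],                          # publish the image
    ]


def run_pipeline(commands, dry_run=True):
    """Print each command; execute it too when dry_run is False."""
    for argv in commands:
        print(" ".join(argv))
        if not dry_run:
            # check=True raises on a non-zero exit, so a failed test run
            # stops the pipeline before the image is pushed.
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    # Hypothetical image name, tag and test command.
    run_pipeline(pipeline_commands("example/app", "1.0", ["pytest"]))
```

Stopping at the first failing step is the important design choice here: an image whose tests fail never reaches the registry.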

Drone.io is a Docker-specific CI service, and most widely used CI and automation tools, including Jenkins, Puppet, Chef, SaltStack, Packer and Ansible, integrate with Docker; so it is easy to incorporate Docker into your process.

Adoption of Docker

Docker is probably the most talked about infrastructure technology of the past few years. A study by Datadog, covering around 10,000 companies and 185 million containers in real-world use, has resulted in what Datadog calls the largest and most accurate data review of Docker adoption. The following highlights from the study give a picture of how Docker is being adopted and used.

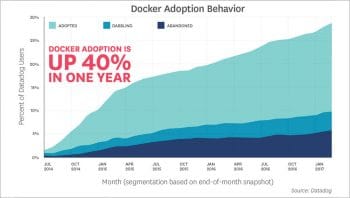

i) Docker adoption has increased 40 per cent in one year

At the beginning of March 2016, 13.6 per cent of Datadog’s customers had adopted Docker. One year later, that number has grown to 18.8 per cent. That’s almost 40 per cent market-share growth in 12 months. Figure 7 shows the growth of Docker adoption and behaviour. Based on this, we can say that companies are adopting Docker very fast and it’s playing a major role in global digital transformation.
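The 'almost 40 per cent' figure is relative growth, not a rise of 40 percentage points. A quick check of the arithmetic, using only the two adoption figures quoted above:

```python
def relative_growth(before, after):
    """Relative change from `before` to `after`, in per cent."""
    return (after - before) / before * 100


points = 18.8 - 13.6                  # absolute change in percentage points
growth = relative_growth(13.6, 18.8)  # change relative to the starting share

print(f"{points:.1f} percentage points, {growth:.1f} per cent relative growth")
# prints "5.2 percentage points, 38.2 per cent relative growth"
```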

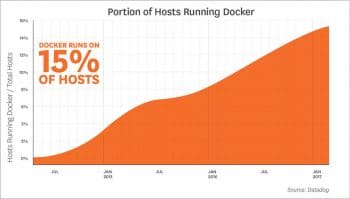

ii) Docker now runs on 15 per cent of the hosts

This is an impressive fact. Two years ago, Docker had about 3 per cent market share, and now it’s running on 15 per cent of the hosts Datadog monitors. The graph in Figure 8 illustrates that the Docker growth rate was somewhat variable early on, but began to stabilise around the fall of 2015. Since then, Docker usage has climbed steadily and nearly linearly, and it now runs on roughly one in every six hosts that Datadog monitors.

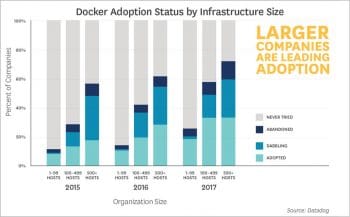

iii) Larger companies are leading adoption

Larger companies tend to be slower to move. But in the case of Docker, larger companies have been leading the way since the first edition of Datadog’s report in 2015. The more hosts a company uses, the more likely it is to have tried Docker. Nearly 60 per cent of organisations running 500 or more hosts are classified as Docker dabblers or adopters.

While previous editions of this report showed organisations with many hosts clearly driving Docker adoption, the latest data shows that organisations with mid-sized host counts (100–499 hosts) have made significant gains. Adoption rates for companies with medium and large host counts are now nearly identical. Docker first gained a foothold in the enterprise world by solving the unique needs of large organisations, but is now being used as a general-purpose platform in companies of all sizes.

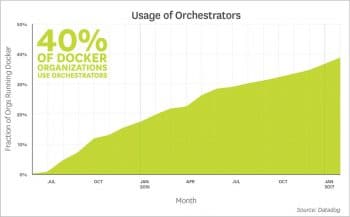

iv) Orchestrators are taking off

As Docker increasingly becomes an integral part of production environments, organisations are seeking out tools to help them effectively manage and orchestrate their containers. As of March 2017, roughly 40 per cent of Datadog customers running Docker were also running Kubernetes, Mesos, Amazon ECS, Google Container Engine, or another orchestrator. Other organisations may be using Docker’s built-in orchestration capabilities, but that functionality did not generate uniquely identifiable metrics that would allow Datadog to reliably measure its use at the time of this report.

Among organisations running Docker and using AWS, Amazon ECS is a popular choice for orchestration, as would be expected — more than 35 per cent of these companies use ECS. But there has also been significant usage of other orchestrators (especially Kubernetes) at companies running AWS infrastructure.

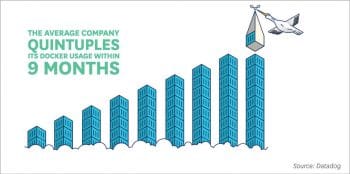

v) Adopters quintuple their container count within nine months

The average number of running containers Docker adopters have in production grows five times between their first and tenth month of usage. This internal-usage growth rate is quite linear, and shows no signs of tapering off after the tenth month. Another indication of the robustness of this trend is that it has remained steady since Datadog’s previous report last year.

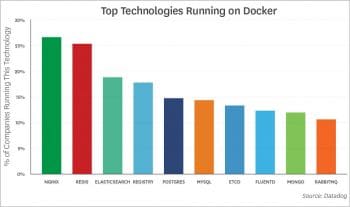

vi) Top technologies/companies running on Docker technology

The most common technologies running in Docker are listed below.

1. NGINX: Docker is being used to contain a lot of HTTP servers, it seems. NGINX has been a perennial contender on this list since Datadog began tracking image use in 2015.

2. Redis: This popular key-value data store is often used as an in-memory database, message queue, or cache.

3. Elasticsearch: Full-text search continues to increase in popularity, cracking the top three for the first time.

4. Registry: Eighteen per cent of companies running Docker are using Registry, an application for storing and distributing other Docker images. Registry has been near the top of the list in each edition of this report.

5. Postgres: The increasingly popular open source relational database edges out MySQL for the first time in this ranking.

6. MySQL: The most widely used open source database in the world continues to find use in Docker infrastructure. Adding the MySQL and Postgres numbers, it appears that using Docker to run relational databases is surprisingly common.

7. etcd: The distributed key-value store is used to provide consistent configuration across a Docker cluster.

8. Fluentd: This open source ‘unified logging layer’ is designed to decouple data sources from backend data stores. This is the first time Fluentd has appeared on the list, displacing Logspout from the top 10.

9. MongoDB: This is a widely-used NoSQL datastore.

10. RabbitMQ: This open source message broker finds plenty of use in Docker environments.

vii) Docker hosts often run seven containers at a time

The median company that adopts Docker runs seven containers simultaneously on each host, up from five containers nine months ago. This finding seems to indicate that Docker is in fact commonly used as a lightweight way to share compute resources; it is not solely valued for providing a knowable, versioned runtime environment. Bolstering this observation, 25 per cent of companies run an average of 14+ containers simultaneously.

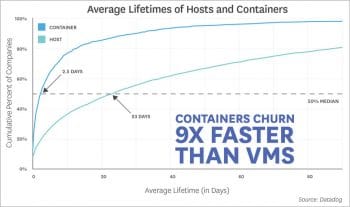

viii) Containers’ churn rate is 9x faster than VMs

At companies that adopt Docker, containers have an average lifespan of 2.5 days, while across all companies, traditional and cloud-based VMs have an average lifespan of 23 days. Container orchestration appears to have a strong effect on container lifetimes, as the automated starting and stopping of containers leads to a higher churn rate. In organisations running Docker with an orchestrator, the typical lifetime of a container is less than one day. At organisations that run Docker without orchestration, the average container exists for 5.5 days.
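The '9x' in the heading follows directly from the two average lifespans quoted above. The same ratio can be worked out for containers run without an orchestrator:

```python
VM_LIFESPAN_DAYS = 23.0  # average VM lifespan quoted in the study


def churn_ratio(container_days, vm_days=VM_LIFESPAN_DAYS):
    """How many times faster containers turn over than VMs."""
    return vm_days / container_days


print(f"All Docker adopters: {churn_ratio(2.5):.1f}x")   # 23 / 2.5
print(f"Without orchestration: {churn_ratio(5.5):.1f}x")  # 23 / 5.5
# prints "All Docker adopters: 9.2x" and "Without orchestration: 4.2x"
```

With an orchestrator the typical container lives less than one day, so the ratio there is above 23x.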

Containers’ short lifetimes and increased density have significant implications for infrastructure monitoring. They represent an order-of-magnitude increase in the number of things that need to be individually monitored. Monitoring solutions that are host-centric, rather than role-centric, quickly become unusable. We thus expect Docker to continue to drive the sea change in monitoring practices that the cloud began several years ago.
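A small sketch of what role-centric monitoring means in practice: rather than tracking each short-lived container by its ID, metrics are grouped by the role the container plays. The sample records, the field names (`container_id`, `role`, `cpu_percent`) and the values are all invented for illustration and do not come from the study or from any monitoring product.

```python
from collections import defaultdict
from statistics import mean


def aggregate_by_role(samples):
    """Group per-container CPU samples by role and summarise each group."""
    by_role = defaultdict(list)
    for sample in samples:
        by_role[sample["role"]].append(sample["cpu_percent"])
    return {
        role: {"containers": len(values), "avg_cpu_percent": mean(values)}
        for role, values in by_role.items()
    }


# Hypothetical samples: container IDs come and go, roles stay stable.
samples = [
    {"container_id": "a1", "role": "web", "cpu_percent": 20.0},
    {"container_id": "b2", "role": "web", "cpu_percent": 40.0},
    {"container_id": "c3", "role": "cache", "cpu_percent": 10.0},
]

if __name__ == "__main__":
    for role, summary in aggregate_by_role(samples).items():
        print(role, summary)
```

When a container is replaced, its ID changes but its role does not, so the aggregated series stays continuous.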
