Containerisation involves encapsulating an application in a container with its own operating environment. This is a short overview of containers and how they are used for application containerisation.

Containers – I am sure all of us have used them in our day-to-day lives to store some things. We usually store something inside a container so that it remains confined within a specified space, is shielded from any external harm or disturbances, and can be kept safe for long. We also use containers when we have to transport anything safely from one place to another. What if we use a container to store or encapsulate an application? Yes, that’s what we use containers for in the IT arena.

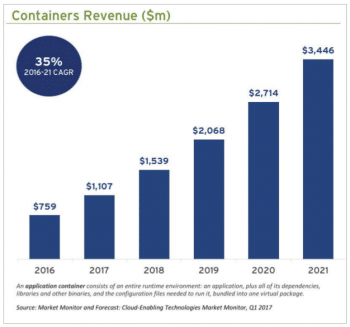

A container holds the entire runtime environment of an application, including all its dependencies, such as the libraries and configuration files needed to run it. All of these are bundled together into a single package. In addition, the container is isolated from the rest of the system, so a process inside it cannot see processes or resources outside the container.

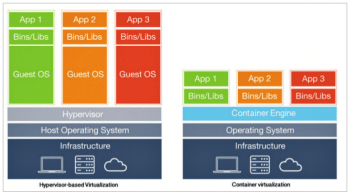

For a long time, hypervisor-based virtualisation was used to emulate hardware, which let us run any OS on top of any other: Linux on Windows, or the other way around. With this technology, the guest OS and the host OS each run their own kernel. Communication between the guest system and the actual hardware goes through the abstraction layer provided by the hypervisor. This approach provides a high level of isolation and security, since all communication between the guest and the host passes through the hypervisor. However, it is slower and incurs a performance overhead because of the hardware emulation. To reduce this overhead, container virtualisation, or containerisation, was introduced; it allows users to run multiple isolated user space instances on the same kernel.

Containerisation helps us run software reliably when it is moved from one computing environment to another. It is especially helpful when moving an application from a developer’s desktop to a certification or test environment, and then into production. Unexpected errors often appear when an application is migrated from one environment to another, even though it worked fine in the earlier one. Such issues are also seen when software is moved from a physical machine in a data centre to a virtual machine in a public or private cloud. If the application is encapsulated in a software container, most of these issues can be avoided.

Software containers are really helpful when the environment or platform on which the software was developed is not identical to the one on which it is deployed. Containers are also used to manage issues caused by differences in network topology or in security and storage policies. In short, containers are designed to solve a range of application management issues. Overall, they take the DevOps culture a step ahead by increasing the portability of an application, and they help to improve storage and CPU efficiency as well.

What is containerisation?

Containerisation is the process of abstracting away the differences between operating system distributions and their underlying infrastructure by encapsulating discrete components of application logic, including the application platform and its dependencies, in lightweight containers. It is a virtualisation method for deploying distributed applications without launching an entire virtual machine for each application. Instead, multiple isolated systems run on a single control host and access a single kernel. The containers hold components such as environment variables, files and libraries, which are necessary to run the desired software. Because resources are shared in this way, containers place less strain on the overall available resources. For instance, if a specific variation from a standard image is desired, one can create a container that holds only the new library.

Why containerisation?

1. Since containerisation incurs far less performance overhead than hypervisor-based virtualisation, many more containers can usually be supported on the same infrastructure.

2. With containerisation, an application container can run on any system or in any cloud that provides a compatible container runtime, without any code changes. There are no guest OS environment variables or library dependencies to manage.

3. Containerisation also helps when an application relies on the behaviour of a specific version of the SSL library and an updated version suddenly gets installed on the host. Because the container carries its own copy of the library, the application keeps running without errors in such cases.

4. It also plays a significant role when there are differences in the network topology, or even in the security or storage policies of the two platforms on which software needs to run. Containerisation makes it possible to run the containerised software on any such platform.

5. A server running three containerised applications runs a single OS, and each container shares the OS kernel with the other containers. The shared parts of the OS are read-only, while each container has its own mount (its own writable area) for writing. This makes containers much more lightweight than virtual machines, and they use fewer resources, which is why they are preferred.

6. A container may be only megabytes in size, whereas a virtual machine with its own OS may be several gigabytes in size. Hence, a single server can host more containers than virtual machines, giving containers another edge over VMs.

7. Containerised applications have almost no boot time, because there is no guest OS to boot; they can typically be started in seconds or less. This means containers can be instantiated just when required, and can also disappear when no longer required, freeing up the resources on their hosts.

8. Containers are designed to run almost anywhere: on physical computers, virtual machines, bare-metal servers, OpenStack cloud clusters, and so on.

9. Containerisation also enables developers to fully ‘own’ the configuration and set-up of their application’s runtime environment.

10. Containerisation leads to greater modularity. Instead of running an entire complex application in a single container, the application can be split into modules such as the front-end, the database and so on. This is called the microservices approach. Applications built this way are easier to manage, as each module is relatively simple. Also, changes can be made to individual modules without the hassle of rebuilding the entire application.

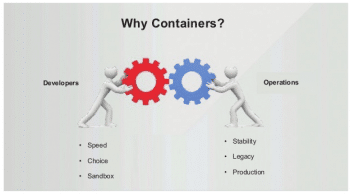

11. Containerisation supports a more unified DevOps culture. Developers handle applications and their frameworks, while the IT operations team is concerned with the OS and the server. Both share the end goal of high-quality software releases, and they need to rely on each other when changes take place during the development cycle and when it is time to scale up. Developers want scalability, whereas operations teams focus on managing applications efficiently.

Containerisation maintains the separation between the two by isolating their concerns, which helps both teams achieve their respective goals in tandem.
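
Point 5 above describes shared, read-only parts of the OS combined with a writable area for each container. A minimal Python sketch of that idea follows, using dictionaries as a stand-in for a file system. The names `shared_base` and `new_container` and the file paths are hypothetical and exist only for illustration; real container runtimes rely on union or copy-on-write file systems, not on dictionaries.

```python
from collections import ChainMap
from types import MappingProxyType

# Hypothetical read-only base shared by every container
# (a stand-in for the shared parts of the OS).
shared_base = MappingProxyType({
    "/etc/os-release": "distro 1.0",
    "/lib/libssl.so": "libssl 1.1",
})

def new_container():
    """Return a view with a private writable layer on top of the shared base."""
    # ChainMap lookups fall through to the base; writes go only to the first mapping.
    return ChainMap({}, shared_base)

web = new_container()
db = new_container()

# The web container pins an older library; the change lands in its own layer.
web["/lib/libssl.so"] = "libssl 1.0"

print(web["/lib/libssl.so"])   # the web container sees its private copy
print(db["/lib/libssl.so"])    # the db container still sees the shared version
print(len(web.maps[0]), len(db.maps[0]))  # private layers hold only what changed
```

Each container stores only what it has changed, which is one way to picture why containers can stay small while still behaving as if they had a full file system of their own.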

Different types of containerisation

Let’s now classify the containers based on how they can be used for different applications.

Operating system containers: Operating system containers are virtual environments that share the kernel of the host operating system but provide user space isolation. We can think of OS containers as lightweight VMs: we can install and configure them, and run applications, libraries, etc, in the same way that we would on any OS. Just as in the case of a VM, anything running inside a container can only see the resources that have been assigned to that container. These containers are useful when we want to run a number of instances of the same or different distros. Containers are often created from templates or images, which determine the structure and contents of the container. Hence, we can create containers with identical environments, using the same package versions and configurations across all of them. Container technologies such as OpenVZ, LXC, Linux-VServer and Solaris Zones are suitable for creating OS containers.

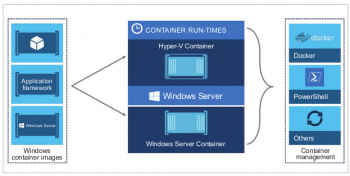

Application containers: OS containers are designed to run multiple services and processes, whereas application containers are designed to package and run a single service. So, although both types share the kernel of the host, there are many differences between them. When an application container is launched, it runs a single process, the one that runs our application, and we create a separate container for each application. This is very different from OS containers, where multiple services run on the same operating system.

Container technologies like Docker and rkt (originally called Rocket) are examples of application containers. Each RUN command we specify in a Dockerfile creates a new layer for the image. In the end, Docker combines these layers and runs our containers. Layering helps Docker to increase reuse and reduce duplication. This is really helpful when we want to create different containers for our components. We can start with a base image that is common to all components and then add layers that are specific to each component. It also helps when we want to roll back our changes, as we can simply switch to the old layers, with very little overhead.
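
The reuse and rollback that layering makes possible can be illustrated with a small Python sketch. It is a simplification under stated assumptions: the `layer_id` and `build` helpers, the `cache` dictionary and the instruction strings are all hypothetical, and deriving a layer ID from the parent ID plus the instruction is only a rough model of how a layered build might recognise work it has already done, not a description of Docker’s internals.

```python
import hashlib

def layer_id(parent_id, instruction):
    """Derive a layer ID from its parent layer and the instruction that created it."""
    return hashlib.sha256(f"{parent_id}|{instruction}".encode()).hexdigest()[:12]

def build(instructions, cache):
    """Build an image as a list of layer IDs, reusing layers already in the cache."""
    image, parent = [], ""
    for instruction in instructions:
        lid = layer_id(parent, instruction)
        cache.setdefault(lid, instruction)  # stored once, however many images use it
        image.append(lid)
        parent = lid
    return image

cache = {}  # hypothetical local layer store
# Illustrative instruction strings only; this is not a real Dockerfile.
base = ["FROM base-os", "RUN install runtime"]
frontend = build(base + ["COPY frontend /app"], cache)
backend = build(base + ["COPY backend /app"], cache)

print(len(cache))                   # 4 layers stored, although the two images list 6
print(frontend[:2] == backend[:2])  # True: both images share the base layers
previous = frontend[:-1]            # rolling back means pointing at the earlier layers
print(previous == backend[:2])      # True: the rolled-back image is just the shared base
```

Because the two component images differ only in their top layer, the store holds four layers instead of six, and rolling back needs no copying at all.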

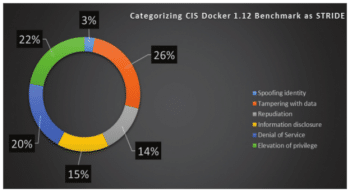

How secure is containerisation?

It is a common belief that containers are less secure than virtual machines, because a vulnerability in the container host kernel could provide a way into the containers that share it. The same is true of a hypervisor, but since a hypervisor provides far less functionality than a Linux kernel (which implements file systems, application process controls, networking, etc), it presents a much smaller attack surface. Containerisation does have one benefit over virtualisation here: with virtualisation, we need to secure the host OS and every guest OS running on top of it, but with containerisation, we need to secure only the one host OS.

For the past few years, a great deal of effort has been devoted to developing software that enhances the security of containers. For instance, Docker (like other such container systems) now includes a signing infrastructure, which allows administrators to sign container images in order to prevent untrusted containers from being deployed. Given below are some of the ways in which containerisation security is being taken care of.

1. A signed and trusted container is not necessarily secure to run, as vulnerabilities may be discovered in the software inside it even after it has been signed. That’s why Docker and other container platforms offer container security scanning solutions, which can notify administrators if any container images have vulnerabilities that could be exploited.

2. Specialised container security software has also been developed to keep a close watch on different security aspects. For instance, Twistlock offers software that profiles a container’s expected behaviour, networking activities (like source and destination IP addresses and ports) and certain storage practices, so that any unexpected or malicious behaviour can be flagged.

3. Another container security company, Polyverse, takes a slightly different approach. It makes use of the fact that containers can be started in a fraction of a second to relaunch containerised applications in a known good state every few seconds, minimising the time a hacker has to exploit an application running in a container.

4. Some container management systems have also created associated encryption and security services, like Docker Secrets, to tackle security concerns associated with containerisation. These help to better manage the way distributed applications access and transmit data. Kernel-level mechanisms that isolate processes from one another also help to protect containers.
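
The image scanning described in point 1 can be pictured as comparing the packages inside an image against a list of known-vulnerable versions. The Python sketch below shows that comparison under loudly stated assumptions: the `ADVISORIES` table, the package names and the version numbers are all invented for illustration and do not correspond to real advisories or to any real scanner’s data format.

```python
# Hypothetical advisory data: package name -> first fixed version.
# These entries are invented for illustration and are not real advisories.
ADVISORIES = {"examplessl": (1, 1, 2), "examplehttp": (2, 4, 0)}

def parse_version(text):
    """Turn '1.1.0' into (1, 1, 0) so that versions compare numerically."""
    return tuple(int(part) for part in text.split("."))

def scan(image_packages):
    """Return the packages in an image that are older than the first fixed version."""
    findings = []
    for name, version in sorted(image_packages.items()):
        fixed_in = ADVISORIES.get(name)
        if fixed_in is not None and parse_version(version) < fixed_in:
            findings.append((name, version, ".".join(map(str, fixed_in))))
    return findings

# Hypothetical package inventory of one container image.
image = {"examplessl": "1.1.0", "examplehttp": "2.4.1", "exampletool": "0.9"}

for name, have, need in scan(image):
    print(f"{name} {have} is vulnerable; upgrade to {need} or later")
```

Since the advisory list keeps changing after an image is signed, a scan like this has to be repeated over time, which is why signing alone is not treated as sufficient.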

Advantages and drawbacks of containerisation

Advantages

1. Containers are much more lightweight than virtual machines, as they use far fewer resources.

2. Containerisation makes more efficient use of the system’s CPU, storage and memory.

3. Containerisation leads to high portability.

4. Containers can be instantiated precisely when they are required and removed when no longer needed, which frees up resources on their hosts.

5. A single server can host far more containers than virtual machines, so its resources are shared more effectively.

6. We can track successive versions of a container image, inspect the differences, or even roll back to previous versions.

7. Containerisation leads to greater modularity.

8. It lets us change individual modules of an application without having to rework the entire application.

Drawbacks

1. Containers are not as strongly isolated from the host OS as virtual machines are.

2. Since application containers are not abstracted from the host OS in the way a VM is, a security threat that breaks out of a container can reach the entire system.

3. Containerisation is ideally used for microservice applications. Containers cannot be used as a universal replacement for VMs.

4. Containers still have a few dependencies on the host, such as a compatible kernel, which can limit their portability between servers.

5. There are a limited number of tools available that can monitor and manage different containers.
