Docker is the world’s leading containerisation platform designed to make it easier to create, deploy and run various applications by using containers. Docker containers wrap up a piece of software into a complete file system that contains everything the software needs to run.

Have you ever developed a snippet of code in Java on your local system and then tried to run it on some other desktop with a different environment? You were probably blocked with a configuration error or exception. If the snippet of code works fine on your system but doesn’t on the system with a different configuration, then you need to make changes to the latter’s configuration in order to make the code run. Sometimes, this gets quite tedious and infuriating.

Containers put an end to such problems. They help software run reliably when it is moved from one computing environment to another, whether from a developer’s desktop to a test environment, from staging into production, or from a physical machine in a data centre to a virtual machine in a public or private cloud. The need for containers arises when the environment in which the software is developed is not identical to the one in which it is deployed. For instance, if code is tested using Python 2.7 and then run on Python 3 in production, it may fail or behave differently. Similarly, software that relies on the behaviour of a particular version of a library may behave unexpectedly when an updated version is installed automatically. It is not only differences in software, configuration or libraries that matter; a different network topology, or different security policies and storage, can also prevent software from running successfully.
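
The kind of mismatch described above can be detected with a small check at start-up. The following is only a minimal sketch in Python: the function name `environment_problems` and its parameters are hypothetical, and it assumes Python 3.8 or later, where the standard library module `importlib.metadata` is available.

```python
import sys
from importlib import metadata


def environment_problems(expected_python, pinned_packages):
    """List mismatches between the running environment and the expected one.

    expected_python: (major, minor) tuple, for example (3, 11).
    pinned_packages: dict mapping a distribution name to the exact
                     version the code was tested against.
    """
    problems = []

    # Compare the interpreter's major.minor version with the expected one.
    actual = tuple(sys.version_info[:2])
    expected = tuple(expected_python)
    if actual != expected:
        problems.append(
            f"Python {actual[0]}.{actual[1]} found, "
            f"expected {expected[0]}.{expected[1]}"
        )

    # Compare each installed library version with the pinned one.
    for name, wanted in pinned_packages.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            problems.append(f"{name} is not installed, expected {wanted}")
            continue
        if installed != wanted:
            problems.append(f"{name} {installed} found, expected {wanted}")

    return problems


if __name__ == "__main__":
    # Hypothetical expectation: the code was tested on Python 2.7.
    for problem in environment_problems((2, 7), {}):
        print(problem)
```

A check like this only reports the drift; a container avoids it by shipping the interpreter and libraries together with the application.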

A container consists of an entire runtime environment: an application together with all its dependencies, such as libraries and other binaries, and the configuration files needed to run it, all bundled into one package. By containerising the application platform and its dependencies, differences in operating system distributions and the underlying infrastructure are abstracted away. Containers encapsulate the discrete components of application logic, each provisioned with only the minimal resources needed to do its job.

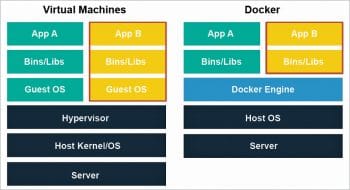

Containers are different from virtual machines

Unlike virtual machines (VMs), containers have no need for embedded operating systems; they request operating system resources through an application programming interface. A physical server running three virtual machines has a hypervisor and three separate operating systems running on top of it. By contrast, a server running three containerised applications, as with Docker, runs just a single operating system, and each container shares that operating system kernel with the other containers. Note that the shared parts of the operating system are read only, while each container has its own mount through which it can write. This means that containers are much more lightweight and use far fewer resources than virtual machines. A container may be only a few megabytes in size, whereas a virtual machine with its own entire operating system may be several gigabytes in size. This is why a single server can host far more containers than virtual machines.
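
The difference in footprint can be illustrated with simple arithmetic. This is a rough sketch only: the function names are hypothetical, and the sizes (a 2048 MB operating system and a 50 MB application) are made-up illustrative figures, not measurements.

```python
def vm_footprint_mb(instances, guest_os_mb, app_mb):
    """Each virtual machine carries its own guest operating system."""
    return instances * (guest_os_mb + app_mb)


def container_footprint_mb(instances, shared_os_mb, app_mb):
    """All containers share one host operating system."""
    return shared_os_mb + instances * app_mb


if __name__ == "__main__":
    # Illustrative assumptions: three applications of 50 MB each,
    # and an operating system of 2048 MB.
    print("3 VMs:       ", vm_footprint_mb(3, 2048, 50), "MB")
    print("3 containers:", container_footprint_mb(3, 2048, 50), "MB")
```

Under these assumed figures, the cost of the operating system is paid once for the containers but three times for the virtual machines, and the gap widens as more instances are added.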

Docker: An open source software containerisation platform

Docker is an open source software containerisation platform designed to make it easier to create, deploy and run various applications by using containers. According to the official Web page of Docker, its containers wrap up a piece of software into a complete file system that contains everything it needs to run: runtime, code, system tools or system libraries – anything we can install on a server. This ensures that it will always run the same, irrespective of the environment it is running in.

Docker provides an additional layer of abstraction and automation of virtualisation at the operating system level on Linux. It uses resource isolation features of the Linux kernel, such as cgroups and kernel namespaces, together with a union-capable file system such as OverlayFS, to allow independent ‘containers’ to run within a single Linux instance. This avoids the overhead of starting and maintaining virtual machines, which improves performance and reduces the size of the deployed application. The Linux kernel’s support for namespaces isolates an application’s view of the operating environment (including process trees, user IDs, network and mounted file systems), while the kernel’s cgroups limit resources, including memory, CPU, block I/O and network.
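
On a Linux system, the namespaces and cgroups a process belongs to are visible under `/proc`. The sketch below reads them for the current process; the function names are hypothetical, and the code assumes the standard Linux `/proc/<pid>/ns` and `/proc/<pid>/cgroup` layout. On other systems it simply returns empty results.

```python
import os
from pathlib import Path


def namespace_ids(pid="self"):
    """Map each namespace type (pid, net, mnt, ...) to its identifier.

    Each entry in /proc/<pid>/ns is a symbolic link whose target looks
    like 'pid:[4026531836]'. Two processes in the same namespace see
    the same target.
    """
    ns_dir = Path("/proc") / str(pid) / "ns"
    result = {}
    try:
        entries = sorted(os.listdir(ns_dir))
    except OSError:
        return result  # not Linux, or no such process
    for entry in entries:
        try:
            result[entry] = os.readlink(ns_dir / entry)
        except OSError:
            continue
    return result


def cgroup_membership(pid="self"):
    """Return (hierarchy_id, controllers, path) tuples for a process.

    Each line of /proc/<pid>/cgroup has the form
    'hierarchy-ID:controller-list:cgroup-path'.
    """
    try:
        text = (Path("/proc") / str(pid) / "cgroup").read_text()
    except OSError:
        return []
    rows = []
    for line in text.splitlines():
        parts = line.split(":", 2)
        if len(parts) == 3:
            rows.append(tuple(parts))
    return rows


if __name__ == "__main__":
    for name, ident in namespace_ids().items():
        print(name, "->", ident)
    for row in cgroup_membership():
        print(row)
```

Running this on the host and again inside a container would be expected to show different namespace identifiers, which is the isolation the paragraph above describes.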

As actions are performed on a Docker base image, union file system layers are created and documented, such that each layer fully describes how to recreate an action. This strategy keeps Docker images lightweight, since only layer updates need to be propagated. Docker implements a high-level API to provide lightweight containers that run processes in isolation. It enables portability and flexibility wherever the application runs, whether on-premise, in a private cloud, in a public cloud or on bare metal. Docker accesses the virtualisation features of the Linux kernel either directly, using the libcontainer library available since Docker 0.9, or indirectly via libvirt and LXC (Linux Containers). Since Docker containers are very lightweight, a single server or virtual machine can run several containers simultaneously. A 2016 analysis found that a typical Docker use case involves running five containers per host, but that many organisations run 10 or more.
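
The idea that only layer updates need to be propagated can be shown with a toy model. This sketch does not reproduce how Docker computes layer identifiers; the functions `layer_id`, `build_layers` and `layers_to_transfer` are hypothetical, and a layer is identified here simply by hashing its parent's identifier together with the action that created it.

```python
import hashlib


def layer_id(parent_id, action):
    """Identify a layer by its parent and the action that produced it."""
    return hashlib.sha256(f"{parent_id}\n{action}".encode()).hexdigest()


def build_layers(actions):
    """Turn an ordered list of actions into a chain of layer identifiers."""
    layers = []
    parent = ""
    for action in actions:
        parent = layer_id(parent, action)
        layers.append(parent)
    return layers


def layers_to_transfer(image_layers, already_present):
    """Return only the layers the receiving host does not have yet."""
    return [layer for layer in image_layers if layer not in already_present]


if __name__ == "__main__":
    # Two versions of an image that differ only in the last action.
    v1 = build_layers(["base image", "install runtime", "copy app v1"])
    v2 = build_layers(["base image", "install runtime", "copy app v2"])

    # A host that already holds v1 needs only the changed layer of v2.
    missing = layers_to_transfer(v2, set(v1))
    print(len(missing), "of", len(v2), "layers need to be sent")
```

Because the first two actions are identical in both versions, their layers are shared, and only the final layer has to be sent to a host that already has the first version.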

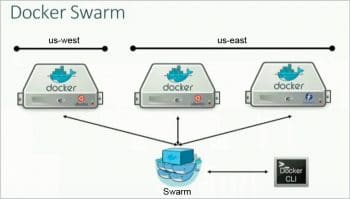

Using Docker to create and manage containers may actually simplify the creation of highly distributed systems by allowing multiple worker tasks, applications and other processes to run autonomously on a single physical machine or even across multiple virtual machines. This allows the deployment of different nodes as soon as the resources become available or even when more nodes are needed. It allows a Platform-as-a-Service (PaaS) style of deployment and the scaling for various systems like Apache Cassandra, Riak or MongoDB. Docker also helps in simplifying the creation and operation of task queues and other such distributed systems. And, most importantly, Docker is open source, which means that anyone can contribute to it and even extend it to meet their own needs in case they need additional features that aren’t available.

Differences between Docker and containers

1. There is some confusion when we try to compare containers and Docker. Docker has become almost synonymous with container technology because it has been the most successful at popularising it. Docker is an open source software containerisation platform, which helps in creating, deploying and running applications by using containers. However, container technology is not new. It was built into Linux in the form of LXC almost 10 years ago, and similar virtualisation at operating system level has been offered by AIX Workload Partitions, FreeBSD jails and Solaris containers as well.

2. Today, Docker is not the only tool for Linux that helps in software containerisation. Alternatives such as Rkt are also available. Rkt, produced by CoreOS, is a command line tool for running app containers. It can handle both Docker containers and those that comply with its own App Container Image specification.

3. One of the most important reasons for launching Rkt is the view that Docker has become too big and has lost its simplicity. Containers help in reliably running any type of software, whereas Docker is now building tools to launch systems for clustering and cloud servers, along with a wide range of other functions such as building and running images, uploading and downloading them, and even overlay networking, all compiled into a single, monolithic binary running primarily as root on the server.

4. According to Kelsey Hightower, chief advocate of CoreOS, App Container images are intended to be more secure than Docker images because they are signed by their creators. When we use Rkt and pull an App Container image, we can decide whether we trust the developer before running it. Rkt can also run Docker images, but they won’t always be signed.

Integration of Docker with several other tools

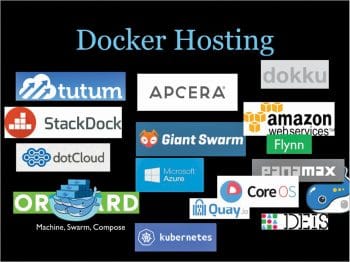

Since Docker provides a platform to deploy an application as a complete package, it needs to integrate well with other tools so that applications deployed on it can interact with them without obstacles. Several infrastructure tools and platforms, such as Amazon Web Services, Ansible, Chef, CFEngine and Google Cloud Platform, can easily be integrated with Docker.

Docker can also interact with other tools like Jenkins, IBM Bluemix, Microsoft Azure, OpenStack Nova, Salt, Vagrant and VMware vSphere Integrated Containers. Cloud Foundry Diego integrates Docker into the Cloud Foundry PaaS. Red Hat’s OpenShift PaaS has integrated Docker and related projects (Kubernetes, Project Atomic, Geard and others) since v3 (June 2015). The Apprenda PaaS integrates Docker containers in version 6.0 of its product.

Stack, the Haskell build tool, has full support for automatically performing builds inside Docker, using volume mounts and user ID switching to make the process mostly seamless. FP Complete provides images for use with Stack that include GHC and other tools and, optionally, have all of the Stackage LTS packages pre-installed in the global package database. The main purpose of using Stack with Docker this way is to ensure that all developers build in a consistent environment without team members needing to deal with Docker themselves.

The recent role of Docker in the IT field

1. Docker is designed to benefit both systems administrators and developers, which makes it a part of many DevOps (developers + operations) tool chains. Developers can focus on writing code without worrying about the system it will run on. Docker makes it possible to set up a local development environment that closely matches the live server. It also lets developers run multiple development environments on the same host, each with its own software, configuration and operating system, and test projects on new or different servers. In addition, they can get a head start by using one of the thousands of programs already designed to run in Docker as a part of their application. For those in operations, Docker provides flexibility and potentially reduces the number of systems needed because of its small footprint and lower overhead.

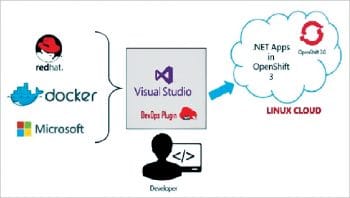

2. Docker, an open source containerisation platform, isn’t just the darling of Linux powers such as Red Hat and Canonical. Proprietary software companies such as Microsoft have also adopted it. Red Hat OpenShift 3 is one of the technologies that uses Docker containers and Kubernetes. OpenShift 3 has evolved and gained great momentum since its inception in 2015. It uses a Git repository to store the source code of an application and a Docker registry to manage Docker images.

3. Docker has also been used as a tooling mechanism to improve resource management, process isolation and security in the virtualisation of applications. This was made clear with the launch of Project Atomic, a lightweight Linux host specifically designed to run Linux containers. The main focus of this project is to make containers easy to run, update and roll back in an environment that requires fewer resources than a typical Linux host.

4. eBay has been quite focused on incorporating Docker into its continuous integration process so as to standardise the deployment across a distributed network of different servers that run as a single cluster. The company also isolates application dependencies inside containers in order to address the issue of each server having different application dependencies, software versions, and special hardware. This means the host OS need not be the same as the container OS, and eBay’s end goal is to have different software and hardware systems running as a single Mesos cluster.

5. CompileBox, a Docker-based sandbox, can run untrusted code and return the output without risk to the host on which it is running. During the development of CompileBox, the developers considered using Ideone, Chroot jails and traditional virtual machines, but selected Docker as the best option. Chroot does not provide the necessary level of security, Ideone can quickly become cost-prohibitive, and virtual machines take an exceptionally long time to reboot after they are compromised. Docker was therefore the obvious choice for this application, as malicious code that attempts to destroy the system is confined to the container, and containers can be created and destroyed as quickly as needed.
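
A sandbox of this kind typically starts each job in a throwaway container with tight limits. The sketch below is not CompileBox’s actual implementation; it only builds a `docker run` command line in Python. The function name `sandbox_command`, the default limits and the example image name are illustrative assumptions, while the flags used (`--rm`, `--network none`, `--memory`, `--cpus`, `--pids-limit`, `--read-only`) are standard `docker run` options.

```python
def sandbox_command(image, command, memory="128m", cpus="0.5", pids_limit=64):
    """Build an argument list that runs `command` in a restricted container.

    Nothing is executed here; the list can be handed to subprocess.run()
    on a machine where Docker is installed.
    """
    return [
        "docker", "run",
        "--rm",                           # delete the container when it exits
        "--network", "none",              # no network access
        "--memory", memory,               # cap memory use
        "--cpus", cpus,                   # cap CPU use
        "--pids-limit", str(pids_limit),  # limit the number of processes
        "--read-only",                    # read-only root file system
        image,
        *command,
    ]


if __name__ == "__main__":
    # Example image and untrusted snippet, both chosen for illustration.
    argv = sandbox_command("python:3-alpine", ["python", "-c", "print(1 + 1)"])
    print(" ".join(argv))
```

With Docker available, the resulting list could be passed to `subprocess.run(argv, capture_output=True, text=True, timeout=10)` so that the output is captured and a runaway job is cut off after a time limit.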

Advantages of Docker

1. Rapid application deployment: Containers include only the minimal runtime requirements of the application, which reduces their size and allows quick deployment.

2. Portability across machines: An application with all its dependencies can be bundled into a single container, which is independent from the host version of the Linux kernel, deployment model or platform distribution. This container can be simply transferred to another machine that runs Docker, and executed there without any compatibility issues.

3. Version control and component reuse: We can track the successive versions of a Docker container, inspect the differences, or even roll back to previous versions. Containers reuse components from the preceding layers, which keeps them lightweight.

4. Sharing: We can use a remote repository to share our Docker container with others. Red Hat even provides a registry for this purpose, and it is also possible to configure our own private repository.

5. Lightweight footprint and minimal overhead: Docker images are usually very small, which helps in rapid delivery and reduces the time needed to deploy new application containers.

6. Simplified maintenance: Docker also reduces the effort and risk of problems caused by application dependencies.

7. Security: From a security point of view, Docker ensures that applications running in containers are segregated from each other, giving us control over traffic flow and management. No Docker container can look into processes that are running inside another container. From an architectural standpoint, each container gets its own set of resources, ranging from processing to network stacks.