Google is trying to filter abusive language online with an open-source tool. Called Conversation AI, the project is currently being developed by Jigsaw, a subsidiary of Google's parent company, Alphabet.

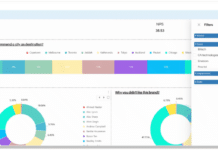

The Conversation AI technology that Google engineers have built at Jigsaw is reportedly capable of identifying abuse with 92 percent certainty. According to Wired magazine, the tool has successfully filtered more than 17 million comments on The New York Times and over 13,000 discussions on Wikipedia. The data is said to have been accurately labeled and scanned using the same software.

Google is using machine learning to filter out trolls on these websites. Although the search giant cites more than 92 percent certainty and a 10 percent false-positive rate, Andy Greenberg of Wired offers a more cautious assessment. According to him, the AI tool still struggles with subtle distinctions. The algorithm is in a nascent state, though it is expected to improve over time.

There have long been attempts to stop web users from accessing illegal and inappropriate content. Adding machine learning to these efforts is a sensible step toward a self-improving filtering engine. That said, the results from Conversation AI need to be tested thoroughly before the technology is widely adopted.

The Jigsaw team plans to make the AI tool available to developers around the world. Meanwhile, Wikimedia and The New York Times are experimenting with its initial version.