Many websites today still run on servers that support HTTP/1.1, a standard released in 1999. Since then, Web browser and application developers have concentrated on strategies for laying out and loading content in the browser more efficiently, to achieve faster rendering and a more real-time experience. Google's work on SPDY, and its adoption by the IETF (Internet Engineering Task Force) as the basis of a standard published as an RFC (Request for Comments), changed the way files are delivered from the Web server to the browser and sped up application rendering substantially.

This article traces the history of HTTP and describes the advantages of the newly released standard, HTTP/2, which is catching on because it delivers data to browsers faster and enables more real-time rendering.

A brief history of the HTTP standard

Tim Berners-Lee, regarded as the inventor of the Web, proposed the World Wide Web project at CERN in 1989. It involved a server, called a Web server, that transported data over TCP/IP. This server implemented a single protocol action, GET, with which the user agent (the browser) asked the server for the data (a Web page) identified by the parameter sent along with the request. The address of that page, which resides at a relative location inside the Web server, is known as its URL (Uniform Resource Locator), and it points to a file written in a simple markup language, namely an HTML file.
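To make the single GET action concrete, the minimal Python sketch below only builds the bytes a browser would send; nothing goes over the network. The path reuses the CERN page mentioned later in this article, the HTTP/1.1 form (with its mandatory Host header) is included purely for comparison, and the function names are illustrative.

```python
def http09_request(path: str) -> bytes:
    """HTTP/0.9: the word GET and a path, nothing else (no version, no headers)."""
    return f"GET {path}\r\n".encode("ascii")


def http11_request(host: str, path: str) -> bytes:
    """HTTP/1.1 for comparison: a versioned request line, then headers (Host is mandatory)."""
    lines = [
        f"GET {path} HTTP/1.1",
        f"Host: {host}",
        "Connection: close",
        "",  # an empty line ends the header block
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


if __name__ == "__main__":
    path = "/hypertext/WWW/TheProject.html"
    print(http09_request(path))
    print(http11_request("info.cern.ch", path))
```

In HTTP/0.9 the server simply replied with the raw HTML and closed the connection; there were no status codes or response headers.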

HTML (Hypertext Markup Language) consists of tags (markup) that identify the segments of a file, such as the head and the body, the divisions inside those sections, and other content referenced by the page (images, for example), as well as audio and video links that the browser later fetches to display or play. The server that serves these hypertext files is also called an HTTP (HyperText Transfer Protocol) server. The protocol was later documented by the IETF in RFC 1945 (HTTP/1.0, 1996).

This way of exchanging documents and information spread quickly in the early 1990s, because the protocols used earlier, such as FTP (file transfer), Gopher (search and retrieval) and Telnet (remote login to another machine), were difficult for users on college campuses who were not computer-savvy.

These servers ran on mainframes and other servers in campus and enterprise intranets, and were used primarily to serve internal documentation and forms.

The very first website that was created by Tim Berners-Lee is still active at http://info.cern.ch/hypertext/WWW/TheProject.html

HTTP versions

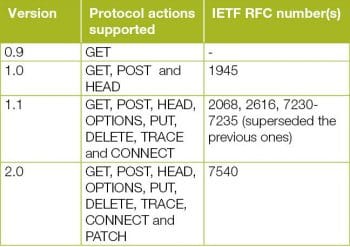

This initial version of the protocol was designated HTTP/0.9. Figure 2 shows the timeline of the versions released since then and the features each one supported.

The actions that were supported in each of these versions and the standard document number (RFC) are tabulated below:

The initial version supported only the GET action, and was restricted to serving HTML files and their associated files, such as images referenced with the <IMG> tag. Applications at that time mainly served documents with images, forms that could be downloaded and printed, calendars of events, and so on.

Once email links could be embedded in HTML pages, there was a need to collect feedback and contact details from people visiting a website and send them to the appropriate staff within the college or company. This is when corporate websites started appearing on the dial-up Internet, and these companies wanted their visitors to fill in forms so that they could collect information. This led to support for the POST action, which sends the information collected in the browser back to the Web server to be saved or forwarded as email. It marked the start of two-way data exchange between servers and browsers.
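A browser submitting a plain HTML form with method="post" encodes the fields as application/x-www-form-urlencoded and places them in the request body. The Python sketch below builds such a request by hand; the host, path and field names are hypothetical, and HTTP/1.1 framing is used only because it is the most familiar form.

```python
from urllib.parse import urlencode, parse_qs


def build_form_post(host, path, fields):
    """Build the raw request a browser sends for <form method="post"> (default encoding)."""
    body = urlencode(fields).encode("ascii")  # e.g. name=Ada+Lovelace&email=...
    head = (
        f"POST {path} HTTP/1.1\r\n"
        f"Host: {host}\r\n"
        "Content-Type: application/x-www-form-urlencoded\r\n"
        f"Content-Length: {len(body)}\r\n"
        "\r\n"
    )
    return head.encode("ascii") + body


if __name__ == "__main__":
    # Hypothetical feedback form on a hypothetical host.
    request = build_form_post("www.example.edu", "/feedback",
                              {"name": "Ada Lovelace", "email": "ada@example.org"})
    print(request.decode("ascii"))
    # The server recovers the fields from the body before saving or emailing them:
    body = request.split(b"\r\n\r\n", 1)[1]
    print(parse_qs(body.decode("ascii")))
```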

As browser-based applications developed, additional actions were needed so that the server could interact with the rich client applications running in the browser, exchange data with them and support workflows for browser users. However, browser vendors competing for market share did not focus on HTTP enhancements; they concentrated instead on rendering text and images faster in the browser itself. Once those improvements reached saturation, the browser vendors, along with Google through its SPDY effort, turned to improving how data is exchanged with HTTP servers, which led to this new protocol standard.

History of user agent(s) or Web browser(s)

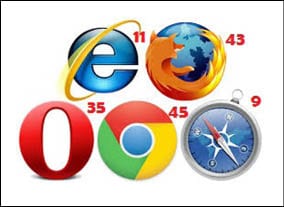

NCSA Mosaic, released in 1993, was the first widely popular graphical browser. Its lead developers went on to create Netscape Navigator, whose descendant is Mozilla Firefox, and in 1995 Microsoft licensed Mosaic's code (through Spyglass) to create Internet Explorer. Apple released its browser, Safari, in 2003, and Google released Chrome in 2008. Each browser competed on efficiency and faster rendering of hypertext files to attract a larger user base, and cross-platform versions were released to increase adoption.

Apart from improving how content was rendered on screen, developers also changed how content was stored and retrieved so that it would reach the browser and render faster. Pages were compressed before transfer to reduce latency on low-bandwidth connections, and interlaced GIF images were used for progressive rendering.
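Compression of this kind is negotiated per response: the browser advertises Accept-Encoding: gzip, and the server replies with Content-Encoding: gzip and a compressed body. A minimal Python sketch of both sides, using a hypothetical page, looks like this:

```python
import gzip


def compress_page(html: str) -> bytes:
    """Server side: gzip the body before replying with Content-Encoding: gzip."""
    return gzip.compress(html.encode("utf-8"))


def decompress_page(body: bytes) -> str:
    """Browser side: undo the encoding before rendering."""
    return gzip.decompress(body).decode("utf-8")


if __name__ == "__main__":
    # Hypothetical page; repetitive markup compresses very well.
    page = "<html><body>" + "<p>Hypertext is text with links.</p>\n" * 200 + "</body></html>"
    packed = compress_page(page)
    print(len(page.encode("utf-8")), "bytes before,", len(packed), "bytes after")
    assert decompress_page(packed) == page
```

The saving depends on the content: already-compressed formats such as GIF or JPEG images gain little, which is why servers typically gzip only text resources.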

Genesis of the HTTP/2 standard

As these changes took place, the content rendered in browsers became increasingly real-time, compared with the static content of the 1990s and up to the mid-2000s. To address this challenge, Google's engineers realised that the server needed to give the browser additional information about which parts of the content had to be updated immediately and which could remain static.

Google announced the SPDY protocol publicly in November 2009. It was designed to reduce page-load latency by carrying many requests over a single connection, letting the browser indicate their priority, allowing the server to push resources, and compressing HTTP headers using zlib (the DEFLATE algorithm that gzip also uses). The name is not an acronym; it is pronounced "speedy".

Google contributed SPDY to the IETF, whose HTTP working group used it as the starting point for HTTP/2; the standardisation effort culminated in RFC 7540, published in May 2015. HTTP/2 did not add new request methods: PATCH, which is often mentioned alongside it, was standardised separately in RFC 5789 (2010). With PUT, the data sent by the browser completely replaces the resource on the server; with PATCH, the browser sends only the incremental changes to be made, so partial updates need less data and are applied faster on the server side.
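The difference between PUT and PATCH can be sketched with an in-memory Python dictionary standing in for a server-side record. The merge rule used for PATCH here follows the JSON Merge Patch convention (RFC 7396), which is only one of several patch formats a server may accept; the record and field names are hypothetical.

```python
import copy


def apply_put(stored, body):
    """PUT: the request body replaces the stored resource entirely."""
    return copy.deepcopy(body)


def apply_merge_patch(target, patch):
    """PATCH in the JSON Merge Patch style (RFC 7396): only the listed fields change.

    A value of None (JSON null) deletes the field; nested objects are merged recursively.
    """
    if not isinstance(patch, dict):
        return copy.deepcopy(patch)
    result = copy.deepcopy(target) if isinstance(target, dict) else {}
    for key, value in patch.items():
        if value is None:
            result.pop(key, None)
        else:
            result[key] = apply_merge_patch(result.get(key), value)
    return result


if __name__ == "__main__":
    # Hypothetical record held by the server.
    record = {"name": "Ada", "email": "ada@example.org", "city": "London"}
    print(apply_put(record, {"email": "ada@example.com"}))
    # {'email': 'ada@example.com'}
    print(apply_merge_patch(record, {"email": "ada@example.com"}))
    # {'name': 'Ada', 'email': 'ada@example.com', 'city': 'London'}
```

With PUT the client must resend every field it wants to keep; with PATCH it sends only the one that changed.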

Early adopters

With SPDY, Google started changing the behaviour of the HTTP server, and followed this by demonstrating the performance improvements its Chrome browser achieved by supporting the protocol. This caught the attention of many content delivery network (CDN) providers, which handle very high request volumes for static files that they host instead of the application servers. They host these files predominantly to reduce access times, by placing them closer to the browsers that request them.

With HTTP/1.1, a connection delivers only one response at a time, in the order the requests were made, so a slow or large response holds up every request queued behind it (a problem known as head-of-line blocking). Browsers worked around this by opening several parallel connections to each server, which adds overhead. The resulting delays in serving browser requests affected the performance of these CDN providers. This motivated Google and these early adopters to push for a standard for the HTTP servers themselves, and led leading servers and browsers to implement this version while it was still at the draft stage.
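The head-of-line blocking problem on a single HTTP/1.1 connection can be shown with a toy Python model. Each number is a hypothetical server "think time" in milliseconds for one resource, requests on the HTTP/1.1 connection are assumed to be handled one after another, and network transfer time, round trips and TCP behaviour are deliberately ignored.

```python
from itertools import accumulate


def finish_times_http11(service_ms):
    """One HTTP/1.1 connection: a response cannot start until the previous one has finished."""
    return list(accumulate(service_ms))


def finish_times_http2(service_ms):
    """One HTTP/2 connection: each stream proceeds independently, on its own schedule."""
    return list(service_ms)


if __name__ == "__main__":
    service = [300, 40, 60]  # hypothetical: one slow resource, then two quick ones
    print(finish_times_http11(service))  # [300, 340, 400]
    print(finish_times_http2(service))   # [300, 40, 60]
```

In this model the two quick resources wait behind the slow one under HTTP/1.1, but arrive almost immediately when multiplexed.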

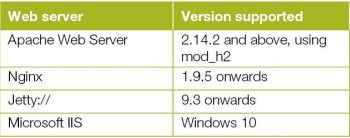

Support for Web servers and browsers

Some of the popular Web servers and the corresponding versions that are supported are tabulated below:

The minimum browser versions that support HTTP/2 are listed in Figure 3.

Many CDN providers, such as Akamai (which spearheaded the adoption of this standard), Cloudflare and CDN77, have moved their servers to versions that support this protocol. Other application hosting providers that depend on high-performance HTTP servers have also moved to the latest versions.

The advantages of HTTP/2

Apart from compression of the data being sent from the server to the client, the following are additional changes that have been made in this version.

A. Concurrency support (multiplexing): The client can send multiple requests over the same connection at the same time, each on its own stream, whereas an HTTP/1.1 connection handles one request-response exchange at a time (even with pipelining, responses must come back in order). Responses can arrive in a different order from the requests, so the browser can render whatever arrives first.

B. Support for prioritisation: The browser can tell the server the relative priority of the resources it has requested, and the server can change the order in which it transmits them, helping the browser render the page faster.

C. Server push support: The server can send the browser additional resources that it has not yet requested but will need for its earlier request (for example, the stylesheet referenced by an HTML page), announcing each one with a PUSH_PROMISE frame.

D. Compression support: In addition to the body compression already available in HTTP/1.1, HTTP/2 compresses headers with HPACK (RFC 7541), which reduces the time taken to transport requests and responses.

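E. PATCH support: PATCH is a variation of the PUT action in which the browser instructs the server to update the information partially instead of replacing it completely, as PUT does. Strictly speaking, PATCH is defined in RFC 5789 rather than in the HTTP/2 specification, so it works over HTTP/1.1 as well.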

F. RST_STREAM support: The client can send an RST_STREAM frame to tell the server that the user has abandoned the requested operation (pressed Back or Stop, aborted a download, and so on), so that the server can stop that one transfer immediately without closing the connection. With earlier versions, the only way to abort a transfer was to close the whole connection; otherwise, the transfer ran on and wasted bandwidth.
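Multiplexing (item A) and cancellation (item F) both rest on HTTP/2's binary framing layer (RFC 7540, section 4): every frame starts with a 9-byte header holding a 24-bit payload length, an 8-bit type, 8 bits of flags and a 31-bit stream identifier. The Python sketch below builds DATA frames for two responses and interleaves them on one connection, then builds the RST_STREAM frame (error code CANCEL) a browser would send when the user presses Stop. It deliberately omits the connection preface, SETTINGS, HEADERS frames, flow control and TLS that a real connection needs, so treat it as an illustration of the wire format rather than a usable client; the helper names are hypothetical.

```python
import struct

# Frame types and codes from RFC 7540 (sections 6 and 7).
DATA = 0x0
RST_STREAM = 0x3
END_STREAM = 0x1   # flag: last DATA frame of a stream
CANCEL = 0x8       # RST_STREAM error code: stream no longer needed


def frame(ftype, flags, stream_id, payload):
    """Build one frame: a 9-byte header (length, type, flags, stream id) plus payload."""
    n = len(payload)
    header = struct.pack(">BHBBI", n >> 16, n & 0xFFFF, ftype, flags,
                         stream_id & 0x7FFFFFFF)  # top bit of the stream id is reserved
    return header + payload


def parse_header(raw):
    """Decode the 9-byte header back into (length, type, flags, stream id)."""
    hi, lo, ftype, flags, sid = struct.unpack(">BHBBI", raw[:9])
    return (hi << 16) | lo, ftype, flags, sid & 0x7FFFFFFF


def interleave(bodies, chunk=4):
    """Multiplex several response bodies on one connection, round-robin, a chunk at a time."""
    offsets = {sid: 0 for sid in bodies}
    frames = []
    while offsets:
        for sid in list(offsets):
            start = offsets[sid]
            last = start + chunk >= len(bodies[sid])
            piece = bodies[sid][start:start + chunk]
            frames.append(frame(DATA, END_STREAM if last else 0, sid, piece))
            if last:
                del offsets[sid]
            else:
                offsets[sid] = start + chunk
    return frames


def cancel(stream_id):
    """RST_STREAM with CANCEL: stop one stream (e.g. the user pressed Stop), keep the connection."""
    return frame(RST_STREAM, 0, stream_id, struct.pack(">I", CANCEL))


if __name__ == "__main__":
    # Hypothetical responses on client-initiated (odd-numbered) streams 1 and 3.
    frames = interleave({1: b"<h1>Hello</h1>", 3: b"body{color:red}"})
    print([parse_header(f)[3] for f in frames])  # [1, 3, 1, 3, 1, 3, 1, 3]
    print(cancel(3).hex())
```

Because every frame names its stream, the receiver can reassemble each response independently, and cancelling stream 3 leaves stream 1 untouched.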
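Prioritisation (item B) can be illustrated with the weights defined in RFC 7540 (section 5.3.2): each stream carries a weight from 1 to 256, and streams that share a parent should receive resources in proportion to their weights. The sketch below applies that rule to a flat set of sibling streams only, ignoring the dependency tree; the stream IDs, weights and capacity figure are hypothetical, and real servers are free to treat priorities merely as hints.

```python
def share_bandwidth(weights, capacity):
    """Split capacity among sibling streams in proportion to their weights (RFC 7540, 5.3.2)."""
    for sid, w in weights.items():
        if not 1 <= w <= 256:
            raise ValueError(f"stream {sid}: weight {w} is outside 1..256")
    total = sum(weights.values())
    return {sid: capacity * w / total for sid, w in weights.items()}


if __name__ == "__main__":
    # Hypothetical: stylesheet on stream 1, two images on streams 3 and 5, 1000 kbit/s link.
    print(share_bandwidth({1: 128, 3: 64, 5: 64}, 1000))  # {1: 500.0, 3: 250.0, 5: 250.0}
```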
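HTTP/2 header compression is performed by HPACK (RFC 7541), which replaces repeated header fields with small table indexes. One self-contained piece of HPACK is its prefix-integer encoding (section 5.1), used for those indexes and for string lengths. The Python sketch below implements that rule and can be checked against the RFC's worked examples; it is not a full HPACK encoder (there is no dynamic table or Huffman coding).

```python
def encode_int(value, prefix_bits, first_byte_flags=0):
    """HPACK integer representation (RFC 7541, section 5.1)."""
    max_prefix = (1 << prefix_bits) - 1
    if value < max_prefix:
        return bytes([first_byte_flags | value])   # fits in the prefix bits
    out = [first_byte_flags | max_prefix]
    value -= max_prefix
    while value >= 128:
        out.append((value % 128) | 0x80)            # low 7 bits, continuation bit set
        value //= 128
    out.append(value)
    return bytes(out)


def decode_int(data, prefix_bits):
    """Inverse of encode_int: returns (value, number of bytes consumed)."""
    max_prefix = (1 << prefix_bits) - 1
    value = data[0] & max_prefix
    if value < max_prefix:
        return value, 1
    shift, i = 0, 1
    while True:
        byte = data[i]
        value += (byte & 0x7F) << shift
        shift += 7
        i += 1
        if not byte & 0x80:
            return value, i


if __name__ == "__main__":
    print(encode_int(1337, 5).hex())      # 1f9a0a (RFC 7541, appendix C.1.2)
    # Indexed header field: static-table entry 2 is ":method: GET", one byte on the wire.
    print(encode_int(2, 7, 0x80).hex())   # 82
```

The last line shows where the saving comes from: a header that HTTP/1.1 would send as text on every request becomes a single byte.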

Measuring speed improvements

Akamai, a leading CDN provider, has been at the forefront of HTTP/2 adoption and supported the SPDY effort. Its demo at http://http2.akamai.com/ compares the time taken to load its famous tiled Spinning Globe image over HTTP/1.1 and HTTP/2. With HTTP/1.1, the latency was 264ms and the load time was 32.7ms; with HTTP/2, the latency was 0ms and the load time was 3.17ms.

CDN provider Cloudflare runs the largest number of SPDY and HTTP/2 servers in the world, and its demo site, https://www.cloudflare.com/http2/, shows HTTP/2 loading about three times faster than HTTP/1.1: the tiled image of its server distribution map took 25.18s to load from the HTTP/1.1 server and 8.34s from the HTTP/2 server.

These improvements make websites load faster and deliver real-time updates sooner. They should also make sites more attractive to users in locations where high-speed broadband is still not available.

Migrating to a new standard

According to Shodan, a search engine that tracks Internet-connected servers worldwide (https://blog.shodan.io/tracking-http2-0-adoption/), in December 2015 HTTP/1.1 servers still outnumbered servers supporting any form of SPDY or HTTP/2 by about three to one. Moving to HTTP/2 brings all the advantages of the protocol, and a checklist will help in planning and executing the migration.

If the website being migrated already supports HTTPS (TLS, the successor to SSL), the upgrade is mostly a matter of moving to a server version that includes HTTP/2 support. If the website does not support HTTPS, the first step is to convert it into a secure site, because the major browsers only use HTTP/2 over TLS, and then to ensure that the server supports the new standard.
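Browsers and servers agree on HTTP/2 during the TLS handshake through ALPN (Application-Layer Protocol Negotiation), with "h2" as the protocol identifier. After the upgrade, one quick way to confirm that the server is actually offering HTTP/2 is to perform the same negotiation with Python's standard ssl module, as in the sketch below; the function names are hypothetical and the host you pass in is your own.

```python
import socket
import ssl
from typing import Optional


def make_h2_context() -> ssl.SSLContext:
    """Client TLS context that offers HTTP/2 ("h2") and HTTP/1.1 via ALPN."""
    ctx = ssl.create_default_context()          # certificate and hostname checks enabled
    ctx.set_alpn_protocols(["h2", "http/1.1"])  # offered to the server, which makes the choice
    return ctx


def negotiated_protocol(host: str, port: int = 443, timeout: float = 5.0) -> Optional[str]:
    """Complete a TLS handshake with host and return the ALPN protocol the server selected."""
    ctx = make_h2_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.selected_alpn_protocol()
```

Calling negotiated_protocol with your site's hostname should return 'h2' once HTTP/2 is enabled; 'http/1.1' means the server chose HTTP/1.1, and None means it did not take part in ALPN at all.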

After the server is upgraded, study the layout of the resources it serves in detail to ensure that the applications hosted on it make appropriate use of concurrency, prioritisation and server push. For example, HTTP/1.1 workarounds such as spreading resources across several domains (domain sharding) or concatenating many files into one may no longer help, because HTTP/2 already multiplexes requests over a single connection. Additionally, the SEO optimisations and the image types used in applications should be analysed to ensure that the server's performance improves while the functionality of the earlier versions is preserved.