Manipulating sound and video files appeals to one's creative instincts. FFmpeg, which is used by application software such as VLC and MPlayer, can be used to great effect when working with sound and video files. Let's take a look at how concatenation, fading and overlay can be done in video files using FFmpeg.

FFmpeg is a very fast video and audio converter that can also grab content from a live audio or video source. It can convert arbitrary sample rates and resize videos on-the-fly with a high quality poly-phase filter. This description, along with complete documentation, can be found on the FFmpeg home page. According to Wikipedia, FFmpeg is used by application software such as the VLC media player, MPlayer, Xine, HandBrake, Plex, Blender, YouTube and MPC-HC. It is one of the most popular open source audio/video converters.

Understandably, FFmpeg is quite complex, and it would be naïve for a reader to expect, or for an author to attempt, complete coverage in a single article. Instead, I would like to use a specific example to show how FFmpeg works.

In the January 2015 edition of OSFY, I wrote about the tool grokit. More details on the tool can be found at http://grokit.pythonanywhere.com. I also created a couple of videos explaining how this tool is used; they are accessible at https://www.youtube.com/watch?v=XzrPlAfiC1w and https://www.youtube.com/watch?v=BTGnZShDiqA. As you can see, these videos were created by stitching multiple screencast videos together. In this article, I will explain how this was accomplished using FFmpeg.

FFmpeg is available for Linux, Windows and Mac. It can be downloaded from http://ffmpeg.org/download.html.

Stitching two videos

Let us assume that we have two AVI files (1920 x 1080) named video1.avi and video2.avi, with durations of 5s and 10s, respectively. The following command creates video.avi by concatenating the two videos and fading them at the point where they are joined. Note that there are multiple ways to achieve the same effect; the command below is merely one example. Please refer to https://www.ffmpeg.org/ffmpeg-all.html for a detailed explanation of all the options used in the command.

ffmpeg -i video1.avi -i video2.avi -f lavfi -i color=black -filter_complex "[0:v]fade=t=out:st=4:d=1:alpha=1,setpts=PTS-STARTPTS[v0];[1:v]fade=t=in:st=0:d=1:alpha=1,setpts=PTS-STARTPTS+5/TB[v1];[2:v]scale=1920x1080,trim=duration=15[base];[base][v0]overlay[base1];[base1][v1]overlay[outv]" -map "[outv]" video.avi

Admittedly, the above command looks scary.

I will try to explain the command, starting with the options used in it:

- -i: FFmpeg uses the -i option to accept inputs. Each input is provided as the parameter of its own -i option.

- -f lavfi: We need a background video stream on which video1 and video2 can be overlaid. For this, we use the libavfilter input virtual device (https://www.ffmpeg.org/ffmpeg-all.html#lavfi), which treats its input, color=black, as a filter graph that generates a black video stream.

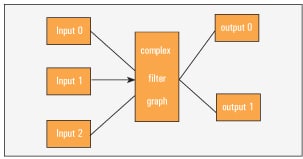

- -filter_complex: A filter complex is used to represent a complex filter graph in FFmpeg. The parameter after the -filter_complex option (in double quotes, so that the shell does not interpret the semicolons and brackets) consists of a series of filter chains separated by semicolons. Complex filter graphs are those which cannot be described simply as a linear processing chain applied to one stream. This is the case, for example, when the graph has more than one input and/or output, or when the output stream type is different from the input (see https://www.ffmpeg.org/ffmpeg-all.html#Complex-filtergraphs).

Each filter in the graph is represented by a string of the form [in_link_1]…[in_link_N]filter_name=arguments[out_link_1]…[out_link_M], where in_link_x labels the inputs that will be filtered by filter_name and out_link_x labels its outputs. Multiple filters within a chain are separated by commas. Now, let us look at the filter chains used in the command.

[0:v]fade=t=out:st=4:d=1:alpha=1,setpts=PTS-STARTPTS[v0]

[0:v] represents the input video stream. The number to the left of the colon is the input number (starting with 0), based on the order in which the inputs are given with the -i option. (So, 0 represents video1.avi, 1 represents video2.avi, etc.) The v to the right of the colon selects the video stream, here the one from video1.avi. We apply two filters to this input: fade and setpts. Since we want the first video to fade out and the second video to fade in at the point where they are stitched, we use the fade filter present in FFmpeg (https://www.ffmpeg.org/ffmpeg-all.html#fade). We set the fade type to out and the duration of fading to 1s, starting at 4s. (Remember that video1.avi is 5s long, so we want to fade out the video in the last 1s.) With alpha=1, fade acts only on the alpha (transparency) channel, so the fading frames become transparent and the black background shows through once they are overlaid. setpts sets the presentation time stamp (PTS) of each frame in the output stream. PTS and STARTPTS are constants defined in FFmpeg (https://www.ffmpeg.org/ffmpeg-all.html#setpts_002c-asetpts): PTS is the time stamp of the current frame and STARTPTS that of the first frame, so PTS-STARTPTS shifts all time stamps so that the output stream starts at zero. The output video stream is represented by v0.
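The fade-out start time follows directly from the clip length. Writing D for the duration of video1.avi and d for the fade duration (symbols introduced here only for illustration), the fade has to occupy the last d seconds of the clip:

```latex
\text{st} = D - d = 5\,\text{s} - 1\,\text{s} = 4\,\text{s},
\qquad [\text{st},\ \text{st} + d] = [4\,\text{s},\ 5\,\text{s}]
```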

[1:v]fade=t=in:st=0:d=1:alpha=1,setpts=PTS-STARTPTS+5/TB[v1]

In the above chain, the video stream of the second input (video2.avi) is the input video stream. The video fades in over 1s, starting from its beginning. This video has to be shown after video1.avi has faded out (i.e., at 5s), so we use the setpts filter to offset its presentation time stamps by 5s. TB is a constant representing the time base of the input time stamps, so 5/TB expresses 5 seconds in time-base units. The output video stream is represented by v1.
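As a worked example, assume that video2.avi has a time base of 1/25 (an assumption for illustration, as for a 25 fps file; the real value depends on the input). The setpts expression then shifts every frame by 125 ticks, which is exactly 5 seconds:

```latex
\begin{aligned}
\mathrm{PTS}_{\text{out}} &= \mathrm{PTS} - \mathrm{STARTPTS} + \frac{5}{\mathrm{TB}},
  \qquad \mathrm{TB} = \tfrac{1}{25} \;\Rightarrow\; \frac{5}{\mathrm{TB}} = 125,\\
t_{\text{first frame}} &= (0 + 125)\cdot\mathrm{TB} = \frac{125}{25}\,\text{s} = 5\,\text{s}.
\end{aligned}
```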

[2:v]scale=1920x1080,trim=duration=15[base]

Here, the third input (the black stream from the virtual device) acts as the input video stream. This stream is scaled to match the resolution of video1.avi and video2.avi, and trimmed to a total duration of 15s (5s + 10s). The output stream is represented by base.

[base][v0]overlay[base1];[base1][v1]overlay[outv]

These two chains overlay the streams on base one after the other: v0 is overlaid on base to produce base1, and v1 is then overlaid on base1. The resulting stream is represented by outv.

- -map: The final video stream outv is designated as the source for the output file using this option (see https://www.ffmpeg.org/ffmpeg-all.html#Advanced-options).

Look at the command again. Hopefully it is not as scary now.
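When reading such a command, it can help to print the filter graph one chain per line. The following POSIX shell sketch (the helper name show_chains is hypothetical) does this for the graph of the first command by replacing each semicolon with a newline; it only manipulates text and does not run FFmpeg.

```sh
#!/bin/sh
# Hypothetical helper: print a filter graph with one filter chain per line.
# Chains are separated by ';', filters inside a chain by ','.
show_chains() {
    printf '%s\n' "$1" | tr ';' '\n'
}

# Filter graph of the stitching command above
GRAPH='[0:v]fade=t=out:st=4:d=1:alpha=1,setpts=PTS-STARTPTS[v0];[1:v]fade=t=in:st=0:d=1:alpha=1,setpts=PTS-STARTPTS+5/TB[v1];[2:v]scale=1920x1080,trim=duration=15[base];[base][v0]overlay[base1];[base1][v1]overlay[outv]'
show_chains "$GRAPH"
```

Each printed line is then one chain of the form [inputs]filter,filter[outputs], matching the pieces explained above.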

Adding an image

Often, there is a need to show a picture for a couple of seconds in a video (for example, when showing a logo). The command below creates a video (img.avi) that shows the image img.jpg. The -loop 1 option loops over the single input image, the -t option sets the duration of the video to 5s and the -s option sets the resolution.

ffmpeg -loop 1 -i img.jpg -t 5 -s 1920x1080 img.avi

Multiple videos

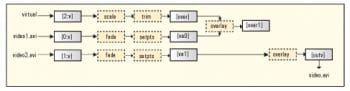

When there are more than two videos to be stitched, the procedure extends in a straightforward manner. Though it is easy, care has to be taken in choosing the parameters of the fade and setpts filters so that each video is overlaid at the proper time. The command given below creates video.avi by stitching img.avi and the previously used video1.avi and video2.avi.

Also, note that in this case, video1.avi is both faded in (at the beginning) and faded out (at the end).

ffmpeg -i img.avi -i video1.avi -i video2.avi -f lavfi -i color=black -filter_complex "[0:v]fade=t=out:st=4:d=1:alpha=1,setpts=PTS-STARTPTS[v0];[1:v]fade=t=in:st=0:d=1:alpha=1,fade=t=out:st=4:d=1:alpha=1,setpts=PTS-STARTPTS+5/TB[v1];[2:v]fade=t=in:st=0:d=1:alpha=1,setpts=PTS-STARTPTS+10/TB[v2];[3:v]scale=1920x1080,trim=duration=20[base];[base][v0]overlay[base1];[base1][v1]overlay[base2];[base2][v2]overlay[outv]" -map "[outv]" video.avi

As you would have guessed by now, a script can be used to construct the above command. Once we have the list of videos, the sequence they need to be stitched in, and the lengths of the videos, a script can speed up this process and make it less error prone. The base video stream (represented as [base]) can also be generated using the nullsrc filter.
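Here is a minimal sketch of such a script in POSIX shell. The function name build_filter, the fixed 1920x1080 resolution, the 1s fade and whole-second durations are assumptions for illustration, and it uses the black color source as in the commands above rather than nullsrc. It only prints the graph: called with the durations 5 and 10 it reproduces the graph of the two-video command, and with 5, 5 and 10 that of the three-video command.

```sh
#!/bin/sh
# Hypothetical helper: build_filter prints a -filter_complex graph that
# fades N clips in and out over a black base stream, in the style of the
# commands above. Arguments are clip durations in whole seconds, in the
# order the clips are passed to ffmpeg with -i. Assumes every clip is
# 1920x1080 and uses integer durations (shell arithmetic is integer-only).

FADE=1          # fade length in seconds (assumption)
SIZE=1920x1080  # resolution of every clip (assumption)

build_filter() {
    n=$#        # number of clips; the color source is input number $n
    i=0         # input index of the current clip
    offset=0    # start time of the current clip in the output, in seconds
    graph=""
    for dur in "$@"; do
        chain="[$i:v]"
        # Every clip except the first fades in over its first FADE seconds.
        if [ "$i" -gt 0 ]; then
            chain="${chain}fade=t=in:st=0:d=$FADE:alpha=1,"
        fi
        # Every clip except the last fades out over its last FADE seconds.
        if [ "$i" -lt $((n - 1)) ]; then
            chain="${chain}fade=t=out:st=$((dur - FADE)):d=$FADE:alpha=1,"
        fi
        # Shift the clip so that it starts where the previous one ended.
        if [ "$i" -eq 0 ]; then
            chain="${chain}setpts=PTS-STARTPTS[v$i]"
        else
            chain="${chain}setpts=PTS-STARTPTS+$offset/TB[v$i]"
        fi
        graph="${graph}${chain};"
        offset=$((offset + dur))
        i=$((i + 1))
    done
    # Black base stream that lasts as long as all clips together.
    graph="${graph}[$n:v]scale=$SIZE,trim=duration=$offset[base]"
    # Overlay the clips one after another: base -> base1 -> ... -> outv.
    prev=base
    i=0
    while [ "$i" -lt "$n" ]; do
        if [ "$i" -eq $((n - 1)) ]; then
            next=outv
        else
            next=base$((i + 1))
        fi
        graph="${graph};[$prev][v$i]overlay[$next]"
        prev=$next
        i=$((i + 1))
    done
    printf '%s\n' "$graph"
}

# Example (run in the same shell session):
#   ffmpeg -i img.avi -i video1.avi -i video2.avi -f lavfi -i color=black \
#     -filter_complex "$(build_filter 5 5 10)" -map "[outv]" video.avi
```

Computing the fade start times, setpts offsets and base duration from one list of durations is what removes the error-prone hand arithmetic.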

Overlay (PIP) videos

In the previous examples, we used the overlay filter to place videos on top of a background stream and thereby concatenate them. Overlaying can do much more. The example below shows how we can create a picture-in-picture (PIP) video using the overlay filter:

ffmpeg -i img.avi -i video1.avi -filter_complex "[0:0]scale=320:240[v0];[1:0][v0]overlay=overlay_w:overlay_h[outv]" -map "[outv]" pip_video.avi

Here, we scale img.avi down to a smaller size (320 x 240) and then overlay it on top of video1.avi.

First, note that I am using [0:0] instead of [0:v] to refer to the video stream. [0:0] selects stream number 0 of input 0, which is usually, though not necessarily, the video stream. Referring to streams by index is useful when an input has more than one stream of the same type, for example, multiple audio streams. The scale filter scales the video stream from the first input to a smaller size. The overlay filter accepts two parameters, x and y, that give the position of the overlaid video on top of the base video. We have used overlay_w and overlay_h, which represent the width and height of the overlaid video in FFmpeg, so the top-left corner of the PIP video is placed 320 pixels from the left and 240 pixels from the top of the frame. We can also give absolute values for these parameters.
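Absolute values can be worked out from the frame sizes. The POSIX shell sketch below (the helper name pip_position and the 10-pixel margin are hypothetical) computes the x:y position that places a 320 x 240 PIP 10 pixels in from the bottom-right corner of a 1920 x 1080 video such as video1.avi.

```sh
#!/bin/sh
# Hypothetical helper: print "x:y" for the overlay filter so that a
# PIP_W x PIP_H picture sits MARGIN pixels in from the bottom-right corner
# of a MAIN_W x MAIN_H video. All sizes are integer pixel counts.
pip_position() {
    main_w=$1
    main_h=$2
    pip_w=$3
    pip_h=$4
    margin=$5
    printf '%d:%d\n' $((main_w - pip_w - margin)) $((main_h - pip_h - margin))
}

# Usage with the PIP command above (video1.avi is 1920x1080):
#   ffmpeg -i img.avi -i video1.avi -filter_complex \
#     "[0:v]scale=320:240[v0];[1:v][v0]overlay=$(pip_position 1920 1080 320 240 10)[outv]" \
#     -map "[outv]" pip_video.avi
```

The same placement can also be written as the expression overlay=main_w-overlay_w-10:main_h-overlay_h-10, which FFmpeg evaluates itself, so the size of the main video does not need to be known in advance.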

Audio

Until now, we have looked at the video streams only. How good is a video without its audio?

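Unless streams are mapped explicitly, FFmpeg selects an audio stream for the output automatically: the one with the most channels or, in the case of a tie, the one from the earliest input. With inputs like ours, that is the audio stream of the first input. Once any -map option is used, however, only the mapped streams are written, which is why the commands used till now, which map only [outv], produce video without sound. If we leave the final output of the filter graph unlabeled and drop the -map option, the filter output becomes the video of the output file and the audio stream from the first input is included as well.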

For example:

ffmpeg -i video1.avi -i video2.avi -filter_complex "[0:0]scale=320:240[v0];[1:0][v0]overlay=overlay_w:overlay_h" out.avi

This is similar to the overlay command used before. But now, the audio from the first video is available in out.avi.

To explicitly concatenate the audio from two files, the following command can be used:

ffmpeg -i video1.avi -i video2.avi -f lavfi -i color=black -filter_complex "[0:v]fade=t=out:st=4:d=1:alpha=1,setpts=PTS-STARTPTS[va0];[1:v]fade=t=in:st=0:d=1:alpha=1,setpts=PTS-STARTPTS+5/TB[va1];[2:v]scale=1920x1080,trim=duration=15[over];[over][va0]overlay[over1];[over1][va1]overlay[outv];[0:a]atrim=end=5,asetpts=PTS-STARTPTS[a1];[1:a]atrim=end=10,asetpts=PTS-STARTPTS[a2];[a1][a2]concat=n=2:v=0:a=1[outa]" -map "[outa]" -map "[outv]" video.avi

Let us look at the filter graphs used for audio.

[0:a]atrim=end=5,asetpts=PTS-STARTPTS[a1];[1:a]atrim=end=10,asetpts=PTS-STARTPTS[a2]

The audio stream from the first input is passed through two filters: atrim and asetpts. The former trims the audio stream to 5s, and asetpts resets the time stamps of the audio frames so that they start at zero. The same filters are applied to the audio stream from the second input. Note that atrim is only needed to cut the audio of an input to a given length; since we are using the entire length of each clip here, it can be omitted in this case.

[a1][a2]concat=n=2:v=0:a=1[outa]

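The two streams ([a1] and [a2]) are then joined one after the other by the concat filter to create the final [outa] stream that is written to the output file. The parameters n=2, v=0 and a=1 tell concat that there are two segments, with no video stream and one audio stream in the output.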
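When there are more clips, the audio part of the graph follows the same pattern. The POSIX shell sketch below (the function name build_audio_filter is hypothetical) takes the clip durations in seconds, in -i order, and prints the trim-and-concat chains; for 5 and 10 it reproduces the audio part of the command above.

```sh
#!/bin/sh
# Hypothetical helper: print the audio part of a filter graph that trims
# the audio stream of each input and concatenates them in input order.
# Arguments are clip durations in seconds, in the order given with -i.
build_audio_filter() {
    i=0
    graph=""
    labels=""
    for dur in "$@"; do
        label="a$((i + 1))"    # a1, a2, ... as in the command above
        graph="${graph}[$i:a]atrim=end=$dur,asetpts=PTS-STARTPTS[$label];"
        labels="${labels}[$label]"
        i=$((i + 1))
    done
    # concat: $# segments, no video stream (v=0), one audio stream (a=1)
    printf '%s%sconcat=n=%d:v=0:a=1[outa]\n' "$graph" "$labels" "$#"
}

build_audio_filter 5 10
```

Its output can be appended, after a semicolon, to a video graph in the same -filter_complex argument, with [outa] then mapped using -map.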

The FFmpeg documentation is very comprehensive and can be used as a good reference while using the tool. There are lots of other options that can be used with FFmpeg to modify audio and video files as desired.

References

[1] https://www.ffmpeg.org/ffmpeg.html

[2] http://en.wikipedia.org/wiki/FFmpeg