Hopefully, readers have learnt from and enjoyed the earlier articles in this series on Wireshark, which started in July 2014. They covered various important and interesting Wireshark features, protocols such as ARP and DHCP, capturing packets in a switched environment and detecting an ARP spoofing attack, among other topics. This article discusses how to put Wireshark to use when troubleshooting slow networks.

Computer users often say, "The network is slow" or "The Internet is working very slowly." Field engineers trying to troubleshoot such problems face an uphill task: first, figuring out what exactly a slow network means, and then locating and solving the actual problem. There are just too many aspects and variables to check.

The actual reasons for slow network speeds could be broadly classified as follows.

Network related issues:

- Slow Internet bandwidth available from the ISP, especially in shared connections

- A faulty Ethernet switch

- A fault in the Ethernet interface on the switch

- A defective Ethernet card on a computer system or device in the network

- The presence of electrical noise in the vicinity of the network's cabling

Problems linked to the operating system, drivers and everything else:

- Ethernet device drivers

- Presence of worms (a type of malware that generates a lot of network traffic)

- Inadequately configured computer hardware

- Too many downloads initiated from a single system or network

- All systems scheduled to update their anti-virus software or operating system simultaneously

- Heavy simultaneous use of ERP software at particular times

To arrive at the correct troubleshooting approach, consider the fundamental basis of networking: the OSI and TCP/IP layers. I assume that readers are familiar with them, and plenty of excellent information is available on the Internet. Still, let me describe these layers briefly for relevance and quick reference.

The OSI model separates network communication into seven layers, each providing services to the layer immediately above it and using the services of the layer immediately below it. This enables structured, standardised communication between systems. The OSI model does not require all seven layers to be present, and multiple adjacent layers may be combined into one. Today, the most widely used model is TCP/IP, which has four layers. Table 1 describes the four TCP/IP layers, their functions and the corresponding OSI layer(s).

These OSI and TCP/IP layers are important from a troubleshooting perspective: depending upon the situation, they can serve as guidelines when selecting a troubleshooting approach.

The top down approach: Diagnosis starts with the operating system, device drivers, anti-virus software and application software, before moving down towards the network access layer.

The bottom up approach: Troubleshooting starts with the Ethernet media (cabling), the Ethernet card, the MAC address and the IP address, before moving up the layers towards the application layer. In short, faults in the network infrastructure are ruled out first.
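As a quick reference alongside Table 1, the commonly cited correspondence between the four TCP/IP layers and the seven OSI layers, and the order in which each approach walks through them, can be sketched in Python. The layer names follow common usage, and the helpers tcp_ip_layer_for() and troubleshooting_order() are illustrative assumptions, not part of Wireshark or any library.

```python
# Commonly cited mapping of the four TCP/IP layers (listed top to bottom)
# to the OSI layers each one covers. Illustrative only.
TCP_IP_TO_OSI = {
    "Application": ["Application", "Presentation", "Session"],
    "Transport": ["Transport"],
    "Internet": ["Network"],
    "Network Access": ["Data Link", "Physical"],
}


def tcp_ip_layer_for(osi_layer: str) -> str:
    """Return the TCP/IP layer that covers the given OSI layer."""
    for tcp_ip_layer, osi_layers in TCP_IP_TO_OSI.items():
        if osi_layer in osi_layers:
            return tcp_ip_layer
    raise KeyError(f"unknown OSI layer: {osi_layer}")


def troubleshooting_order(approach: str) -> list:
    """List the TCP/IP layers in the order a given approach examines them."""
    top_down = list(TCP_IP_TO_OSI)  # dicts keep insertion order (Python 3.7+)
    if approach == "top-down":
        return top_down
    if approach == "bottom-up":
        return top_down[::-1]
    raise ValueError("approach must be 'top-down' or 'bottom-up'")


print(troubleshooting_order("bottom-up"))
# ['Network Access', 'Internet', 'Transport', 'Application']
```

The two approaches visit the same layers; only the starting point differs, which is why the initial discussion with the user matters so much in choosing between them.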

Before deciding upon an approach and starting the actual tests or hands-on diagnosis, discuss the problem with the end user or site engineer (if any). For example, when a slow network is reported, the actual problem could be the inaccessibility (or slow speed) of one particular service, say FTP. Here, the top down approach is the natural choice. As you troubleshoot more and more problems, you will develop the insight that helps you choose the correct approach and locate the exact problem in the shortest possible time.

This article describes three real-life network troubleshooting scenarios, which should be interesting, readily applicable and helpful in day-to-day network diagnostics, especially for field engineers.

Scenario 1: The Internet is not working

The network set-up had approximately 75 computer systems spread across four floors of one building. The server room, on the second floor, housed the networking rack, the firewall, the router for Internet connectivity and the servers. The cabling was structured, with patch panels in the rack and information outlets at the user end. Cables and passive network components were of a reputed brand, and the installation was approximately four years old. Six 10/100 Mbps unmanaged Ethernet switches were used: one connected all the servers, and the remaining five connected the systems on the various floors. Site inspection revealed that the cabling had been done as per the industry's best practices, with numbering at both ends, cable bend radius guidelines followed, and patch cords used at both ends.

Detailed discussions with the administrator revealed a very interesting problem. First, the Internet would stop working; then the network would become slower and slower until it came to a complete halt, at which point even copying files locally and accessing other computer systems became impossible. The problem occurred randomly and had developed gradually. Rebooting the switches always stabilised the network, but after a random period of time, the problem reappeared. In short, the problem was actually that the entire LAN stopped functioning; it was not limited to "the Internet is not working".

Had managed Ethernet switches with a SPAN port (port mirroring) been available, the best approach would have been to use Wireshark. With a SPAN port, traffic from all the other ports on a switch can be copied to one port, so a system running Wireshark connected to that port captures the entire switch traffic. Analysing this capture would lead to the faulty component or system. However, this site had no managed switches, so a different approach was followed:

1. First, the stacking connections between the switches were disconnected.

2. Then, the ping command was run continuously from one server to the default gateway.

3. The stacking cables were reconnected, one by one.
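The continuous ping in the second step works by watching for round-trip times to rise as each switch is reconnected. A minimal Python sketch of that comparison follows, assuming the ping output has been saved as text lines. The functions parse_rtts() and is_degraded(), and the fivefold threshold, are hypothetical choices for illustration, not part of the original procedure.

```python
import re
from statistics import median

# Round-trip time in ping replies: Linux prints "time=0.512 ms",
# Windows prints "time=1ms" or "time<1ms".
RTT_PATTERN = re.compile(r"time[=<]([\d.]+)\s*ms")


def parse_rtts(ping_lines):
    """Extract RTTs (in ms) from ping output lines; lines without a reply are skipped."""
    rtts = []
    for line in ping_lines:
        match = RTT_PATTERN.search(line)
        if match:
            rtts.append(float(match.group(1)))
    return rtts


def is_degraded(baseline_ms, current_ms, factor=5.0):
    """True if the median current RTT exceeds `factor` times the baseline median.

    `factor` is a hypothetical threshold; tune it for the network at hand.
    """
    if not baseline_ms:
        raise ValueError("baseline needs at least one RTT sample")
    if not current_ms:
        return True  # no replies at all counts as degraded
    return median(current_ms) > factor * median(baseline_ms)


baseline = parse_rtts([
    "64 bytes from 192.168.1.1: icmp_seq=1 ttl=64 time=0.512 ms",
    "64 bytes from 192.168.1.1: icmp_seq=2 ttl=64 time=0.498 ms",
])
after_stacking = parse_rtts([
    "64 bytes from 192.168.1.1: icmp_seq=3 ttl=64 time=35.2 ms",
    "64 bytes from 192.168.1.1: icmp_seq=4 ttl=64 time=41.0 ms",
])
print(is_degraded(baseline, after_stacking))  # True
```

Comparing medians rather than single replies keeps one slow reply from triggering a false alarm.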

When one particular switch was stacked, the ping responses started slowing down, so the faulty switch appeared to have been isolated quickly. To confirm the diagnosis, this switch was replaced with a spare one; unfortunately, the problem reappeared, so the fault was not with the switch itself. Troubleshooting continued:

4. All Ethernet cables were removed from the switch identified in Step 3.

5. The cables were plugged back in, one at a time, until the problem appeared.

6. The computer system/device connected through that last cable was thus isolated; it turned out to have a faulty Ethernet card!
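Steps 4 to 6 amount to a sequential search: reconnect one device at a time and stop at the first one that brings the problem back. The Python sketch below models that loop. The function isolate_culprit() and its problem_seen callback (which, in practice, stands in for watching the continuous ping or simply observing the network) are hypothetical names used only for illustration.

```python
from typing import Callable, Iterable, Optional, Sequence


def isolate_culprit(
    cables: Iterable[str],
    problem_seen: Callable[[Sequence[str]], bool],
) -> Optional[str]:
    """Reconnect cables one at a time; return the first after which the problem appears.

    `problem_seen` receives the cables connected so far and reports whether
    the slowdown is back. Returns None if every cable is reconnected without
    the problem reappearing.
    """
    connected = []
    for cable in cables:
        connected.append(cable)  # plug this cable back into the switch
        if problem_seen(tuple(connected)):
            return cable
    return None


# Hypothetical example: the device on port 7 has a faulty Ethernet card.
ports = [f"port-{n}" for n in range(1, 25)]
print(isolate_culprit(ports, lambda connected: "port-7" in connected))  # port-7
```

A linear search suits this case because the fault shows up as soon as the faulty device is connected, which is exactly what the one-cable-at-a-time procedure exploits.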

Scenario 2: Slow Internet speed

The set-up had 10 computer systems connected to the Internet through the GNU/Linux-based IPCop firewall over a DSL connection.

Discussions with the systems administrator revealed that the LAN speed was perfect but the Internet speed was very slow. A trial had already been conducted to bypass the firewall by connecting one laptop directly to the DSL connection, and the Internet speed available to that single laptop was satisfactory.

The discussions clearly revealed that the issue arose only when the office computer systems were trying to access the Internet. So, the following steps were taken to isolate the problem:

(a) The firewall was the first suspect: a firewall installed on a PC, and that too running GNU/Linux? It had to be the culprit! But bypassing it did not resolve the problem; the Internet speed continued to be slow.

(b) Next, the possibility of a virus was explored.

(c) To capture all the network traffic, the Ethernet switch was replaced with an Ethernet hub (remember that old device from the 1980s?), which repeats every frame to all its ports. Traffic was then captured using a system running Wireshark connected to one port of the hub.

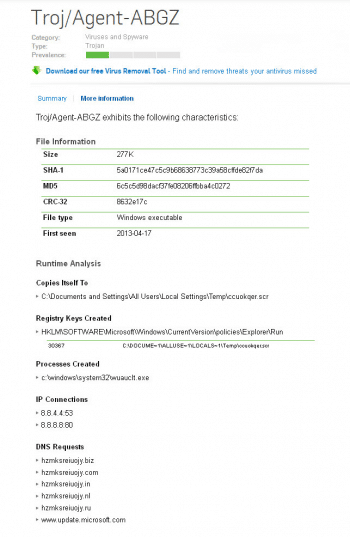

(d) This hit the jackpot (see Figure 1), revealing malware activity on various computer systems. (Figure 1 shows only one malware instance; there were many more, which unfortunately I could not capture.)

(e) Searching Google for these links revealed that they belonged to known malware (see Figure 2).

(f) Anti-virus and anti-spyware software were run on the systems to clear them of malware.

(g) The firewall blacklists were updated to block these links.

Thus, removing malware solved the issue.
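Spotting suspicious requests, as in step (d), can be partly automated. One way, assumed here rather than taken from the original investigation, is to export the source address and HTTP Host header of each request with tshark (for example, tshark -r capture.pcap -Y http.request -T fields -e ip.src -e http.host, which prints tab-separated fields) and then group and check the hosts in Python. The function names, addresses and blacklist contents below are hypothetical.

```python
from collections import Counter, defaultdict


def hosts_by_source(field_lines):
    """Count requested HTTP hosts per source IP from 'ip.src<TAB>http.host' lines."""
    requests = defaultdict(Counter)
    for line in field_lines:
        parts = line.rstrip("\n").split("\t")
        if len(parts) != 2 or not parts[1]:
            continue  # skip malformed lines or requests without a Host header
        src, host = parts
        requests[src][host] += 1
    return requests


def flag_blacklisted(requests, blacklist):
    """Map each source IP to the sorted blacklisted hosts it contacted."""
    flagged = {}
    for src, hosts in requests.items():
        bad = sorted(host for host in hosts if host in blacklist)
        if bad:
            flagged[src] = bad
    return flagged


# Hypothetical export and blacklist (example.* names are placeholders).
lines = [
    "192.168.1.21\twww.example.com",
    "192.168.1.34\tbad-tracker.example",
    "192.168.1.34\tbad-tracker.example",
]
print(flag_blacklisted(hosts_by_source(lines), {"bad-tracker.example"}))
# {'192.168.1.34': ['bad-tracker.example']}
```

Hosts flagged this way are candidates for the firewall blacklist in step (g), but each should still be verified, as in step (e), before it is blocked.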

Scenario 3: A slow network and systems disconnect from it intermittently

The reported problem was that computer systems connected to the network would intermittently disconnect from the LAN, and eventually the whole network would come to a halt.

The in-house administrator had been trying to diagnose the problem for several days, and discussions revealed several interesting facts. The installation had two 24-port Gigabit unmanaged Ethernet switches in two rooms, connected by a crossover cable, with 20 computer systems connected to each switch. LAN traffic was heavy, with large files frequently being copied or moved between systems. The problem was first observed after half the older computers had been replaced with new systems. The network cabling and Ethernet switches were not changed, or even touched, during the upgrade. Operating system security updates were applied to the new computer systems. Rebooting the Ethernet switches would restore the network, but the problem would reappear randomly.

The steps taken for troubleshooting were as follows:

- The first step was to check the operating systems, since the problem was reported only after the computers were changed. The latest motherboard drivers, particularly for the Network Interface Card (NIC), were downloaded and applied. Various forums carried complaints about similar problems with a specific model of NIC (the one on board the new systems) that supports EEE (Energy Efficient Ethernet, IEEE 802.3az): when it was connected to switches that do not support EEE, random network disconnections were reported. So EEE was disabled in the expectation that this would resolve the problem, but it did not.

- Anti-virus signatures on all the systems were updated and the systems were scanned. Viruses found on some of the systems were removed, but the problem reappeared.

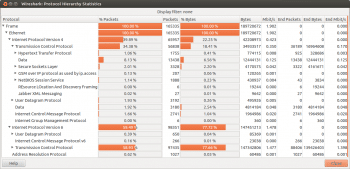

- Each switch was replaced with the hub in turn, and Wireshark was deployed to capture network traffic for analysis. To our great surprise, there was very heavy IPv6 traffic on the network, amounting to almost 77 per cent of the total, even though the network was configured for IPv4. It appeared that the problem had been located, so IPv6 was disabled on all the systems, in the belief that an unknown malware might be using IPv6 to spread. But, again, there was no luck; the problem persisted.

Wireshark Statistics menu: Let us take a short break here to understand an interesting Wireshark option, Protocol Hierarchy, available under the Statistics menu. It can be used to spot high IPv6 bandwidth utilisation in troubleshooting scenarios such as this one. Protocol Hierarchy breaks down the captured traffic as a tree, starting with Ethernet and IPv4/IPv6 and drilling down through TCP and UDP to the corresponding application protocols, such as HTTP, SSL and POP over TCP and DNS over UDP. For each protocol, it shows the percentage of bytes (and packets) relative to the total traffic. Look at Figure 3 for an interesting capture showing a very high proportion of IPv6 traffic. (It was later concluded that this was LAN file transfer traffic.)

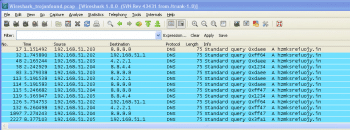
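The IPv4 versus IPv6 split that Protocol Hierarchy reports can also be approximated outside the GUI. tshark offers the same statistics with -q -z io,phs; alternatively, as in the sketch below, the EtherType and frame length of every packet can be exported (tshark -r capture.pcap -T fields -e eth.type -e frame.len) and summed in Python. The function name is hypothetical, and the sketch assumes untagged Ethernet II frames, so VLAN-tagged traffic is simply counted as "other".

```python
from collections import Counter

# EtherType values for the protocols of interest.
ETHERTYPE_NAMES = {0x0800: "IPv4", 0x86DD: "IPv6", 0x0806: "ARP"}


def byte_share_by_ethertype(field_lines):
    """Percentage of captured bytes per EtherType from 'eth.type<TAB>frame.len' lines."""
    totals = Counter()
    for line in field_lines:
        parts = line.strip().split("\t")
        if len(parts) != 2 or not parts[0]:
            continue  # frames without an Ethernet II type field are skipped
        eth_type = int(parts[0].split(",")[0], 16)  # accepts "0x86dd" or "0x000086dd"
        totals[ETHERTYPE_NAMES.get(eth_type, "other")] += int(parts[1])
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {name: 100.0 * count / grand_total for name, count in totals.items()}


print(byte_share_by_ethertype(["0x86dd\t750", "0x0800\t250"]))
# {'IPv6': 75.0, 'IPv4': 25.0}
```

Summing frame lengths per EtherType mirrors the byte-percentage column of Protocol Hierarchy at its top level; the GUI remains the easier way to drill further down into TCP and UDP.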

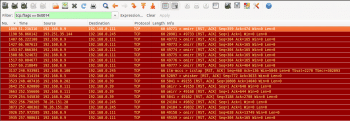

The traffic was further analysed to isolate TCP Keep-Alive, TCP Retransmission, RST/ACK and duplicate ACK (DUP ACK) packets, which generally signify problems. Systems generating these packets were treated as faulty, identified and disconnected from the network. They were also scanned for viruses, and the infected systems were removed. However, this did not help either, and troubleshooting had to continue.

Look carefully at Figure 4. To isolate packets with both the RST and ACK flags set (0x04 + 0x10 = 0x14), the display filter tcp.flags == 0x0014 was used.
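The value 0x0014 is simply the RST bit (0x04) combined with the ACK bit (0x10). The sketch below computes such values from flag names and also assembles a broader display filter from Wireshark's TCP expert-analysis fields (tcp.analysis.retransmission, tcp.analysis.duplicate_ack, tcp.analysis.keep_alive) plus tcp.flags.reset. The Python helper names are hypothetical; the strings they produce are meant to be pasted into Wireshark's display filter bar.

```python
# Bit values of the TCP flags in the low byte of the flags field.
TCP_FLAG_BITS = {
    "FIN": 0x01, "SYN": 0x02, "RST": 0x04, "PSH": 0x08,
    "ACK": 0x10, "URG": 0x20, "ECE": 0x40, "CWR": 0x80,
}


def flags_value(*names: str) -> int:
    """OR together the bits of the named TCP flags."""
    value = 0
    for name in names:
        value |= TCP_FLAG_BITS[name.upper()]
    return value


def exact_flags_filter(*names: str) -> str:
    """Display filter matching packets with exactly these flags set."""
    return f"tcp.flags == 0x{flags_value(*names):04x}"


# One filter for the packet types that commonly point to trouble.
PROBLEM_FILTER = " || ".join([
    "tcp.analysis.retransmission",
    "tcp.analysis.duplicate_ack",
    "tcp.analysis.keep_alive",
    "tcp.flags.reset == 1",
])

print(exact_flags_filter("RST", "ACK"))  # tcp.flags == 0x0014
print(PROBLEM_FILTER)
```

Note that tcp.flags == 0x0014 matches only packets with exactly RST and ACK set, whereas tcp.flags.reset == 1 catches every reset regardless of the other flags.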

This was really frustrating: more time was lost in trial and error, the problem remained unresolved, and the customer's anxiety grew day by day.

Going back to basics, it occurred to us that the issue could lie with the NIC drivers or the operating systems, primarily because the problem started only after the new computer systems were installed. So, a few old systems using the same NICs were checked. They revealed a driver version that differed both from the OS default driver and from the current driver on the vendor's website (which had been applied in the first step). Installing this older driver version on all the systems finally solved the issue.
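The decisive check here was comparing NIC driver versions across the old and new systems. Assuming the versions have already been collected into a simple host-to-version inventory (the collection step is not shown and depends on the OS), grouping and flagging them takes only a few lines. The host names, version strings and functions below are hypothetical.

```python
from collections import defaultdict


def group_hosts_by_driver(inventory):
    """Map each NIC driver version to the sorted hosts running it."""
    groups = defaultdict(list)
    for host, version in inventory.items():
        groups[version].append(host)
    return {version: sorted(hosts) for version, hosts in groups.items()}


def hosts_needing_change(inventory, known_good_version):
    """Hosts whose driver differs from the version known to work."""
    return sorted(host for host, version in inventory.items()
                  if version != known_good_version)


# Hypothetical inventory: old PCs run the working driver, new PCs do not.
inventory = {
    "old-pc-01": "1.2.0",
    "old-pc-02": "1.2.0",
    "new-pc-01": "2.0.5",
    "new-pc-02": "2.1.0",
}
print(group_hosts_by_driver(inventory))
print(hosts_needing_change(inventory, "1.2.0"))  # ['new-pc-01', 'new-pc-02']
```

Grouping by version first makes an odd one out, such as the older driver found on the old systems, easy to spot before deciding which version to standardise on.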

Looking back

Although packets indicating network issues were present in the capture, their share of the total traffic was far too low to have brought the network down completely. In hindsight, the Wireshark capture made it clear that the top down approach, starting at the operating system level and moving down towards the physical layer, should have been followed before removing supposedly faulty systems from the network.

The end user deserves appreciation for his patience and his confidence in the troubleshooter.

For troubleshooters, slow network issues are indeed challenging. Apart from resourceful troubleshooters with adequate tools, techniques, experience and insight, successful troubleshooting also requires a lot of patience from both the engineer and the customer!