The current cloud inventory for one of the SaaS applications at our firm is as follows:

- Web server: CentOS 6.4 + NGINX + MySQL + PHP + Drupal

- Mail server: CentOS 6.4 + Postfix + Dovecot + SquirrelMail

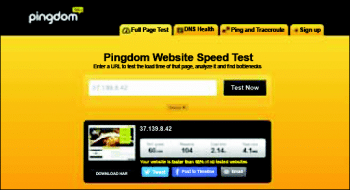

A quick test on Pingdom showed a load time of 3.92 seconds for a page size of 2.9MB with 105 requests.

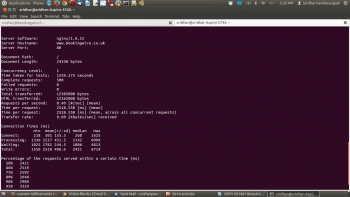

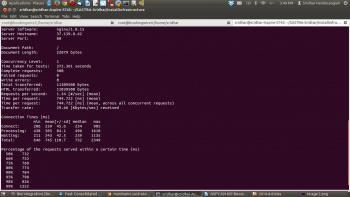

Tests using Apache Bench (ab -c1 -n500 http://www.bookingwire.co.uk/) yielded comparable figures: a mean response time of 2.52 seconds.
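To repeat this measurement before and after each change, it helps to pull the mean out of the ab report automatically. The helper below is only a sketch: the function name ab_mean_ms is our own, and it assumes the usual ab report layout, in which the first "Time per request" line carries the overall mean in milliseconds.

```shell
# Print the mean "Time per request" (in ms) from an Apache Bench report on stdin.
# ab prints two such lines; the one marked "across all concurrent requests" is skipped.
ab_mean_ms() {
    awk '/^Time per request:/ && !/across all concurrent requests/ { print $4; exit }'
}

# Usage (needs network access and the ab tool):
#   ab -c1 -n500 http://www.bookingwire.co.uk/ | ab_mean_ms
```

Saving the number from each run makes it easy to compare the site with and without Varnish in front of it.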

We wanted to improve the page load times by caching the content upstream, scaling the site to handle much greater http workloads, and implementing a failsafe mechanism.

The first step was to handle all incoming http requests from anonymous users that were loading our Web server. Since anonymous users are served content that seldom changes, we wanted to prevent these requests from reaching the Web server so that its resources would be available to handle the requests from authenticated users. Varnish was our first choice to handle this.

Our next concern was to find a mechanism to handle the SSL requests, mainly on the sign-up pages, where we had interfaces to PayPal. Our aim was to include a second Web server that handled a portion of the load, and we wanted to configure Varnish to distribute http traffic between these two servers using a round-robin mechanism. Subsequently, we planned on configuring Varnish in such a way that even if the Web servers were down, the system would continue to serve pages. During the course of this exercise we documented our experiences, and that's what you're reading about here.

A word about Varnish

Varnish is a Web application accelerator, or caching reverse proxy. It's installed in front of the Web server to handle HTTP requests, which speeds up the site significantly. In some cases, it can improve the performance of a site by 300 to 1000 times.

It does this by caching the Web pages: when visitors come to the site, Varnish serves the cached pages rather than requesting them from the Web server. This reduces the load on the Web server and improves the site's performance and scalability. It can also act as a failsafe if the Web server goes down, because Varnish will continue to serve the cached pages in the absence of the Web server.

With that said, let's begin by installing Varnish on a VPS and connecting it to a single NGINX Web server. Then let's add another NGINX Web server so that we can implement a failsafe mechanism. This will accomplish the performance goals that we stated. For the rest of the article, let's assume that you are using the CentOS 6.4 OS. However, we have provided information for Ubuntu users wherever we felt it was necessary.

Enable the required repositories

First enable the appropriate repositories. For CentOS, Varnish is available from the EPEL repository. Add this repository to your repos list, but before you do so, you'll need to import the GPG keys. So open a terminal and enter the following commands:

    [root@bookingwire sridhar]# wget https://fedoraproject.org/static/0608B895.txt
    [root@bookingwire sridhar]# mv 0608B895.txt /etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-6
    [root@bookingwire sridhar]# rpm --import /etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-6
    [root@bookingwire sridhar]# rpm -qa gpg*
    gpg-pubkey-c105b9de-4e0fd3a3

After importing the GPG keys you can enable the repository.

    [root@bookingwire sridhar]# wget http://dl.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-8.noarch.rpm
    [root@bookingwire sridhar]# rpm -Uhv epel-release-6*.rpm

To verify that the new repository has been added, run the following command and look for it in the output:

    [root@bookingwire sridhar]# yum repolist

If you happen to use an Ubuntu VPS, then you should use the following commands to enable the repositories:

    [root@bookingwire sridhar]# wget http://repo.varnish-cache.org/debian/GPG-key.txt
    [root@bookingwire sridhar]# apt-key add GPG-key.txt
    [root@bookingwire sridhar]# echo "deb http://repo.varnish-cache.org/ubuntu/ precise varnish-3.0" | sudo tee -a /etc/apt/sources.list
    [root@bookingwire sridhar]# sudo apt-get update

Installing Varnish

Once the repositories are enabled, we can install Varnish:

    [root@bookingwire sridhar]# yum -y install varnish

On Ubuntu, you should run the following command:

    [root@bookingwire sridhar]# sudo apt-get install varnish

After a few seconds, Varnish will be installed. Let's verify the installation before we go further. In the terminal, enter the following command; the output should contain the lines that follow it (we have reproduced only a few lines for the sake of clarity).

    [root@bookingwire sridhar]# yum info varnish
    Installed Packages
    Name    : varnish
    Arch    : i686
    Version : 3.0.5
    Release : 1.el6
    Size    : 1.1 M
    Repo    : installed

That looks good, so we can be sure that Varnish is installed. Now, let's configure Varnish to start on boot, so that it is started automatically if you have to restart your VPS.

    [root@bookingwire sridhar]# chkconfig --level 345 varnish on

Having done that, let's now start Varnish:

    [root@bookingwire sridhar]# /etc/init.d/varnish start

We have now installed Varnish and it's up and running. Let's configure it to cache the pages from our NGINX server.

Basic Varnish configuration

The Varnish configuration file is located at /etc/sysconfig/varnish on CentOS and at /etc/default/varnish on Ubuntu.

Open the file in your terminal using the nano or vim text editors. Varnish provides us three ways of configuring it. We prefer Option 3. So for our 2GB server, the configuration steps are as shown below (the lines with comments have been stripped off for the sake of clarity):

    NFILES=131072
    MEMLOCK=82000
    RELOAD_VCL=1
    VARNISH_VCL_CONF=/etc/varnish/default.vcl
    VARNISH_LISTEN_PORT=80,:443
    VARNISH_ADMIN_LISTEN_ADDRESS=127.0.0.1
    VARNISH_ADMIN_LISTEN_PORT=6082
    VARNISH_SECRET_FILE=/etc/varnish/secret
    VARNISH_MIN_THREADS=50
    VARNISH_MAX_THREADS=1000
    VARNISH_THREAD_TIMEOUT=120
    VARNISH_STORAGE_FILE=/var/lib/varnish/varnish_storage.bin
    VARNISH_STORAGE_SIZE=1G
    VARNISH_STORAGE="malloc,${VARNISH_STORAGE_SIZE}"
    VARNISH_TTL=120
    DAEMON_OPTS="-a ${VARNISH_LISTEN_ADDRESS}:${VARNISH_LISTEN_PORT} \
                 -f ${VARNISH_VCL_CONF} \
                 -T ${VARNISH_ADMIN_LISTEN_ADDRESS}:${VARNISH_ADMIN_LISTEN_PORT} \
                 -t ${VARNISH_TTL} \
                 -w ${VARNISH_MIN_THREADS},${VARNISH_MAX_THREADS},${VARNISH_THREAD_TIMEOUT} \
                 -u varnish -g varnish \
                 -p thread_pool_add_delay=2 \
                 -p thread_pools=2 \
                 -p thread_pool_min=400 \
                 -p thread_pool_max=4000 \
                 -p session_linger=50 \
                 -p sess_workspace=262144 \
                 -S ${VARNISH_SECRET_FILE} \
                 -s ${VARNISH_STORAGE}"

After variable substitution, the first line of DAEMON_OPTS reads -a :80,:443, which instructs Varnish to listen on ports 80 and 443. We want Varnish to receive all http and https requests.

To set the thread pools, first determine the number of CPU cores that your VPS uses and then update the directives.

    [root@bookingwire sridhar]# grep processor /proc/cpuinfo
    processor : 0
    processor : 1

This means you have two cores.

The formula to use is:

    -p thread_pools=<Number of CPU cores> \
    -p thread_pool_min=<800 / Number of CPU cores> \
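As a worked example of the formula, the sketch below builds the two flags from a core count. The function name thread_pool_opts is hypothetical, and 800 is simply the figure used in the formula above, not a value Varnish itself prescribes.

```shell
# Build the two thread-pool flags for a given number of CPU cores.
# 800 is the total minimum thread count used in the formula above.
thread_pool_opts() {
    cores=$1
    printf '%s\n' "-p thread_pools=$cores -p thread_pool_min=$((800 / cores))"
}

# On the VPS itself the core count can be read from /proc/cpuinfo:
#   thread_pool_opts "$(grep -c '^processor' /proc/cpuinfo)"
thread_pool_opts 2   # prints: -p thread_pools=2 -p thread_pool_min=400
```

For our two-core VPS this gives the same thread_pools=2 and thread_pool_min=400 values that appear in the configuration listed earlier.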

The -s ${VARNISH_STORAGE} translates to -s malloc,1G after variable substitution and is the most important directive. This allocates 1GB of RAM for exclusive use by Varnish. You could also specify -s file,/var/lib/varnish/varnish_storage.bin,10G which tells Varnish to use the file caching mechanism on the disk and that 10GB has been allocated to it. Our suggestion is that you should use the RAM.

Configure the default.vcl file

The default.vcl file is where you will make most of the configuration changes that tell Varnish about your Web servers, the assets that shouldn't be cached, and so on. Open the default.vcl file in your favourite editor:

    [root@bookingwire sridhar]# nano /etc/varnish/default.vcl

Since we expect to have two NGINX servers running our application, we want Varnish to distribute the http requests between these two servers. If, for any reason, one of the servers fails, then all requests should be routed to the healthy server. To do this, add the following to your default.vcl file:

    backend bw1 {
        .host = "146.185.129.131";
        .probe = {
            .url = "/google0ccdbf1e9571f6ef.html";
            .interval = 5s;
            .timeout = 1s;
            .window = 5;
            .threshold = 3;
        }
    }
    backend bw2 {
        .host = "37.139.24.12";
        .probe = {
            .url = "/google0ccdbf1e9571f6ef.html";
            .interval = 5s;
            .timeout = 1s;
            .window = 5;
            .threshold = 3;
        }
    }
    backend bw1ssl {
        .host = "146.185.129.131";
        .port = "443";
        .probe = {
            .url = "/google0ccdbf1e9571f6ef.html";
            .interval = 5s;
            .timeout = 1s;
            .window = 5;
            .threshold = 3;
        }
    }
    backend bw2ssl {
        .host = "37.139.24.12";
        .port = "443";
        .probe = {
            .url = "/google0ccdbf1e9571f6ef.html";
            .interval = 5s;
            .timeout = 1s;
            .window = 5;
            .threshold = 3;
        }
    }
    director default_director round-robin {
        { .backend = bw1; }
        { .backend = bw2; }
    }
    director ssl_director round-robin {
        { .backend = bw1ssl; }
        { .backend = bw2ssl; }
    }
    sub vcl_recv {
        if (server.port == 443) {
            set req.backend = ssl_director;
        } else {
            set req.backend = default_director;
        }
    }

You might have noticed that we have used public IP addresses, since we had not enabled private networking between our servers. You should define one set of backends for each type of traffic you want to handle. Hence, we have one set to handle http requests and another to handle https requests.
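The effect of a round-robin director can be pictured with a few lines of shell. This is only an illustrative model and not how Varnish is implemented: pick_backend is a hypothetical helper, and the caller is assumed to pass only the backends that are currently marked healthy.

```shell
# pick_backend <request number> <healthy backend> [<healthy backend> ...]
# Hypothetical helper: prints the backend that request N would be sent to.
pick_backend() {
    n=$1
    shift
    if [ $# -eq 0 ]; then
        echo "none"          # every backend is down
        return 1
    fi
    shift $(( n % $# ))      # rotate through the list of healthy backends
    echo "$1"
}

pick_backend 0 bw1 bw2   # bw1
pick_backend 1 bw1 bw2   # bw2
pick_backend 2 bw1 bw2   # bw1
pick_backend 3 bw2       # bw2 (bw1 has dropped out of the rotation)
```

Requests alternate between the two servers while both are healthy, and all of them go to the surviving server when one drops out.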

It's good practice to perform a health check to see if the NGINX Web servers are up. In our case, we kept it simple by checking whether the Google webmaster file was present in the document root. If it isn't present, Varnish will not include the Web server in the round-robin league and won't direct traffic to it.

    .probe = { .url = "/google0ccdbf1e9571f6ef.html";

The above directive checks for this file at each backend every five seconds; a backend counts as healthy as long as at least three of its last five probes succeeded (.window = 5, .threshold = 3). You can use this to take an NGINX server out intentionally, either to update the version of the application or to run scheduled maintenance checks. All you have to do is rename this file so that the check fails!
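The way the .window and .threshold settings interact is easier to see in a small model. The sketch below is an illustration only: backend_healthy is a hypothetical helper, and probe results are passed in by hand (oldest first, 1 for a successful probe and 0 for a failed one) instead of coming from real requests.

```shell
# backend_healthy <window> <threshold> <probe result>...
# Succeeds if at least <threshold> of the last <window> probes were OK.
backend_healthy() {
    window=$1
    threshold=$2
    shift 2
    # Drop results that have fallen out of the window.
    while [ $# -gt "$window" ]; do
        shift
    done
    good=0
    for result in "$@"; do
        if [ "$result" -eq 1 ]; then
            good=$((good + 1))
        fi
    done
    [ "$good" -ge "$threshold" ]
}

backend_healthy 5 3 1 1 0 0 1   && echo "healthy"   # 3 of the last 5 probes passed
backend_healthy 5 3 1 1 1 0 0 0 || echo "sick"      # only 2 of the last 5 passed
```

With a five-second interval, renaming the probe file takes a server out of rotation after three consecutive failures, that is, within roughly 15 seconds in this model.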

In spite of our best efforts to keep our servers sterile, there are a number of reasons that can cause a server to go down. Two weeks back, one of our servers went down, taking more than a dozen sites with it, because the master boot record of CentOS was corrupted. In such cases, Varnish can handle the incoming requests even if your Web server is down. The NGINX Web server sets an Expires header (HTTP 1.0) and a max-age value (HTTP 1.1) for each page that it serves. If set, max-age takes precedence over the Expires header. Varnish is designed to ask the backend Web servers for new content every time the content in its cache goes stale. However, in a scenario like the one we faced, it's impossible for Varnish to obtain fresh content. In this case, setting the grace period in the configuration file allows Varnish to serve stale content even if the Web server is down. To have Varnish serve the stale content, add the following lines to your default.vcl:

    sub vcl_recv {
        set req.grace = 6h;
        if (!req.backend.healthy) {
            unset req.http.Cookie;
        }
    }
    sub vcl_fetch {
        set beresp.grace = 6h;
    }

The if block tells Varnish to strip all cookies for an authenticated user and serve an anonymous version of the page if all the NGINX backends are down.
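The role of the grace period can be summarised as a small decision table. The sketch below is a deliberately simplified model, not Varnish's actual lookup logic (for instance, it ignores the case where another request is already fetching the same object). The function name cache_decision is hypothetical; the TTL of 120 seconds and the grace of 6 hours (21600 seconds) are the values used in this article.

```shell
# cache_decision <object age in s> <ttl in s> <grace in s> <backend healthy: 1 or 0>
cache_decision() {
    age=$1
    ttl=$2
    grace=$3
    healthy=$4
    if [ "$age" -le "$ttl" ]; then
        echo "serve cached copy"     # still fresh
    elif [ "$healthy" -eq 1 ]; then
        echo "fetch fresh copy"      # stale, but the backend can be asked
    elif [ "$age" -le $(( ttl + grace )) ]; then
        echo "serve stale copy"      # backend down, object still within grace
    else
        echo "error 503"             # backend down and grace used up
    fi
}

cache_decision 60    120 21600 1
cache_decision 300   120 21600 1
cache_decision 300   120 21600 0
cache_decision 30000 120 21600 0
```

The third case is the one that kept our pages available during the outage: the object is past its TTL, the backend is down, and the grace period lets Varnish keep serving it.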

Most browsers support compressed encodings but report this in different ways. NGINX sets the header Vary: Cookie, Accept-Encoding. If you don't handle this, Varnish will cache a separate copy of the same page for each variant of the Accept-Encoding header, thus wasting server resources. In our case, it would gobble up memory. So add the following commands to vcl_recv to have Varnish cache the content only once per encoding:

    if (req.http.Accept-Encoding) {
        if (req.http.Accept-Encoding ~ "gzip") {
            # If the browser supports it, we'll use gzip.
            set req.http.Accept-Encoding = "gzip";
        } else if (req.http.Accept-Encoding ~ "deflate") {
            # Next, try deflate if it is supported.
            set req.http.Accept-Encoding = "deflate";
        } else {
            # Unknown algorithm. Remove it and send unencoded.
            unset req.http.Accept-Encoding;
        }
    }
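To see why this normalisation saves memory, the following sketch applies the same three-way rule to a few header values of the kind different browsers might send. The function name normalize_accept_encoding and the sample header values are our own illustrations.

```shell
# Reduce an Accept-Encoding header value to "gzip", "deflate" or nothing,
# following the same order of preference as the VCL above.
normalize_accept_encoding() {
    case "$1" in
        *gzip*)    echo "gzip" ;;
        *deflate*) echo "deflate" ;;
        *)         echo "" ;;
    esac
}

for header in "gzip, deflate" "gzip,deflate,sdch" "deflate, gzip" "deflate" "identity"; do
    printf '%-20s -> [%s]\n' "$header" "$(normalize_accept_encoding "$header")"
done
```

The first three values all collapse to gzip, so Varnish stores one compressed copy of the page for them instead of three.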

Now, restart Varnish.

    [root@bookingwire sridhar]# service varnish restart

Additional configuration for content management systems, especially Drupal

A CMS like Drupal throws up additional challenges when configuring the VCL file. We'll need to include additional directives to handle the various quirks. You can modify the directives below to suit the CMS that you are using. If there are paths that you don't want cached for some reason, add the following commands to the vcl_recv section of your default.vcl file:

    if (req.url ~ "^/status\.php$" ||
        req.url ~ "^/update\.php$" ||
        req.url ~ "^/ooyala/ping$" ||
        req.url ~ "^/admin/build/features" ||
        req.url ~ "^/info/.*$" ||
        req.url ~ "^/flag/.*$" ||
        req.url ~ "^.*/ajax/.*$" ||
        req.url ~ "^.*/ahah/.*$") {
        return (pass);
    }

Varnish sends the length of the content (see the Content-Length header in the Varnish log output later in this article) so that browsers can display a progress bar. However, in cases where Varnish is unable to tell the browser the content length (streaming audio, for example), you will have to pipe the request directly to the Web server. To do this, add the following command to the vcl_recv section of your default.vcl:

    if (req.url ~ "^/content/music/$") {
        return (pipe);
    }

Drupal has certain files that shouldn't be accessible to the outside world, e.g., cron.php or install.php. However, you should be able to access these files from a set of IPs that your development team uses. At the top of default.vcl include the following, replacing the example address block with your own:

    acl internal {
        "192.168.1.0"/24;   # example range; replace with your own
    }

Now, to prevent the outside world from accessing these pages, we'll throw an error. So inside the vcl_recv function include the following:

    if (req.url ~ "^/(cron|install)\.php$" && !client.ip ~ internal) {
        error 404 "Page not found.";
    }

If you prefer to redirect to an error page, then use this instead:

    if (req.url ~ "^/(cron|install)\.php$" && !client.ip ~ internal) {
        set req.url = "/404";
    }

Our approach is to cache all assets like images, JavaScript and CSS for both anonymous and authenticated users. So include this snippet inside vcl_recv to unset the cookie set by Drupal for these assets:

    if (req.url ~ "(?i)\.(png|gif|jpeg|jpg|ico|swf|css|js|html|htm)(\?[a-z0-9]+)?$") {
        unset req.http.Cookie;
    }

Drupal throws up a challenge especially when you have enabled several contributed modules. These modules set cookies, thus preventing Varnish from caching assets. Google analytics, a very popular module, sets a cookie. To remove this, include the following in your default.vcl:

    set req.http.Cookie = regsuball(req.http.Cookie, "(^|;\s*)(__[a-z]+|has_js)=[^;]*", "");
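Before changing the cookie regex, it is worth trying it on a sample Cookie header outside Varnish. The sketch below applies an equivalent pattern with sed; the function name strip_tracking_cookies and the cookie values are made up for illustration, and [[:space:]] stands in for \s so that the pattern also works with sed implementations that lack \s.

```shell
# Remove Google Analytics style cookies (__utma, __utmz, ...) and has_js
# from a Cookie header value, mirroring the regsuball() call above.
strip_tracking_cookies() {
    printf '%s\n' "$1" | sed -E 's/(^|;[[:space:]]*)(__[a-z]+|has_js)=[^;]*//g'
}

strip_tracking_cookies "__utma=1.2.3; has_js=1; SESS123=abc"   # prints: ; SESS123=abc
```

Only the session cookie is left (with a leading separator that the substitution does not remove), while a header that contains nothing but tracking cookies comes out empty.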

If there are other modules that set JavaScript cookies, then Varnish will cease to cache those pages; in which case, you should track down the cookie and update the regex above to strip it.

Once you have done that, head to /admin/config/development/performance, enable the Page Cache setting and set a non-zero time for Expiration of cached pages.

Then update the settings.php with the following snippet by replacing the IP address with that of your machine running Varnish.

    $conf['reverse_proxy'] = TRUE;
    $conf['reverse_proxy_addresses'] = array('37.139.8.42');
    $conf['page_cache_invoke_hooks'] = FALSE;
    $conf['cache'] = 1;
    $conf['cache_lifetime'] = 0;
    $conf['page_cache_maximum_age'] = 21600;

You can also install the Drupal varnish module (http://www.drupal.org/project/varnish), which provides better integration with Varnish. If you do, include the following lines in your settings.php:

    $conf['cache_backends'] = array('sites/all/modules/varnish/varnish.cache.inc');
    $conf['cache_class_cache_page'] = 'VarnishCache';

Checking if Varnish is running and serving requests

Instead of logging to a normal log file, Varnish logs to a shared memory segment. Run varnishlog from the command line, access your IP address/URL from the browser and view the Varnish messages. It is not uncommon to see a 503 Service Unavailable message, which means that Varnish is unable to connect to NGINX. In that case, you will see a FetchError line in the log (only the relevant portion of the log is reproduced for clarity).

    [root@bookingwire sridhar]# varnishlog
    12 StatSess c 122.164.232.107 34869 0 1 0 0 0 0 0 0
    12 SessionOpen c 122.164.232.107 34870 :80
    12 ReqStart c 122.164.232.107 34870 1343640981
    12 RxRequest c GET
    12 RxURL c /
    12 RxProtocol c HTTP/1.1
    12 RxHeader c Host: 37.139.8.42
    12 RxHeader c User-Agent: Mozilla/5.0 (X11; Ubuntu; Linux i686; rv:27.0) Gecko/20100101 Firefox/27.0
    12 RxHeader c Accept: text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8
    12 RxHeader c Accept-Language: en-US,en;q=0.5
    12 RxHeader c Accept-Encoding: gzip, deflate
    12 RxHeader c Referer: http://37.139.8.42/
    12 RxHeader c Cookie: __zlcmid=OAdeVVXMB32GuW
    12 RxHeader c Connection: keep-alive
    12 FetchError c no backend connection
    12 VCL_call c error
    12 TxProtocol c HTTP/1.1
    12 TxStatus c 503
    12 TxResponse c Service Unavailable
    12 TxHeader c Server: Varnish
    12 TxHeader c Retry-After: 0
    12 TxHeader c Content-Type: text/html; charset=utf-8
    12 TxHeader c Content-Length: 686
    12 TxHeader c Date: Thu, 03 Apr 2014 09:08:16 GMT
    12 TxHeader c X-Varnish: 1343640981
    12 TxHeader c Age: 0
    12 TxHeader c Via: 1.1 varnish
    12 TxHeader c Connection: close
    12 Length c 686

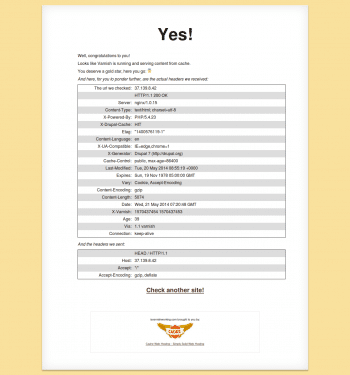

Resolve the error and you should have Varnish running. But that isn't enough: we should also check whether it's caching the pages. Fortunately, the folks at the following URL have made it simple for us.

Check if Varnish is serving pages

Visit http://www.isvarnishworking.com/, provide your URL/IP address and you should see your Gold Star! (See Figure 3.) If you don't, but instead see other messages, it means that Varnish is running but not caching.

Then you should look at your code and ensure that it sends the appropriate headers. If you are using a content management system, particularly Drupal, you can check the additional parameters in the VCL file and set them correctly. You have to enable caching in the performance page.
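The same check can be made from the command line by looking at the response headers. The sketch below relies on one assumption about Varnish 3 behaviour: the X-Varnish header carries a single transaction ID when the page was fetched from the backend, and two IDs when it was served from the cache. The function name varnish_cache_status is our own.

```shell
# Read HTTP response headers on stdin and report whether Varnish served a cached copy.
varnish_cache_status() {
    awk 'tolower($1) == "x-varnish:" { found = 1; if (NF >= 3) hit = 1 }
         END {
             if (!found)   print "NO-VARNISH"
             else if (hit) print "HIT"
             else          print "MISS"
         }'
}

# Usage (needs network access; request the page twice, since the first request fills the cache):
#   curl -sI http://37.139.8.42/ | varnish_cache_status
```

A page that keeps reporting MISS on repeated requests usually points to a cookie or a cache-control header that prevents caching.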

Running the tests

Pingdom tests showed an improved load time of 2.14 seconds. Note that the load time improved even though the page payload increased from 2.9MB to 4.1MB; the payload grew because we switched the site to a new theme.

Apache Bench reported a better figure as well: 744.722 ms.

Configuring client IP forwarding

Check the IP address for each request in the access logs of your Web servers. For NGINX, the access logs are available at /var/log/nginx and for Apache, they are available at /var/log/httpd or /var/log/apache2, depending on whether you are running CentOS or Ubuntu.

It's not surprising to see the same IP address (that of the Varnish machine) for each request. Such a configuration will throw all Web analytics out of gear. However, there is a way out. If you run NGINX, try out the following procedure. Determine the NGINX configuration that you currently run by executing the command below:

    [root@bookingwire sridhar]# nginx -V

Look for --with-http_realip_module in the output. If it is available, add the following to the http section of your NGINX configuration file. Remember to replace the IP address with that of your Varnish machine; if Varnish and NGINX run on the same machine, leave 127.0.0.1 as it is.

    set_real_ip_from 127.0.0.1;
    real_ip_header X-Forwarded-For;

Restart NGINX and check the logs once again. You will see the client IP addresses.
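A quick way to compare the logs before and after the change is to count requests per client address. The helper below is a sketch that assumes the default NGINX log layout, in which the client address is the first field of each line; the function name requests_per_ip and the log file name in the usage line are illustrative.

```shell
# Count requests per client address in an access log read from stdin,
# busiest address first.
requests_per_ip() {
    awk '{ count[$1]++ } END { for (ip in count) print count[ip], ip }' | sort -rn
}

# Usage:
#   requests_per_ip < /var/log/nginx/access.log
```

Before the change every request is attributed to the Varnish machine; afterwards the list should show the individual visitor addresses.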

If you are using Drupal then include the following line in settings.php:

    $conf['reverse_proxy_header'] = 'HTTP_X_FORWARDED_FOR';

Other Varnish tools

Varnish includes several tools to help you as an administrator.

varnishstat -1 -f n_lru_nuked: Shows the number of objects nuked (evicted) from the cache to make room for new ones.

varnishtop: Reads the logs and displays the most frequently accessed URLs. With a number of optional flags, it can display a lot more information.

varnishhist: Reads the shared memory logs and displays a histogram showing the distribution of the last N requests by their processing time.

varnishadm: A command line utility for administering Varnish.

varnishstat: Displays the statistics.

Dealing with SSL: SSL-offloader, SSL-accelerator and SSL-terminator

SSL termination is probably the most misunderstood term in the whole mix. The mechanism of SSL termination is employed in situations where the Web traffic is heavy. Administrators usually have a proxy to handle SSL requests before they hit Varnish. The SSL requests are decrypted and the unencrypted requests are passed to the Web servers. This is employed to reduce the load on the Web servers by moving the decryption and other cryptographic processing upstream.

Since Varnish by itself does not process or understand SSL, administrators employ additional mechanisms to terminate SSL requests before they reach Varnish. Pound (http://www.apsis.ch/pound) and Stud (https://github.com/bumptech/stud) are reverse proxies that handle SSL termination. Stunnel (https://www.stunnel.org/) is a program that acts as a wrapper that can be deployed in front of Varnish. Alternatively, you could also use another NGINX in front of Varnish to terminate SSL.

However, in our case, since only the sign-in pages required SSL connections, we let Varnish pass all SSL requests to our backend Web server.

Additional repositories

There are other repositories from where you can get the latest release of Varnish:

    wget repo.varnish-cache.org/redhat/varnish-3.0/el6/noarch/varnish-release/varnish-release-3.0-1.el6.noarch.rpm
    rpm --nosignature -i varnish-release-3.0-1.el6.noarch.rpm

If you have both the EPEL repo and the Varnish cache repo enabled, install Varnish from a specific repository by naming it:

    yum install varnish --enablerepo=epel
    yum install varnish --enablerepo=varnish-3.0

Our experience has been that Varnish reduces the number of requests sent to the NGINX server by caching assets, thus improving page response times. It also acts as a failover mechanism if the Web server fails.

We had over 55 JavaScript files (two as part of the theme and the others as part of the modules) in Drupal, and we aggregated them by setting the flag on the Performance page. We found a 50 per cent drop in the number of requests; however, some of the JavaScript files were not loaded on a few pages, so we had to disable the aggregation. This is something we are investigating. Our recommendation is not to enable JavaScript aggregation in your Drupal CMS. Instead, use the Varnish module (https://drupal.org/project/varnish).

The module allows you to set long object lifetimes (Drupal doesn't set them beyond 24 hours), and uses Drupal's existing cache expiration logic to dynamically purge Varnish when things change.

You can scale this architecture to handle higher loads either vertically or horizontally. For vertical scaling, resize your VPS to include additional memory and make that available to Varnish using the -s directive.

To scale horizontally, i.e., to distribute the requests between several machines, you could add additional Web servers and update the round robin directives in the VCL file.

You can take it a bit further by including HAProxy right upstream and have HAProxy route requests to Varnish, which then serves the content or passes it downstream to NGINX.

To remove a Web server from the round robin league, you can improve upon the example that we have mentioned by writing a small PHP snippet to automatically shut down or exit() if some checks fail.
