In the previous article, we discussed how, as data volumes grow towards the peta-scale and beyond, most traditional databases find it difficult to scale to the needs of cloud computing and Big Data. While Big Data drives the need for databases to handle huge volumes of data, cloud computing requires dynamic scalability.

Dynamic scalability

Dynamic scalability, at first glance, appears simple to achieve in databases with the “Shared Nothing” architecture. This architecture splits the database into multiple partitions, with one partition per server. Therefore, it should be possible to dynamically scale by adding another server and repartitioning the database across all servers. However, database partitioning is an important factor in the performance of database access.

Database queries frequently access related sets of information. If a frequently accessed set of related data is split across multiple servers, a query must fetch data from several partitions before it can return results, a process known as data shipping. This can have a large adverse impact on query access times.

Data partitioning needs to take data access patterns into account in order to reduce data shipping costs, so a new server cannot simply be added and the data redistributed blindly. Hence, traditional “Shared Nothing” relational databases typically find it difficult to achieve dynamic scalability.
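To make the cost of repartitioning concrete, here is a minimal Python sketch. It assumes simple hash-modulo placement, which is only one of several possible partitioning schemes and is not taken from any particular database; the function names and key formats are hypothetical.

```python
import hashlib

def partition_of(key: str, num_servers: int) -> int:
    """Map a key to a server using a stable hash and modulo placement."""
    digest = hashlib.md5(key.encode("utf-8")).hexdigest()
    return int(digest, 16) % num_servers

def moved_fraction(keys, old_servers: int, new_servers: int) -> float:
    """Fraction of keys whose home server changes after repartitioning."""
    moved = sum(
        1 for k in keys
        if partition_of(k, old_servers) != partition_of(k, new_servers)
    )
    return moved / len(keys)

def servers_touched(keys, num_servers: int) -> int:
    """Number of distinct servers a query must contact to read `keys`."""
    return len({partition_of(k, num_servers) for k in keys})

if __name__ == "__main__":
    keys = [f"customer-{i}" for i in range(10_000)]
    print("moved when growing 4 -> 5 servers:", moved_fraction(keys, 4, 5))
    order_rows = [f"order-42-item-{i}" for i in range(20)]
    print("servers touched by one order:", servers_touched(order_rows, 4))
```

With modulo placement, growing from four to five servers relocates most of the keys, and the rows of a single order typically end up spread over several servers, so a query on that order pays data shipping costs. Placing rows by order id instead would keep them together, which is the kind of access-pattern awareness described above.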

The inability of the traditional RDBMS to scale dynamically led to the NoSQL movement and the development of special-purpose data-stores (since the term database is typically used to represent an ACID-compliant traditional database, we use the term data-store for the NoSQL databases). These are persistent data stores, typically built as replicated data tables, which trade off strict ACID semantics for improved scalability.

These systems are characterised by eventual consistency (which we discussed in an earlier article) instead of the strict ACID consistency of RDBMSs. While NoSQL databases such as BigTable, Cassandra or SimpleDB have shown excellent performance, it is not yet clear whether they can scale to the demands of the next decade’s peta-scale computing.

Big data challenges

Over the past few decades, there have been two major camps of database technology. The first one is that of OnLine Transaction Processing (OLTP) workloads, and the second that of OnLine Analytical Processing (OLAP) workloads.

An OLTP workload typically consists of a number of concurrent transactions that perform insertions, deletions and updates of the data stored in the database. OLTP workloads are both read- and write-intensive. Popular examples that we encounter in everyday life are bank transactions (deposits and withdrawals) and credit-card transactions.

OLAP workloads, on the other hand, typically perform complicated and compute-intensive analysis on the data stored in the database. For example, the CEO of a supermarket chain may want to know the total volume of sales from each store over the Christmas holiday period. This requires OLAP query processing.

OLAP queries are typically read-intensive. An OLTP transaction typically accesses one or a few records, but it accesses all fields of the accessed records. On the other hand, OLAP queries access a large number of records of the database, but only access one, or a very few, of the fields of each record.

The differences in the very nature of OLTP and OLAP workloads demand certain design differences in the databases for each of these workloads. An example of these differences is the row-oriented record layout of Row-Store Databases, versus the columnar layout of Column-Store Databases.

In row stores, all the fields of a record are stored contiguously, with one record completely laid out before the next record starts. In columnar stores, all the values of a column are stored contiguously, with one column completely laid out before the next column starts.

Recall that OLTP typically operates on one or a very small number of records, and hence benefits from the row layout. On the other hand, OLAP workloads access a large number of records, but only one or a small number of columns in each record. Hence they benefit from the columnar layout. With a column-store architecture, a DBMS needs to read only the values of the columns required for processing a given query, and can avoid bringing into memory fields that the query does not need.
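The two layouts can be compared in a small Python sketch. The three-field sales record (store, item, amount) and all function names are made up for illustration; real stores work on disk pages and compressed blocks rather than Python lists.

```python
# Three sales records with hypothetical fields: store, item, amount.
records = [
    ("Bangalore", "milk", 40),
    ("Chennai", "bread", 25),
    ("Bangalore", "rice", 60),
]

# Row layout: all fields of one record sit next to each other.
row_store = [field for record in records for field in record]

# Column layout: all values of one field sit next to each other.
column_store = {
    "store": [r[0] for r in records],
    "item": [r[1] for r in records],
    "amount": [r[2] for r in records],
}

def total_amount_row(store, width=3, amount_index=2):
    """OLAP-style sum over the row layout: hops across every record.
    On disk, each hop would drag the record's other fields in as well."""
    return sum(store[i + amount_index] for i in range(0, len(store), width))

def total_amount_column(store):
    """The same sum over the column layout: reads only the 'amount' column."""
    return sum(store["amount"])

def fetch_record_row(store, n, width=3):
    """OLTP-style lookup of record n: one contiguous slice in the row layout."""
    return tuple(store[n * width:(n + 1) * width])

def fetch_record_column(store, n):
    """The same lookup in the column layout: one access per column."""
    return (store["store"][n], store["item"][n], store["amount"][n])
```

Both layouts return the same answers; what differs is how much unrelated data each access pattern has to pass over. The aggregate is a single sequential scan in the column layout, while the single-record lookup is a single contiguous read in the row layout.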

In warehouse environments, where typical queries involve aggregates performed over large numbers of data items, a column store has a sizeable performance advantage over a row store. A number of popular column-oriented databases have been developed, such as Vertica and MonetDB.

As mentioned earlier, OLAP is read-intensive; the database performance needs to be optimised for read operations, but at the same time, it needs to support database updates while maintaining ACID consistency. Some modern databases try to optimise for both, by providing both read- and write-optimised stores.

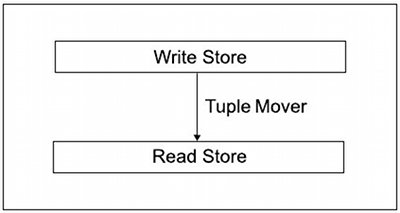

C-Store is the academic project on which the popular database analytics product, Vertica, is based. C-Store provides both a read-optimised column store and an update/insert-oriented writeable store, connected by a tuple mover.

C-Store supports a small Writeable Store (WS) component, which provides extremely fast insert and update operations. It also supports a large Read-optimised Store (RS), which is well optimised for query operations. The RS supports only a very restricted form of insert, namely the batch movement of records from WS to RS, a task that is performed by the tuple mover.
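The division of labour between WS, RS and the tuple mover can be sketched as a toy Python class. This is not C-Store's actual design or API: the class and method names are hypothetical, the RS here is a plain dictionary of lists rather than sorted, compressed columns, and the sketch assumes that a query combines what it finds in both stores.

```python
class TinyHybridStore:
    """Toy model: a small writeable store (WS) plus a read-optimised store (RS)."""

    def __init__(self, columns):
        self.columns = list(columns)
        self.ws = []                                   # row-wise, cheap to append to
        self.rs = {name: [] for name in self.columns}  # column-wise, cheap to scan

    def insert(self, record: dict) -> None:
        """Individual inserts go only to WS."""
        self.ws.append(tuple(record[name] for name in self.columns))

    def move_tuples(self) -> int:
        """Tuple mover: batch-move everything in WS into RS; returns rows moved."""
        moved = len(self.ws)
        for row in self.ws:
            for name, value in zip(self.columns, row):
                self.rs[name].append(value)
        self.ws.clear()
        return moved

    def column_sum(self, name: str):
        """Assumed query path: scan the RS column, then add the pending WS rows."""
        index = self.columns.index(name)
        return sum(self.rs[name]) + sum(row[index] for row in self.ws)


if __name__ == "__main__":
    store = TinyHybridStore(["store", "amount"])
    store.insert({"store": "Bangalore", "amount": 40})
    store.insert({"store": "Chennai", "amount": 25})
    print(store.column_sum("amount"))  # answered from WS only
    store.move_tuples()
    print(store.column_sum("amount"))  # same answer, now from RS
```

The point of the split is that the write path never has to touch the column layout one record at a time; the only way data reaches RS is through the batch move.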

So far, the buzz in the database industry has been about “Big Data”, which denotes the huge volumes of data expected to be generated by peta-scale computing. However, it is not just the data that matters. After all, DBMS and data analytics are not just about raw data; they are about converting data into information, which is then converted by analytics tools into business insights. Therefore, the term “Big Data” implicitly covers the following requirements as well:

- Any future database system should be able to handle huge volumes of data (as denoted by “Big Data” needs).

- They should be able to process and query the data at great speeds (hence the need for fast data). This requirement brings forth the need for complex programming models that can query and process large data, such as Map-Reduce.

- They should be able to analyse the data and convert it into business intelligence using complex analytics (hence the need for deep analytics).

Therefore the challenge for future database systems is not just about big data, but about handling big data that requires fast query responses and deep analytics.
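As an illustration of the Map-Reduce programming model mentioned in the list above, here is a single-process Python sketch that computes sales per store. It only mimics the shape of a job (map, shuffle, reduce); it is not the API of Hadoop or of any other framework, and the record fields and function names are hypothetical.

```python
from collections import defaultdict

def map_phase(record):
    """Emit (key, value) pairs; here, one (store, amount) pair per sale."""
    yield record["store"], record["amount"]

def shuffle(pairs):
    """Group all emitted values by key, as a framework would between phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(key, values):
    """Combine all the values for one key; here, a sum of sales."""
    return key, sum(values)

def run_job(records):
    """Run map over every record, shuffle, then reduce each group."""
    pairs = (pair for record in records for pair in map_phase(record))
    return dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())

if __name__ == "__main__":
    sales = [
        {"store": "Bangalore", "amount": 40},
        {"store": "Chennai", "amount": 25},
        {"store": "Bangalore", "amount": 60},
    ]
    print(run_job(sales))
```

In a real deployment, the map and reduce functions run on many machines at once and the shuffle moves data across the network; the programmer still writes only the two small functions.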

Taking advantage of emerging hardware

It is not only the database industry that is undergoing many changes to address the twin challenges of big data and cloud computing. The hardware industry is also at an inflection point today, as we discuss below.

In 1965, Dr Gordon Moore, who later co-founded Intel, observed that the transistor density of semiconductor chips was doubling roughly every year; in 1975 he revised this to a doubling about every two years. This is popularly referred to as Moore’s Law, and is often quoted as a doubling every 18 to 24 months. For many decades, this exponential growth in the number of transistors on the chip translated roughly into improvements in processor performance. Processor performance, more specifically single-thread performance, doubled about every 18 months, thereby allowing software developers to deliver increasingly complex functionality and greater performance without having to rewrite their code.

Though Moore’s Law itself continues to hold good even now, two factors have forced chip manufacturers to move from traditional single-core processor designs to multi-core designs in order to improve overall system performance. The first is the diminishing returns from hardware techniques for extracting instruction-level parallelism (ILP). The second is the limit that power consumption and heat dissipation place on increasing clock frequencies.

With multiple cores on a single chip executing simultaneously, it is possible to meet the throughput demands of an application while keeping the processor clock frequencies moderate, to contain power consumption and the heat generated. So, what do multi-core processors mean to future database systems?

Multi-core systems require database systems that can extract greater parallelism, both within a single query (intra-query) and across concurrent queries (inter-query). This calls for Massively Parallel Processing (MPP) databases, which are built on “Shared Nothing” architectures.
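Intra-query parallelism over shared-nothing partitions can be sketched in a few lines of Python: the same operator runs on every partition independently, and only the small partial results are merged. The function names are hypothetical, and threads are used purely to show the structure; in CPython they do not give real CPU parallelism for this kind of work, which an MPP database would obtain from separate cores or nodes.

```python
from concurrent.futures import ThreadPoolExecutor

def partial_sum(partition):
    """Work done locally on one partition; no rows leave the partition."""
    return sum(partition)

def parallel_total(partitions, workers=4):
    """Run the same operator on every partition, then merge the partial results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(partial_sum, partitions))

if __name__ == "__main__":
    # Four partitions of a hypothetical 'amount' column.
    partitions = [list(range(start, start + 250)) for start in range(0, 1000, 250)]
    print(parallel_total(partitions))
```

Inter-query parallelism is the complementary case: several independent queries are submitted to the pool at the same time, each possibly fanning out over the partitions in this way.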

While parallel databases are known to scale to hundreds of nodes, they have not been shown to scale to thousands of nodes and beyond. On the other hand, non-traditional database architectures, like data stores supporting Map-Reduce, have been scaled to thousands of nodes with extremely high availability and fault resiliency.

Hence, the question that needs to be answered is: Are massively parallel databases the right solution for tomorrow’s multi-core era, or are Map-Reduce data stores?

A detailed comparison of the relative merits of these two systems appears in the paper, “A Comparison of Approaches to Large-Scale Data Analysis” [PDF].

Current state-of-the-art approaches also combine massively parallel databases with the Hadoop architecture, as in HadoopDB, which is described in the paper, “HadoopDB: An Architectural Hybrid of MapReduce and DBMS Technologies for Analytical Workloads” [PDF].

Another interesting direction for the future of database technology is to take advantage of the emerging GPU Many-Core architectures. There has been work on off-loading many of the data query operators to GPU architectures. Techniques for mapping many database operations to GPUs are discussed in the paper, “Fast Computation of Database Operations using Graphics Processors” [PDF].

Perhaps the most important hardware innovation for future databases is the introduction of Non-Volatile RAM (NVRAM). Databases have traditionally used a buffer pool in memory to speed up database operations, while also maintaining the ACID properties.

The introduction of NVRAM takes away the need for the buffer pool, and requires a database redesign. Current databases have been designed to minimise disk reads, which are much slower than main-memory accesses. This will change drastically with NVRAM, since RAM is going to be persistent. Therefore, newer database systems explicitly designed for NVRAM are the need of the day.

While we have been discussing the challenges for future databases, I would like to bring to the reader’s attention an informative blog on state-of-the-art database design, dbmsmusings.blogspot.com, which is maintained by Prof Daniel Abadi, a pioneer in modern database design. His insightful articles on database research are a must-read for database programmers.