How many times have you found yourself in a situation where your Web application is not performing well? Or, should I say, the database you used isn’t performing well? How often have you discovered that the performance issues arise only in the production environment, and you never experience them in the development environment? Or that the query on a good-looking, well-indexed table is taking hours to complete? This article will help you understand what generally goes wrong, and how to avoid many common and not-so-common traps.

Some common fallacies and problems

Do not use InnoDB if you never use transactions on the table in question. InnoDB provides full ACID compliance — but that comes at a cost. Use MyISAM if transactions aren’t needed. Do not use tables of mixed types in a transaction. In other words, in a transaction, if one or more tables uses InnoDB, and other (one or more) tables use MyISAM, the entire subject is nullified.

In case of failure of the transaction, only the ones with transactions will be brought back to original state — the rest will have committed data, which is, finally, a highly inconsistent state. MySQL issues warning messages in such cases, which are mostly ignored by people.

Rule No. 2

Do not use COUNT(*) to find out the total number of rows in a table that uses a storage engine that supports transactions (for example, InnoDB).

For years, programmers didn’t notice that the default storage engine in MySQL is MyISAM, which doesn’t support transactions. Issuing a COUNT(*) on InnoDB is extremely expensive, and on a working (live/production class) database, will most likely give you an approximate value. No application should be designed to depend on a COUNT(*) result.

A typical example is finding the next free number to be used as a unique identifier. This is just bad application design. There are much more elegant techniques that can be implemented to achieve this. Should you ever need to know the exact number of rows in a table, think of an alternate way to do so. If you can’t find any solution, remember that COUNT(*), in the case of InnoDB on a production database, may not give you the exact value since some transactions may be running at that point of time. Having said that, the result of COUNT(*), if put to some serious use, will anyway lead to bugs.

There are many known instances where applications, for whatever reasons, have been designed with MyISAM tables, with heavy usage of COUNT(*). With the reputation of not being a highly fault-tolerant storage engine, people tended to change the storage engine from MyISAM to InnoDB on running databases. The result is total disaster in terms of performance, and often leads to nasty bugs.

There are infinite forums and comparison articles on the Internet, where PostgreSQL and MySQL are compared. A common, childish, comment that’s made is that COUNT(*) in MySQL gets results much quicker compared to PostgreSQL — and hence, MySQL is faster. MySQL may or may not be faster — but this observation is absolutely misleading. Change the table’s storage engine to InnoDB, and you will find MySQL taking the same amount of time as PostgreSQL.

Rule No. 3

If there is a full-table scan, poor performance will soon result. This is the fundamental evil in the vast majority of cases when it comes to queries with abysmal performance. You will find people suggesting you reindex your tables to solve such problems, but unfortunately, that won’t work.

Understand what a full-table scan entails. A table-scan occurs when, for any reason, MySQL has to scan all the records (well, almost all) in a table, to complete a query. This definition holds true in case of any relational database, not only MySQL. The common wisdom is to index tables to solve the problem of table scans and consequent slow queries. However, this doesn’t hold true in all cases; we will look at that later. Attacking table-scans and related problems will remain the prime focus of this and forthcoming articles.

Rule No. 4

If you have not tested your application/database with thousands and millions of records, rest assured that you will have a rude awakening some day, being called upon to solve the problem of poorly performing queries. No matter how much care you take while writing queries, something or the other is left in bad shape — call it a bug. This isn’t the real problem. The problem is getting to know about it when the application has gone live.

Any seasoned programmer with intense experience of algorithms will tell you why test/sample data is so important. A poor algorithm may appear to perform superbly with an insignificant amount of data. Samples running on huge datasets are the real test of any algorithm. This holds equally true in case of database queries.

Rule No. 5

Make EXPLAIN your best friend. Use it every now and then. This is an excellent tool to see what may go wrong.

A clichéd scenario

Here is a simple table to take this discussion forward. Create a table with the following script:

CREATE TABLE 'student_master' (

'student_id' INT(10) NOT NULL DEFAULT '0',

'full_name' VARCHAR(100) NOT NULL,

'email_address' VARCHAR(100) NOT NULL,

'password' VARCHAR(100) NOT NULL,

'deleted' TINYINT(4) NOT NULL,

PRIMARY KEY ('student_id')

)

COLLATE='utf8_general_ci'

ENGINE=InnoDB

ROW_FORMAT=DEFAULT

This table has five columns: student_id, full_name, email_address, password and deleted. The last column, deleted, is used as a Boolean flag, with the values 0 or 1 to indicate if an account is deleted. Populate this table with 100 records.

The design of this table seems quite sufficient. We have a primary key on student_id, which may also act as the foreign key for other tables which we will create later. Now let’s discuss some common scenarios. As we have the email address and password in this table, let’s create the SQL query to validate the login. Couldn’t be simpler, right? Let’s see…

SELECT COUNT (*) FROM student_master WHERE email_address = 'whatever' AND password = 'whatever' AND deleted = 0

Ah! Common wisdom! Avoid using COUNT (*), it kills the database. Let’s rewrite the following instead:

SELECT student_id FROM student_master WHERE email_address = 'whatever' AND password = 'whatever' AND deleted = 0

Brilliant! We got rid of COUNT (*) in the second query. Does it help? Let’s take a moment to analyse the two queries.

In the first one, we are asking MySQL to find the rows where email_address is equal to whatever, and password is equal to whatever, and return the number of such rows. In the second query, we are asking for almost the same thing, but instead of asking for the number of rows found, we ask for the value of the column student_id for all rows that match the criteria. As far as basic judgement goes, the second query appears more expensive.

The fact of the matter is that both queries are almost equally expensive, and both are database-killers. Why? Knowingly or not, we are causing a table scan in both queries. Refer to Rule No. 3; a table scan is whenever all the rows of the table are scanned. The WHERE email_address = '<user supplied>' AND password = '<user supplied>' AND deleted = 0 part of the queries tell MySQL to check the values of the three columns in question (email_address, password, and deleted).

Is this going to cause a table scan? Yes, sir! Let’s see how. Execute the following query:

EXPLAIN SELECT student_id FROM student_master WHERE email_address = 'whatever' AND password = 'whatever' AND deleted = 0

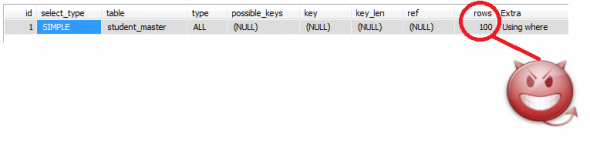

Look at the output; pay attention to the second-last column, titled rows. It shows 100 in the first row — the number of rows that MySQL guesses it will have to scan, in order to evaluate the result of this query. Remember that we have 100 rows in this table. This is the full-table scan devil that we need to handle. I seriously suggest all readers refer to the MySQL Manual to learn how to use EXPLAIN and EXPLAIN EXTENDED. Ignore other columns for the moment; we will shortly discuss their meaning and importance.

This result is a clear indication of a table scan. To overcome this, we need to add indexes.

Rule No. 6

Do not add indexes as a “solution to everything mankind may face”. Analyse the nature of the queries that are performed on a table, and index accordingly. Badly created indexes are of no use; in fact, they cause serious overhead, leading to slow insertions and updates of data in the table.

In the above queries, we asked MySQL to check the values of three columns. For the sake of this discussion, let’s create an index on the column email_address, with the following query:

ALTER TABLE `student_master` ADD INDEX `LoginValidationIndex` (`email_address`)

Once again, run the query given below:

EXPLAIN SELECT student_id FROM student_master WHERE email_address = 'whatever' AND password = 'whatever' AND deleted = 0

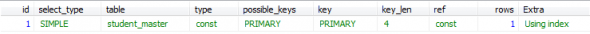

Notice the value in the column row — it now shows 1, where previously it was 100. This means that now MySQL will have to scan only 1 row in order to evaluate the result of this query. How did that happen?

The answer is, the index that we created. This tells MySQL to store/sort the data in a manner such that searching records on the basis of email_address becomes very, very fast. But we’re searching based on values of other columns too; why isn’t that causing a table scan?

That’s because MySQL is smart enough to find out if any column in the WHERE clause is indexed, and use that index as the first filter; only the set of records that’s left after this filter is applied has the remaining criteria (the remaining parts of the WHERE clause) applied to them. (Like for email_address, if the values in the indexed column are unique in the table, we are left with only one row. If the field could have duplicate values — for example, a person’s birth-date — then there could be multiple records left after the first filter. Still, this is immensely better than a full-table scan!)

Another critical and widely-held misconception is that the order of the columns in the WHERE clause plays some role in the performance of a query. The fact is that if the index(s) are properly used, the order of the columns doesn’t make any difference.

If you want to verify this, try changing the order in which the columns are mentioned, from: email_address = 'whatever' AND password = 'whatever' AND deleted = 0 to password = 'whatever' AND deleted = 0 AND email_address = 'whatever' or even deleted = 0 AND password = 'whatever' AND email_address = 'whatever'.

Run EXPLAIN on the queries with the changed column order, and you will find you get the same results — because MySQL applies the same query optimisation using the index for email_address.

Making it a bit more interesting

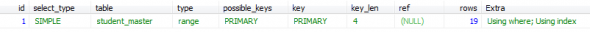

Execute the following EXPLAIN query; you can see the results in Figure 3:

Explain query no.1

EXPLAIN SELECT student_id FROM student_master WHERE student_id = 1

The value in the type column of the results is const. The following information about const is copied from the MySQL Reference Manual for version 5.1:

The table has at most one matching row, which is read at the start of the query. Because there is only one row, values from the column in this row can be regarded as constants by the rest of the optimiser. const tables are very fast because they are read only once. const is used when you compare all parts of a PRIMARY KEY or UNIQUE index to constant values.

This is fairly descriptive and easily understood; we are comparing the value of the primary key column with a single value. The value of the rows column is 1, which is always a very good sign.

Now, run the next EXPLAIN query (No. 2). Before you view the results (also seen in Figure 4), do a bit of thinking, and guess what they could be:

Explain query no.2

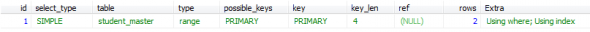

EXPLAIN SELECT student_id FROM student_master WHERE student_id = 1 OR student_id = 2

The value in the type column indicates range. Once again, I quote from the MySQL Reference Manual:

Only rows that are in a given range are retrieved, using an index to select the rows. The key column in the output row indicates which index is used. The key_len contains the longest key part that was used. The ref column is NULL for this type. range can be used when a key column is compared to a constant using any of the =, <>, >, >=, <, <=, IS NULL, <=>, BETWEEN, or IN() operators.

This time, the type isn’t const because we are comparing the primary key with two values. MySQL identifies this, and retrieves rows whose primary key value is within the range of 1 and 2. Similarly, EXPLAIN queries 3 and 4 (results shown in Figures 5 and 6) show the type as range.

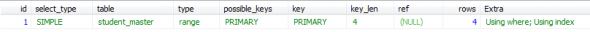

Explain query no.3

EXPLAIN SELECT student_id FROM student_master WHERE student_id = 1 OR student_id = 2 OR student_id = 19 OR student_id = 20

Explain query no.4

EXPLAIN SELECT student_id FROM student_master WHERE student_id >= 1 AND student_id <= 20

Now let’s make EXPLAIN query No 5 (see Figure 7) a little more complicated:

Explain query no.5

EXPLAIN SELECT student_id FROM student_master WHERE student_id IN (1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 20)

The type is range, and rows indicates 19. This is fairly understandable. We have mentioned 19 values in the list — 1 to 18, and 20. Now let’s rerun the same query, with a very small change; we will include the value 19 in the list.

EXPLAIN query No 6 (results visible in Figure 8):

Explain query no.6

EXPLAIN SELECT student_id FROM student_master WHERE student_id IN (1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20)

Do the results surprise you? The type now indicates index, and rows indicates 100. Huh?!

Now, for a bigger “Huh”, let’s understand the meaning of the value index in type. Here’s another quote from the MySQL Reference Manual:

This join type is the same as ALL, except that only the index tree is scanned. This usually is faster than ALL because the index file is normally smaller than the data file. MySQL can use this join type when the query uses only columns that are part of a single index.

Now here is the description for type ALL:

A full-table scan is done for each combination of rows from the previous tables. This is normally not good if the table is the first one not marked const, and usually very bad in all other cases. Normally, you can avoid ALL by adding indexes that enable row retrieval from the table based on constant values, or column values from earlier tables.

Certainly this is bad news. The entire index tree is scanned in this case, and that too only with a small change — the inclusion of the numeric value 19 in the list. We will discuss the answer to this situation later; however, there is a great lesson here. There are endless instances where the IN clause is used believing this is a safe practice. As we just saw, this isn’t the case.

One thing worth noticing here is the value of the possible_keys and key columns. The possible_keys column indicates the index(es) that MySQL estimates it can use — but this is before the execution of the query. The key column indicates the actual index(es) used in executing the query. The value of possible_keys in this case is PRIMARY, and key is LoginValidationIndex (this is the index we created on the column email_address). There is no obvious relationship between the IN clause used with WHERE, and LoginValidationIndex. To understand this, let’s read the following excerpt (once again, from the MySQL Reference Manual):

The key column indicates the key (index) that MySQL actually decided to use. If MySQL decides to use one of the possible_keys indexes to look up rows, that index is listed as the key value. It is possible that key will name an index that is not present in the possible_keys value. This can happen if none of the possible_keys are suitable for looking up rows, but all the columns selected by the query are columns of some other index. This implies that the named index covers the selected columns, so although it is not used to determine which rows to retrieve, an index scan is more efficient than a data row scan. For InnoDB, a secondary index might cover the selected columns even if the query also selects the primary key, because InnoDB stores the primary key value with each secondary index. If key is NULL, MySQL found no index to use for executing the query more efficiently. To force MySQL to use, or ignore, an index listed in the possible_keys column, use FORCE INDEX, USE INDEX, or IGNORE INDEX in your query.

Right; this answers at least half the question. Since LoginValidationIndex is a secondary index, and the table in question uses InnoDB, the value of the primary key is also stored with LoginValidationIndex. This means that if need be, MySQL can use LoginValidationIndex to retrieve rows against values related to the primary key. However, the question is why the type has changed from range to index — which is causing a full index tree scan.

Let’s try and force MySQL to use the PRIMARY index only; let’s issue the following EXPLAIN query No 7, and view the result (Figure 9):

Explain query no.7

EXPLAIN EXTENDED SELECT student_id FROM student_master FORCE INDEX (PRIMARY) WHERE student_id IN (1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20)

Bad news! This is getting more complex. Now MySQL is using only the PRIMARY index, yet scanning the entire index tree!

For now, let’s take a break and continue the discussion in the next article in this series. I suggest that all you MySQL enthusiasts try and find an answer to the question of why the type has changed from range to index. In the next part of this series, we will discuss more complicated scenarios.

This article is very good . Optimising the DB can be learnt by reading this .