Today, businesses across the world are creating information faster than ever, but the growth rate is not uniform across industry segments. In some verticals growth is sedate, whereas others, such as financial services and health care, face an information explosion. In this complex environment, IT infrastructure managers are challenged to provide the right amount of infrastructure to help organisations leverage information and manage their data centres optimally. And all this needs to be achieved in the most economical way.

On the one hand, there is the non-linear growth of data, regulatory compliance and the decreasing cost of storage; on the other, there is the impact of the industry slowdown. Together, these have increased the pressure to do more with less. To keep up with these dynamics, IT managers must cut IT budgets while running the risk of not addressing business needs effectively, from both the resourcing and the infrastructure perspectives.

Failing to address business needs adequately exposes business-critical data to risks such as data loss and theft, and exposes business applications to downtime.

This is a pain point for the industry. It can be addressed by designing a ‘tiered infrastructure’ model, a sub-set of Information Life Cycle Management (ILM) for data centres that aims to bring a systematic approach to deploying servers, storage and business applications.

What is ‘tiering’?

A tiered infrastructure is a systematic approach to providing the right amount of horsepower to handle business needs in the most optimal way. This is done by leveraging the existing infrastructure to ensure optimal utilisation of the equipment, ensuring the right Quality of Service (QoS), and using the infrastructure and its tools to load-balance resources, thereby providing high availability.

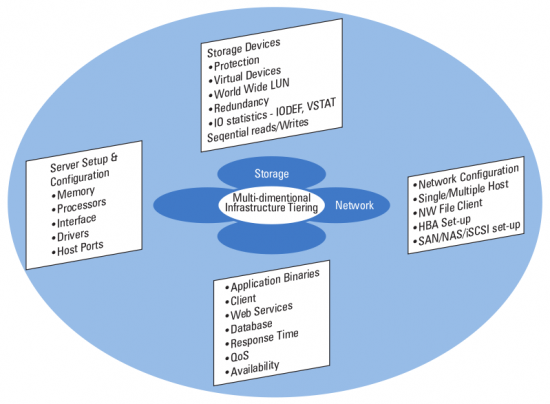

As shown in Figure 1, a ‘tiering’ exercise involves a holistic view of all business applications as well as the data centre inventory. This enables IT managers to formulate the right strategy by creating tiered buckets and fitting the applications, servers and storage into them appropriately. The fit is based on their functional and non-functional characteristics, and the approach has proved to be an effective means of reducing the overall cost while ensuring higher grades of service.

Why is tiering important?

Tiering optimises the data-centre infrastructure so that it handles business data requirements in the most effective manner. It does so by considering the functional and non-functional attributes of the different infrastructure components and using them to create tiers in the IT infrastructure.

The following components form the building blocks of a tiered infrastructure model for organisations:

- Classification of data

- Storage, server and network tiering

- Data heat map

One of the key considerations of a tiering model is that service should be provided in tiers (varying levels of service) that are fit for purpose, not necessarily best of breed. Various commercially available Storage Resource Management (SRM) applications may be used to move the data to the appropriate tier of storage, as required.
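The idea of choosing a tier that fits the purpose, rather than the best one available, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Tier` fields, the tier names and every latency, availability and cost figure below are invented, and a real SRM product would apply its own policies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    max_latency_ms: float     # worst-case latency the tier is assumed to deliver
    availability_pct: float   # availability the tier is assumed to deliver
    cost_per_gb: float        # illustrative monthly cost, arbitrary currency


# Hypothetical tier catalogue; all figures are invented for illustration.
TIERS = [
    Tier("tier1-high-performance", 5.0, 99.99, 1.00),
    Tier("tier2-midrange", 20.0, 99.9, 0.40),
    Tier("tier3-archive", 500.0, 99.0, 0.05),
]


def cheapest_fitting_tier(required_latency_ms, required_availability_pct):
    """Return the lowest-cost tier that still meets both requirements."""
    candidates = [
        t for t in TIERS
        if t.max_latency_ms <= required_latency_ms
        and t.availability_pct >= required_availability_pct
    ]
    if not candidates:
        raise ValueError("no tier satisfies the stated requirements")
    return min(candidates, key=lambda t: t.cost_per_gb)


# Live production data with strict needs versus data destined for the archive.
print(cheapest_fitting_tier(10.0, 99.95).name)
print(cheapest_fitting_tier(1000.0, 99.0).name)
```

The selection deliberately returns the cheapest tier that qualifies, so data with relaxed requirements never lands on expensive equipment simply because that equipment exists.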

How does tiering benefit data-centre managers?

Tiering enables IT managers to understand the requirements of the application data in a holistic way. For example, live production data from a financial services application needs greater bandwidth on a high-performance network with a high Quality of Service (QoS), and back-end storage that provides equivalent performance levels when the data is de-staged. Data that needs to be archived, by contrast, can have a lower priority and lower performance and availability requirements.

Apart from cost savings, organisations that use tier-based archiving benefit from:

- Greatly reduced turnaround times in the back-up and restoration of their application data

- Application data that is optimised for storage

- Guidance on the cost to each application, based on the storage tiers it uses

- Service levels for a given application that reflect the environment in which it is running

- Heterogeneous storage and server management that increases agility, improves quality of service and optimises application performance
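The cost guidance mentioned in the list above can be illustrated with a small calculation. The sketch below is hypothetical: the tier names and the per-gigabyte prices (held as whole cents to avoid floating-point rounding) are assumptions made for the example, not figures from any vendor.

```python
# Hypothetical monthly cost per GB for each storage tier, in cents.
COST_CENTS_PER_GB = {"tier1": 100, "tier2": 40, "tier3": 5}


def monthly_storage_cost_cents(footprint_gb):
    """Sum the cost of an application's data across the tiers it occupies.

    footprint_gb maps a tier name to the whole gigabytes held on that tier.
    """
    return sum(COST_CENTS_PER_GB[tier] * gb for tier, gb in footprint_gb.items())


# The same 1,000 GB application, first untiered and then spread across tiers.
all_on_tier1 = monthly_storage_cost_cents({"tier1": 1000})
tiered = monthly_storage_cost_cents({"tier1": 200, "tier2": 300, "tier3": 500})
print(all_on_tier1, tiered)
```

Comparing the two totals gives an application owner a simple view of what each placement decision costs.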

On the flip side, there have been several instances where businesses have invested in a tiered storage model, but have failed to reap the benefits and have actually ended up dealing with much larger chunks of unstructured data. This highlights the need for investing in a detailed design and implementation approach to tiering, including a thorough benchmarking of the infrastructure.

How to implement tiering?

The fundamental reason for classifying business data in a tiered infrastructure model is to address the following industry issues:

- Data classification as one of the important attributes of a comprehensive IT data centre strategy

- The need to reduce IT costs and improve effectiveness

- A means to maximise a business’ current infrastructure investments and support its Information Life Cycle Management efforts

- The need to create a data heat map, which covers data life cycle management and details the different phases of data creation, storage, retrieval and archival. The data heat map should also show how the importance of data to the business changes across these stages.
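As a rough illustration of the data heat map in the last point, the Python sketch below records a made-up importance score for each data set at each life-cycle phase and lists the data sets that have cooled enough to be considered for a lower tier. The data set names, the 0 to 3 scale and the threshold are all assumptions made for the example.

```python
PHASES = ["creation", "storage", "retrieval", "archival"]

# Hypothetical importance scores per phase (0 = cold, 3 = hot).
HEAT = {
    "sales reports": [3, 3, 2, 1],
    "e-mail": [2, 1, 1, 0],
}

SYMBOLS = " .oO"  # character used to draw each score, indexed by the score


def render_heat_map(heat):
    """Draw one text row per data set, one symbol per life-cycle phase."""
    rows = []
    for name, scores in heat.items():
        cells = " ".join(SYMBOLS[score] for score in scores)
        rows.append(f"{name:<14}{cells}")
    return "\n".join(rows)


def candidates_for_lower_tier(heat, phase, threshold=1):
    """List data sets whose importance in the given phase is at or below threshold."""
    index = PHASES.index(phase)
    return sorted(name for name, scores in heat.items() if scores[index] <= threshold)


print(render_heat_map(HEAT))
print(candidates_for_lower_tier(HEAT, "retrieval"))
```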

The process of implementing tiered infrastructure involves understanding the data requirements of each application in a holistic way, relative to the performance requirements of the other applications. One of the main tasks of a tiering initiative is to classify the data created according to attributes such as its age, business value, criticality and usage.
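A classification along these four attributes might look like the following Python sketch. The rules and thresholds (the 1 to 5 scales, the 90-day cut-off, one read per day) are purely illustrative assumptions; each organisation would set its own.

```python
from dataclasses import dataclass


@dataclass
class DataSet:
    name: str
    age_days: int
    business_value: int   # 1 (low) to 5 (high), assumed to be set by the data owner
    criticality: int      # 1 (low) to 5 (high)
    reads_per_day: float  # observed usage


def classify(ds):
    """Assign a storage tier using simple, hypothetical threshold rules."""
    # Critical or high-value data goes to the top tier regardless of age.
    if ds.criticality >= 4 or ds.business_value >= 4:
        return "tier1"
    # Recent data that is still being read stays on mid-range storage.
    if ds.age_days <= 90 and ds.reads_per_day >= 1:
        return "tier2"
    # Everything else is old or rarely used, so it is archived.
    return "tier3"


for ds in [
    DataSet("clinical research", 400, 5, 5, 20.0),
    DataSet("recent BI extract", 30, 2, 2, 5.0),
    DataSet("old e-mail", 2000, 1, 2, 0.01),
]:
    print(ds.name, "->", classify(ds))
```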

The value of the information created in businesses changes with time. This does not mean that older information stops being useful. Regulatory compliance laws such as the Sarbanes-Oxley Act (SOX), along with other corporate best practices, have made it necessary to archive old data from organisational e-mails, sales data, and so on, for a specified period of time. Though this is a low-priority segment of data with a low frequency of usage, it nevertheless needs to be handled in a specified manner, with the right archival policies.

Business-critical data requires a high priority and an equally robust mechanism to ensure the reliability and availability of such data. This type of data includes sales reporting data, IP, clinical research data, and so on, which demand high priority in terms of data back-up, restoration, etc.

Every organisation creates different types of data to support its business. There is primary data, which is critical to the business, whereas the other forms are purely for business support. Some of the secondary and tertiary types of data are created through tools such as BI applications for business analysis. Hence, it is important to categorise the information generated based on the usage of the information for different applications within the organisation.

http://sourceforge.net/projects/ohsm/